Have you ever wished you could download files, cook dinner, and write an email all at once? While literal multitasking might just get you burnt toast and garbled sentences, computers have well-established ways of handling multiple tasks at the same time, or at least seemingly at the same time. This concurrency vs parallelism article explains how those feats of digital efficiency work. But what exactly separates these two terms, concurrency and parallelism, and how do they affect the way your computer works?

Today, we are going to explore concurrency and parallelism, explaining their meanings, strengths, and quirks, and see how they play out in everyday scenarios.

Concurrency vs Parallelism: What Is Concurrency?

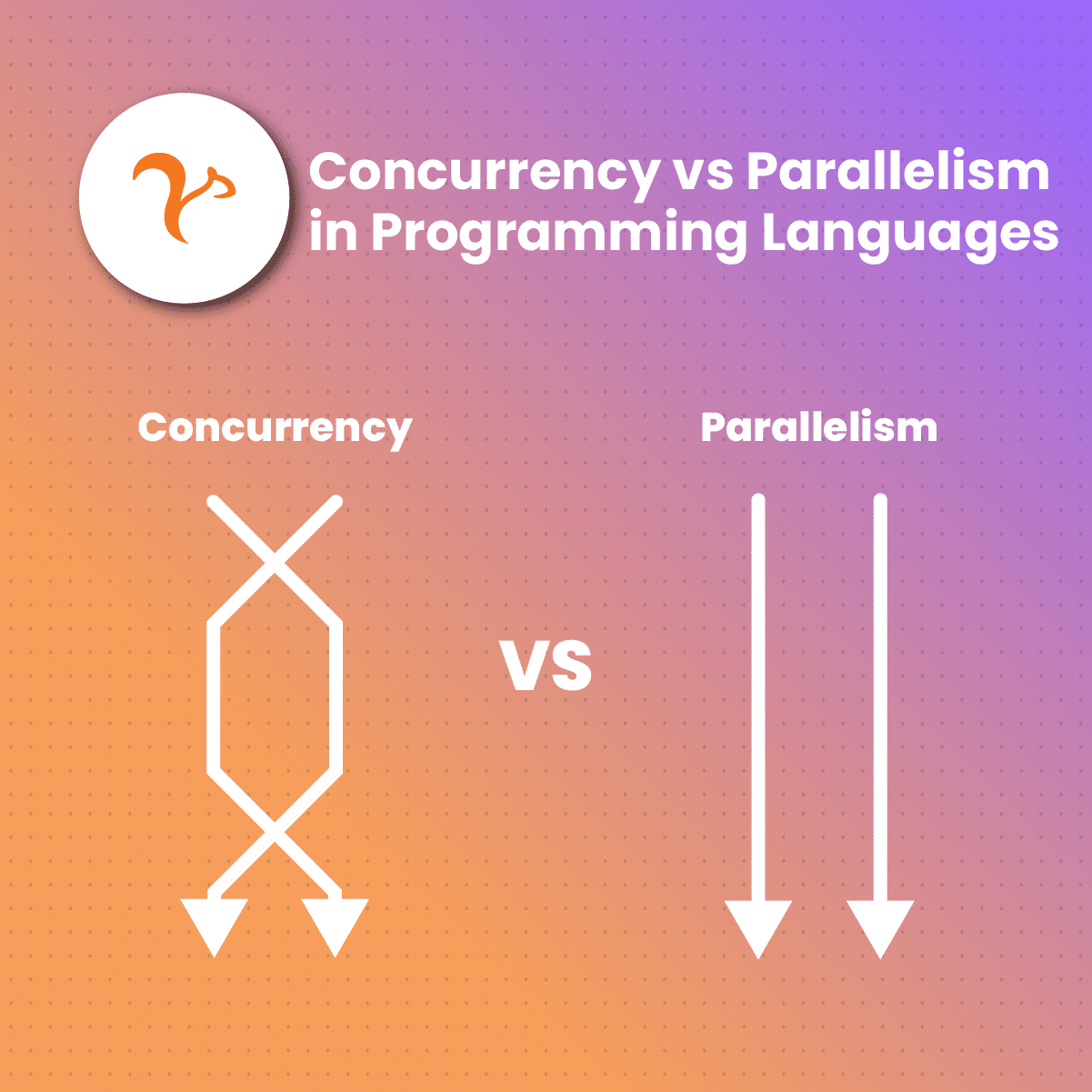

Formally, concurrency refers to the ability of a program or system to manage multiple tasks during overlapping time periods. The tasks do not have to run at the exact same instant. The system switches between them so that each one keeps making progress. Imagine yourself downloading files, streaming music, and chatting with friends, with everything unfolding smoothly and without noticeable delays. In the concurrency vs parallelism debate, this juggling act of making progress on several tasks seemingly in parallel is the essence of concurrency.
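To make the idea concrete, here is a minimal Python sketch using the standard-library `asyncio` module. The task names and delays are hypothetical, and `asyncio.sleep` stands in for real I/O waits such as a download or a network reply. All three tasks run on a single thread, so nothing executes at the same instant. They still make progress in overlapping time periods, which is exactly what concurrency means.

```python
import asyncio
import time

async def task(name: str, delay: float, log: list) -> None:
    # 'await asyncio.sleep' simulates waiting on I/O and hands control
    # back to the event loop so another task can run in the meantime.
    log.append(f"{name} started")
    await asyncio.sleep(delay)
    log.append(f"{name} finished")

async def main() -> tuple[list, float]:
    log: list = []
    start = time.perf_counter()
    # All three tasks share ONE thread; the event loop interleaves them
    # while each one is waiting.
    await asyncio.gather(
        task("download", 0.3, log),
        task("music", 0.2, log),
        task("chat", 0.1, log),
    )
    return log, time.perf_counter() - start

if __name__ == "__main__":
    log, elapsed = asyncio.run(main())
    print(log)
    print(f"elapsed: {elapsed:.2f}s")  # roughly 0.3s, not the 0.6s sum of delays
```

Because the waits overlap, the total time is close to the longest single wait rather than the sum of all three.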

Let’s look at two techniques behind concurrency, multiprocessing and threading, in the following sections of this concurrency vs parallelism discussion.

Concurrency vs Parallelism: How To Achieve Concurrency Through Multiprocessing

Multiprocessing is one technique for achieving concurrency and maximizing performance in applications involving heavy computations, data processing, and parallel algorithms. It uses multiple processes, which the operating system can run on multiple processors or cores, to handle different tasks concurrently.

How Multiprocessing Works

For further understanding of this concurrency vs parallelism concept, here is how multiprocessing works behind the scenes.

Process Formation

Your program splits its work into independent units called processes, each with its own memory space and resources.

Independent Execution

Each process can then execute its assigned task concurrently, without waiting for the others to finish. On a machine with several cores, these processes can even run in parallel, at the same instant.

Communication and Synchronization

While independent, processes often need to communicate and synchronize. Imagine chefs needing to pass ingredients between them or ensuring dishes are served in the correct order. Because processes do not share memory by default, tools like pipes, queues, and locks let them exchange data and coordinate without chaos.
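The three steps above (process formation, independent execution, communication) can be sketched with Python’s standard-library `multiprocessing.Pool`. This is a minimal illustration, not a tuned implementation: `math.factorial` is an arbitrary stand-in for a CPU-heavy task, and the worker count of 4 is an assumption you would adjust to your machine.

```python
import math
from multiprocessing import Pool

def parallel_factorials(numbers: list[int], workers: int = 4) -> list[int]:
    # Pool starts `workers` separate OS processes; each has its own memory space.
    with Pool(processes=workers) as pool:
        # map() hands each input to a worker process and collects the results
        # in input order; arguments and results are pickled and passed between
        # processes through the pool's internal queues.
        return pool.map(math.factorial, numbers)

# The __main__ guard matters when worker processes are started by re-importing
# this module (the "spawn" start method, the default on Windows and macOS).
if __name__ == "__main__":
    print(parallel_factorials([5, 10, 15]))  # [120, 3628800, 1307674368000]
```

Using a standard-library function as the task keeps the sketch short; a real program would usually pass its own top-level function.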

Concurrency vs Parallelism: How To Achieve Concurrency Through Threading

As we progress further in this concurrency vs parallelism article, imagine a computer grappling with multiple tasks by splitting its brain and doing everything at once. That is not quite what happens. Instead, it uses a strategy called threading: the operating system rapidly switches between threads so that requests are handled concurrently. On a single core, this creates the illusion of simultaneous execution. On a multi-core machine, threads can also run truly in parallel.

How Threading Works

Thread Creation

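A process is an instance of a running program, with its own memory space and resources. Within a process, multiple threads can be created. Each thread represents an independent flow of control within the process, and the thread is the unit the operating system actually schedules on a CPU.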

Shared Resources

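Threads in the same process share its memory, so they can read and write the same variables and data structures. They also share file descriptors, allowing them to work with the same files or sockets, as well as other process-wide resources such as open database connections.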

Concurrency and Scheduling

The operating system’s thread scheduler allocates CPU time to each thread, choosing which thread runs next based on priority and its scheduling algorithm. As a result, threads within a process run concurrently, allowing multiple tasks to make progress at once. This enhances overall system responsiveness and, for work that spends time waiting on I/O, performance.

Thread Communication and Coordination

Threads often need to coordinate their activities to avoid conflicts. Synchronization mechanisms such as locks, mutexes, and semaphores manage access to shared resources and prevent data corruption. Threads can communicate through shared variables or through higher-level mechanisms like message passing and queues.
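Here is a small Python sketch of both communication styles from the paragraph above, using only the standard `threading` and `queue` modules. The worker logic (summing squares) is a hypothetical placeholder. Work items are passed through a thread-safe `queue.Queue`, while results go into a shared variable protected by a `Lock`. In standard CPython builds, threads do not execute Python bytecode in parallel, but the lock is still required because `total += ...` is not an atomic operation.

```python
import queue
import threading

def run_workers(items: list[int], num_workers: int = 3) -> int:
    tasks: queue.Queue = queue.Queue()  # message passing between threads
    total = 0                           # shared variable, visible to every thread
    total_lock = threading.Lock()       # guards every update to `total`

    def worker() -> None:
        nonlocal total
        while True:
            item = tasks.get()
            if item is None:            # sentinel value: no more work
                break
            with total_lock:            # only one thread updates `total` at a time
                total += item * item

    threads = [threading.Thread(target=worker) for _ in range(num_workers)]
    for t in threads:
        t.start()
    for item in items:
        tasks.put(item)
    for _ in threads:
        tasks.put(None)                 # one sentinel per worker
    for t in threads:
        t.join()
    return total

if __name__ == "__main__":
    print(run_workers([1, 2, 3]))  # 14
```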

Concurrency vs Parallelism: Benefits Of Concurrency

In continuing the concurrency vs parallelism discussion, let’s look at some of the benefits of concurrency. What makes concurrency so special?

It Improves Responsiveness

Concurrency reduces sluggishness on your computer by letting multiple tasks make progress at the same time, keeping the system responsive even when those tasks are not literally running at the same moment. While one process crunches numbers, another can handle user input, so you don’t feel like a cog in a slow-moving machine. If your concurrent tasks involve web traffic that needs a stable connection, a static residential proxy can provide a consistent IP address.

It Allows Efficient Resource Utilization

In the concurrency vs parallelism context, concurrency puts idle CPU cores to work by distributing tasks among multiple threads or processes. It also makes sure valuable resources like CPU, memory, and network bandwidth aren’t left gathering dust while one task waits. This efficient resource utilization translates to smoother performance and faster completion times for your tasks. If you also need to change your IP address while these tasks run, for example during web scraping, you can use a rotating residential proxy.

It Enables Scalability and Adaptability

As your workload grows, a concurrent design can rise to the challenge. Because the work is already split into independent tasks, it can scale by adding more processing power through multi-core processors or distributed computing systems. This means your system can adapt to increasing demands without bogging down.

It Creates a User-Friendly Interactivity

Concurrency makes multitasking feel like magic. It allows you to work on a document while downloading files and chatting online. By keeping various processes running in the background, you can seamlessly switch between tasks without frustrating delays.

It Improves Fault Tolerance and Resilience

Without isolation, a single task failure can bring your entire system to its knees. Concurrency, especially when tasks run in separate processes, acts as a safety net against such crashes: if one isolated task encounters an error, it doesn’t cripple the entire system. Other tasks can continue running uninterrupted, minimizing downtime and maximizing stability.

But this is just the beginning of our journey into exploring concurrency vs parallelism. Let’s examine some challenges facing concurrency.

Concurrency vs Parallelism: Challenges Of Concurrency

In this study of concurrency vs parallelism, concurrency comes with its own set of challenges. Let’s discuss some of them below.

It Requires Careful Synchronization

In concurrent programs, multiple threads or processes access and modify shared resources like data structures. Without proper synchronization, like locks and semaphores, these threads can collide, leading to data corruption, unexpected behavior, and ultimately, program crashes. Mastering synchronization is crucial for keeping your digital juggling act smooth and error-free.

There is a Chance of Race Conditions

Race conditions occur when the outcome of a program depends on the unpredictable timing of concurrent operations. A classic example is a bank account: if two threads each check the balance, see enough money, and then withdraw, both withdrawals can succeed even though the account only covered one of them. Race conditions can be notoriously difficult to debug and eliminate, requiring careful analysis and thorough testing.
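The bank account scenario can be sketched in Python as follows. The `BankAccount` class and `withdraw_twice` helper are hypothetical names for this illustration. The fix shown is the standard one: make the check ("is there enough money?") and the update a single atomic step by holding a `threading.Lock` around both.

```python
import threading

class BankAccount:
    def __init__(self, balance: int) -> None:
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount: int) -> bool:
        # Check and update under one lock. Without it, two threads could both
        # see balance=100, both approve an 80 withdrawal, and overdraw the account.
        with self._lock:
            if self.balance >= amount:
                self.balance -= amount
                return True
            return False

def withdraw_twice(account: BankAccount, amount: int) -> list[bool]:
    """Run two concurrent withdrawals and report which ones succeeded."""
    results: list[bool] = []
    results_lock = threading.Lock()

    def attempt() -> None:
        ok = account.withdraw(amount)
        with results_lock:
            results.append(ok)

    threads = [threading.Thread(target=attempt) for _ in range(2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results

if __name__ == "__main__":
    acct = BankAccount(100)
    print(sorted(withdraw_twice(acct, 80)), acct.balance)  # [False, True] 20
```

Whichever thread gets the lock first wins; the other sees the reduced balance and is refused.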

There are Deadlock Challenges

In concurrency, a deadlock occurs when two or more threads are blocked, each waiting for a resource held by another. This creates a vicious cycle where no thread can progress, effectively bringing your program to a standstill. Avoiding deadlock requires careful planning and resource management, such as always acquiring locks in the same order.
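A common way to prevent the circular wait described above is lock ordering. In this minimal Python sketch, the `Account` class and transfer functions are hypothetical. Two threads move money between the same two accounts in opposite directions. Because every transfer locks the account with the smaller id first, neither thread can end up holding one lock while waiting forever for the other.

```python
import threading

class Account:
    """Hypothetical account used only for this illustration."""
    def __init__(self, account_id: int, balance: int) -> None:
        self.account_id = account_id
        self.balance = balance
        self.lock = threading.Lock()

def transfer(src: Account, dst: Account, amount: int) -> None:
    if src is dst:
        return  # nothing to do, and locking the same Lock twice would hang
    # Always lock the account with the smaller id first. If one thread locked
    # src-then-dst while another locked dst-then-src, each could hold one lock
    # and wait forever for the other: a deadlock.
    first, second = sorted((src, dst), key=lambda acct: acct.account_id)
    with first.lock, second.lock:
        src.balance -= amount
        dst.balance += amount

def repeat_transfers(src: Account, dst: Account, rounds: int) -> None:
    for _ in range(rounds):
        transfer(src, dst, 1)

def run_opposing_transfers(a: Account, b: Account, rounds: int = 10_000) -> None:
    # Two threads move money in opposite directions at the same time.
    t1 = threading.Thread(target=repeat_transfers, args=(a, b, rounds))
    t2 = threading.Thread(target=repeat_transfers, args=(b, a, rounds))
    for t in (t1, t2):
        t.start()
    for t in (t1, t2):
        t.join()

if __name__ == "__main__":
    a, b = Account(1, 500), Account(2, 500)
    run_opposing_transfers(a, b)
    print(a.balance, b.balance)  # 500 500
```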

There Are Livelock Challenges

Ever been stuck in a conversation where people keep interrupting each other, never making real progress? In concurrency, livelock is a situation where threads are constantly busy interacting with each other but never actually completing their tasks. This can happen when threads compete for a shared resource but keep relinquishing it before reaching completion. Dealing with livelock requires identifying the resource bottleneck and either reducing contention or implementing appropriate coordination mechanisms.
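One widely used way to reduce livelock is randomized backoff. The Python sketch below is a hypothetical scenario, not a prescribed design: two threads need the same two locks but request them in opposite orders. Instead of blocking, each thread grabs one lock, tries the other without waiting, and politely releases the first lock if it fails. If both retried on an identical schedule they could keep colliding; a small random pause breaks the symmetry so one of them eventually gets both locks.

```python
import random
import threading
import time

def acquire_both(first, second) -> int:
    """Acquire two locks, backing off instead of blocking; return the retry count."""
    retries = 0
    while True:
        first.acquire()
        # Try the second lock without waiting for it.
        if second.acquire(blocking=False):
            return retries  # the caller now holds both locks
        # Could not get it: release the first lock so the other thread can progress.
        first.release()
        retries += 1
        # Random jitter so the two threads stop retrying in lockstep.
        time.sleep(random.uniform(0, 0.001))

def worker(first, second, finished: list) -> None:
    retries = acquire_both(first, second)
    try:
        finished.append(retries)  # placeholder for real work on both resources
    finally:
        second.release()
        first.release()

def run_demo() -> list:
    lock_a, lock_b = threading.Lock(), threading.Lock()
    finished: list = []
    # The two threads request the same locks in opposite orders.
    threads = [
        threading.Thread(target=worker, args=(lock_a, lock_b, finished)),
        threading.Thread(target=worker, args=(lock_b, lock_a, finished)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return finished

if __name__ == "__main__":
    print(run_demo())  # two retry counts; the values vary from run to run
```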

Debugging Headaches Can Occur

Debugging concurrent programs is especially challenging. Because thread timing changes from run to run, a bug may appear only occasionally, and traditional debugging techniques often fall short when multiple threads are involved, making it difficult to pinpoint the exact source of errors. Special tools and techniques are needed to navigate the complex interactions of concurrent systems and track down bugs.

Concurrency vs Parallelism: Applications of Concurrency

In this study of concurrency vs parallelism, let’s look at how concurrency is applied in various domains to enhance efficiency and responsiveness. Here are some of its applications.

It is Used in Web Servers

Concurrency allows web servers to handle multiple user requests simultaneously, ensuring quick responses and efficient use of resources.

It is Used in Database Systems

In database management, concurrency control ensures that multiple transactions can be processed concurrently without conflicting with each other, thereby maintaining data integrity.

It is Used for Multithreading in Programming

Concurrency is vital in programming, especially with multithreading, where different parts of a program can execute concurrently, improving performance and responsiveness.

It is Applicable in Parallel Computing

Concurrency is important in parallel computing, where tasks are divided into smaller subtasks that can be executed concurrently on multiple processors, thereby speeding up computations.

It is Used in Networking

Concurrency is used in networking protocols to handle multiple connections concurrently, ensuring efficient data transfer and responsiveness in communication systems.

It is Essential in Operating Systems

Concurrency is essential in operating systems for multitasking, allowing multiple processes or applications to run concurrently on a computer.

It is Used in Graphics Processing

Concurrency is utilized in graphics processing units (GPUs) to handle parallel processing tasks, enabling faster rendering of complex graphics and simulations.

It is Used In Real-time Systems

Concurrency is essential in real-time systems where tasks need to be executed within strict time constraints, ensuring timely responses to events.

Concurrency vs Parallelism: What is Parallelism?

In this concurrency vs parallelism debate, let’s look closely at the concept of parallelism.

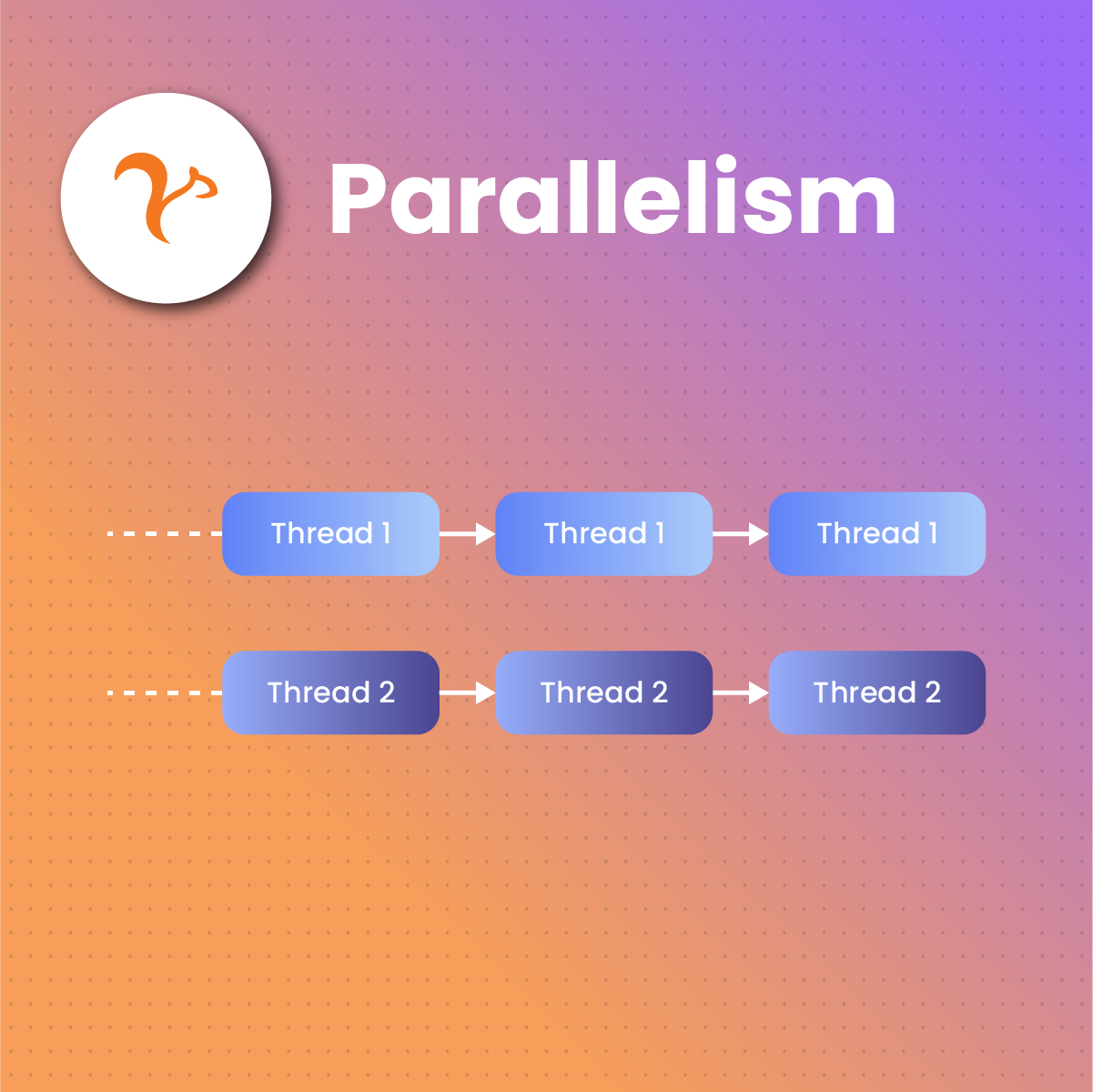

Parallelism refers to the simultaneous execution of multiple tasks or processes to achieve improved performance and efficiency. In computing, this often involves breaking down a larger task into smaller subtasks that can be executed concurrently. These subtasks are processed simultaneously, typically by multiple processors or cores, allowing for faster completion of the overall task.

Parallelism can be employed at various levels, including instruction-level parallelism within a single processor, task-level parallelism across multiple processors or cores, and data-level parallelism, where the same operation is applied to different parts of the data at the same time.

Concurrency Vs Parallelism: How Parallelism Requires Multiple Processing Units

As already established in this concurrency vs parallelism discussion, parallelism involves the simultaneous execution of multiple tasks to enhance overall processing speed. This efficiency is particularly notable when employing multiple processing units, such as multiple CPU cores.

Each CPU core in a system can independently execute its set of instructions. When tasks are divided into smaller sub-tasks, multiple cores can process these sub-tasks concurrently. This parallel processing significantly reduces the time required to complete the overall workload compared to a single-core system.
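The divide-and-process idea above can be sketched in Python with `concurrent.futures.ProcessPoolExecutor`. This is a minimal sketch under simple assumptions: the "workload" is just summing a range of integers, and the default worker count comes from `os.cpu_count()`. Separate processes are used rather than threads because, in standard CPython builds, threads do not execute Python code on several cores at once.

```python
import os
from concurrent.futures import ProcessPoolExecutor
from typing import Optional

def parallel_sum(n: int, workers: Optional[int] = None) -> int:
    """Sum 0..n-1 by splitting the range into one chunk per worker process."""
    if n <= 0:
        return 0
    workers = workers or os.cpu_count() or 1
    step = -(-n // workers)  # ceiling division, so the chunks cover every number
    chunks = [range(start, min(start + step, n)) for start in range(0, n, step)]
    with ProcessPoolExecutor(max_workers=workers) as pool:
        # The built-in sum() is the per-chunk task; each chunk runs in its own
        # process, and the partial sums are combined in the parent.
        return sum(pool.map(sum, chunks))

if __name__ == "__main__":
    print(parallel_sum(10_000_000))  # 49999995000000
```

For a job this small, process start-up can outweigh the gain; the point is the shape of the split-compute-combine pattern.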

The coordination of tasks across multiple cores requires synchronization mechanisms to ensure proper execution. Parallelism leverages the capabilities of these multiple processing units to achieve faster computation, making it a valuable strategy for improving performance in tasks that can be divided into different parallelized components.

Next stop in our discussion of concurrency vs parallelism: the benefits of parallelism.

Concurrency Vs Parallelism: Benefits of Parallelism

Remember the last time you opened multiple browser tabs at once? While each tab seemed to load separately, your computer was juggling their demands concurrently. But when it comes to concurrency vs parallelism, parallelism takes this multitasking to a whole new level, allowing multiple processing units to crunch through tasks simultaneously.

Let’s explore its most impressive benefits.

It Delivers Supercharged Speeds

In the context of concurrency vs parallelism, the core strength of parallelism lies in its ability to execute tasks simultaneously. This translates to significantly faster processing times, especially for computationally intensive tasks like video editing, scientific simulations, and large-scale data analysis, because the work is spread across several processing units instead of waiting its turn on one.

It Unlocks Responsiveness

Imagine opening multiple tabs only to be greeted by unresponsive loading bars. Serial processing can bottleneck responsiveness, especially when juggling demanding tasks. Parallelism helps here because heavy work can run on separate cores while user interactions stay fluid and responsive. You can search the web, edit photos, and stream music without significant lag or delays, and network-heavy work such as web scraping can additionally be paired with a mobile proxy.

It Scales for the Future

When handling workloads such as web scraping, parallelism allows you to add more processing cores to your system, scaling up power and performance to tackle complex challenges. In many concurrency vs parallelism debates, scalability is the feature that places parallelism above concurrency.
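Scaling by adding cores has a well-known limit worth quantifying. Amdahl’s law, a standard result in parallel computing (not something specific to this article), gives the theoretical speedup $S$ on $N$ cores when a fraction $p$ of the work can run in parallel and the rest must run serially:

```latex
S(N) = \frac{1}{(1 - p) + \dfrac{p}{N}}

% Worked example: p = 0.9 (90% parallelizable), N = 8 cores
S(8) = \frac{1}{0.1 + \dfrac{0.9}{8}} = \frac{1}{0.2125} \approx 4.7

% Upper bound as the number of cores grows without limit
\lim_{N \to \infty} S(N) = \frac{1}{1 - p} = \frac{1}{0.1} = 10
```

So even with unlimited cores, a job that is 10% serial can never run more than 10 times faster, which is why reducing the serial part matters as much as adding hardware.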

Concurrency Vs Parallelism: Challenges of Parallelism

In this concurrency vs parallelism comparison, we also discuss some of the challenges of parallelism.

Data Dependency

Not all tasks are created equal. Some rely on the output of others before they can even begin. These dependencies act like traffic lights, forcing parallel processes to wait patiently until it’s their turn to proceed. Identifying and managing these dependencies efficiently is crucial to avoid bottlenecks and maximize parallel processing gains. In the context of concurrency vs parallelism, minimizing and carefully managing task dependencies is an essential aspect of overall performance optimization.
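The "traffic light" effect can be seen in a small Python sketch built on `concurrent.futures`. The two-stage pipeline is hypothetical: stage 1 squares each value (independent subtasks that can run at once), and stage 2 sums the squares, so it cannot start until every stage-1 result is available. The built-ins `pow` and `sum` serve as the tasks to keep the example short.

```python
from concurrent.futures import ProcessPoolExecutor

def sum_of_squares(values: list[int]) -> int:
    with ProcessPoolExecutor(max_workers=4) as pool:
        # Stage 1: squaring each value is independent, so all subtasks can run at once.
        square_futures = [pool.submit(pow, v, 2) for v in values]
        # Stage 2 depends on every stage-1 result. Calling .result() blocks until
        # each square is ready: this is where the dependent work has to wait.
        squares = [future.result() for future in square_futures]
        return pool.submit(sum, squares).result()

if __name__ == "__main__":
    print(sum_of_squares([1, 2, 3]))  # 14
```

The longer the dependent stages and the more of them there are, the less of the job can run in parallel.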

Overhead

Coordinating and synchronizing multiple tasks has a cost. Every message exchanged and every shared resource accessed adds a layer of overhead that can eat into the performance gains of parallelism. Finding the right balance between parallelization and overhead is key to maintaining efficiency. In this concurrency vs parallelism discussion, achieving this equilibrium gives you the best performance while keeping computational cost low.

Debugging

Tracking down bugs in parallel programs can be like hunting for a needle in a haystack. The unpredictable nature of concurrent execution makes it challenging to pinpoint the exact cause of an error. Specialized debugging tools and techniques are essential to navigate this labyrinth of potential issues.

Memory Management

Sharing data between parallel processes raises concerns about memory management. Ensuring data integrity and preventing corruption in a multi-threaded environment requires careful synchronization and robust memory allocation strategies.

Race Conditions and Deadlocks

Race conditions occur when competing processes access and modify shared data in unpredictable ways, potentially leading to errors and crashes. Deadlocks occur when processes get stuck waiting for resources held by each other, creating a stalemate that brings everything to a halt. Dealing with these concurrency challenges requires careful planning and sophisticated tools.

Concurrency Vs Parallelism: Applications of Parallelism

In the study of concurrency vs parallelism, parallelism finds applications in various domains, enhancing efficiency and performance. Its versatility contributes to better outcomes in several fields.

It Is Used In Computing And Processing

In the concurrency vs parallelism context, parallelism is widely used in high-performance computing, where tasks are divided among multiple processors to execute simultaneously, accelerating complex calculations and data processing.

It Is Applicable In Data Analysis And Big Data

Parallel processing is vital in handling large datasets and performing parallel analytics. It allows for faster extraction of insights and trends from massive amounts of data.
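A common pattern for parallel analytics on large datasets is map-reduce. Here is a minimal Python sketch of it with `multiprocessing.Pool`, assuming a tiny hypothetical list of documents in place of a real dataset. In the "map" step, each worker process counts words in one document; in the "reduce" step, the parent merges the partial counts. For brevity, the text is split into words up front so that the built-in `collections.Counter` can serve directly as the worker task.

```python
from collections import Counter
from multiprocessing import Pool

def parallel_word_count(documents: list[str], workers: int = 4) -> Counter:
    # Pre-split each document so the built-in Counter can be the per-worker task.
    tokenized = [doc.lower().split() for doc in documents]
    # "Map" step: each worker process counts the words of one document.
    with Pool(processes=workers) as pool:
        partial_counts = pool.map(Counter, tokenized)
    # "Reduce" step: merge the per-document counts in the parent process.
    total: Counter = Counter()
    for counts in partial_counts:
        total.update(counts)
    return total

if __name__ == "__main__":
    docs = ["the cat sat", "The dog sat", "a cat"]
    print(parallel_word_count(docs).most_common(3))
```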

It Is Used in Graphics Processing

Parallelism is essential in graphics processing units (GPUs) for rendering images and videos. GPUs leverage parallel architectures to handle multiple tasks concurrently, enhancing graphical performance in applications like gaming and video editing.

It Is Employed in Scientific Simulations

In the context of concurrency vs parallelism, parallel computing is employed in scientific simulations, enabling researchers to model complex phenomena and conduct simulations more quickly by distributing computations across multiple processors.

It is Used in Machine Learning and AI

In the concurrency vs parallelism context, machine learning training and inference both benefit from parallelism. Parallel processing accelerates model training and improves the efficiency of making predictions in real-time applications.

It is Used in Web Servers and Networking

Parallelism is employed in web servers to handle multiple simultaneous requests from users, improving the responsiveness of websites. It is also used in networking for efficient data transmission.

It is Used in Parallel Algorithms

Parallelism is integral to the design of parallel algorithms, which are tailored to execute tasks concurrently. This is particularly useful in scenarios where breaking down a problem into smaller subproblems can lead to faster solutions.
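As an example of breaking a problem into smaller subproblems, here is a minimal Python sketch of a parallel sort. It is a simple divide-and-conquer scheme chosen for illustration, not a tuned algorithm. The input is split into chunks, each chunk is sorted in its own process with the built-in `sorted`, and the sorted chunks are combined with `heapq.merge`. The worker count of 4 is an assumption.

```python
import heapq
from multiprocessing import Pool

def parallel_sort(data: list[int], workers: int = 4) -> list[int]:
    if not data:
        return []
    # Divide: split the input into roughly equal chunks, one per worker.
    size = -(-len(data) // workers)  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    # Conquer: sort each chunk in its own process with the built-in sorted().
    with Pool(processes=workers) as pool:
        sorted_chunks = pool.map(sorted, chunks)
    # Combine: heapq.merge merges the already-sorted chunks in one pass.
    return list(heapq.merge(*sorted_chunks))

if __name__ == "__main__":
    print(parallel_sort([9, 4, 7, 1, 8, 2, 6, 3, 5, 0]))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
```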

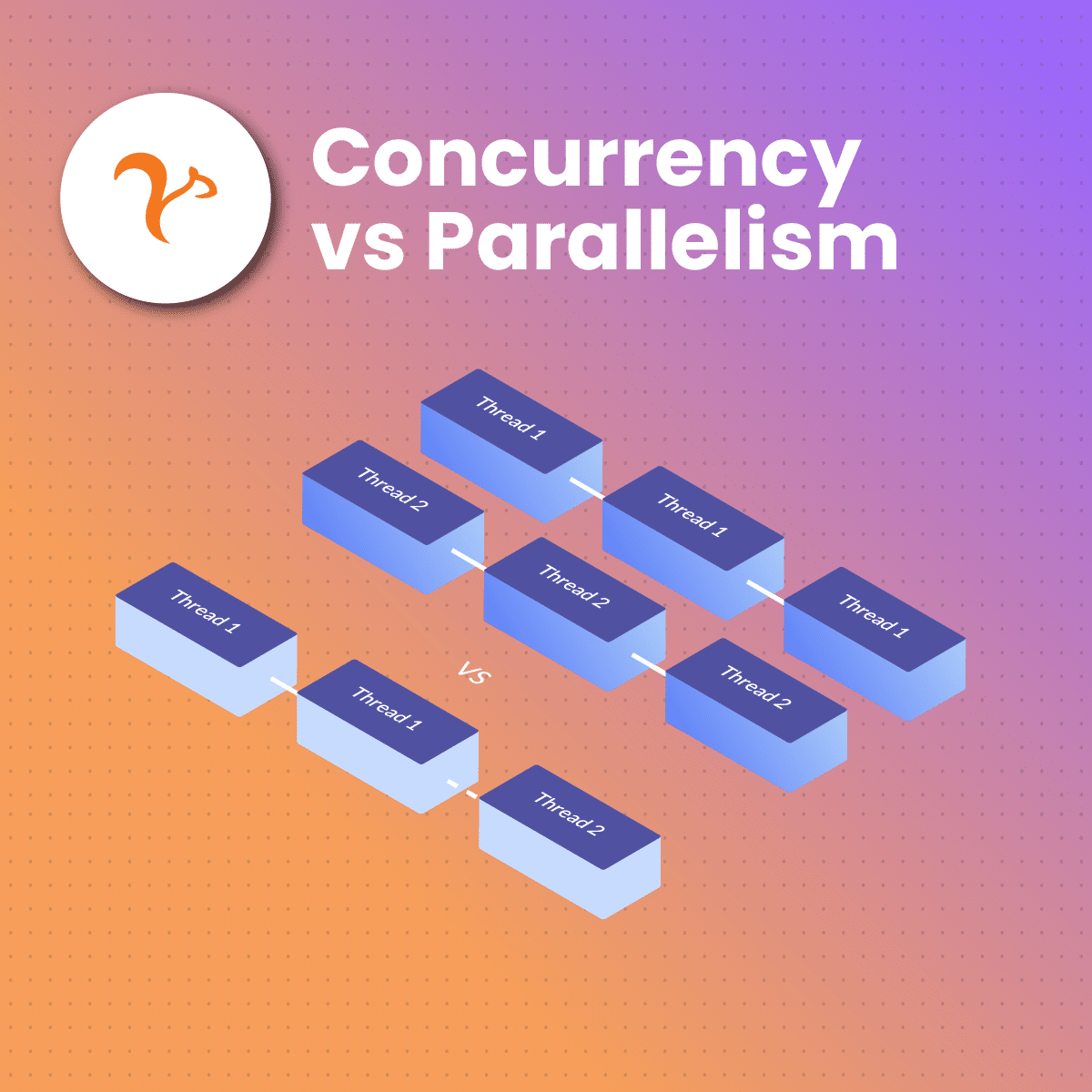

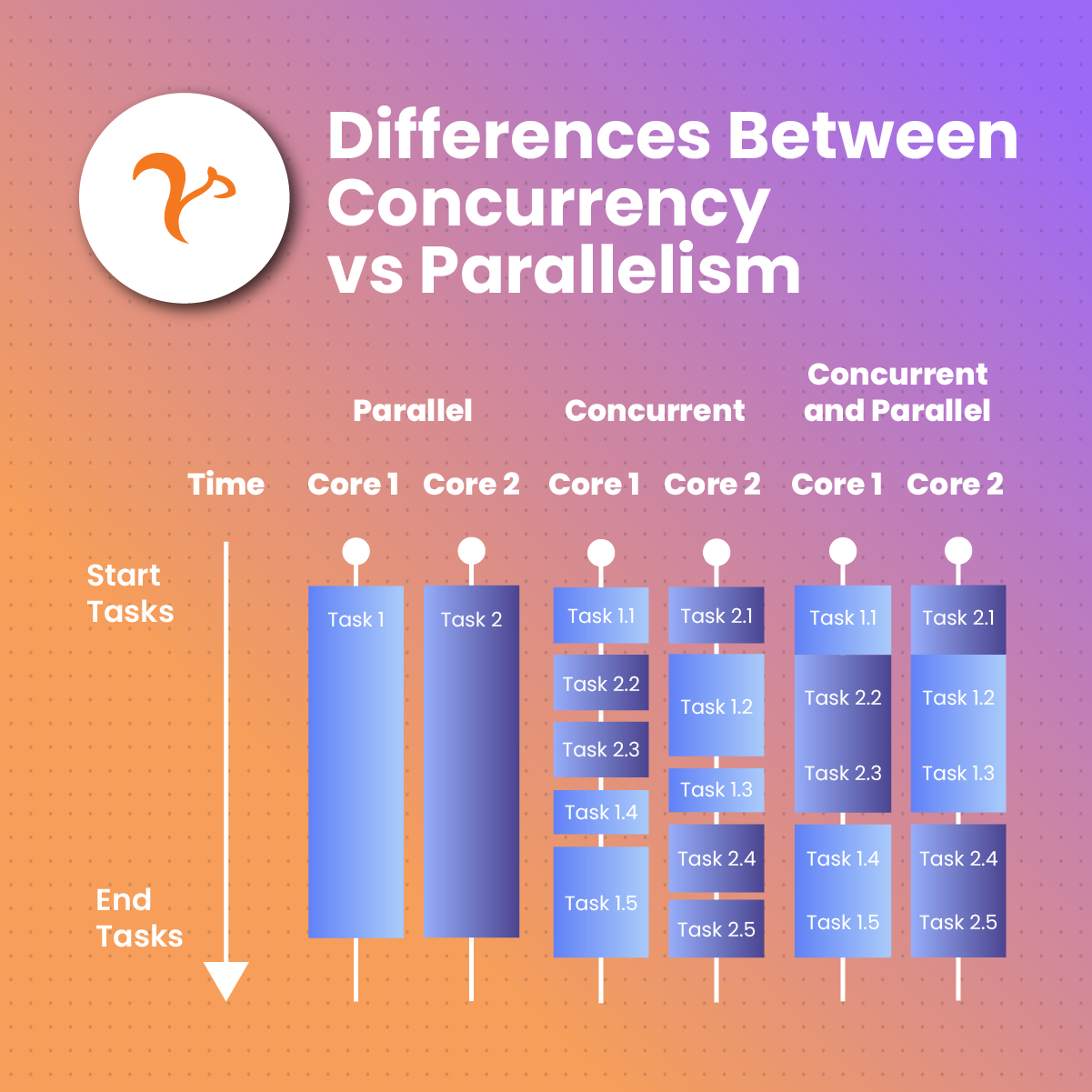

Concurrency Vs Parallelism: Key Differences Between Concurrency And Parallelism

To understand the concurrency vs parallelism concept, you need to know their key differences.

| Aspect | Concurrency | Parallelism |
|---|---|---|
| Definition | Concurrency involves managing multiple tasks that make progress during overlapping time periods, but not necessarily at the same instant. | In parallelism, tasks are executed simultaneously, using multiple processors or cores. |
| Execution | Tasks can be interleaved or executed in overlapping time intervals. | A task is often broken down into smaller subtasks, and these subtasks are performed simultaneously. |
| Use Cases | Often used when tasks involve waiting for external events, such as I/O operations. | Suitable for computationally intensive tasks. |
| Resource Requirement | Can be achieved on a single processor core. | Requires hardware with multiple processing units, such as a multi-core CPU or a GPU. |
| Complexity | May involve less coordination, since tasks only need to take turns. | Coordinating tasks in parallel can be more complex due to the need for synchronization and load balancing. |

Concurrency Vs Parallelism: Factors To Consider When Choosing

From what you have learned so far about concurrency vs parallelism, you might be wondering which approach to choose for a given workload. Here are some considerations:

Nature of Tasks

Concurrency

Well-suited for tasks with I/O operations or tasks where responsiveness is crucial. It allows progress on different tasks without waiting for each to complete.

Parallelism

Ideal for computationally intensive tasks that can be broken down into independent subtasks, suitable for simultaneous execution on multiple processors.
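To illustrate the concurrency side of this choice, here is a minimal Python sketch of I/O-bound work handled with a thread pool. `fake_download` and the example URLs are hypothetical stand-ins, and `time.sleep` simulates network waiting. While one thread waits, the others proceed, so five 0.2-second "downloads" finish in roughly 0.2 seconds instead of 1 second. For CPU-bound work you would typically swap in `ProcessPoolExecutor`, which exposes the same `Executor` interface.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def fake_download(url: str) -> str:
    # Hypothetical stand-in for a network call: time.sleep simulates waiting on I/O.
    time.sleep(0.2)
    return f"contents of {url}"

def download_all(urls: list[str]) -> tuple[list[str], float]:
    start = time.perf_counter()
    # One thread per URL; results come back in the same order as the input.
    with ThreadPoolExecutor(max_workers=len(urls) or 1) as pool:
        pages = list(pool.map(fake_download, urls))
    return pages, time.perf_counter() - start

if __name__ == "__main__":
    pages, elapsed = download_all([f"https://example.com/{i}" for i in range(5)])
    print(len(pages), f"{elapsed:.2f}s")
```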

Resource Utilization

Concurrency

Tends to be more efficient in utilizing resources when tasks involve waiting periods, as the system can switch between tasks during idle times.

Parallelism

Requires careful consideration of resource allocation and load balancing to ensure all processors are effectively utilized, especially when subtasks have uneven workloads.

Programming Complexity

Concurrency

Can involve less complex programming, since the focus is on coordinating and managing tasks. It is often a more natural fit for tasks that depend on one another or on external events.

Parallelism

It requires explicit consideration of data sharing, synchronization, and load balancing, making programming more complex. Dependencies between parallel tasks must be carefully managed.

Performance Requirements

Concurrency

It emphasizes responsiveness and improved user experience. It is well-suited for applications where waiting times should be minimized.

Parallelism

It provides significant performance gains for computationally intensive tasks. It is also suitable for scenarios where maximizing throughput and reducing overall execution time are critical.

Concurrency Vs Parallelism: FAQ

What is the Fundamental Difference Between Concurrency vs Parallelism?

Concurrency involves managing multiple tasks so that each makes progress, without necessarily executing them simultaneously. Parallelism, on the other hand, specifically refers to the simultaneous execution of multiple tasks to enhance overall performance. Parallelism can deliver bigger speedups for heavy computation, but the right choice in the concurrency vs parallelism comparison depends on the task to be executed.

Can a System Exhibit Concurrency vs Parallelism Simultaneously?

Yes, a system can use concurrency and parallelism at the same time. Concurrency is often achieved at a higher level of abstraction, structuring and managing multiple tasks logically, while parallelism operates at a lower level, executing those tasks simultaneously on multiple processors or cores. This combined approach is common in optimizing system performance.

Are There Specific Scenarios Where Concurrency is More Suitable Than Parallelism or Vice Versa?

Yes, the concept of concurrency vs parallelism highlights that there are scenarios where one is more appropriate than the other. Concurrency is often preferred for tasks involving I/O operations or user interfaces, while parallelism excels in computationally intensive tasks such as scientific simulations or data processing. This distinction in a concurrency vs parallelism debate reflects the tailored applicability of each concept and how they fit in specific scenarios.

Concurrency Vs Parallelism: Conclusion

We’ve concluded our article on concurrency vs parallelism, where we’ve carefully explored their distinct approaches to handling tasks, examined their unique strengths and challenges, and aimed to provide you with a clearer understanding of these powerful tools.

If your concurrent workload involves web scraping, a proxy can help you distribute many simultaneous requests across different IP addresses. That’s where NetNut comes in. NetNut offers cutting-edge customized proxy services that can improve your web scraping and multitasking processes.