Introduction

Concurrency is a core concept in computing that describes the ability of an operating system to run multiple processes or computations at the same time. This concept is crucial because it underpins many systems, including multi-core processors and web servers.

This guide will cover everything you need to know about concurrency and proxy servers. Let us dive in!

What is Concurrency?

In the context of proxy servers, concurrency is the number of requests you can send through the proxies at the same time. More generally, it is a computer programming concept that allows multiple tasks to run independently of one another, without interference that may affect their execution and results. Concurrency also matters in cyber security, because controlling how concurrent tasks access and alter shared information helps protect it.

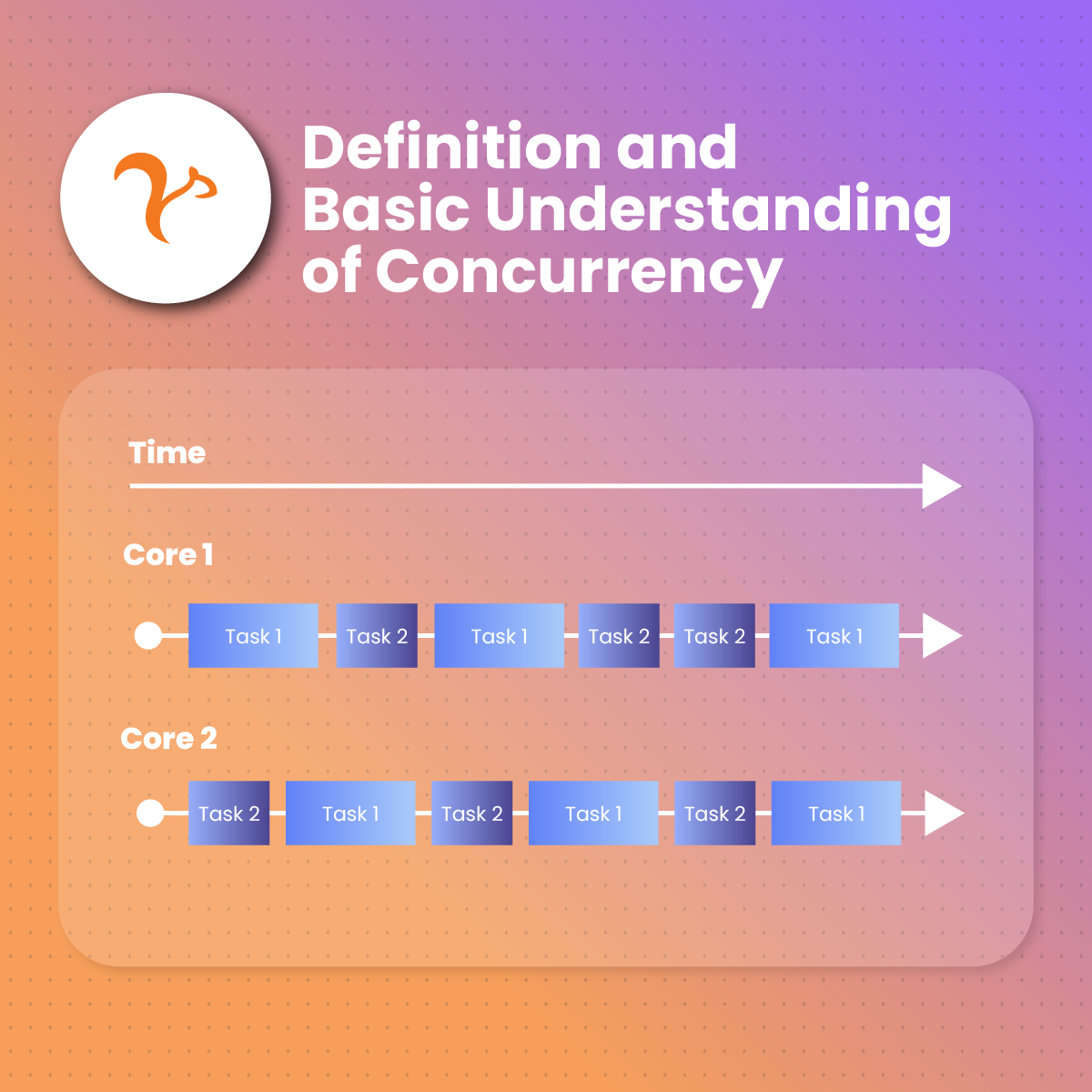

Concurrency works by dividing tasks into smaller sections and running them at the same time, on different processors where they are available. This increases performance and, when functions are split into separate tasks, can make programs and applications easier to write and maintain. As a result, multiple tasks can make progress together without interference that may reduce their performance. This improves the efficiency of the program, because resources are not left idle waiting for one task to finish before the next one starts.

Understanding the motivation for integrating concurrency into applications and systems provides a better understanding of the concept.

Here are some of the main reasons concurrency matters:

Physical resource sharing

Concurrency allows physical resources to be shared in a multi-user environment with limited hardware. Giving each user fair access to those resources improves hardware utilization and overall efficiency. This is a significant motivation wherever multiple users interact with shared hardware resources, including storage, CPU, and memory, at the same time.

Logical resource sharing

Concurrency allows for logical resource sharing, especially when multiple people need access to the same information. In environments where collaboration and consistency are critical among various processes, concurrency saves the day.

It allows multiple processes to interact with shared data at the same time for a seamless collaborative experience. When that access is properly coordinated, data stays consistent, and logical resources can be accessed, shared, and updated without conflicts.

Computation speedup

Another motivation for adopting concurrency is the demand for computational efficiency. Concurrency lets a system break a task into smaller components and execute them in parallel, which increases computation speed. The reduced processing time is critical for applications where quick computation matters, such as data processing.
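As a rough illustration of breaking a task into smaller components, the sketch below splits a sum over 1..n into chunks and hands each chunk to a worker thread. The class name `ParallelSum` and the method `sumUpTo` are hypothetical names chosen for this example, and whether the chunks truly run in parallel depends on how many cores the machine has.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical example class, written for illustration only.
final class ParallelSum {

    // Sums the integers 1..n by splitting the range into chunks and
    // summing each chunk on a worker thread from a fixed-size pool.
    static long sumUpTo(int n, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            int chunk = Math.max(1, (n + workers - 1) / workers); // ceiling division
            List<Callable<Long>> tasks = new ArrayList<>();
            for (int start = 1; start <= n; start += chunk) {
                final int lo = start;
                final int hi = Math.min(n, start + chunk - 1);
                tasks.add(() -> {
                    long partial = 0;
                    for (int i = lo; i <= hi; i++) {
                        partial += i;
                    }
                    return partial;
                });
            }
            long total = 0;
            // invokeAll blocks until every chunk has been summed.
            for (Future<Long> result : pool.invokeAll(tasks)) {
                total += result.get();
            }
            return total;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(sumUpTo(1_000_000, 4)); // prints 500000500000
    }
}
```

For a task this small, the cost of creating threads may outweigh the gain; the pattern pays off when each chunk involves substantial work.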

Modularity

Concurrency also supports modular design by dividing system functions into separate processes. Compartmentalizing functionality in this way makes a system easier to organize and manage, which in turn helps it scale.

Relationship between Processes in an Operating System

Processes executing in an operating system fall into two categories, depending on how they relate to one another.

Independent process

As the name suggests, an independent process does not share its state with any other process. Therefore, the result of its execution depends only on its input, and the same input always produces the same result. Terminating an independent process does not terminate any other process running on the operating system.

Cooperating process

A cooperating process shares its state with other processes. Therefore, the result of its execution depends on the order in which the processes run and cannot be predicted in advance; the same input will not always produce the same result. In addition, terminating a cooperating process may directly affect other processes running on the operating system.

Applications of Concurrency

Concurrency has several applications in real life. Many processes depend on this concept for optimal performance. Here are some real-world applications:

Cyber security

Concurrency has a wide range of applications in cyber security. The risk of cyber-attacks increases when multiple devices are connected to networks. Therefore, you can use concurrency to efficiently identify and defend against cyber-attacks. As a result, you get a faster and more effective response, which optimizes data protection and security.

Gaming

Online gaming has become increasingly popular, especially with the global lockdown that forced many individuals to stay home. Thousands of players are logging in to various online games simultaneously from around the world. Therefore, developers have to use game servers that can handle a large number of concurrent connections.

Games, especially online multiplayer games, depend on concurrency to handle interactions between players in real time. In simpler terms, each player’s actions are processed concurrently, which ensures an interactive and seamless gaming experience.

Video streaming services

Video streaming services like Netflix and YouTube rely on concurrency to provide a smooth viewing experience. Different users can watch the same or different videos simultaneously without any interference.

Process operations in an operating system

Most operating systems support at least two types of process operations:

Process creation

A parent process can create child processes, and there are several possible ways to implement this. In terms of resources, the parent and children may share all resources, or the children may share only a subset of the parent’s resources. In terms of execution, the parent may run concurrently with its children, or it may wait until all of its child processes have terminated.

Process termination

Once a process has served its purpose, it is terminated. Usually, a child process terminates before its parent. A parent process can also terminate a child process when the child has exceeded its allocated resources or when the task given to it is no longer needed.

On some operating systems, if a parent process is terminated, all of its children are terminated as well, because they are not allowed to continue without it.

Principles of concurrency

The core of concurrency is that multiple processes are in progress at the same time. Both interleaved processes, which take turns on a single processor, and overlapped processes, which run on separate processors, are forms of concurrent execution. In either case, it is challenging to predict the relative speed of execution.

It depends on the following factors:

Activities of other processes

The speed of execution of a process is directly affected by the other activities running in the system at the same time. This overlap introduces variability, which makes it difficult to predict the exact speed of execution. Concurrent processes therefore cannot assume any particular timing and may need strategies that adapt to these dynamic interactions.

How the operating system handles interrupts

The speed of execution also depends on how the operating system manages interrupts. Interrupts add complexity because they can pause a running process at almost any point, so the efficiency with which they are handled directly affects the responsiveness and speed of concurrent processes.

Scheduling policies of the operating system

The scheduling policies of an operating system play a significant role in determining the execution speed of concurrent processes. These policies, which range from preemptive to non-preemptive approaches, decide which process runs and for how long. Because those decisions are made by the operating system rather than by the processes themselves, it is challenging to determine the specific speed of execution in advance.

Advantages of concurrency

Run multiple applications

One of the primary advantages of concurrency is its ability to run multiple applications simultaneously. This optimizes multitasking and allows users to execute multiple tasks without any interference that can reduce efficiency or output. Since the system does not have to wait for one task to complete before processing the next, it contributes to a more responsive experience.

Improved use of resources

Another advantage is that resources left unused by one application can be used by others. Concurrency therefore improves resource utilization, especially in environments with limited resources, and prevents waste.

Optimizes average response time

Concurrency improves the average response time of processes. Without it, each application must run to completion before the next one starts. With concurrency, the operating system lets all applications share the available resources and make progress together. This shortens the average response time, which gives users a more responsive computing environment.

Better system performance

Lastly, concurrency contributes to better overall system performance. For example, suppose one application uses only the processor and another uses only the disk drive. Running both at the same time takes less time than running them one after the other, because the two resources can work at the same time. In this way, concurrency improves hardware utilization and system performance.

Disadvantages of concurrency

While there are some excellent benefits of concurrency, it has some disadvantages. They include:

Protecting multiple applications at the same time

Concurrent applications must be protected from one another. When various tasks are running on an operating system, the system has to prevent them from interfering with each other. Therefore, strategies must be implemented to prevent data corruption, potential breaches, or unauthorized access to the system.

Coordinate multiple applications

Multiple applications must be coordinated through additional mechanisms. This adds complexity in synchronizing activities, managing resources, and maintaining consistency across applications. The operating system also incurs additional performance overhead when switching between concurrent applications.

Reduced performance

Concurrency involves running multiple applications at the same time. However, running too many applications simultaneously may lead to significantly reduced system performance.

This can happen when the system is overwhelmed by managing a large number of concurrent activities. The result can be delays, reduced efficiency, and an overall degradation of system performance.

Models for Concurrency

Concurrency is an integral part of today’s programming world: multiple computers in a network, multiple processors in a computer, and multiple applications running on one device. Here are two common models for concurrency:

Shared-memory model

In the shared-memory model, concurrent modules interact by reading and writing shared objects in memory. Consider some examples:

- A and B are two processor cores in one computer that share the same physical memory.

- Alternatively, A and B can be two programs running simultaneously on the same computer, and they share a common file system they can read and write.

- A and B can also be two threads in the same Java program that share the same Java objects.
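The last case above, two threads sharing a Java object, might look like the following minimal sketch. `SharedAccount` and `runDemo` are hypothetical names used only for illustration; the `synchronized` keyword is what keeps the two threads from overwriting each other's updates.

```java
// Hypothetical example: two threads in one Java program share one object.
final class SharedAccount {
    private long balance = 0;

    // synchronized makes each deposit an atomic read-modify-write.
    synchronized void deposit(long amount) {
        balance += amount;
    }

    synchronized long balance() {
        return balance;
    }

    // Starts two threads, A and B, that both write to the same account,
    // then returns the balance once both have finished.
    static long runDemo(int depositsPerThread) {
        SharedAccount account = new SharedAccount();
        Runnable work = () -> {
            for (int i = 0; i < depositsPerThread; i++) {
                account.deposit(1);
            }
        };
        Thread a = new Thread(work, "A");
        Thread b = new Thread(work, "B");
        a.start();
        b.start();
        try {
            a.join();
            b.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return account.balance();
    }

    public static void main(String[] args) {
        System.out.println(runDemo(100_000)); // prints 200000
    }
}
```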

Message-passing model

The message-passing model involves interaction between concurrent modules via a communication channel. These modules send messages, and all incoming messages to each module are in a queue awaiting processing. Here are some examples that explain the message-passing model:

- A is an instant messaging client, and B is a server. They send and receive messages from each other.

- A can be a web browser, and B can be a web server. A opens a connection to B and asks for a web page, and B sends the web page data back to A.

- A and B can be two applications running on the same computer where their input and output are connected by a pipe typed into a command prompt.

- A and B can be two computers in a network that are communicating by network connections.
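Message passing can also be sketched inside a single Java program, with two threads that never touch each other's data and communicate only through queues. In this illustrative example, `MessagePassingDemo`, `exchange`, the `echo:` reply format, and the `STOP` sentinel are all assumptions made for the sketch, not part of any real protocol.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical example: a "client" (the calling thread) and a "server"
// thread exchange messages through two queues.
final class MessagePassingDemo {
    private static final String STOP = "__STOP__"; // sentinel that ends the server loop

    static List<String> exchange(List<String> requests) {
        BlockingQueue<String> toServer = new ArrayBlockingQueue<>(16);
        BlockingQueue<String> toClient = new ArrayBlockingQueue<>(16);

        // B: takes each incoming message from its queue and sends back a reply.
        Thread server = new Thread(() -> {
            try {
                while (true) {
                    String message = toServer.take(); // blocks until a message arrives
                    if (message.equals(STOP)) {
                        break;
                    }
                    toClient.put("echo:" + message);
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }, "B");
        server.start();

        // A: sends one request at a time and waits for the matching reply.
        List<String> replies = new ArrayList<>();
        try {
            for (String request : requests) {
                toServer.put(request);
                replies.add(toClient.take());
            }
            toServer.put(STOP);
            server.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return replies;
    }

    public static void main(String[] args) {
        List<String> requests = new ArrayList<>();
        requests.add("hello");
        requests.add("world");
        System.out.println(exchange(requests)); // prints [echo:hello, echo:world]
    }
}
```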

Processes and Threads

There are two basic units of execution in concurrency: processes and threads. A computer operating system usually has multiple active processes and threads. This is true even on systems with a single execution core, which executes only one thread at any given moment. In that case, processing time on the single core is shared among processes and threads through an operating system feature known as time slicing.

In recent times, it has become common for computers to have multiple processors or processors with multiple execution cores. This has enhanced a system’s capacity for concurrent execution of threads and processes. Remember, though, that concurrency is possible even on simple systems that do not have multiple processors or cores.

Processes

A process in concurrency is a self-contained execution environment. It usually has a complete, private set of basic run-time resources. In other words, each process has its own memory space. It can be described as running a program isolated from others on the same machine.

Processes are often seen as synonymous with applications or programs. However, what a user sees as a single application may in fact be a set of cooperating processes. Usually, processes do not share memory with other processes. Instead, operating systems provide Inter-Process Communication (IPC) resources, such as sockets and pipes, to facilitate communication between processes. IPC can also serve as a channel of communication between processes on different systems.

A new process is automatically ready for message passing because it was created with standard input and output streams.

Threads

Threads are components of a process and are often described as lightweight processes. Both processes and threads provide an execution environment. However, creating a new thread is less resource-intensive than creating a new process.

Every process contains at least one thread. Threads share the resources of their process, including memory and open files. This makes communication between threads efficient but potentially troublesome.

A thread is a locus of control inside a running program: think of it as the point in the program that is currently being executed. Just as a process represents a virtual computer, a thread represents a virtual processor. Creating a new thread is therefore like adding a new processor to the virtual computer. The new virtual processor runs the same program and shares the same memory as the other threads in the process.

Since threads share all the memory in the process, they are automatically ready for the shared-memory model. It takes special effort to get thread-local memory, which is private to a single thread. Message passing between threads must also be set up explicitly, by creating and using queue data structures.

When there are more threads than processors, concurrency is simulated by time slicing, which means that the processor switches between threads. Time slicing usually happens non-deterministically and unpredictably, so a thread may be paused or resumed at any time.
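Thread-local memory, mentioned above, is one place where Java offers direct support through its `ThreadLocal` class. The sketch below is only an illustration; `ThreadLocalDemo`, `countTo`, and `run` are hypothetical names. Each thread increments what looks like the same variable, yet each sees only its own copy.

```java
// Hypothetical example: each thread gets a private copy of COUNTER.
final class ThreadLocalDemo {
    // Every thread that reads COUNTER gets its own copy, initialised to 0.
    private static final ThreadLocal<Integer> COUNTER = ThreadLocal.withInitial(() -> 0);

    // Increments the calling thread's copy `times` times and returns it.
    private static int countTo(int times) {
        for (int i = 0; i < times; i++) {
            COUNTER.set(COUNTER.get() + 1);
        }
        return COUNTER.get();
    }

    // Returns {value seen by thread 1, value seen by thread 2, value seen by the caller}.
    static int[] run(int first, int second) {
        int[] seen = new int[3];
        Thread t1 = new Thread(() -> seen[0] = countTo(first));
        Thread t2 = new Thread(() -> seen[1] = countTo(second));
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        seen[2] = COUNTER.get(); // the calling thread never incremented its own copy
        return seen;
    }

    public static void main(String[] args) {
        int[] seen = run(3, 5);
        System.out.println(seen[0] + " " + seen[1] + " " + seen[2]); // prints 3 5 0
    }
}
```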

Thread objects

In Java, each thread is associated with an instance of the class Thread. You can use two basic strategies to create a concurrent application with Thread objects:

- To directly control thread creation and management, instantiate Thread each time the application needs to initiate an asynchronous task.

- To abstract thread management from the rest of your application, pass the application’s tasks to an executor.
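The two strategies above can be compared side by side in a short sketch. The class `ThreadStrategies`, its method names, and the pool size of 4 are assumptions made for the example; the task itself just increments a counter so the result is easy to check.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical example comparing direct Thread use with an executor.
final class ThreadStrategies {

    // Strategy 1: instantiate a Thread for every asynchronous task.
    static int runWithThreads(int taskCount) {
        AtomicInteger completed = new AtomicInteger();
        Thread[] threads = new Thread[taskCount];
        for (int i = 0; i < taskCount; i++) {
            threads[i] = new Thread(() -> completed.incrementAndGet());
            threads[i].start();
        }
        try {
            for (Thread thread : threads) {
                thread.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return completed.get();
    }

    // Strategy 2: hand the tasks to an executor, which owns the threads.
    static int runWithExecutor(int taskCount) {
        AtomicInteger completed = new AtomicInteger();
        ExecutorService pool = Executors.newFixedThreadPool(4); // pool size is an arbitrary choice
        for (int i = 0; i < taskCount; i++) {
            pool.execute(() -> completed.incrementAndGet());
        }
        pool.shutdown(); // accept no new tasks; already submitted tasks still run
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("tasks did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return completed.get();
    }

    public static void main(String[] args) {
        System.out.println(runWithThreads(8));  // prints 8
        System.out.println(runWithExecutor(8)); // prints 8
    }
}
```

With the executor, eight tasks are served by at most four threads, which is usually the better fit when tasks are numerous and short.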

NetNut Unlimited Concurrent sessions

NetNut is an industry-leading proxy server provider. Demand for proxy servers is rising steadily along with the need for privacy and data security. NetNut proxies offer several features, including unlimited concurrency.

Before we explore the importance of unlimited concurrent sessions in proxy servers, let us explore the concept. When you visit a website from your browser, you have established a session for it to receive your request. The website then responds to your request, and your browser displays the result.

With a regular proxy server, every user has a separate session, and the server can only handle a limited number of concurrent sessions. The limit is determined by the hardware resources and the proxy configuration. When the capacity for concurrent sessions is exceeded, the result may be failed requests and a degraded user experience.
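To make the idea of a session cap concrete, here is a minimal sketch of how a limit on concurrent sessions could be enforced with a counting semaphore. It is purely illustrative: `SessionLimiter` and its methods are hypothetical and do not describe how NetNut or any particular proxy is implemented.

```java
import java.util.concurrent.Semaphore;

// Hypothetical sketch of a concurrent-session cap; not a real proxy's code.
final class SessionLimiter {
    private final Semaphore slots;

    SessionLimiter(int maxConcurrentSessions) {
        this.slots = new Semaphore(maxConcurrentSessions);
    }

    // Returns true if a session slot was free; false models a rejected request.
    boolean tryOpenSession() {
        return slots.tryAcquire();
    }

    // Frees the slot so that another session can be opened.
    void closeSession() {
        slots.release();
    }

    public static void main(String[] args) {
        SessionLimiter limiter = new SessionLimiter(2);
        System.out.println(limiter.tryOpenSession()); // prints true
        System.out.println(limiter.tryOpenSession()); // prints true
        System.out.println(limiter.tryOpenSession()); // prints false (capacity exceeded)
        limiter.closeSession();
        System.out.println(limiter.tryOpenSession()); // prints true
    }
}
```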

Since NetNut proxy servers offer unlimited concurrent sessions, there is no limit to the number of concurrent sessions they can handle. Therefore, a proxy server can establish sessions with multiple users at the same time without compromising on user experience due to the high concurrency count.

Why you should choose a proxy server with unlimited concurrency count

An unlimited concurrency count plays a significant role in proxies, especially for businesses that need to send a huge number of requests on a frequent basis. Here are some advantages of unlimited concurrent sessions:

Large-scale data collection

Data has become a cornerstone for businesses that desire to thrive in the digital landscape. Therefore, many organizations need to collect large amounts of data for research, competitor analysis, and consumer sentiment. Using a proxy server with unlimited concurrency counts is useful for faster and more reliable web data extraction.

Supports multitasking

Many businesses require proxy servers that can support multitasking. Therefore, choosing a proxy server provider with unlimited concurrent sessions becomes critical, because tasks can be processed swiftly without running into blocks or limits.

Optimized user experience

An unlimited concurrency count helps ensure you do not encounter connection failures or delays when using proxy servers. As a result, it improves user satisfaction and experience.

Enhanced performance

An unlimited concurrency count allows the proxy server to handle a large number of requests at the same time. Subsequently, more users can connect and use the proxies at the same time without any bottlenecks. This is critical, especially for operations that require high efficiency and speed.

Spread out access frequency

Finally, choosing a proxy server with unlimited concurrent sessions improves access success rates. Sometimes, when you try to access a website, you may be restricted via IP block or frequency limitation. However, using a proxy server with unlimited concurrency counts reduces the chances of IP block. It spreads out the access frequency and routinely changes the IP address to increase the access success rate.

Application of unlimited concurrency counts in proxy servers

Unlimited concurrent sessions have several applications in proxy servers. They include:

Website testing

Website testing is an integral part of the development and optimization of apps and programs. It often requires simulating user visits from various locations to determine user experience. Therefore, unlimited concurrent sessions allow developers to conduct comprehensive website testing, which helps them optimize performance, stability, and usability.

Localized marketing

Many organizations prefer to localize their SEO and marketing efforts. Therefore, using proxies with unlimited concurrency counts allows them to imitate user visits in various regions to optimize their localized marketing techniques.

Web data extraction

Web data extraction often involves collecting data from multiple websites at the same time. Proxies with unlimited concurrency counts make it possible to establish many connections at once, which improves the efficiency and speed of data extraction.

NetNut offers various proxy solutions.

Conclusion

Concurrency describes multiple computations that are running simultaneously. It is characterized by shared memory and message-passing paradigms. Two basic concepts in concurrency are processes and threads. Processes are like virtual computers, while threads are like virtual processors.

An unlimited concurrency count is an integral feature of a proxy service. It gives users a more stable and efficient proxy experience. Organizations that need speed and efficiency when running many tasks at once, or that need to avoid IP restrictions, will benefit from proxy servers that offer unlimited concurrent sessions.

Do you have any questions about choosing the best proxy server for your online needs? Feel free to contact us today!

Frequently Asked Questions

Is concurrency easy to test and debug?

No, concurrent programs are hard to test and debug. Concurrency bugs display poor reproducibility: it is hard to make them happen the same way a second time. The interleaving of instructions or messages depends on the relative timing of events, which is strongly affected by the environment. Delays can be caused by many factors, including variations in processor clock speed, other running programs, network traffic, and operating system scheduling decisions. As a result, every time you run a program containing a race condition, you may get different behavior.

These bugs are known as heisenbugs: they are non-deterministic and hard to reproduce. A heisenbug may even vanish when you try to look at it with println or a debugger! This happens because printing and debugging are much slower than other operations, often 100-1000x slower, so they significantly change the timing and interleaving of operations. However, the bug is not fixed; it is only masked. A change in timing anywhere in the program may cause the bug to suddenly reappear.

What is the difference between concurrency and parallelism?

Concurrency is about dealing with many things at once: two or more tasks are in progress during the same period of time, although they are not necessarily executing at the same instant.

On the other hand, parallelism is about doing many things at once. It involves breaking a task into sub-tasks that are executed at the same instant, for example on multiple CPU cores.

An application can be concurrent but not parallel, meaning it makes progress on more than one task during the same period but never executes two tasks at the same instant.

An application can also be parallel but not concurrent, meaning it works on only one task at a time but splits that task into sub-tasks that run on a multi-core CPU at the same time.

What are some common pitfalls in concurrency?

Overuse of synchronization: Synchronization can be resource-intensive and can reduce performance. Therefore, make sure it is necessary before you apply it.

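Deadlocks: A deadlock occurs when two or more threads each wait for a lock that another one holds, so none of them can proceed. One way to prevent deadlocks is to always acquire multiple locks in the same order in every part of your code.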

Improper synchronization of shared data: A common pitfall is failing to use proper synchronization mechanisms. When you do not synchronize access to shared data, the result can be unexpected behavior and data corruption.
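The deadlock and shared-data pitfalls above can be illustrated together. In the sketch below, two threads transfer money in opposite directions between the same two accounts. The balances are only ever changed while both account locks are held, and the locks are always taken in order of account id, so the two threads cannot end up waiting on each other. `OrderedLocks`, `Account`, and the id-based ordering are assumptions made for this example, and the ordering relies on every account having a distinct id.

```java
// Hypothetical example: consistent lock ordering around shared balances.
final class OrderedLocks {

    static final class Account {
        final int id;   // assumed unique; used only to fix a global lock order
        long balance;

        Account(int id, long balance) {
            this.id = id;
            this.balance = balance;
        }
    }

    // Always locks the account with the smaller id first, whichever
    // direction the money is moving in.
    static void transfer(Account from, Account to, long amount) {
        Account first = from.id < to.id ? from : to;
        Account second = first == from ? to : from;
        synchronized (first) {
            synchronized (second) {
                from.balance -= amount;
                to.balance += amount;
            }
        }
    }

    // Runs opposing transfers on two threads and returns the combined balance.
    static long runDemo(int transfersPerThread) {
        Account a = new Account(1, 1000);
        Account b = new Account(2, 1000);
        Thread t1 = new Thread(() -> {
            for (int i = 0; i < transfersPerThread; i++) {
                transfer(a, b, 1);
            }
        });
        Thread t2 = new Thread(() -> {
            for (int i = 0; i < transfersPerThread; i++) {
                transfer(b, a, 1);
            }
        });
        t1.start();
        t2.start();
        try {
            t1.join();
            t2.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return a.balance + b.balance;
    }

    public static void main(String[] args) {
        System.out.println(runDemo(10_000)); // prints 2000
    }
}
```

If `transfer` instead locked `from` first and `to` second, the two threads could each grab one lock and wait forever for the other.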