Introduction

As technology advances, people are finding easier and more convenient ways to do things, including recruiting talent and finding jobs. Gone are the days when job adverts relied heavily on newspapers, radio, and television to reach their target audience. As a result, the job market has become dynamic, with several platforms that streamline the recruitment and job application processes.

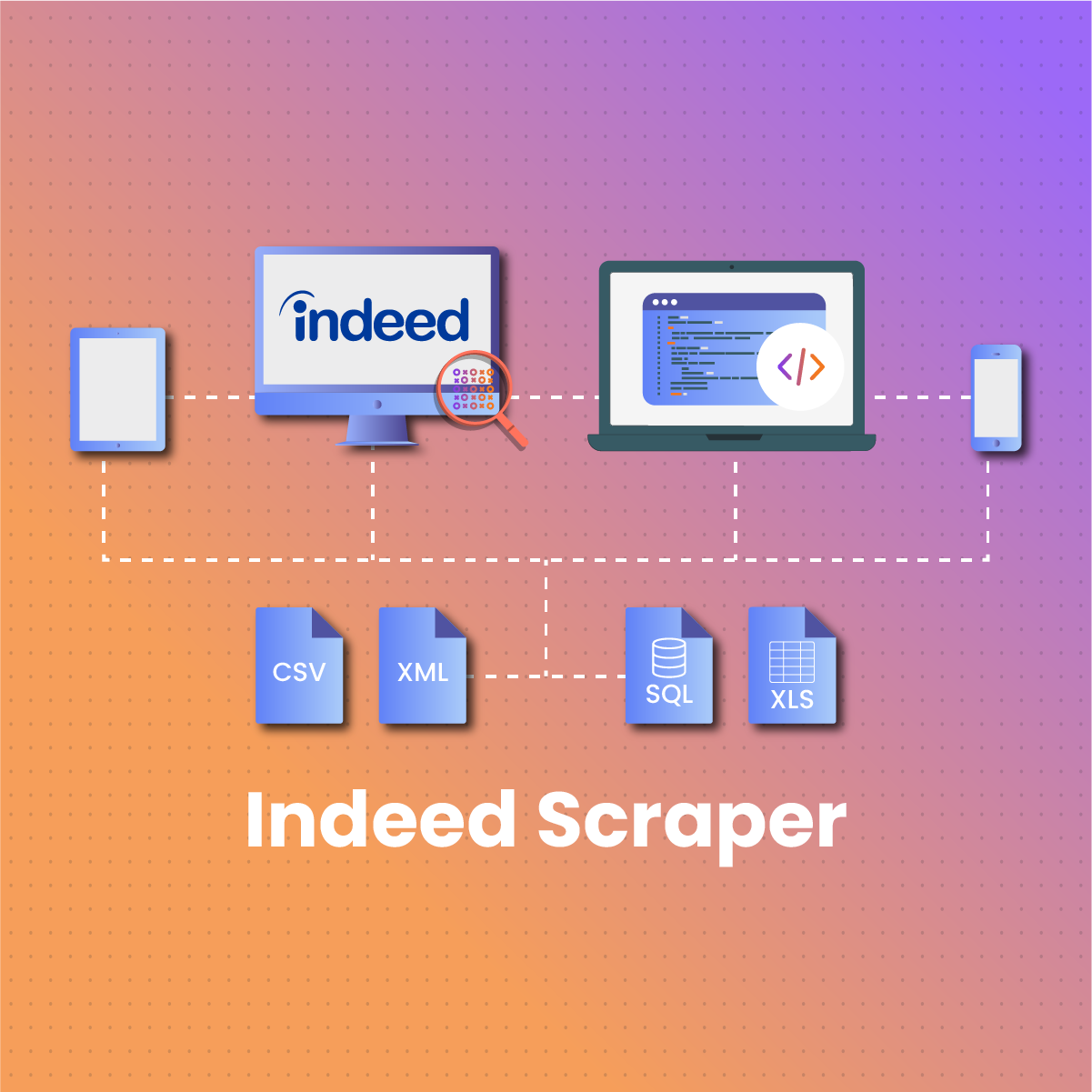

Indeed is a leading platform that allows companies to post their job openings and provides real-time updates on jobs across various locations and industries. Therefore, learning how to scrape Indeed job data is valuable for both recruiters and job seekers. One of the significant advantages of using an Indeed web scraper is that it saves time that would otherwise be spent manually exploring thousands of job listings on the platform.

The significance of learning how to scrape Indeed job data cannot be overemphasized, as it allows you to study past job trends. You can then make predictions about future trends, which gives you an advantage among the sea of talented job seekers.

Therefore, this guide will examine how an Indeed web scraper works.

Indeed Data Scraping

Indeed is a platform where people and organizations from across the world engage in job openings across different sectors. Since jobs are regularly posted on the platform, it draws in millions of users. As a result, it has become a store for large amounts of data.

In other words, Indeed acts as a middleman between hiring managers and job seekers, so it contains a large volume of data that is relevant to both groups. An Indeed web scraper is a practical tool for collecting this data from the platform. Organizations can use the data to gather intelligence on employee sentiment, build a talent pool, and allocate a reasonable budget for hiring workers.

Some of the information you can get with an Indeed web scraper includes:

- Job title

- Location

- Job type: on-site, hybrid, or remote

- Description

- Work hours

- Salary and benefits

- Job requirements

- Company information and reviews

- Job seeker details

What is the Significance of an Indeed Web Scraper?

Once you learn how to scrape Indeed job data, you can enjoy the numerous benefits associated with access to this large volume of information. Some of these benefits include:

Build a talent pool

Many job seekers use Indeed to find opportunities. As a result, recruiters may receive applications for an opening from over 200 candidates within 24 hours. This can quickly become troublesome, as the recruiter needs to go through them before making a decision. Once they have identified the top 5-10 candidates, interviews will be conducted, which may span several weeks.

However, recruiters can use an Indeed web scraper to build a talent pool. When a job vacancy needs to be filled quickly, the recruiter can reach out to candidates from the talent pool. This saves the time and effort that it would normally take to go through hundreds of applications.

Attract high-level talent with a competitive salary

An organization is only as good as the people working together to achieve its goals. Therefore, recruiters often attempt to attract high-level talent. Salary, health benefits, insurance plans, vacations, and bonuses are some of the things that can make an organization's job offer stand out. Recruiters can scrape Indeed to collect data on the benefits offered by other organizations within the same industry. Job offers with similar responsibilities and time commitments provide useful insights into how competitors are compensating employees. Bear in mind that a competitive salary with other benefits can increase the chances of attracting high-level talent.

Employee sentiment

Apart from a competitive salary, you can use an Indeed web scraper to understand employee sentiments. Generally, employees prefer a collaborative and supportive work environment. Therefore, they often look for company reviews to get insight into the experiences of past and current employees.

Company reviews can serve as a yardstick for an outsider to determine the work culture, values, and structure of a place. If your company offers attractive remuneration but has numerous negative reviews, you may find it difficult to hire high-value talent.

Therefore, using an Indeed web scraper is useful to both job seekers and recruiters. Job seekers can use the information to avoid a toxic work environment that would ultimately cause them to resign. On the other hand, organizations can leverage data extracted via an Indeed web scraper to get insights into the perceptions of their past and present employees, as these could have a significant effect on the brand's reputation.

Allocate a reasonable budget

Finding the right person for a job is not always easy, especially since there are numerous people on the platform. Therefore, extracting data via an Indeed web scraper provides insight into the amount of money your company needs to set aside for the whole hiring process.

How To Scrape Indeed Job Data

In this section, we shall examine a step-by-step guide on how to scrape Indeed job data.

Step 1: Creating the environment

Before we dive into scraping Indeed, we need to install some prerequisites. First, download the latest version of Python, install it on your device and follow the installation wizard to set it up.

Next, you need to install your preferred Python IDE (Integrated Development Environment). The most popular options for Python projects are PyCharm and Visual Studio Code. In addition, you should use a virtual environment, which creates an isolated space where you can install libraries and dependencies without affecting your system-wide Python setup.

Next, create a project folder, which you can name indeed-scraper, and set up a virtual environment inside it. To do so, run the commands below in the terminal:

mkdir indeed-scraper

cd indeed-scraper

python -m venv env

After creating the virtual environment, you need to activate it. For Windows devices (PowerShell):

env\Scripts\activate.ps1

On the other hand, if you are using Linux or macOS, you can use this command:

source env/bin/activate

Now, you can create the indeed.py file in the folder with the line below:

print("Hello, World!")

Over the course of this tutorial, this file will contain the lines of code to scrape data from Indeed.

Python scraping libraries

Python is considered one of the best languages for scraping due to its extensive libraries. Some of the popular libraries include Requests, BeautifulSoup, MechanicalSoup, Selenium, Playwright, and others. You need to understand these libraries, their strengths, and their limitations so you can make an informed decision.

When you examine Indeed in your browser, you will discover that it contains dynamic content. Therefore, you need a library that can drive a real browser and run JavaScript, such as Selenium. Selenium renders Indeed in a controllable web browser and performs operations as you command it, which allows you to scrape dynamic websites with Python.

To install Selenium in the activated Python virtual environment:

pip install selenium

Bear in mind that installing Selenium may take a while. The most recent version of Selenium comes with the ability to detect drivers. However, if you have an older version of Selenium, you can update it with this command:

pip install --upgrade selenium

from selenium import webdriver
from selenium.webdriver.chrome.service import Service

# set up a controllable Chrome instance in headless mode
service = Service()
options = webdriver.ChromeOptions()
options.add_argument("--headless")
driver = webdriver.Chrome(service=service, options=options)

# scraping logic…
# here, you would include the code to navigate to web pages, scrape data, etc.

# close the browser and free up the resources
driver.quit()

This code ensures the browser will open in headless mode. In addition, it is critical to tackle any error reported by the Python IDE.

Step 3: Connect to the target page

To do this, go to Indeed and search for jobs that might interest you. For this guide, we shall attempt to scrape hybrid job postings for copywriters in Atlanta. Regardless of the job you are searching for, the logic will be the same; you only need to change the job title and location.

Get the page URL. For example:

https://www.indeed.com/jobs?q=copywriter&l=Atlanta%2C%20GA&from=searchOnHP
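Since only the job title and location change between searches, the URL can also be assembled in code. The sketch below is a minimal example that uses only the Python standard library. The helper name build_search_url is hypothetical, and it assumes the q and l parameters seen in the URL above are enough for a search, so it leaves out the from=searchOnHP parameter.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://www.indeed.com/jobs"


def build_search_url(job_title: str, location: str) -> str:
    """Return an Indeed search URL for a job title and location (hypothetical helper)."""
    # quote_via=quote encodes spaces as %20 instead of +, matching the URL above
    query = urlencode({"q": job_title, "l": location}, quote_via=quote)
    return f"{BASE_URL}?{query}"


url = build_search_url("copywriter", "Atlanta, GA")
print(url)
```

To search for a different role or city, change the two arguments and pass the resulting string to the browser.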

Use Selenium to connect to the target URL:

driver.get("https://www.indeed.com/jobs?q=copywriter&l=Atlanta%2C%20GA&from=searchOnHP")

The get() method tells the browser to visit the target URL. Once the page is opened, choose a window size that gives you an overview of all the elements on the page with:

driver.set_window_size(1920, 1080)

Review the structure of the target page

A critical step before scraping Indeed job data is to get familiar with the structure of the target page. Bear in mind that scraping involves identifying the HTML elements to retrieve data from them. However, finding the target nodes from the DOM structure might be challenging, which is why you need to review and analyze the HTML content.

To inspect the Indeed search page, open it in your browser, right-click an element, and choose the Inspect option to open the developer tools. There you can see the HTML elements along with their classes, IDs, and tags.

Retrieve the job data

When you search for a job on Indeed, several openings are displayed. Therefore, you need to keep track of the jobs you have scraped from a page. You can do this with a list:

jobs = []

Next, we will leverage the find_elements() and find_element() methods from Selenium to find the elements on the target Indeed page.

Both methods take a locator strategy from Selenium's By class. The supported strategies include:

- By.CSS_SELECTOR to use a CSS selector strategy

- By.XPATH to search for elements using an XPath expression

- By.ID to search for an element by the HTML id attribute

- By.TAG_NAME to search for elements by their HTML tag

To use them, we need to import By with this code:

from selenium.webdriver.common.by import By

Next, iterate over the list of job cards and initialize a Python dictionary to store the retrieved details. Here, job_cards is assumed to hold the job card elements returned by find_elements():

for job_card in job_cards:
    # initialize a dictionary to store the scraped job data
    job = {}

    # job data extraction logic…

A job posting has several attributes. However, you may not need to scrape them all. Here is an example of those variables. Each one starts as None and is replaced with the scraped value once the corresponding element is found:

posted_at = None

title = None

job_type = None

applications = None

location = None

company_name = None

company_rating = None

company_reviews = None

pay = None

benefits = None

description = None
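As an alternative to separate variables, the same attributes can be grouped in one record type. The sketch below is one possible arrangement, not the layout this tutorial depends on: the class name JobPosting is hypothetical, the field names mirror the variables above, and the sample values are made up for illustration.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class JobPosting:
    """One scraped job; every field defaults to None until a value is found."""
    posted_at: Optional[str] = None
    title: Optional[str] = None
    job_type: Optional[str] = None
    applications: Optional[str] = None
    location: Optional[str] = None
    company_name: Optional[str] = None
    company_rating: Optional[str] = None
    company_reviews: Optional[str] = None
    pay: Optional[str] = None
    benefits: Optional[str] = None
    description: Optional[str] = None


# Made-up sample values; fields that were not scraped stay None
job = JobPosting(title="Copywriter", location="Atlanta, GA")

# asdict() turns the record into a plain dictionary, ready for a list of jobs
record = asdict(job)
print(record)
```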

Handle anti-scraping measures

Many websites, including Indeed, employ some anti-scraping strategies to prevent bot activities. Therefore, if you don’t account for this interference, your Selenium scraping may not work as intended.

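For example, a popup dialog may appear over the page and block interaction with it. You can use this code to close the dialog if it is present:

from selenium.common.exceptions import NoSuchElementException

try:
    dialog_element = driver.find_element(By.CSS_SELECTOR, "[role=dialog]")
    close_button = dialog_element.find_element(By.CSS_SELECTOR, ".icl-CloseButton")
    close_button.click()
except NoSuchElementException:
    # no dialog was shown, so there is nothing to close
    pass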

Sending too many requests in a short time can cause the website to block your activities quickly. To reduce this risk, you can implement random delays in your code. First, you need to import the necessary Python libraries with this code:

import random

import time

Once the libraries have been imported into your code, you can add the delay, usually from 1-5 seconds.

time.sleep(random.uniform(1, 5))
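The delay can be wrapped in a small helper so that every page visit uses the same pause logic. This is a minimal sketch: the name polite_pause and its parameters are hypothetical, and the sleep argument exists only so the helper can be exercised without actually waiting.

```python
import random
import time


def polite_pause(min_seconds=1.0, max_seconds=5.0, sleep=time.sleep):
    """Sleep for a random duration in [min_seconds, max_seconds] and return it."""
    delay = random.uniform(min_seconds, max_seconds)
    sleep(delay)
    return delay
```

Calling polite_pause() between page loads gives each request a different gap, which looks less mechanical than a fixed interval.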

Extract the job details

To get the company name, use:

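# job_details_element is assumed to be the element that holds the selected job's details
try:
    company_link_element = job_details_element.find_element(By.CSS_SELECTOR, "div[data-company-name='true'] a")
    company_name = company_link_element.text
except NoSuchElementException:
    # the company name is missing, so company_name keeps its default value
    pass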

To extract information regarding the job description:

try:
    description_element = job_details_element.find_element(By.ID, "jobDescriptionText")
    description = description_element.text
except NoSuchElementException:
    # the description is missing, so description keeps its default value
    pass
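Because each field repeats the same try/except pattern, it can be factored into one helper. The sketch below is a possible refactoring, not part of Selenium: text_or_none is a hypothetical name, and the exception type is passed in as an argument so the helper itself does not import Selenium.

```python
from typing import Any, Callable, Optional, Type


def text_or_none(lookup: Callable[[], Any], not_found: Type[Exception]) -> Optional[str]:
    """Call lookup() and return the found element's text, or None if it is missing."""
    try:
        return lookup().text
    except not_found:
        return None


# Intended use with Selenium (assumes By, NoSuchElementException and
# job_details_element are already available, as in the snippets above):
#
# description = text_or_none(
#     lambda: job_details_element.find_element(By.ID, "jobDescriptionText"),
#     NoSuchElementException,
# )
```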

Store data in JSON format

Once you run the scraper, all the data is stored in a list of Python dictionaries. However, you should export it to JSON so it is easier to read and share with others. You don't need to install additional dependencies since the json package is part of the Python Standard Library.

import json

# scraping logic…

# output holds the scraped job data
with open("jobs.json", "w") as file:
    json.dump(output, file, indent=4)
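To confirm that the export worked, the file can be read back and compared with what was written. The sketch below assumes the scraped data is a list of dictionaries; the helper names save_jobs and load_jobs are hypothetical.

```python
import json


def save_jobs(jobs, path):
    """Write a list of job dictionaries to a JSON file."""
    with open(path, "w", encoding="utf-8") as file:
        # ensure_ascii=False keeps accented characters readable in the file
        json.dump(jobs, file, indent=4, ensure_ascii=False)


def load_jobs(path):
    """Read the list of job dictionaries back from a JSON file."""
    with open(path, encoding="utf-8") as file:
        return json.load(file)
```

Note that Python's None is written as null in the JSON file and comes back as None when the file is loaded.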

Ethical Practices for Using the Indeed Web Scraper

Respect the robots.txt file and Terms of Service

One of the ways to ensure ethical use of the Indeed web scraper is by checking the robots.txt file as well as the policy page. This gives you a general overview of how you can use bots to access the page. Failure to comply with Indeed's terms may result in legal action.
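A robots.txt check can be automated with the standard library's urllib.robotparser module. The sketch below parses a made-up rule set so that it runs offline; the rules, the user agent name, and the example.com URLs are hypothetical and do not reflect Indeed's actual robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only; these are NOT Indeed's real rules
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS_TXT, "my-scraper", "https://example.com/jobs"))
print(is_allowed(SAMPLE_ROBOTS_TXT, "my-scraper", "https://example.com/private/page"))
```

Against a live site, the same parser can load the file itself with set_url() followed by read() before can_fetch() is called.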

Limit your requests

Sending too many requests within a short period triggers the anti-scraping measures on Indeed. Therefore, it becomes necessary to add random delays to your Python script, as we have demonstrated above. Websites like Indeed are wary of too many requests from a single IP address as it can cause their server to be overloaded, lag, or experience temporary downtime.

Use a real user-agent string

A crucial ethical practice for using the Indeed web scraper is to always use authentic user agent strings. Indeed's anti-bot measures can identify your scraper's activities through the user agent string. Using an authentic one is good, but for optimal performance, you need to rotate the user agent regularly.
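Rotation can be as simple as cycling through a short list of user agent strings and passing the current one to Chrome as a command-line argument. This is a minimal sketch: the two strings are examples of the usual browser format rather than a maintained list, and the helper names are hypothetical.

```python
from itertools import cycle

# Example strings in the common browser format; replace with current ones
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
]


def user_agent_rotator(agents):
    """Return an endless iterator that yields the user agents in turn."""
    return cycle(agents)


def chrome_user_agent_argument(user_agent: str) -> str:
    """Format a user agent as a Chrome command-line argument."""
    return f"--user-agent={user_agent}"


rotator = user_agent_rotator(USER_AGENTS)
print(chrome_user_agent_argument(next(rotator)))
```

With Selenium, the returned argument would be passed to options.add_argument() before the browser is started, so a new user agent takes effect with each new browser session.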

Handle errors

Before you launch the Indeed web scraper on a large scale, you need to test it. There are several errors that could occur, and you need to resolve them so the scraper will not return incomplete or irrelevant data.
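One common way to handle transient errors, such as a page that fails to load once, is to retry the operation with a growing delay between attempts. The sketch below shows that general pattern rather than anything specific to Indeed; the function name retry and its parameters are hypothetical.

```python
import time


def retry(operation, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call operation() up to `attempts` times, doubling the wait after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return operation()
        except Exception:
            # re-raise the error once the last attempt has failed
            if attempt == attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt)
```

A page load could then be wrapped as retry(lambda: driver.get(url)), assuming driver and url are defined as in the earlier steps.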

Use proxies

One of the most common challenges in learning how to scrape Indeed job data is IP blocks. This can happen for a number of reasons, including sending too many requests from a single IP address, geographic restrictions, or inability to pass the CAPTCHA test. Proxies are intermediaries between your device and Indeed. They mask your IP address while promoting security and anonymity.
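With Chrome, a proxy can be set through the --proxy-server command-line argument. The sketch below only builds that argument and picks a proxy from a pool; the proxy addresses are placeholders on example.com, and the helper names are hypothetical.

```python
import random

# Placeholder addresses; replace with the host:port values from your provider
PROXY_POOL = [
    "proxy1.example.com:8080",
    "proxy2.example.com:8080",
]


def pick_proxy(pool):
    """Choose one proxy address at random from the pool."""
    return random.choice(pool)


def proxy_argument(proxy_address: str, scheme: str = "http") -> str:
    """Format a proxy address as a Chrome command-line argument."""
    return f"--proxy-server={scheme}://{proxy_address}"


print(proxy_argument(pick_proxy(PROXY_POOL)))
```

With Selenium, the returned argument would be passed to options.add_argument() before the browser is created.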

NetNut Proxies For Indeed Web Scraper

The use of proxies can make all the difference when you are learning how to scrape Indeed job data. However, you have to consider factors like speed, IP pool size, cost, CAPTCHA-solving solutions and more when choosing a proxy provider.

NetNut is an industry-leading proxy service provider. With an extensive network of over 85 million rotating residential proxies in 200 countries and over 250,000 mobile IPs in over 100 countries, you can scrape data from any website with ease.

NetNut rotating residential proxies can hide your IP address so the website can only interact with the proxy IP. In addition, rotating your IP makes it look like you are sending requests from different locations, which minimizes the chances of being blocked.

Other obstacles when scraping Indeed job data are geo-restrictions and CAPTCHAs. NetNut residential proxies come with CAPTCHA-solving software to ensure the website does not identify your scraping bot. In addition, they allow you to bypass geographical restrictions, which ensures timely access to data.

Alternatively, if you do not want to learn how to build an Indeed scraper, you can use our in-house solution, the NetNut Scraper API. In addition, if you want to scrape data using your mobile device, NetNut also has a customized solution for you. NetNut's Mobile Proxy uses real phone IPs for efficient web scraping and auto-rotates IPs for continuous data collection.

Conclusion

In this tutorial, we have examined a detailed guide on how to build an Indeed web scraper with Python. Indeed is a leading global platform for job seekers and recruiters; therefore, it contains a huge database.

Using a scraper helps you save time and resources and allows you to extract data within a short period. Since Indeed is dependent on JavaScript, Selenium has become a top choice for Python web scraping frameworks.

Some of the ethical practices include using a proxy, an authentic user-agent string, rate limiting, and more. Do you need help in choosing the best proxy solution for your Indeed job scraping needs? Feel free to contact us, and you can speak with an expert who can guide you.

Frequently Asked Questions

What are the challenges associated with extracting data from Indeed?

- Indeed depends on JavaScript, which means the content on its pages is dynamic

- Anti-scraping measures like CAPTCHAs, rate limiting, and checking user-agent strings

- Frequent updates to Indeed's website structure may break a scraper that depends on CSS selectors and HTML

- Indeed has a vast number of job listings, which are paginated; handling this amount of pagination can be complex

What are the advantages of using an Indeed web scraper?

- The use of an Indeed web scraper saves time because it allows you to collect data from hundreds of listings in a few minutes.

- Data collected from an Indeed web scraper can be easily integrated into email platforms to optimize workflow.

- Learning how to scrape Indeed data allows for highly targeted and relevant lead lists, because scrapers allow you to filter the results by location, skills, job type, etc., to obtain data that is useful.

Is it legal to use an Indeed web scraper?

Yes, it is legal to use an Indeed web scraper to gather data from the platform. The information is publicly available, so it is legal to extract the data. However, bear in mind that scraped data, like images, can be protected by copyright. In addition, using data collected with an Indeed web scraper unethically can violate data protection laws, in which case it can be considered illegal.