Large Language Models (LLMs) like GPT, Claude, and LLaMA are at the forefront of AI innovation, powering applications from chatbots and content generation to code assistants and search engines. These models are not programmed with rules. Instead, they are trained on massive volumes of text, from which they learn the statistical patterns of language, context, and meaning. But where does all this data come from? How do AI companies source such immense and diverse datasets to train LLMs effectively?

Understanding the origin and quality of training data is essential for building accurate, ethical, and scalable AI systems. The data that feeds an LLM directly influences its capabilities, biases, and reliability. With growing demand for multilingual, up-to-date, and domain-specific data, sourcing training datasets has become both a technical and strategic challenge.

In this article, we’ll explore the fundamentals of LLM training data, dive into where LLMs get their data, and show how tools like NetNut’s proxy infrastructure are essential for efficiently and ethically collecting large-scale web data. Whether you’re a data scientist, AI engineer, or business leader in the AI space, this guide will give you the clarity and tools you need to optimize your data acquisition workflows.

What Is LLM Training and Why Does It Matter?

LLM training is the process of teaching a machine learning model to understand and generate human-like language. At its core, this involves feeding the model vast amounts of text and allowing it to learn patterns, structures, relationships, and semantics across different languages and domains.

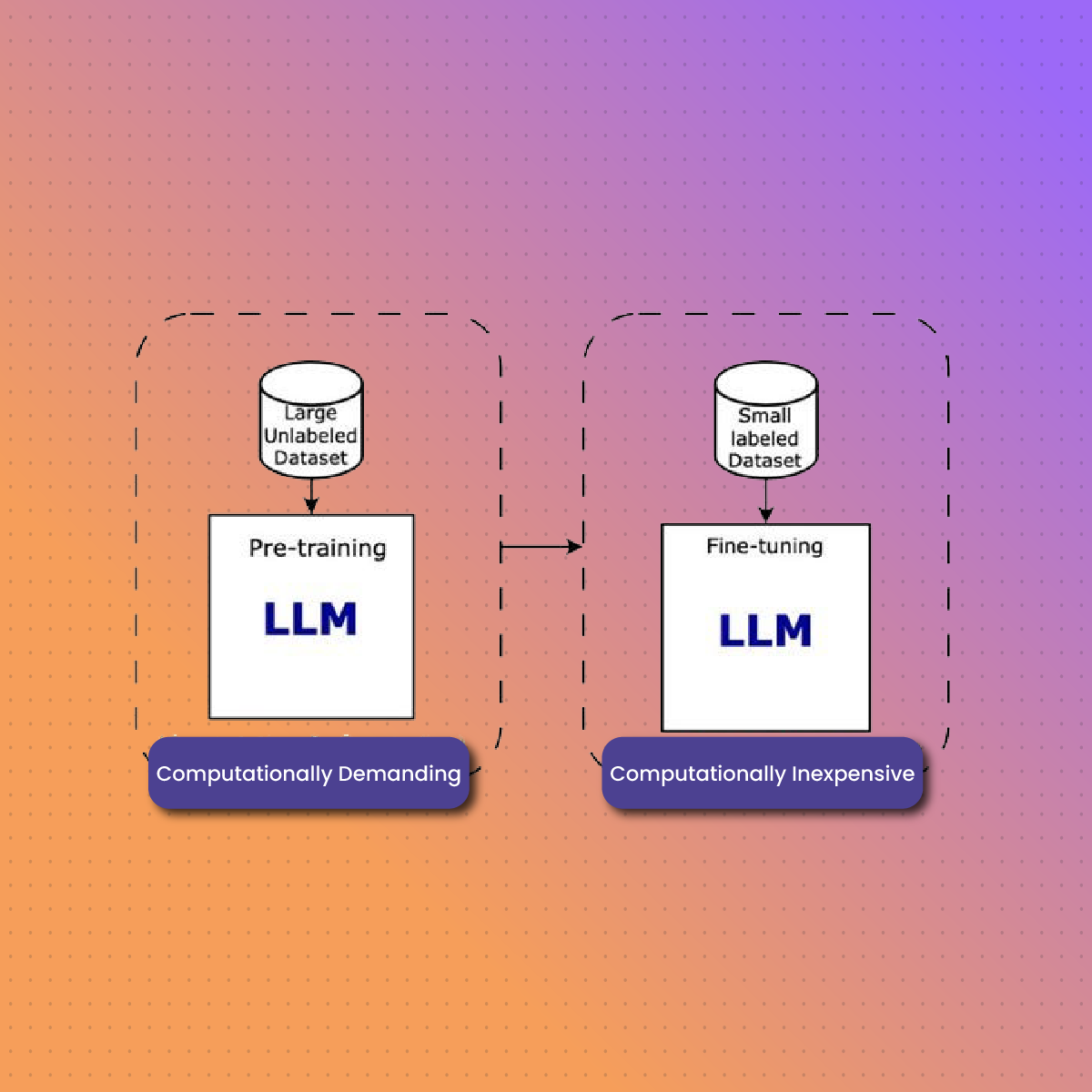

There are two main stages in LLM training:

- Pre-training: The model learns general language patterns using large-scale, diverse datasets (often sourced from the public web).

- Fine-tuning: The model is refined using task-specific or domain-specific data to improve accuracy for a particular use case (e.g., legal documents, healthcare records, customer support chats).
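To make "learning language patterns" more concrete: in pre-training, GPT-style (decoder-only) models are typically trained to predict each token from the tokens before it, minimizing the average negative log-likelihood of the training text. For a token sequence $x_1, \dots, x_T$ and model parameters $\theta$, the standard causal language-modeling loss is:

```latex
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_1, \dots, x_{t-1}\right)
```

Supervised fine-tuning often reuses essentially the same objective on a smaller, task-specific dataset. This is why the composition of the data, and not only its volume, shapes what the model ends up doing well.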

The quality, diversity, and scale of training data directly affect the performance and usefulness of the LLM. Poor or biased data can lead to models that are inaccurate, offensive, or unreliable. Conversely, models trained on rich, up-to-date, and balanced datasets are more likely to produce safe, relevant, and valuable outputs across a wide range of tasks.

This makes the data sourcing process not just a technical requirement but a strategic differentiator. AI companies need to ensure that they are collecting the right types of data at scale, from the right sources, and with the right tools—which is where solutions like NetNut’s residential proxies and datacenter proxy services come into play. With NetNut, teams can scrape web content securely and efficiently, even from geo-restricted or heavily protected sites, ensuring a steady stream of high-quality training data.

What Kind of Data Do LLMs Use for Training?

LLMs are trained on a wide spectrum of text-based data. The broader and more diverse the dataset, the more robust and flexible the language model becomes. This data typically includes both general knowledge sources and specialized content, ensuring that the model can understand context, language nuance, and domain-specific terminology.

Here are the primary types of data LLMs use during training:

- Books and Literature: Digitized books (especially public domain texts) provide structured, high-quality language with proper grammar and storytelling.

- News and Articles: News websites and blogs help LLMs understand current events, formal tone, and journalistic styles.

- Wikipedia and Encyclopedic Content: These sources offer factual, neutral, and informative content across a wide array of subjects.

- Web Forums and Q&A Platforms: Websites like Reddit, Quora, and Stack Overflow offer conversational, opinionated, and crowd-sourced content useful for dialogue generation and problem-solving.

- Social Media Posts: With proper filtering, social platforms provide insights into informal language, slang, and trending topics.

- Academic and Scientific Papers: Used in models geared toward research or technical fields, these datasets offer specialized vocabulary and structured knowledge.

- Code Repositories: For coding LLMs like Codex or StarCoder, public code from platforms like GitHub is used to train models to generate and understand code in many programming languages.

Some of these data sources are freely available, while others are collected through large-scale web scraping pipelines. In all cases, the training data must be cleaned, de-duplicated, and filtered to ensure the model doesn’t ingest low-quality, harmful, or redundant content.
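As a minimal sketch of the de-duplication step, assuming documents have already been extracted as plain strings, the snippet below drops exact duplicates by hashing a normalized form of each document. Production pipelines usually add near-duplicate detection (for example, MinHash) on top of this. The function names are illustrative, not taken from any specific library.

```python
import hashlib
import re
import unicodedata


def normalize(text: str) -> str:
    """Canonicalize a document so trivial variants hash identically."""
    text = unicodedata.normalize("NFKC", text)  # fold Unicode compatibility forms
    text = text.lower()
    text = re.sub(r"\s+", " ", text)  # collapse runs of whitespace
    return text.strip()


def deduplicate(docs: list[str]) -> list[str]:
    """Keep the first occurrence of each document, dropping exact duplicates
    after normalization (near-duplicates are NOT caught by this approach)."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in docs:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


if __name__ == "__main__":
    corpus = [
        "Proxies route traffic through another IP.",
        "proxies  route traffic through   another IP.",  # case/whitespace variant
        "Common Crawl is an open web archive.",
    ]
    print(deduplicate(corpus))  # keeps the first and third documents
```

Hashing a digest instead of storing full texts keeps the `seen` set small, which matters once the corpus reaches billions of documents.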

Gathering this data—especially from the live web—requires reliable, stealthy, and scalable access to websites. This is where NetNut’s proxy network becomes a critical enabler for LLM developers. With residential proxies that mimic real users, data collection systems can safely access dynamic content, forums, news platforms, and region-specific sites without triggering anti-bot systems or being blocked.

Where Do LLMs Get Their Training Data?

LLMs don’t learn from a single source—they’re trained on aggregated datasets compiled from a variety of origins. Some of the most common include:

1. Web Scraping of Public Websites

One of the most significant sources is automated scraping of publicly available websites, including news outlets, forums, blogs, product reviews, and more. Because many sites render content dynamically, tailor it by location, and limit how many requests a single IP can make, scraping tools often route traffic through residential or datacenter proxies to avoid detection, rate limits, and IP bans.

This is where NetNut’s proxies are invaluable—providing global IP rotation, location-based access, and the speed required for high-volume data acquisition.
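The basic idea of IP rotation can be sketched with nothing but the Python standard library. This is a minimal illustration that assumes your provider gives you a list of proxy URLs. The endpoints and credentials below are placeholders, not NetNut's actual format, and many providers instead rotate IPs server-side behind a single gateway address.

```python
import itertools
import urllib.request

# Hypothetical proxy endpoints; substitute the gateway URLs and credentials
# issued by your proxy provider (this format is an assumption).
PROXY_POOL = [
    "http://user:pass@proxy-1.example.com:8080",
    "http://user:pass@proxy-2.example.com:8080",
    "http://user:pass@proxy-3.example.com:8080",
]


class RoundRobinProxies:
    """Hands out proxy URLs in round-robin order so consecutive requests
    leave from different IPs."""

    def __init__(self, proxies: list[str]) -> None:
        if not proxies:
            raise ValueError("proxy pool must not be empty")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self) -> str:
        return next(self._cycle)

    def opener(self) -> urllib.request.OpenerDirector:
        """Build a urllib opener that routes HTTP and HTTPS via the next proxy."""
        proxy = self.next_proxy()
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)


def fetch(url: str, rotator: RoundRobinProxies, timeout: float = 10.0) -> bytes:
    """Fetch one URL through the next proxy in the pool."""
    with rotator.opener().open(url, timeout=timeout) as resp:
        return resp.read()
```

Usage would look like `rotator = RoundRobinProxies(PROXY_POOL)` followed by `fetch("https://example.com/", rotator)` for each page. Round-robin is the simplest policy; real pipelines often also retire proxies that start returning errors.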

2. Open-Source Datasets

AI research communities and institutions often release large-scale, curated datasets for public use. Examples include:

- Common Crawl – A massive open archive of web data.

- The Pile – A diverse mix of books, code, academic papers, and web content.

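- OpenWebText – An open-source recreation of OpenAI's WebText corpus, built from web pages linked in Reddit posts that received at least three karma, used as a rough signal of quality.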

While useful, open-source datasets are often not enough on their own. To build competitive LLMs, companies must complement them with fresh, real-world data from the web.

3. Licensed and Proprietary Datasets

Some AI companies license data directly from publishers, content providers, or commercial aggregators to access specialized or gated content. These deals can be expensive and time-consuming, which is why many teams prefer to supplement licensed data with proxy-enabled scraping of public sources.

4. User-Generated or Crowdsourced Data

In fine-tuning stages, some LLMs may use human feedback or domain-specific conversational data (e.g., customer service logs) to improve performance for particular industries.
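For fine-tuning on conversational data such as customer service logs, many training tools accept JSON Lines files in which each record holds a list of role/content messages. The exact schema varies by framework, so treat the field names below (and the hypothetical input log format) as assumptions. Real logs would also need personal data removed before use.

```python
import json
from typing import Optional

# Assumed mapping from a hypothetical support-log schema to chat roles.
ROLE_MAP = {"customer": "user", "agent": "assistant"}


def log_to_record(turns: list[dict], system_prompt: Optional[str] = None) -> dict:
    """Convert one support conversation into a {"messages": [...]} record.

    Each turn is assumed to look like {"speaker": "customer" or "agent", "text": "..."}.
    """
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for turn in turns:
        role = ROLE_MAP.get(turn["speaker"])
        if role is None:
            continue  # skip speakers we don't train on, e.g. internal notes
        messages.append({"role": role, "content": turn["text"].strip()})
    return {"messages": messages}


def write_jsonl(records: list[dict], path: str) -> None:
    """Write one JSON object per line."""
    with open(path, "w", encoding="utf-8") as f:
        for record in records:
            f.write(json.dumps(record, ensure_ascii=False) + "\n")


if __name__ == "__main__":
    log = [
        {"speaker": "customer", "text": "My order hasn't arrived. "},
        {"speaker": "internal_note", "text": "Check courier status."},
        {"speaker": "agent", "text": "Sorry about that! Let me check the tracking."},
    ]
    write_jsonl([log_to_record(log, "You are a helpful support agent.")], "support_sft.jsonl")
```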

Ultimately, the most effective LLMs are trained on a blend of public, proprietary, and real-time web data—much of which is accessible only through advanced scraping infrastructure powered by proxy networks like NetNut.

Challenges in LLM Data Collection

Collecting the massive volumes of text data required to train LLMs is far from straightforward. While the internet offers a seemingly endless supply of content, turning that content into usable, high-quality training data involves a host of technical, legal, and operational challenges.

1. Scale and Infrastructure Limitations

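Training an LLM requires billions, and for today's largest models trillions, of tokens. At a few bytes per token, a corpus of several trillion tokens amounts to tens of terabytes of cleaned text, typically distilled from petabytes of raw crawled web data. Collecting and processing this volume in a reasonable time frame demands high-performance infrastructure and robust parallel processing capabilities.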
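A back-of-the-envelope calculation shows how token counts map to storage. The sketch assumes roughly 4 bytes of text per token, a common rule of thumb for English with subword tokenizers. The real ratio depends on the tokenizer and the language.

```python
def text_bytes_for_tokens(num_tokens: int, bytes_per_token: float = 4.0) -> float:
    """Rough storage estimate for tokenized text.

    bytes_per_token is an assumption (~4 for English); other languages differ.
    """
    return num_tokens * bytes_per_token


def human_readable(num_bytes: float) -> str:
    """Format a byte count using decimal (SI) units."""
    for unit in ["B", "KB", "MB", "GB", "TB", "PB"]:
        if num_bytes < 1000:
            return f"{num_bytes:.1f} {unit}"
        num_bytes /= 1000
    return f"{num_bytes:.1f} EB"


if __name__ == "__main__":
    for tokens in (10**9, 10**12, 15 * 10**12):
        print(f"{tokens:,} tokens ~ {human_readable(text_bytes_for_tokens(tokens))}")
```

By this estimate, even a 15-trillion-token corpus is only about 60 TB of text. The petabyte scale comes from the raw crawl, most of which is discarded during filtering and de-duplication.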

2. Data Quality and Noise

Much of the web is filled with duplicate, low-quality, or irrelevant content. If not properly filtered, this noise can degrade LLM performance or introduce bias. Effective data pipelines must include de-duplication, content scoring, and content moderation systems.
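Content scoring can start with cheap heuristics before any model-based classifier is applied. The sketch below is loosely modeled on rule-based filters published for large web corpora, such as the word-count, mean-word-length, symbol-ratio and alphabetic-word checks described for DeepMind's MassiveText. The thresholds here are illustrative assumptions, not a recommended configuration.

```python
import re

# A word "contains a letter" if this pattern (any Unicode letter) matches it.
_LETTER = re.compile(r"[^\W\d_]")


def passes_quality_filter(
    text: str,
    min_words: int = 50,
    max_words: int = 100_000,
    min_mean_word_len: float = 3.0,
    max_mean_word_len: float = 10.0,
    max_symbol_ratio: float = 0.1,
    min_alpha_word_ratio: float = 0.8,
) -> bool:
    """Return True if a document passes simple heuristic quality checks.

    Thresholds are illustrative defaults, not tuned values.
    """
    words = text.split()
    n = len(words)
    if not (min_words <= n <= max_words):
        return False  # too short to be useful, or suspiciously long

    mean_len = sum(len(w) for w in words) / n
    if not (min_mean_word_len <= mean_len <= max_mean_word_len):
        return False  # e.g. symbol soup or concatenated junk

    # Hash marks and ellipses are common in tag clouds, teasers and boilerplate.
    symbols = text.count("#") + text.count("...") + text.count("\u2026")
    if symbols / n > max_symbol_ratio:
        return False

    alpha_words = sum(1 for w in words if _LETTER.search(w))
    return alpha_words / n >= min_alpha_word_ratio


if __name__ == "__main__":
    good = "The model learns useful patterns from clean text. " * 10
    print(passes_quality_filter(good))          # True
    print(passes_quality_filter("Buy now!!!"))  # False (too short)
```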

3. Geo-Restrictions and Content Access

Many valuable content sources are either geo-blocked or customized based on location. Without access to region-specific content, LLMs risk developing geographic bias or missing out on multilingual and culturally diverse data.

4. IP Bans, Rate Limits, and CAPTCHAs

Websites increasingly use anti-bot systems to block large-scale scraping attempts. These include IP throttling, user-agent filtering, and CAPTCHAs—all of which can severely disrupt data collection efforts.
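When a site does throttle a crawler, typically with HTTP 429 (Too Many Requests) or 503 (Service Unavailable), the conventional well-behaved response is to slow down and retry later rather than immediately. Below is a minimal standard-library sketch of exponential backoff with "full jitter". It assumes the `Retry-After` header, when present, is given in seconds. The retry limits are illustrative.

```python
import random
import time
import urllib.error
import urllib.request
from typing import Optional

RETRYABLE_STATUS = {429, 503}


def backoff_delays(max_retries: int, base: float = 1.0, cap: float = 60.0,
                   rng: Optional[random.Random] = None) -> list[float]:
    """Full-jitter backoff: retry i waits a random time in [0, min(cap, base * 2**i)]."""
    rng = rng or random.Random()
    return [rng.uniform(0, min(cap, base * 2 ** i)) for i in range(max_retries)]


def retry_after_seconds(header_value: Optional[str]) -> Optional[float]:
    """Parse a numeric Retry-After header (the HTTP-date form is not handled here)."""
    if header_value is None:
        return None
    try:
        return max(0.0, float(header_value))
    except ValueError:
        return None


def fetch_with_backoff(url: str, max_retries: int = 5, sleep=time.sleep) -> bytes:
    """GET a URL, backing off on 429/503 and re-raising any other HTTP error."""
    delays = backoff_delays(max_retries)
    for attempt in range(max_retries + 1):
        try:
            with urllib.request.urlopen(url, timeout=10) as resp:
                return resp.read()
        except urllib.error.HTTPError as err:
            if err.code not in RETRYABLE_STATUS or attempt == max_retries:
                raise
            # Prefer the server's own hint; fall back to the jittered schedule.
            wait = retry_after_seconds(err.headers.get("Retry-After"))
            sleep(wait if wait is not None else delays[attempt])
    raise RuntimeError("unreachable")
```

Jitter spreads retries from many workers over time, so they do not all hit the server again at the same instant.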

5. Legal and Ethical Considerations

AI developers must navigate complex issues around copyright, data ownership, user privacy, and ethical use of scraped content. Staying compliant with regulations like GDPR and CCPA is critical, especially when handling user-generated content.
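One concrete, widely used signal of a site's crawling preferences is its robots.txt file, and checking it is a common baseline for responsible collection. It does not by itself settle copyright or privacy questions. Python's standard library includes a parser. The rules and crawler name below are hypothetical.

```python
from urllib import robotparser

# Hypothetical robots.txt contents for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

USER_AGENT = "example-llm-data-bot"  # hypothetical crawler name


def build_policy(robots_txt: str) -> robotparser.RobotFileParser:
    """Parse robots.txt text into a policy object we can query per URL."""
    parser = robotparser.RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    policy = build_policy(ROBOTS_TXT)
    print(policy.can_fetch(USER_AGENT, "https://example.com/blog/post"))  # True
    print(policy.can_fetch(USER_AGENT, "https://example.com/private/x"))  # False
    print(policy.crawl_delay(USER_AGENT))                                # 5
```

For a live site, `RobotFileParser.set_url("https://example.com/robots.txt")` followed by `read()` fetches and parses the real file instead of a hard-coded string.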

To overcome these obstacles, AI companies need advanced tools—not just scrapers, but smart proxy networks that enable secure, undetectable, and scalable access to the open web. This is where NetNut’s proxies become indispensable.

How Proxies Enable Effective LLM Data Collection

Proxy networks play a pivotal role in LLM data acquisition by enabling scrapers and data pipelines to collect information at scale—without getting blocked, flagged, or limited. Here’s how:

1. Bypass Geo-Restrictions and IP Blocks

With NetNut’s global proxy network, AI teams can access websites from specific countries or regions, ensuring that their datasets are geo-diverse and multilingual. This is crucial for training inclusive models that understand language variation and local context.

2. Maintain Anonymity and Stealth

NetNut’s residential proxies route traffic through real ISP-assigned IP addresses, so requests resemble ordinary user traffic. This makes them much harder for websites to detect than datacenter proxies, whose IP ranges are often flagged. This stealth is essential for extracting data from sites with strict anti-bot protections.

3. Enable Scalable, High-Speed Scraping

NetNut’s infrastructure is built for performance, with high concurrency support, fast response times, and intelligent IP rotation. This lets you run thousands of requests in parallel while spreading them across many IPs, which reduces the chance of hitting per-IP rate limits or triggering blocks.
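High-concurrency scraping is usually implemented with a bounded worker pool, so throughput scales without opening an unlimited number of connections at once. Here is a minimal sketch using Python's `concurrent.futures`. `fetch` is any function that retrieves one URL, for example one that routes requests through a proxy. The default worker count is an illustrative assumption to tune against each site's limits.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Union

Result = Union[bytes, Exception]


def crawl_concurrently(urls: list[str], fetch: Callable[[str], bytes],
                       max_workers: int = 32) -> dict[str, Result]:
    """Fetch many URLs in parallel with a fixed cap on in-flight requests.

    Returns a mapping of url -> response body, or url -> the exception raised.
    """
    results: dict[str, Result] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # record the failure and keep crawling
                results[url] = exc
    return results
```

Threads suit this workload because each worker spends most of its time waiting on the network. Recording failures per URL makes it easy to requeue them later instead of aborting the whole batch.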

4. Access Mobile-Optimized and App-Based Content

Some data is only accessible through mobile-specific web views or apps. With NetNut’s mobile proxies, developers can access mobile-first platforms and APIs, collecting data that’s otherwise unreachable from standard desktops or datacenter networks.

5. Stay Compliant and In Control

NetNut offers transparent proxy management and analytics, so teams can monitor usage, manage regions, and ensure that scraping activities remain ethical and legally compliant.

In short, proxies are the backbone of web data pipelines for AI teams—and NetNut provides one of the most reliable, scalable, and secure proxy infrastructures in the world for LLM data collection.

NetNut Solutions for LLM Training Data Acquisition

At the core of every high-performing LLM is a reliable, diverse, and scalable data pipeline—and that’s exactly where NetNut delivers a competitive edge. Designed to meet the demanding needs of AI companies and data engineering teams, NetNut’s proxy infrastructure empowers organizations to collect massive volumes of high-quality web data efficiently and securely.

Here’s how NetNut supports your LLM training data acquisition process:

Global Proxy Coverage for Geo-Targeted Scraping

Access region-specific data with precision. NetNut offers millions of residential IPs across the globe, allowing your scrapers to simulate local traffic and bypass geo-restrictions. This ensures your LLMs can be trained on multilingual, culturally diverse, and location-specific content—key for building inclusive AI systems.

Enterprise-Grade Residential, Datacenter, and Mobile Proxies

Whether you need undetectable residential IPs, high-speed datacenter proxies for bulk scraping, or carrier-grade mobile proxies for app-based content, NetNut has it all. Choose the right proxy type for your data source, and scale as needed.

Intelligent IP Rotation and High Uptime

NetNut’s infrastructure includes automated IP rotation, ensuring that requests are spread across a broad pool of IPs to reduce the risk of bans and throttling. With 99.9% uptime, you can count on uninterrupted access to critical data sources—24/7.

Flexible API Integration and Dashboard Control

Integrate NetNut’s proxies directly into your data collection workflows with ease. The user-friendly dashboard provides real-time analytics, usage tracking, and IP management, so your team always stays in control.

Built for Compliance and Ethical Data Collection

NetNut operates with transparent sourcing policies and infrastructure that supports GDPR- and CCPA-compliant practices, making it a responsible choice for AI teams concerned about the ethical use of training data.

With NetNut, you don’t just get proxies—you get a complete data acquisition solution tailored for AI development, LLM training, and advanced data-driven applications.

Final Thoughts on LLM Training Data

Training powerful Large Language Models requires more than just compute power—it requires access to high-quality, large-scale datasets from across the web. From news and forums to code and research papers, the content that fuels LLMs is scattered, protected, and increasingly difficult to gather at scale.

To stay competitive, AI companies need efficient, compliant, and undetectable ways to source data from global websites—and that’s where NetNut’s proxy solutions become mission-critical.

Whether you’re pre-training a foundation model or fine-tuning for a specialized use case, NetNut’s residential, datacenter, and mobile proxies enable you to gather the exact training data you need—safely, reliably, and at scale.

Ready to power your next LLM with smarter data sourcing? Explore NetNut’s proxy network and start collecting training data with confidence.