Web scraping is no longer just about grabbing HTML elements and parsing text—it’s evolving into something smarter. As websites grow more dynamic and data becomes more unstructured, traditional scraping methods often fall short. That’s where Large Language Models (LLMs) come in, offering the ability to understand and extract content semantically, just like a human would.

At the forefront of this evolution is ScrapeGraphAI—an open-source framework that combines web scraping with LLM capabilities. By structuring scrapers as modular graphs and integrating AI at the data extraction layer, ScrapeGraphAI enables flexible, intelligent data workflows that are adaptable to a wide variety of use cases.

But even the smartest scraper needs access. Websites today are equipped with bot-detection systems, rate limits, and geo-restrictions that can block your scraping pipeline before it even starts. That’s why pairing ScrapeGraphAI with a high-quality proxy solution like NetNut is essential. With rotating residential and mobile proxies, NetNut helps you access content reliably and at scale, while making your scraping traffic much harder to detect.

In this guide, we’ll walk you through how ScrapeGraphAI works, how to set it up, and how to integrate it with NetNut for seamless, intelligent web scraping.

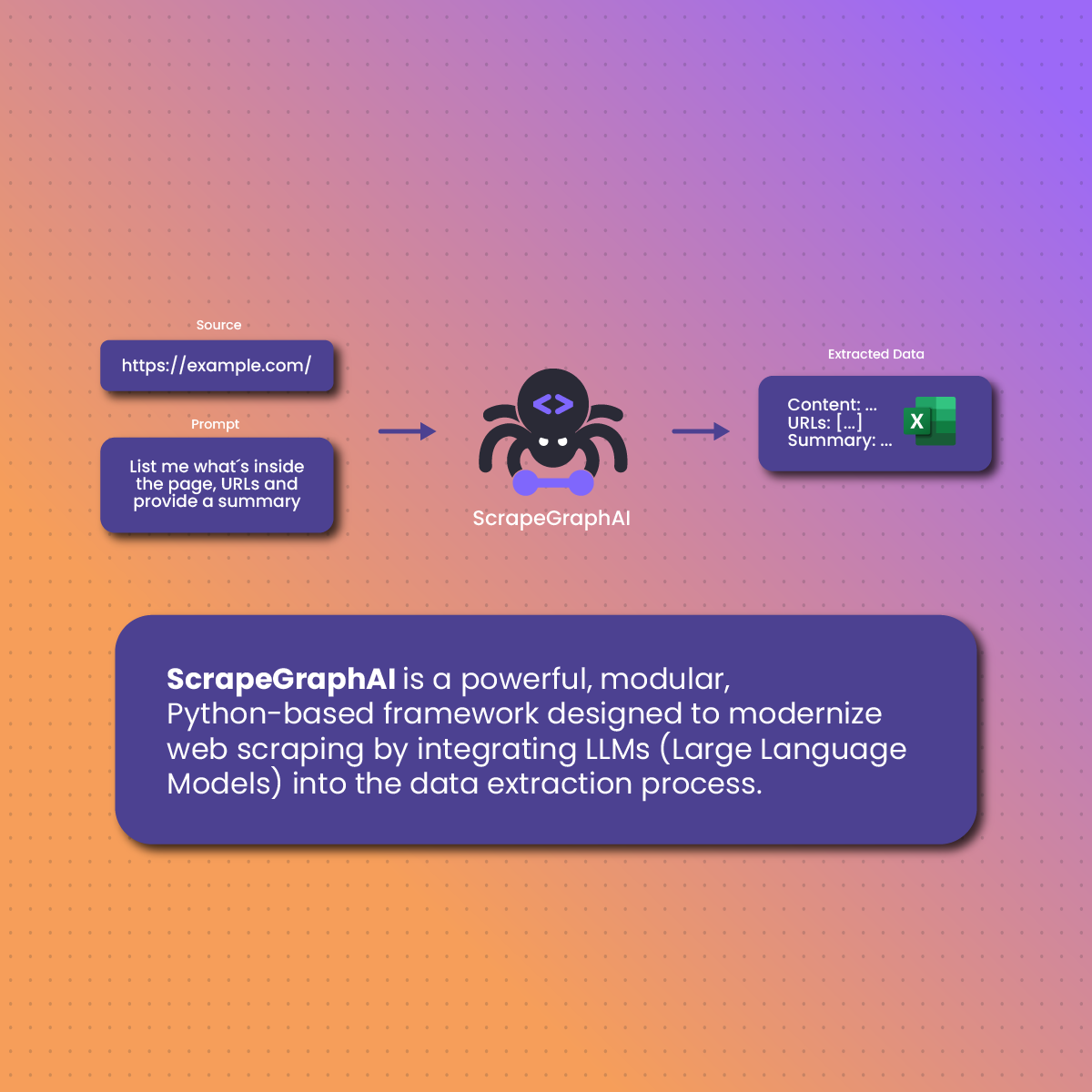

What is ScrapeGraphAI?

ScrapeGraphAI is a powerful, modular, Python-based framework designed to modernize web scraping by integrating LLMs (Large Language Models) into the data extraction process. Unlike traditional scrapers that rely on HTML parsing with CSS selectors or XPath rules, ScrapeGraphAI allows developers to create graph-based workflows that include both scraping and LLM-based data transformation nodes.

At a high level, ScrapeGraphAI helps you:

- Extract raw data from web pages

- Use LLMs to interpret, summarize, or clean that data

- Output structured results (like JSON or CSV) from semi-structured or unstructured content

This makes it particularly useful for scraping content like:

- Blog posts or articles

- Product descriptions

- Forum threads

- News summaries

- Legal or financial documents

One of the most compelling aspects of ScrapeGraphAI is its graph-based design. You can build modular scraping workflows where each node performs a specific task—like crawling, rendering, extracting, or summarizing. This flexibility enables users to quickly prototype and adapt scraping flows for new data sources or changing page structures.

While ScrapeGraphAI handles the intelligence layer, it still needs help getting past roadblocks—like IP bans, CAPTCHAs, and regional content restrictions. That’s where NetNut’s proxy infrastructure becomes critical, ensuring your LLM-enhanced scrapers can access the content they need without interruptions.

How ScrapeGraphAI Works

ScrapeGraphAI operates through a graph-based architecture, where each node in the graph represents a task or operation. This modular design makes it easy to customize scraping flows and insert AI functionality where it makes the most impact.

Core Workflow of ScrapeGraphAI:

- Input Node (URL Loader): Starts the graph with one or more URLs to scrape. These can be static or dynamically loaded pages.

- Web Crawler or Renderer: Some pages need browser-based rendering (especially if they’re JavaScript-heavy). You can plug in tools like Playwright to render the content before passing it to the LLM.

- LLM Processing Node: Here’s where the magic happens. The raw HTML or text is sent to an LLM (like OpenAI’s GPT) using your API key. The LLM is prompted to extract or summarize specific content: titles, authors, product specs, summaries, etc.

- Post-Processing Node: The LLM output is formatted into structured formats (e.g., JSON), optionally filtered or cleaned, and saved to a database or file.

- Output Node: Defines where the final data should go: local storage, a remote database, or an API endpoint.

This LLM-driven approach reduces the need for brittle CSS selectors and XPath rules that break whenever a webpage changes its structure. Instead, you rely on semantic understanding to pull out the data you need, which makes extraction more tolerant of layout changes and easier to maintain across many sites.
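To make the node-by-node flow concrete, here is a minimal sketch of a pipeline where each node is a plain function that receives a shared state dictionary and returns an updated one. This is an illustration of the idea only, not ScrapeGraphAI’s own node classes: the function names (`load_url`, `extract`, `post_process`, `run_pipeline`) are hypothetical, the page content is hard-coded, and the "LLM" step is a simple string operation standing in for a model call.

```python
from typing import Callable, Dict, List

# A node takes the shared state dict and returns an updated copy.
Node = Callable[[Dict], Dict]


def load_url(state: Dict) -> Dict:
    # Input node: a real graph would fetch or render state["url"] here.
    return {**state, "html": "<h1>Hello</h1><p>Graphs chain small steps.</p>"}


def extract(state: Dict) -> Dict:
    # Stand-in for the LLM node: a real node would send the page to a model.
    text = state["html"].replace("<h1>", "").replace("</h1>", "\n")
    text = text.replace("<p>", "").replace("</p>", "")
    title, body = text.split("\n", 1)
    return {**state, "title": title, "body": body}


def post_process(state: Dict) -> Dict:
    # Post-processing node: keep only the structured fields.
    return {
        "url": state["url"],
        "title": state["title"].strip(),
        "body": state["body"].strip(),
    }


def run_pipeline(nodes: List[Node], state: Dict) -> Dict:
    # Run the nodes in order, feeding each one the previous node's output.
    for node in nodes:
        state = node(state)
    return state


result = run_pipeline(
    [load_url, extract, post_process],
    {"url": "https://example.com/post"},
)
print(result)
```

Because every node shares the same signature, swapping the renderer or the extraction step means replacing one function in the list rather than rewriting the scraper.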

Installing and Setting Up ScrapeGraphAI

Before you can build your first AI-powered scraper, you’ll need to install and configure ScrapeGraphAI in your development environment. Thankfully, the setup process is straightforward.

Requirements

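- Python 3.8 or later (recent ScrapeGraphAI releases may require a newer Python version, so check the package’s stated requirement before installing)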

- A modern operating system (Linux, macOS, or Windows)

- A working terminal or command prompt

- An API key from your preferred LLM provider (e.g., OpenAI)

Step-by-Step Installation

- Create a virtual environment (optional, but recommended)

- Install ScrapeGraphAI via pip

- Install additional dependencies for LLMs (if needed)

- Set your LLM API key as an environment variable
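In practice these steps usually come down to a handful of commands: `python -m venv .venv` to create the environment, `pip install scrapegraphai` to install the package, `playwright install` to fetch the browsers used for rendering, and exporting your key (for example as `OPENAI_API_KEY`). Exact package extras and variable names can differ between releases and providers, so treat them as assumptions to check against the project’s README. The short script below is a hypothetical preflight check, not part of ScrapeGraphAI, that reports whether the prerequisites appear to be in place.

```python
import importlib.util
import os
import sys


def preflight(min_version=(3, 8), package="scrapegraphai", key_var="OPENAI_API_KEY"):
    """Report whether the basic prerequisites appear to be in place."""
    return {
        # Raise min_version if the release you install requires a newer Python.
        "python_ok": sys.version_info >= min_version,
        # find_spec returns None when the package cannot be imported.
        "package_installed": importlib.util.find_spec(package) is not None,
        # Only checks that the variable is set, not that the key is valid.
        "api_key_set": bool(os.environ.get(key_var)),
    }


for check, ok in preflight().items():
    print(f"{check}: {'ok' if ok else 'missing'}")
```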

Once installed, you’re ready to begin building graphs that scrape, analyze, and extract meaningful data using LLMs.

Your First ScrapeGraphAI Project (Step-by-Step Tutorial)

Let’s walk through a simple yet powerful example: scraping a blog article and using GPT-4 to summarize its content.

Goal:

Scrape the content of a tech blog post and generate a 3-bullet summary using an LLM.

Step 1: Define Your Graph
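A minimal sketch of what this step can look like is shown below. It assumes the `SmartScraperGraph` class and the `prompt` / `source` / `config` arguments described in the ScrapeGraphAI README, plus the `provider/model` naming used by recent releases; older releases may expect different model names, so verify against the version you have installed. The helper names (`build_graph_config`, `summarize_article`) and the prompt wording are our own.

```python
import os

PROMPT = (
    "Return a JSON object with two keys: 'title' (the article title) and "
    "'summary' (a list of exactly three short bullet points)."
)


def build_graph_config(model: str = "openai/gpt-4o-mini") -> dict:
    # The "provider/model" format is an assumption based on recent releases.
    return {
        "llm": {
            "api_key": os.environ.get("OPENAI_API_KEY", ""),
            "model": model,
        },
        "verbose": False,
        "headless": True,
    }


def summarize_article(url: str) -> dict:
    # Imported here so the config can be built without the package installed.
    from scrapegraphai.graphs import SmartScraperGraph

    graph = SmartScraperGraph(
        prompt=PROMPT,
        source=url,
        config=build_graph_config(),
    )
    return graph.run()
```

Calling `summarize_article("https://example.com/blog/post")` (a placeholder URL) would then fetch the page, send it to the model, and return the parsed result.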

Step 2: Run Your Script

Execute your script and review the output. The result should be a structured JSON response with:

- Title of the article

- A concise summary (generated by GPT or your chosen model)

Step 3: Add Complexity

Expand your graph with additional nodes if needed:

- Crawl multiple pages

- Filter by topic or keyword

- Feed results into a database or vector store
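The three extensions above can be sketched with the standard library alone. In this hypothetical example, `scrape` is any function that takes a URL and returns a dictionary with `title` and `summary` keys (for instance a wrapper around a graph’s run call), results are filtered by keyword, and matches are stored in SQLite. The table name and schema are illustrative assumptions.

```python
import json
import sqlite3
from typing import Callable, Iterable, List


def matches_topic(record: dict, keywords: Iterable[str]) -> bool:
    # Case-insensitive keyword match against title and summary text.
    summary = " ".join(record.get("summary", []))
    text = (record.get("title", "") + " " + summary).lower()
    return any(k.lower() in text for k in keywords)


def scrape_many(urls: List[str], scrape: Callable[[str], dict], keywords: List[str]) -> List[dict]:
    # Scrape each URL in turn and keep only records that match a keyword.
    results = []
    for url in urls:
        record = {"url": url, **scrape(url)}
        if matches_topic(record, keywords):
            results.append(record)
    return results


def save(records: List[dict], db_path: str = ":memory:") -> sqlite3.Connection:
    # Store each record as JSON, keyed by URL so re-runs overwrite old rows.
    conn = sqlite3.connect(db_path)
    conn.execute("CREATE TABLE IF NOT EXISTS articles (url TEXT PRIMARY KEY, data TEXT)")
    conn.executemany(
        "INSERT OR REPLACE INTO articles VALUES (?, ?)",
        [(r["url"], json.dumps(r)) for r in records],
    )
    conn.commit()
    return conn
```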

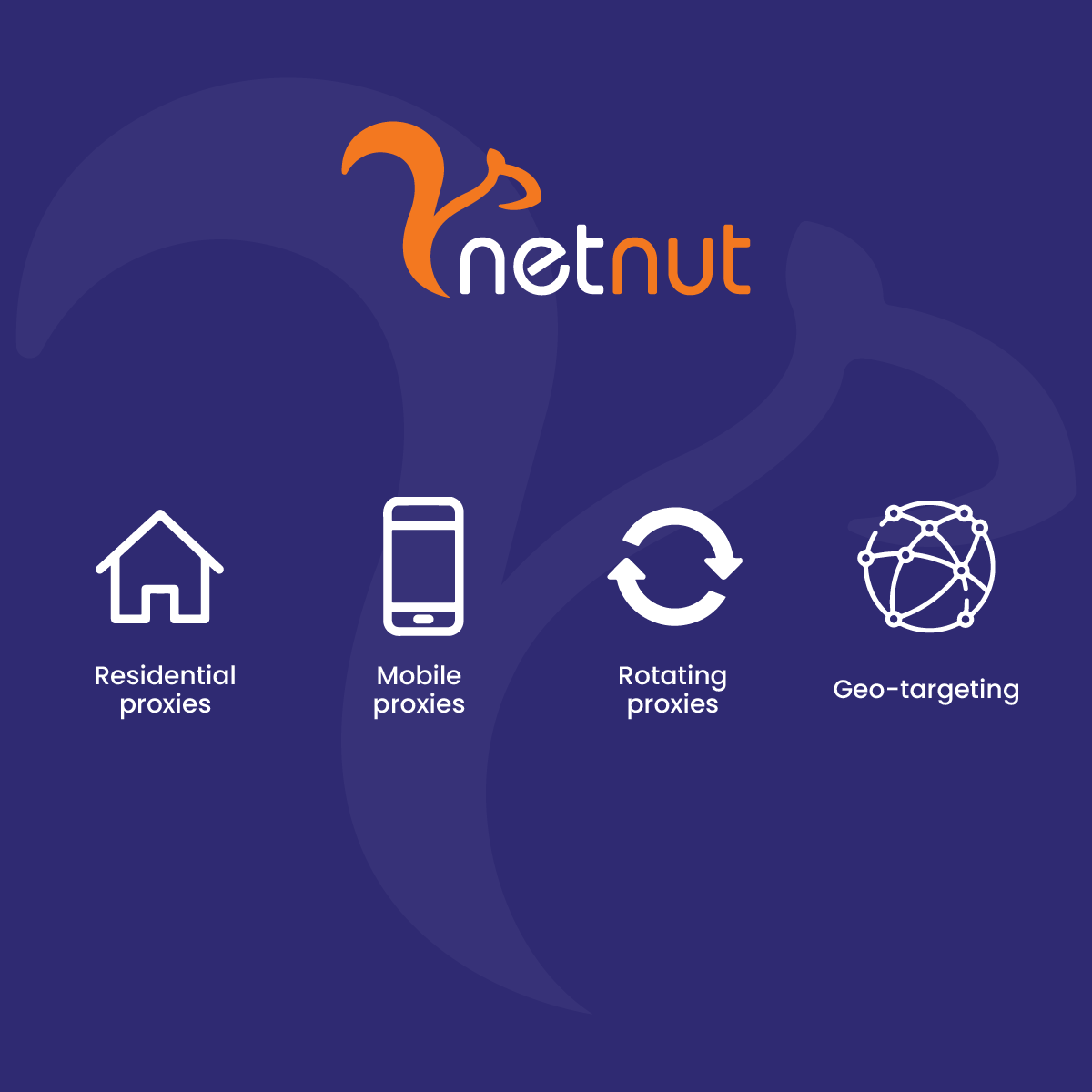

Integrating NetNut Proxies for Reliable Scraping

Even the smartest LLM scraper fails if it can’t access the content in the first place. ScrapeGraphAI doesn’t inherently solve the problem of IP blocks, bot detection, or geo-restricted content. That’s where NetNut comes in.

Why Use Proxies with ScrapeGraphAI?

- Many sites detect and block repeated requests from a single IP

- Graph-based workflows that fetch many pages in parallel can hit a site’s rate limits quickly

- Certain content may be accessible only from specific regions or devices (e.g., mobile views)

How NetNut Helps:

- Residential proxies simulate real users from around the world

- Mobile proxies grant access to mobile-optimized or restricted content

- Rotating proxies change IPs automatically between requests

- Geo-targeting lets you scrape region-specific pages
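As a rough sketch of how a proxy can be wired into a graph configuration: ScrapeGraphAI’s documentation describes passing Playwright-style proxy settings under a `loader_kwargs` key, which is what the example below assumes; confirm the key names against the version you use. The proxy host, port, and environment variable names here are placeholders, not real NetNut endpoints. Use the gateway address and credentials from your own NetNut account.

```python
import os


def build_proxy_config(server: str, username: str, password: str) -> dict:
    # Playwright-style proxy settings, passed through "loader_kwargs".
    return {
        "loader_kwargs": {
            "proxy": {
                "server": server,
                "username": username,
                "password": password,
            }
        }
    }


def with_proxy(graph_config: dict, proxy_config: dict) -> dict:
    # Return a new config instead of mutating the caller's dict.
    return {**graph_config, **proxy_config}


config = with_proxy(
    {
        "llm": {
            "api_key": os.environ.get("OPENAI_API_KEY", ""),
            "model": "openai/gpt-4o-mini",  # model name is an assumption
        }
    },
    build_proxy_config(
        # Placeholder host; replace with the endpoint from your proxy provider.
        server=os.environ.get("PROXY_SERVER", "http://proxy.example.com:8080"),
        username=os.environ.get("PROXY_USERNAME", ""),
        password=os.environ.get("PROXY_PASSWORD", ""),
    ),
)
```

Reading credentials from environment variables keeps them out of source control and makes it easy to switch between proxy pools per deployment.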

Advanced Use Cases with ScrapeGraphAI + LLMs

Once you’re comfortable with basic scraping tasks, ScrapeGraphAI really begins to shine in more advanced, real-world workflows—especially when paired with LLMs and robust proxy infrastructure like NetNut’s.

1. Multi-Page and Paginated Scraping

ScrapeGraphAI supports the creation of workflows that follow pagination links or index pages, allowing you to crawl:

- News archives

- Product listings

- Forum threads

This is especially effective when combined with rotating proxies from NetNut, ensuring your scraper doesn’t get blocked across dozens or hundreds of pages.
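One simple way to handle numbered pagination is to generate the page URLs up front and crawl them with a delay between requests. The sketch below assumes the site uses a `page` query parameter, which varies from site to site; `scrape` is again any hypothetical function that takes a URL and returns a result.

```python
import time
from typing import Callable, Iterable, Iterator, List
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_urls(base_url: str, first: int, last: int, param: str = "page") -> Iterator[str]:
    # Yield base_url with the page parameter set, preserving other query params.
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    for n in range(first, last + 1):
        query[param] = str(n)
        yield urlunsplit(parts._replace(query=urlencode(query)))


def crawl(
    urls: Iterable[str],
    scrape: Callable[[str], dict],
    delay_seconds: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[dict]:
    results = []
    for i, url in enumerate(urls):
        if i:
            sleep(delay_seconds)  # pause between pages, but not before the first
        results.append(scrape(url))
    return results
```

For sites that expose only a "next" link instead of page numbers, the same `crawl` loop applies, but the URLs have to be discovered from each scraped page rather than generated in advance.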

2. Retrieval-Augmented Generation (RAG) Pipelines

ScrapeGraphAI can be integrated with vector databases (like Pinecone or Weaviate) to build RAG-based systems. Use cases include:

- Chatbots fed with live news or blog content

- Custom knowledge bases built from scraped data

- Search engines with summarized results
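The retrieval half of such a pipeline can be illustrated without any external service. The sketch below is a toy stand-in: `TinyStore` plays the role of a vector database such as Pinecone or Weaviate, and `embed` uses bag-of-words counts where a real system would call an embedding model. All names are hypothetical; it only shows the store, search, and prompt-building steps.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    # Toy "embedding": term counts. A real pipeline would call an embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self.items: List[Tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> List[str]:
        # Rank stored texts by similarity to the query and return the top k.
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question: str, store: TinyStore) -> str:
    # Retrieved passages become the context the LLM is asked to answer from.
    context = "\n".join(store.search(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```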

3. Real-Time Monitoring Agents

Set up your graph to run periodically, scraping stock market headlines, trending products, or competitor updates. With NetNut’s rotating or mobile proxies, these AI agents can run around the clock with a much lower risk of being flagged.
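A monitoring agent mostly needs two things: a schedule and a way to tell new items from ones it has already seen. The sketch below is one hypothetical way to do that with hashing; `scrape` is any function returning a list of dictionaries with `url` and `title` keys, and `on_new` is whatever should happen with fresh items (an alert, a database write). For long-running deployments, a cron job or task scheduler is usually a better fit than an in-process loop.

```python
import hashlib
import time
from typing import Callable, List, Set


def fingerprint(item: dict) -> str:
    # Hash stable fields so re-scraped but unchanged items are recognized.
    raw = f"{item.get('url', '')}|{item.get('title', '')}"
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()


def new_items(items: List[dict], seen: Set[str]) -> List[dict]:
    # Return only items not seen before, and remember them for next time.
    fresh = []
    for item in items:
        fp = fingerprint(item)
        if fp not in seen:
            seen.add(fp)
            fresh.append(item)
    return fresh


def monitor(
    scrape: Callable[[], List[dict]],
    interval_seconds: float,
    on_new: Callable[[List[dict]], None],
    cycles: int,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    seen: Set[str] = set()
    for i in range(cycles):
        fresh = new_items(scrape(), seen)
        if fresh:
            on_new(fresh)
        if i < cycles - 1:
            sleep(interval_seconds)  # wait before the next scrape
```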

4. Domain-Specific Data Extraction

Need to extract and summarize content from niche sources (e.g., legal filings, real estate listings, or healthcare databases)? ScrapeGraphAI’s LLM layer is well suited to interpreting inconsistent formats, and NetNut’s geo-targeted proxies help you reach those sources reliably, including pages that are only served to specific regions.

These advanced use cases showcase the synergy between intelligent data parsing and smart access routing—ScrapeGraphAI for intelligence, and NetNut for reach and resilience.

Benefits of Using ScrapeGraphAI for LLM Web Scraping

ScrapeGraphAI isn’t just another scraping framework—it’s built for the next generation of data extraction, where context, flexibility, and adaptability are key. Here’s why it’s quickly becoming a go-to tool for AI developers and data engineers:

Semantic Accuracy

Instead of relying on fragile selectors, LLMs understand what content matters. ScrapeGraphAI taps into this capability, producing better results even when page layouts change.

Modular Graph Architecture

Workflows are visualized and built as graphs—making them easy to update, extend, or debug. You can quickly plug in new LLMs, inputs, or output formats.

Reduced Maintenance

With fewer hardcoded selectors, your scrapers are less likely to break when sites update their HTML structure—saving you countless hours of refactoring.

LLM + Proxy Integration = Maximum Reach

LLMs make the scraper smart. Proxies make it scalable and harder to detect. Together, they allow you to extract complex data from across the web, with minimal friction.

Rapid Prototyping

Need to test a new scraping concept or run a quick experiment? ScrapeGraphAI lets you build working flows in minutes—ideal for agile teams and MVP development.

Common Challenges and How to Solve Them

Even with powerful tools like ScrapeGraphAI and LLMs, web scraping isn’t without its hurdles. Here are the most common issues developers face—and how to overcome them.

Challenge 1: LLM Hallucination

Sometimes, LLMs generate inaccurate or overly confident outputs.

Solution:

- Refine your prompts so they are specific and unambiguous

- Ask for outputs in structured formats (e.g., JSON)

- Use post-processing nodes to validate or cross-check data
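A validation step can be as simple as parsing the model’s output and rejecting anything that fails basic checks. The sketch below assumes the output is a JSON object with a `title` string and a three-item `summary` list (the shape used in the summarization example in this guide); the function name and the specific checks are illustrative, and the title check is only a heuristic for catching invented titles.

```python
import json


def parse_and_validate(raw: str, source_text: str) -> dict:
    """Parse LLM output and reject results that fail basic checks."""
    data = json.loads(raw)  # raises ValueError on malformed JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")

    title = data.get("title")
    summary = data.get("summary")
    if not isinstance(title, str) or not title.strip():
        raise ValueError("missing or empty 'title'")
    if not isinstance(summary, list) or len(summary) != 3:
        raise ValueError("'summary' must be a list of three bullets")

    # Cross-check: a title that never appears in the page text is suspicious.
    if title.strip().lower() not in source_text.lower():
        raise ValueError("title not found in source text")
    return data
```

Records that raise `ValueError` can be retried with a stricter prompt or routed to manual review instead of being written to storage.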

Challenge 2: API Costs or Rate Limits

Calling GPT-4 repeatedly can get expensive, and high request volumes can run into the provider’s rate limits.

Solution:

- Use smaller models for non-critical tasks

- Batch multiple extractions into a single call

- Cache intermediate results
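Caching is the easiest of these to sketch. The hypothetical wrapper below keys each result on the model, prompt, and page content, so an identical request never reaches the LLM twice. `call_llm` stands in for whatever function actually calls your model; the in-memory dictionary could be swapped for a file or database to persist results across runs.

```python
import hashlib
import json
from typing import Callable, Dict


def cache_key(model: str, prompt: str, content: str) -> str:
    # Identical model, prompt, and content always map to the same key.
    payload = json.dumps([model, prompt, content])
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class CachedExtractor:
    def __init__(self, call_llm: Callable[[str, str, str], dict]) -> None:
        self.call_llm = call_llm
        self.cache: Dict[str, dict] = {}
        self.misses = 0  # number of real LLM calls made

    def extract(self, model: str, prompt: str, content: str) -> dict:
        key = cache_key(model, prompt, content)
        if key not in self.cache:
            self.misses += 1
            self.cache[key] = self.call_llm(model, prompt, content)
        return self.cache[key]
```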

Challenge 3: Blocked or Throttled Requests

Sites may ban your IP or show fake content when scraping too aggressively.

Solution:

- Integrate NetNut’s rotating residential or mobile proxies

- Use proxy rotation per session or request

- Set crawl delays and rotate user agents
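On the client side, crawl delays and user-agent rotation are often combined with retries and exponential backoff. The sketch below is a generic pattern, not a ScrapeGraphAI or NetNut feature: `fetch` is a hypothetical function that takes a URL and a headers dictionary and raises an exception when the request is blocked, and the user-agent strings are examples only.

```python
import itertools
import random
import time
from typing import Callable

USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/124.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 14_4) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.4 Safari/605.1.15",
]


def fetch_with_retry(
    fetch: Callable[[str, dict], str],
    url: str,
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    agents = itertools.cycle(USER_AGENTS)
    for attempt in range(max_attempts):
        try:
            # Each attempt uses the next user agent in the rotation.
            return fetch(url, {"User-Agent": next(agents)})
        except Exception:
            # In real code, narrow this to your HTTP client's error types.
            if attempt == max_attempts - 1:
                raise
            # Exponential backoff with jitter: roughly 1s, 2s, 4s, ...
            sleep(base_delay * (2 ** attempt) + random.uniform(0, 0.5))
    raise RuntimeError("max_attempts must be at least 1")
```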

Challenge 4: JavaScript-Heavy Pages

Some pages load content dynamically and won’t work with simple HTTP requests.

Solution:

- Use ScrapeGraphAI with browser renderers like Playwright

- Route Playwright sessions through NetNut proxies to ensure access remains unblocked
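If you render pages with Playwright directly, the proxy is set when the browser is launched. The sketch below uses Playwright’s synchronous API and its `proxy` launch option; the helper names are our own, and the server address and credentials you pass in should come from your proxy provider rather than from this example.

```python
def proxy_settings(server: str, username: str, password: str) -> dict:
    # Shape expected by Playwright's `proxy` launch option.
    return {"server": server, "username": username, "password": password}


def render_page(url: str, proxy: dict, timeout_ms: int = 30000) -> str:
    # Imported lazily; requires `pip install playwright` and `playwright install`.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True, proxy=proxy)
        try:
            page = browser.new_page()
            # Wait until network activity settles so dynamic content has loaded.
            page.goto(url, wait_until="networkidle", timeout=timeout_ms)
            return page.content()
        finally:
            browser.close()
```

The returned HTML can then be passed to the LLM extraction step in place of a raw HTTP response.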

By combining ScrapeGraphAI’s adaptability with NetNut’s resilient, stealthy proxy infrastructure, you can overcome nearly any obstacle in modern LLM-based web scraping.

FAQs

What models does ScrapeGraphAI support?

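ScrapeGraphAI supports a wide range of LLM providers, including OpenAI (GPT-3.5, GPT-4) and open-source models such as LLaMA or Mistral, which can be run locally (for example through Ollama) or accessed through hosted services like Hugging Face. For hosted models, you supply an API key and choose the model; locally hosted models typically need only the model name and the address of the local endpoint.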

Is ScrapeGraphAI suitable for production scraping?

Yes—with the right setup. For scalable production use, pair ScrapeGraphAI with:

- Reliable proxies (e.g., NetNut)

- Rate limiting and retry logic

- Caching and result validation

Do I still need proxies if I’m using LLMs?

Absolutely. LLMs enhance what you extract—but they don’t solve access issues. Without proxies, your scraper may get blocked, throttled, or served fake content. NetNut’s proxies ensure consistent and undetected access.

Can ScrapeGraphAI handle dynamic content?

Yes. You can integrate it with Playwright or browser renderers to load JavaScript-heavy sites before passing the content to an LLM for extraction.

How can I reduce LLM costs while using ScrapeGraphAI?

Use summarization only where necessary. You can:

- Pre-filter pages using keywords

- Cache and reuse LLM outputs

- Use smaller models or open-source LLMs for low-stakes tasks