Artificial intelligence (AI) is transforming industries by automating decision-making, improving predictions, and optimizing processes. However, the effectiveness of AI models heavily depends on the quality and quantity of training data they receive. Without a diverse and comprehensive dataset, AI systems can struggle with inaccuracies, biases, and inefficiencies. This makes web scraping an essential technique for gathering vast amounts of real-world data to train AI models effectively.

Web scraping enables AI developers to extract text, images, financial data, product information, and more from publicly available sources. AI-powered scrapers can continuously collect and process data at scale, feeding machine learning models with updated and relevant information. This is particularly crucial for AI applications in natural language processing (NLP), computer vision, financial forecasting, and cybersecurity, where large datasets are required to improve accuracy and adaptability.

Despite its advantages, AI-driven web scraping comes with several challenges. Websites implement IP bans, rate limits, CAPTCHAs, and anti-bot measures to prevent automated data extraction. Additionally, geo-restrictions can limit access to region-specific information, making it difficult for AI companies to train models on global datasets. These barriers can slow down AI development and reduce the effectiveness of machine learning models.

Proxies play a critical role in overcoming these challenges. By routing web scraping requests through different IP addresses, proxies allow AI scrapers to bypass restrictions, access geo-specific data, and avoid detection. Solutions like NetNut’s premium proxy network provide AI companies with fast, reliable, and scalable access to web data, ensuring that their AI models receive the high-quality training data they need.

In this article, we will explore how AI is revolutionizing web scraping, the key use cases for AI-driven data extraction, and the best methods for implementing proxies to enhance the efficiency and security of web scraping operations.

How AI is Used for Web Scraping

AI has transformed web scraping from a simple data extraction method into an adaptive, intelligent process capable of handling complex websites, dynamic content, and anti-scraping defenses. Unlike traditional web scrapers that follow predefined rules, AI-powered web scrapers leverage machine learning (ML), natural language processing (NLP), and computer vision to extract, process, and analyze web data more efficiently.

One of the biggest advantages of AI-driven web scraping is its ability to adapt to changes in website structures. Many websites update their HTML layouts, use JavaScript-rendered content, or implement bot detection systems that make it difficult for conventional scrapers to function. AI-powered scrapers learn from past interactions and can dynamically adjust their extraction techniques, making them more resilient against structural changes.

AI also enhances data cleaning and structuring, ensuring that extracted information is properly formatted and free from duplicates or irrelevant content. This is particularly useful for training AI models that require high-quality, structured datasets. Traditional scrapers often collect raw, unfiltered data that requires extensive post-processing, whereas AI-driven scrapers can automate data validation, classification, and normalization in real time.

In addition, AI-based web scrapers can integrate computer vision techniques to extract data from non-text elements, such as images, graphs, and infographics. For example, AI-powered scrapers can analyze product images in e-commerce listings, extract text from scanned documents, or identify patterns in financial charts—all of which contribute to richer training datasets for machine learning models.

While AI greatly enhances web scraping efficiency, it does not eliminate the challenges associated with large-scale data collection. Many websites deploy IP blocking, rate limits, and geo-restrictions to prevent automated access. This is where proxies become an essential component of AI-driven web scraping, ensuring uninterrupted data extraction by providing rotating IPs, geo-targeted access, and anonymity. In the next section, we’ll explore the key use cases where AI-powered web scraping is driving innovation across industries.

Key Use Cases of Web Scraping for AI Training

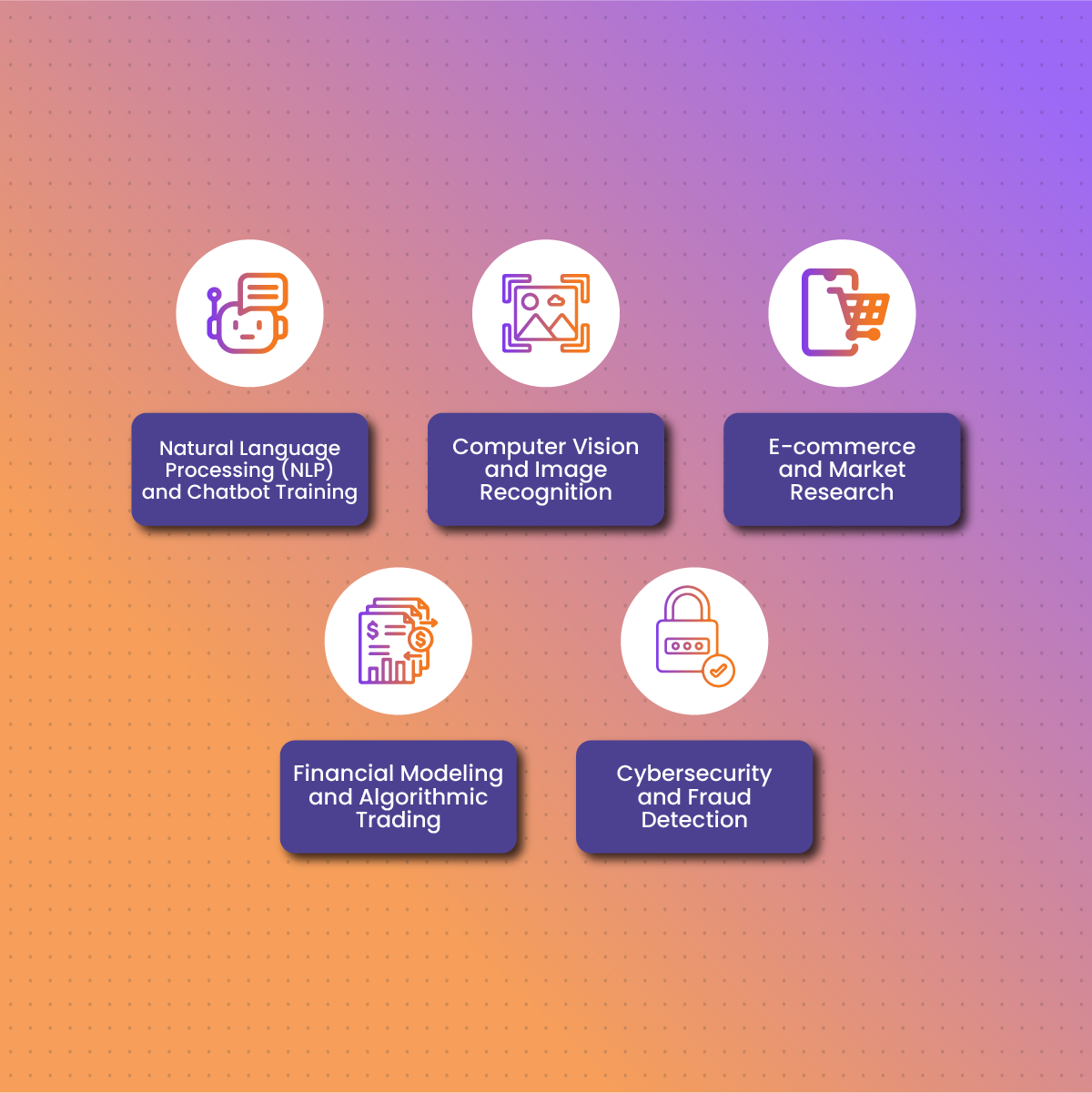

AI-powered web scraping has become essential for gathering large-scale datasets that fuel machine learning models. By automating data extraction from websites, AI systems can analyze vast amounts of real-world information, improving their accuracy and adaptability. Various industries rely on AI-driven web scraping to enhance their models and drive innovation. Below are some of the most impactful use cases.

Natural Language Processing (NLP) and Chatbot Training

AI models designed for chatbots, sentiment analysis, language translation, and text generation require diverse text datasets to improve their understanding of human language. Web scraping enables AI developers to collect news articles, blog posts, product reviews, and social media comments, providing real-world conversational data. By continuously gathering fresh content, NLP models can better adapt to new slang, trends, and linguistic nuances.

Computer Vision and Image Recognition

AI-powered web scraping extends beyond text-based data to visual information, which is crucial for training computer vision models. E-commerce platforms, social media, and stock image websites contain vast libraries of images that AI can use for facial recognition, object detection, medical imaging, and autonomous vehicle training. By extracting and categorizing images, AI models improve their ability to recognize patterns, differentiate objects, and make visual predictions.

E-commerce and Market Research

Retail and e-commerce companies use AI-driven web scraping to monitor competitor pricing, track product availability, and analyze consumer behavior. By collecting product descriptions, customer reviews, and pricing trends, AI-powered recommendation systems can offer personalized shopping experiences and dynamic pricing strategies. This data is also valuable for brands conducting market research to identify trends and optimize product offerings.

Financial Modeling and Algorithmic Trading

AI in finance depends on real-time market data to make accurate predictions. Web scraping allows financial institutions and traders to extract stock prices, economic reports, and financial news from multiple sources. Machine learning models process this data to predict market fluctuations, detect fraud, and optimize algorithmic trading strategies. The ability to collect and analyze financial data at scale gives AI-driven trading systems a competitive edge.

Cybersecurity and Fraud Detection

AI models used for cybersecurity and fraud prevention require large-scale data collection to identify patterns in malicious activity. Web scraping enables AI to monitor phishing websites, track fake social media accounts, and analyze fraudulent transaction behaviors. By continuously gathering cybersecurity-related data, AI models can stay ahead of evolving cyber threats and detect anomalies in real time.

Each of these use cases relies on high-quality, structured datasets to improve AI accuracy. However, web scraping at scale presents significant challenges, including IP bans, anti-bot detection, and geo-restrictions. In the next section, we’ll examine these obstacles and how AI companies can overcome them using proxies and advanced web scraping techniques.

Challenges in AI-Powered Web Scraping

While AI-powered web scraping has revolutionized data collection for machine learning, it is not without its challenges. Websites actively implement anti-scraping measures to prevent automated access, making large-scale data extraction increasingly difficult. AI companies must navigate technical, legal, and ethical challenges to ensure their data collection efforts remain efficient and compliant.

IP Bans, Rate Limits, and CAPTCHA Challenges

One of the biggest hurdles in AI-driven web scraping is IP detection and blocking. Websites monitor traffic patterns and automatically block IP addresses that send too many requests in a short period. Many platforms also enforce rate limits, restricting the number of requests an IP can make before being temporarily or permanently blocked.
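On the scraper side, one common response to rate limiting is to slow down rather than retry immediately. The sketch below is a minimal illustration under stated assumptions: it assumes the server signals throttling with HTTP 429 and may send a `Retry-After` value expressed in seconds, and `backoff_delay` with its parameters is a hypothetical helper, not part of any particular library.

```python
import random


def backoff_delay(attempt, retry_after=None, base=1.0, cap=60.0, rng=random):
    """Return how many seconds to wait before retry number `attempt` (0-based).

    If the server supplied a Retry-After value in seconds, honor it as given.
    Otherwise use exponential backoff with full jitter, capped at `cap`.
    """
    if retry_after is not None:
        return float(retry_after)
    upper = min(cap, base * (2 ** attempt))
    return rng.uniform(0, upper)
```

Drawing the wait from a random range, instead of using the exact exponential value, keeps many parallel workers from retrying at the same instant.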

To counteract scrapers, websites frequently deploy CAPTCHA challenges, which require human verification before granting access. AI-powered web scrapers that cannot solve these CAPTCHAs are often disrupted, slowing down or even halting data collection.

Dynamic Website Structures and JavaScript-Rendered Content

Modern websites rely on JavaScript, AJAX, and dynamically loaded content, making it difficult for traditional scrapers to extract meaningful data. Many web pages do not load content in static HTML format but instead rely on client-side rendering, where data appears only after user interaction or JavaScript execution.

AI scrapers must integrate browser automation tools (like Selenium, Puppeteer, or Playwright) that drive headless browsers to mimic real users and interact with dynamic content. While this approach improves data extraction, it increases processing time and computational costs.

Geo-Restrictions Limiting Data Access

Many websites restrict access to their content based on the user’s location. AI models that require region-specific datasets for training face obstacles when trying to collect information from localized e-commerce markets, financial reports, or government databases. Without the ability to access data from multiple geographic locations, AI models risk being biased or incomplete.

Legal and Ethical Considerations

AI companies must also navigate data privacy laws such as GDPR and CCPA, as well as website Terms of Service (ToS). Web scraping must be conducted in a way that respects user privacy, intellectual property rights, and data security regulations. Companies that fail to comply with these laws and terms risk legal repercussions and reputational damage.

Slow Data Retrieval and Scalability Issues

As AI projects scale, the demand for real-time, high-frequency data collection increases. However, slow scraping speeds, inefficient request handling, and server limitations can hinder data retrieval. AI companies need optimized proxy solutions to ensure their scrapers operate at maximum efficiency without disruptions.

Overcoming these challenges requires advanced scraping strategies, including the use of proxies to bypass restrictions, rotate IP addresses, and access geo-restricted content. In the next section, we’ll explore how proxies solve these issues and enable AI companies to scale their web scraping operations efficiently.

The Role of Proxies in AI Web Scraping

Proxies play a critical role in AI-powered web scraping, enabling seamless, large-scale data collection while bypassing common obstacles such as IP bans, rate limits, geo-restrictions, and CAPTCHAs. By acting as an intermediary between AI scrapers and target websites, proxies provide anonymity, access to region-specific data, and improved request distribution, making them essential for efficient AI data extraction.

Bypassing IP Bans and Rate Limits

Websites track incoming traffic and block IP addresses that send an unusually high number of requests in a short period. This is a major challenge for AI-driven scrapers, which operate at scale and require uninterrupted access to data. Rotating proxies solve this issue by changing the IP address for each request, distributing traffic across a vast pool of IPs and preventing detection.

For example, NetNut’s rotating residential proxies allow AI scrapers to simulate real user behavior, making it difficult for websites to identify them as bots. This ensures that AI models can continuously gather data without the risk of being blocked or flagged.

Accessing Geo-Restricted Content for Diverse AI Training

AI models trained on region-specific data require access to localized content, such as e-commerce listings, financial news, and social media trends from different countries. However, many websites restrict access based on a user’s geographic location, preventing scrapers from retrieving global datasets.

By using geo-targeted proxies, AI developers can route their traffic through IP addresses from specific countries or cities, bypassing location-based restrictions. This is particularly valuable for multilingual NLP models, AI-driven market research, and financial forecasting systems that rely on diverse, region-specific datasets.

Avoiding CAPTCHAs and Anti-Bot Detection

Websites employ CAPTCHA challenges, fingerprinting techniques, and JavaScript-based detection to block automated scrapers. AI-powered web scrapers must use advanced anti-detection methods, including proxy rotation, user-agent spoofing, and headless browsing, to stay undetected.

Residential and mobile proxies are particularly effective at avoiding CAPTCHAs since they use real IPs from legitimate internet users, making them less likely to trigger bot detection systems. Pairing proxies with AI-driven CAPTCHA solvers further enhances scraping efficiency, reducing disruptions to data extraction.

Enhancing Speed and Scalability in AI Scraping

AI models often require high-speed, real-time data retrieval to improve their accuracy and adaptability. Datacenter proxies provide fast, low-latency connections that enable AI scrapers to extract data quickly and efficiently. Load balancing strategies, combined with intelligent IP management, further improve performance, allowing AI systems to scale data collection without slowdowns.

NetNut’s high-speed proxy network offers enterprise-grade performance, ensuring AI-driven scrapers can retrieve vast amounts of data at optimal speeds without sacrificing reliability.

Ensuring Security and Compliance in AI Data Collection

AI companies must comply with data privacy regulations such as GDPR and CCPA while collecting web data. Proxies provide an additional layer of security by masking the scraper’s identity, reducing the risk of tracking, data leaks, or unauthorized access. Compliance itself still depends on what data is collected and how it is used, so working with a trusted proxy provider like NetNut supports, rather than replaces, ethical, secure, and legally compliant AI web scraping.

With the right proxy strategy, AI companies can overcome data collection challenges, optimize efficiency, and scale their web scraping operations. In the next section, we’ll explore the best methods for implementing AI-driven web scraping using proxies.

Best Methods for AI-Driven Web Scraping

AI-powered web scraping requires a combination of advanced scraping techniques, proxy management strategies, and intelligent automation to extract high-quality data efficiently. Implementing the right methods ensures faster, more scalable, and undetectable data collection, reducing the risk of IP bans, CAPTCHAs, and incomplete datasets. Below are the best practices for optimizing AI-driven web scraping.

Leveraging AI-Powered Scrapers for Adaptive Data Extraction

Traditional web scrapers rely on static rules and predefined patterns, making them vulnerable to website changes. AI-powered scrapers, on the other hand, use machine learning algorithms to detect structural changes in web pages and adjust their extraction techniques automatically.

For instance, AI models trained on different HTML patterns can identify content elements dynamically, allowing scrapers to extract data from constantly evolving websites without manual reconfiguration. This is especially useful for e-commerce, news aggregation, and social media monitoring, where content updates frequently.
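The goal of surviving layout changes can be illustrated without any machine learning. The sketch below is a deliberately simple rule-based stand-in, not a learned model: it tries several candidate attribute selectors in order and returns the first one that yields text. The selector list, `AttrTextFinder`, `find_text`, and `extract_price` are all hypothetical names chosen for this example, and only the Python standard library is used.

```python
from html.parser import HTMLParser

VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # tags with no closing tag


class AttrTextFinder(HTMLParser):
    """Collect the text of the first element whose attribute `name` equals `value`."""

    def __init__(self, name, value):
        super().__init__()
        self.name, self.value = name, value
        self.depth = 0       # > 0 while inside the matched element
        self.done = False    # True once the matched element has closed
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if self.done or tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1
        elif (self.name, self.value) in attrs:
            self.depth = 1

    def handle_startendtag(self, tag, attrs):
        pass  # self-closing tags carry no text

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
            if self.depth == 0:
                self.done = True

    def handle_data(self, data):
        if self.depth:
            self.chunks.append(data)


def find_text(html, name, value):
    """Return the stripped text of the first matching element, or None."""
    finder = AttrTextFinder(name, value)
    finder.feed(html)
    return "".join(finder.chunks).strip() or None


# Candidate selectors tried in order; all are hypothetical examples.
PRICE_SELECTORS = [("itemprop", "price"), ("class", "price"), ("data-testid", "price")]


def extract_price(html):
    """Return the first non-empty match among the candidate selectors."""
    for name, value in PRICE_SELECTORS:
        text = find_text(html, name, value)
        if text:
            return text
    return None
```

A learned extractor would replace the fixed selector list with a model that scores candidate elements, but the fallback structure stays the same: when one strategy stops matching, another is tried before a human has to intervene.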

Using Rotating Proxies and User-Agent Spoofing

Websites detect and block scrapers that send many requests from the same IP address in a short period. To stay undetected, AI-driven scrapers should use rotating proxies, which assign a new IP address for each request, mimicking real user behavior.

Additionally, user-agent spoofing—changing browser and device identifiers—helps AI scrapers appear as different users. Combining residential or mobile proxies with randomized headers and cookies further improves stealth, making it harder for websites to recognize scrapers as bots.
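A minimal sketch of per-request rotation might look like the following. The proxy endpoints and user-agent strings are placeholders invented for illustration, and `request_settings` is a hypothetical helper; a real deployment would take its endpoints and credentials from the proxy provider.

```python
import itertools
import random

# Hypothetical proxy endpoints and user-agent strings, for illustration only.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/16.0 Safari/605.1.15",
]


def request_settings(proxy_cycle, rng=random):
    """Build per-request settings: the next proxy in the cycle and a random user agent."""
    proxy = next(proxy_cycle)
    return {
        "proxies": {"http": proxy, "https": proxy},
        "headers": {"User-Agent": rng.choice(USER_AGENTS)},
    }


proxy_cycle = itertools.cycle(PROXIES)
first = request_settings(proxy_cycle)   # uses the first proxy in the list
second = request_settings(proxy_cycle)  # uses the second proxy in the list
```

The returned dictionary is shaped so that it could be passed to an HTTP client such as the third-party `requests` library, for example `requests.get(url, timeout=10, **first)`. That call is left out here so the sketch runs without network access.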

Deploying Headless Browsers for JavaScript-Rendered Content

Many modern websites rely on JavaScript, AJAX, and dynamic content loading, making it difficult for traditional HTML-based scrapers to extract data. AI-powered scrapers should use browser automation tools like Selenium, Puppeteer, or Playwright, which drive headless browsers, simulate real browser interactions, and allow scrapers to access fully rendered web pages.

By using headless browsing combined with AI-driven click simulation and form submission, scrapers can work through login forms, dropdown menus, and infinite scrolling, making data extraction more efficient.
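As one possible illustration, the sketch below renders a page with Playwright's synchronous Python API and optionally routes the browser through a proxy. It assumes the third-party `playwright` package and a Chromium build are installed; `launch_options` and `render_page` are hypothetical helper names, and the proxy URL is whatever the caller supplies.

```python
def launch_options(proxy_server=None):
    """Build keyword arguments for a headless browser launch, optionally behind a proxy."""
    options = {"headless": True}
    if proxy_server:
        options["proxy"] = {"server": proxy_server}
    return options


def render_page(url, proxy_server=None):
    """Return the HTML of `url` after JavaScript has run.

    Requires the third-party `playwright` package and an installed Chromium build.
    """
    # Imported lazily so the helper above works without the optional dependency.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(**launch_options(proxy_server))
        try:
            page = browser.new_page()
            # Wait until network activity settles so client-side content has loaded.
            page.goto(url, wait_until="networkidle")
            return page.content()
        finally:
            browser.close()
```

Rendering every page in a browser is much slower than a plain HTTP request, so a scraper would typically reserve this path for pages whose content does not appear in the initial HTML.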

Integrating API-Based Scraping for Structured Data Collection

Some websites provide official APIs that allow structured data access. While web scraping through HTML parsing is often necessary, API-based collection can be more efficient, more reliable, and less legally risky when an API is available. AI scrapers should be programmed to detect and prioritize API endpoints when possible, reducing reliance on traditional web scraping techniques.
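The "API first, HTML as fallback" idea can be sketched as a small planning step. Everything specific here is an assumption made for illustration: the `KNOWN_APIS` registry, the endpoint URL, and the JSON schema with a `products` list are hypothetical and do not describe any real site.

```python
import json
from urllib.parse import urlparse

# Hypothetical mapping from site host to a documented JSON API endpoint.
KNOWN_APIS = {
    "shop.example.com": "https://shop.example.com/api/v1/products",
}


def plan_fetch(page_url):
    """Prefer a known API endpoint for the page's host; otherwise fall back to HTML."""
    host = urlparse(page_url).netloc
    api_url = KNOWN_APIS.get(host)
    if api_url:
        return {"mode": "api", "url": api_url}
    return {"mode": "html", "url": page_url}


def parse_api_products(body):
    """Parse a JSON API response into (name, price) tuples; the schema is assumed."""
    data = json.loads(body)
    return [(item["name"], float(item["price"])) for item in data["products"]]
```

Because an API response is already structured, the parsing step is a few lines and does not break when the site's page layout changes.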

Enhancing Data Quality with AI-Driven Validation and Cleaning

Extracted data often contains duplicates, inconsistencies, or irrelevant information. AI-powered data cleaning algorithms can automatically detect and remove duplicate entries, outliers, and irrelevant data points, ensuring a high-quality dataset for machine learning models.

By applying natural language processing (NLP) and entity recognition, AI scrapers can filter out noise and categorize data accurately. This is particularly useful for sentiment analysis, topic modeling, and content classification.
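Before any model-based filtering, a basic cleaning pass can already remove much of the noise. The sketch below is a rule-based baseline, not the NLP or entity-recognition step described above: it normalizes text, drops very short records, and removes exact duplicates. The function names and the `min_length` threshold are assumptions chosen for this example.

```python
import hashlib
import re
import unicodedata


def normalize_text(text):
    """Apply Unicode NFKC normalization, collapse whitespace, and lowercase."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip().lower()


def clean_records(records, min_length=20):
    """Drop records that are too short or duplicate an earlier one after normalization."""
    seen = set()
    cleaned = []
    for raw in records:
        norm = normalize_text(raw)
        if len(norm) < min_length:
            continue  # too short to be useful training text
        digest = hashlib.sha256(norm.encode("utf-8")).hexdigest()
        if digest in seen:
            continue  # exact duplicate after normalization
        seen.add(digest)
        cleaned.append(norm)
    return cleaned
```

Hashing the normalized text keeps memory use bounded on large crawls, since only fixed-size digests are stored rather than every record seen so far.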

Combining Proxies with AI-Optimized Load Balancing

Large-scale AI data collection requires handling millions of requests per day without overloading servers. Proxies help distribute traffic efficiently by implementing load balancing strategies, ensuring scrapers operate at peak performance.

Using NetNut’s premium proxy network, AI companies can achieve fast, uninterrupted data retrieval, allowing scrapers to scale without being blocked or throttled.

By applying these best methods, AI companies can enhance their web scraping capabilities, improve data accuracy, and scale their operations while remaining undetected. In the next section, we’ll cover the best practices for implementing proxies in AI web scraping to maximize efficiency and security.

Implementing Proxies for AI Web Scraping: Best Practices

To maximize the efficiency, speed, and security of AI-driven web scraping, proper proxy implementation is essential. Simply using proxies is not enough—AI companies must integrate them strategically to avoid detection, optimize data collection, and maintain compliance with legal and ethical standards. Below are the best practices for implementing proxies in AI-powered web scraping.

Selecting the Right Proxy Type for AI Scraping

Different types of proxies serve different purposes in AI-driven data collection. Choosing the right one ensures optimal speed, reliability, and access to restricted content.

- Residential Proxies – Ideal for long-term, undetectable scraping as they use real IP addresses assigned to household users. Best for avoiding bans and accessing geo-restricted content.

- Datacenter Proxies – Best for high-speed, large-scale data extraction. While cost-effective, they may be easier for websites to detect.

- Mobile Proxies – Useful for scraping mobile-specific content such as app store data or mobile ad verification.

- ISP Proxies – Offer a balance between speed and legitimacy, making them effective for automated AI scraping requiring high trust levels.

NetNut’s proxy network provides high-speed, reliable, and ethically sourced proxies, ensuring AI scrapers operate without interruptions.

Rotating Proxies to Prevent IP Bans

AI-driven scrapers send thousands of requests per hour, making IP rotation a necessity to avoid detection. A well-implemented proxy rotation strategy spreads requests across many IPs, preventing websites from flagging and blocking scrapers. Common rotation strategies include:

- Session-based rotation – Maintains the same IP for a set period before switching, useful for maintaining login sessions.

- Request-based rotation – Assigns a new IP for every request, ideal for high-volume data extraction where stealth is required.

- Geo-rotation – Switches between IPs from different locations, allowing AI models to collect region-specific data without geo-blocking issues.
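The three strategies above can be combined in one small component. The sketch below is one way to do it, assuming proxy pools grouped by country code; the pool contents, the `ProxyRotator` class, and the five-minute session lifetime are hypothetical choices for illustration.

```python
import itertools
import time

# Hypothetical proxy pools keyed by country code.
POOLS = {
    "us": ["http://us1.example.com:8080", "http://us2.example.com:8080"],
    "de": ["http://de1.example.com:8080", "http://de2.example.com:8080"],
}


class ProxyRotator:
    """Pick a proxy per request, or keep one per session for a fixed time window."""

    def __init__(self, pools, session_ttl=300.0, clock=time.monotonic):
        self._cycles = {country: itertools.cycle(p) for country, p in pools.items()}
        self._sessions = {}  # session_id -> (proxy, expiry time)
        self._ttl = session_ttl
        self._clock = clock

    def per_request(self, country):
        """Request-based rotation: the next proxy from the country's pool on each call."""
        return next(self._cycles[country])

    def for_session(self, session_id, country):
        """Session-based rotation: reuse the same proxy until the TTL expires."""
        now = self._clock()
        entry = self._sessions.get(session_id)
        if entry and entry[1] > now:
            return entry[0]
        proxy = self.per_request(country)
        self._sessions[session_id] = (proxy, now + self._ttl)
        return proxy
```

Geo-rotation falls out of the `country` argument: requesting `"us"` for one batch and `"de"` for the next draws from different regional pools, while `for_session` keeps a logged-in flow on a single IP.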

Optimizing Request Timing and Scraping Behavior

Scrapers that send too many requests in a short period can trigger anti-bot detection. AI-driven web scraping should mimic human behavior by:

- Randomizing request intervals to avoid predictable traffic patterns.

- Using real browser headers and user-agent strings to blend in with normal traffic.

- Implementing click simulations and scrolling actions to appear as a real user.
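Randomized request intervals, the first item above, can be sketched in a few lines. The mean delay, jitter fraction, and minimum below are arbitrary example values, and `human_like_delays` and `paced` are hypothetical helper names.

```python
import random
import time


def human_like_delays(n, mean=3.0, jitter=0.5, minimum=0.5, rng=random):
    """Return n delays in seconds, each drawn uniformly from mean*(1 ± jitter), with a floor."""
    low, high = mean * (1 - jitter), mean * (1 + jitter)
    return [max(minimum, rng.uniform(low, high)) for _ in range(n)]


def paced(items, delays, sleep=time.sleep):
    """Yield items one at a time, sleeping for the matching delay before each one after the first."""
    for index, item in enumerate(items):
        if index > 0:
            sleep(delays[index - 1])
        yield item
```

A scraper could iterate over `paced(urls, human_like_delays(len(urls) - 1))` and fetch each URL as it is yielded, so that requests never arrive at a fixed, machine-like interval.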

Pairing AI scrapers with NetNut’s intelligent IP rotation ensures scrapers remain undetected while efficiently extracting data.

Ensuring Compliance with Data Privacy Laws

AI companies must ensure their web scraping practices align with GDPR, CCPA, and website Terms of Service (ToS). Ethical AI scraping should:

- Avoid collecting personally identifiable information (PII) without consent.

- Respect website robots.txt guidelines when applicable.

- Use data strictly for legal and ethical AI training purposes.
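Checking robots.txt can be automated with Python's standard library. The sketch below parses an example robots.txt held in a string so that it runs offline; the file contents and the `my-ai-scraper` user-agent name are made up for illustration, and a real scraper would download the file from the target site first.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content; a real scraper would download it from the target site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_text):
    """Parse robots.txt text that has already been fetched."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)
allowed = parser.can_fetch("my-ai-scraper", "https://example.com/products/1")
blocked = parser.can_fetch("my-ai-scraper", "https://example.com/private/data")
delay = parser.crawl_delay("my-ai-scraper")  # seconds between requests, if declared
```

Running this check before queuing a URL, and using any declared crawl delay as a lower bound on request spacing, keeps the scraper within the limits the site has published.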

By using trusted proxy providers like NetNut, AI businesses ensure secure and compliant data collection while minimizing legal risks.

Monitoring Proxy Performance for Maximum Efficiency

Regular proxy performance monitoring helps AI companies maintain high-speed, uninterrupted data retrieval. Key factors to track include:

- Success rates – Ensuring proxies are not frequently blocked.

- Latency and response time – Minimizing delays in AI data processing.

- IP blacklist detection – Replacing flagged proxies to avoid downtime.
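These metrics can be tracked with a small in-process monitor. The sketch below is a minimal example, not a description of any provider's analytics: the `ProxyStats` and `ProxyMonitor` classes, the 80% success threshold, and the five-attempt minimum are assumptions chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class ProxyStats:
    """Running success and latency counters for one proxy."""
    successes: int = 0
    failures: int = 0
    total_latency: float = 0.0

    @property
    def success_rate(self):
        attempts = self.successes + self.failures
        return self.successes / attempts if attempts else 1.0

    @property
    def mean_latency(self):
        return self.total_latency / self.successes if self.successes else 0.0


@dataclass
class ProxyMonitor:
    """Track per-proxy results and report proxies that should be replaced."""
    min_success_rate: float = 0.8
    min_attempts: int = 5
    stats: dict = field(default_factory=dict)

    def record(self, proxy, ok, latency=0.0):
        """Record one request outcome; latency is counted only for successes."""
        s = self.stats.setdefault(proxy, ProxyStats())
        if ok:
            s.successes += 1
            s.total_latency += latency
        else:
            s.failures += 1

    def flagged(self):
        """Proxies with enough attempts whose success rate fell below the threshold."""
        return [
            proxy for proxy, s in self.stats.items()
            if s.successes + s.failures >= self.min_attempts
            and s.success_rate < self.min_success_rate
        ]
```

Requiring a minimum number of attempts before flagging avoids retiring a proxy after a single unlucky failure.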

NetNut’s premium proxy infrastructure offers real-time analytics, 99.9% uptime, and a global IP pool, ensuring AI scraping operates at maximum efficiency.

By following these best practices, AI companies can optimize data collection, prevent detection, and ensure compliance, making web scraping more efficient and scalable. In the next section, we’ll explore how AI and proxy technologies are evolving to shape the future of web scraping.

The Future of AI in Web Scraping

As AI technology continues to evolve, web scraping is becoming more intelligent, efficient, and scalable. AI-driven automation is no longer limited to simple data extraction—it is now capable of adapting to website changes, bypassing sophisticated anti-bot defenses, and processing vast amounts of structured and unstructured data. The future of AI-powered web scraping will be defined by several key advancements.

AI-Driven Scrapers Becoming More Adaptive

Traditional scrapers often break when websites update their structure, requiring manual adjustments. However, AI-powered scrapers use machine learning models to detect changes in website layouts and automatically adjust their extraction methods. These adaptive systems will reduce the need for constant human intervention, making web scraping more efficient and resilient.

Integration of AI with Natural Language Processing (NLP) and Computer Vision

Future AI scrapers will go beyond text-based data extraction by leveraging NLP for contextual understanding and computer vision for image-based data analysis. This will allow AI models to analyze complex content such as scanned documents, infographics, and videos, providing richer datasets for training machine learning models.

Automated CAPTCHA Solving and Anti-Bot Evasion

Websites are deploying increasingly sophisticated anti-bot mechanisms, including behavioral analysis, fingerprinting, and AI-powered CAPTCHA systems. To counteract these defenses, AI-driven scrapers will integrate automated CAPTCHA-solving techniques, headless browsing, and human-like interaction simulations. By combining proxies, AI-based behavioral mimicry, and anti-detection techniques, AI scrapers will stay ahead of anti-bot measures.

The Rise of Proxy AI for Intelligent IP Management

Proxy management is becoming more intelligent with the integration of AI-driven analytics. Future proxy networks will use real-time machine learning models to:

- Automatically detect which proxies are performing best and route traffic accordingly.

- Identify and avoid IP addresses that are likely to be flagged or blacklisted.

- Optimize request routing for maximum speed and efficiency.
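Performance-aware routing of this kind does not require a complex model to prototype. The sketch below uses an epsilon-greedy rule, a simple heuristic swapped in here for illustration rather than a description of how any proxy network actually works: most requests go to the proxy with the best observed success rate, and a small fraction go to a random proxy so that the estimates stay current. `choose_proxy` and its parameters are hypothetical.

```python
import random


def choose_proxy(success_rates, epsilon=0.1, rng=random):
    """Epsilon-greedy choice: usually the best-scoring proxy, sometimes a random one.

    `success_rates` maps proxy URL -> observed success rate between 0 and 1.
    """
    proxies = list(success_rates)
    if rng.random() < epsilon:
        return rng.choice(proxies)  # explore: give weaker or new proxies a chance
    return max(proxies, key=success_rates.get)  # exploit: best observed success rate
```

Setting `epsilon` to zero always picks the current best proxy, which maximizes short-term success but never notices when a previously weak proxy recovers.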

NetNut is already at the forefront of this innovation, providing AI-optimized proxy solutions that offer high-speed, reliable, and intelligent IP rotation to support the next generation of AI-driven web scraping.

Ethical Considerations and Regulatory Challenges

As web scraping technology advances, so do legal and ethical challenges surrounding data collection. Governments and regulatory bodies are increasing oversight on automated data extraction, and AI companies must ensure they comply with GDPR, CCPA, and data protection laws. Future AI scraping tools will integrate built-in compliance checks and ethical AI guidelines to ensure responsible data collection.

The Future of AI and Proxies: A Powerful Partnership

As AI scrapers become more advanced, proxies will remain an essential component in ensuring scalability, anonymity, and unrestricted data access. Proxy providers like NetNut will continue to innovate, offering faster, more secure, and adaptive proxy solutions tailored to the demands of AI-driven data extraction.

With AI and proxy technology evolving in tandem, the future of web scraping will enable businesses to gather real-time, high-quality data more efficiently than ever before. In the final section, we’ll summarize the key takeaways and explore why NetNut’s proxies are the ideal solution for AI-powered web scraping.

Frequently Asked Questions About Web Scraping for AI Training

To address common questions about AI-driven web scraping, proxies, and best practices, here’s a detailed FAQ section to help AI companies and developers navigate the complexities of large-scale data collection.

1. What is AI-powered web scraping?

AI-powered web scraping refers to the use of machine learning algorithms, natural language processing (NLP), and automation to extract, process, and analyze data from websites. Unlike traditional scrapers, AI-driven scrapers can adapt to website changes, extract structured and unstructured data, and bypass anti-scraping defenses more efficiently.

2. Why is web scraping important for AI model training?

AI models require large-scale, diverse, and high-quality datasets to improve accuracy and adaptability. Web scraping enables AI developers to collect real-world text, images, financial data, e-commerce trends, and social media interactions, making AI systems more effective in natural language processing (NLP), computer vision, predictive analytics, and automation.

3. How do proxies help with AI web scraping?

Proxies act as intermediaries between AI scrapers and target websites, allowing scrapers to:

- Bypass IP bans and rate limits by rotating IP addresses.

- Access geo-restricted content by using region-specific IPs.

- Avoid CAPTCHAs and anti-bot detection by mimicking real user behavior.

- Ensure secure and anonymous data collection, reducing the risk of getting blocked.

NetNut’s high-performance proxy network provides residential, datacenter, mobile, and ISP proxies to support AI-driven web scraping.

4. What are the best proxies for AI web scraping?

The choice of proxy depends on the AI project’s requirements:

- Residential Proxies – Best for stealthy, undetectable data collection.

- Datacenter Proxies – Ideal for high-speed, large-scale scraping.

- Mobile Proxies – Useful for scraping mobile-specific content and ads.

- ISP Proxies – A mix of speed and legitimacy, reducing detection risks.

NetNut’s proxy solutions offer a variety of options tailored for AI web scraping and large-scale data extraction.

5. How can AI-powered scrapers avoid detection?

To prevent websites from blocking AI scrapers, developers should:

- Use rotating proxies to distribute requests across multiple IPs.

- Randomize request timing, headers, and user agents to mimic human behavior.

- Use browser automation tools (e.g., Selenium, Puppeteer) that drive headless browsers to interact with JavaScript-heavy sites.

- Implement AI-driven CAPTCHA solvers to bypass security challenges.