Introduction

Are you looking for an easier way to retrieve relevant data online? You are in the right place!

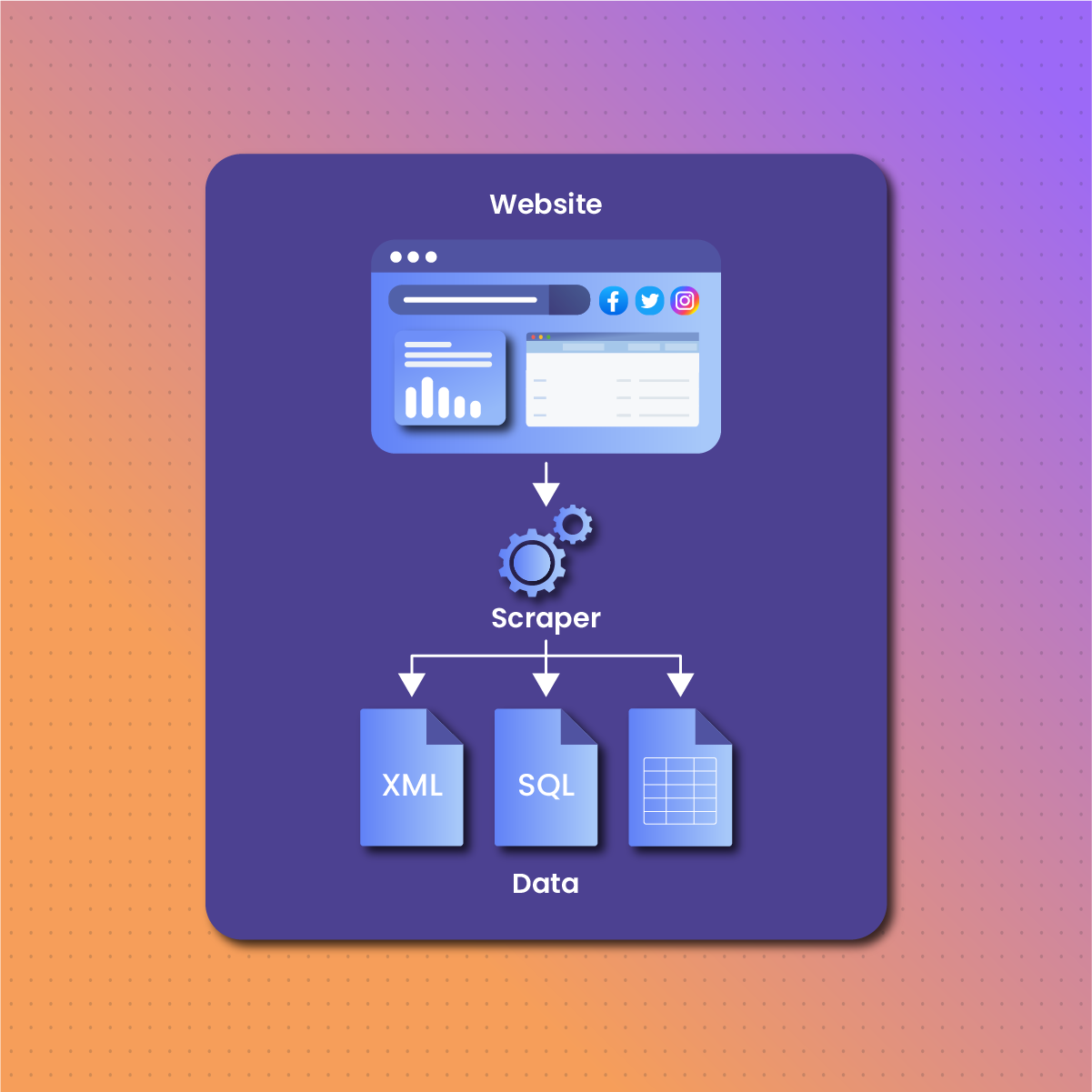

An auto web scraper emerges as a dynamic solution, representing the process of extracting data from websites. Also, it allows for the automated retrieval of information, transcending the limitations of manual data collection.

Manual data retrieval is not only time-consuming but also prone to errors; which limits its effectiveness where speed and accuracy are paramount. To overcome this challenge, an efficient data retrieval process becomes a strategic necessity. Whether for market research, competitive analysis, or staying updated on industry trends, the ability to swiftly and accurately gather information is a cornerstone of success.

This becomes easy to achieve when you use an auto web scraper. This guide examines an auto web scraper, its applications, and how to integrate it with NetNut for data-driven excellence.

About Auto Web Scraper

Auto web scraping, at its core, is the automated process of extracting information from websites. It involves using software or tools to navigate through web pages, gather data, and organize it for analysis. The benefits of an auto web scraper are multifaceted, ranging from efficiency and accuracy to scalability.

- Efficiency: Unlike manual web scraping, which is time-consuming and prone to errors, an auto web scraper executes tasks with precision and speed. It can handle vast amounts of data in a fraction of the time it would take a human, streamlining the information retrieval process.

- Accuracy: An auto web scraper reduces the risk of human error, ensuring that data is collected consistently and without inaccuracies. This accuracy is particularly crucial when dealing with large datasets or when high precision is required for decision-making.

- Scalability: It can easily scale to meet the demands of growing data needs. Whether scraping a single website or multiple sources simultaneously, automation allows for the extraction of vast amounts of data without sacrificing efficiency.

Differentiating Manual vs. Auto Web Scraper

Manual web Scraping

Features of manual web scraping include:

- Manual web scraping relies on human interaction to navigate websites, locate information, and copy it manually.

- It is prone to errors, inconsistencies, and slower data retrieval.

- This technique is suitable for small-scale data extraction tasks but becomes impractical for larger datasets or frequent updates.

Auto Web Scraper

Features of an auto web scraper include:

- This method utilizes software, scripts, or tools to automate the data extraction process.

- It enhances speed, accuracy, and scalability, making it suitable for handling extensive datasets and frequent updates.

- An auto web scraper allows for the scheduling of tasks, ensuring timely and regular data retrieval without constant human intervention.

Best Practices for Successful Use of an Auto Web Scraper

Here are some best practices to optimize the use of an auto web scraper:

Respecting Website Policies and Legal Considerations

Respecting Website Policies and Legal Considerations delves into the crucial aspects of navigating the digital realm responsibly. This ensures that your web data extraction aligns with legal frameworks and the ethical standards set by the online community. These policies are:

- Review and Respect Terms of Service: Before initiating any scraping activity, thoroughly review the target website’s terms of service. Ensure compliance with their policies to avoid legal repercussions.

- Check for Robots.txt: Respect the guidelines outlined in the website’s robots.txt file. This file provides instructions on which parts of the site can or cannot be scraped. Adhering to these guidelines is crucial for ethical usage of an auto web scraper.

- Use Scraping Headers: Configure your scraping script to include appropriate headers, such as a user-agent string, to mimic the behavior of a legitimate user. This contributes to a more ethical and inconspicuous use of an auto web scraper.

Overcoming Challenges and Avoiding Potential Setbacks During Auto Web Scraping

Navigating through the intricacies of data extraction requires a strategic approach to overcome obstacles and ensure a seamless data scraping experience.

- Handle CAPTCHAs Effectively: Implement mechanisms to handle CAPTCHAs gracefully. Utilize CAPTCHA-solving services or introduce delays in your scraping script to allow manual intervention if required.

- Monitor Changes in Website Structure: Regularly check for changes in the target website’s structure. Websites often undergo updates that can impact your web scraping script. Stay vigilant and adapt your script accordingly to avoid disruptions.

- Handle Dynamic Content: Websites with dynamic content loaded through JavaScript may pose challenges. An auto web scraper is a tool that handles dynamic content efficiently or considers headless browser automation for a more accurate extraction.

Tips for Maintaining Anonymity and Avoiding IP Bans

- Rotate IPs Strategically: If you are using rotating IPs, implement a strategic rotation schedule to mimic natural user behavior. This helps avoid detection and reduces the risk of getting banned.

- Use Residential IPs: Opt for residential IPs over datacenter IPs. Residential IPs are less likely to be flagged as suspicious by websites, enhancing anonymity and reducing the chance of IP bans.

- Implement IP Throttling: Introducing IP throttling in an auto web scraper can limit the frequency of requests. This not only helps maintain anonymity but also prevents overloading the target website’s servers.

- Monitor Scraping Activity: Regularly monitor an auto web scraper activity and adjust parameters if necessary. Being proactive in detecting potential issues can prevent IP bans and ensure a smoother scraping process.

Successful use of an auto web scraper goes beyond efficient data retrieval; it requires a holistic approach that considers website policies, potential pitfalls, and strategies for maintaining anonymity.

Integrating NetNut With Auto Web Scraper

NetNut stands as a beacon in the realm of auto web scraping, positioning itself as a premium proxy service that goes beyond conventional offerings. At its core, NetNut provides users with a gateway to the internet through a network of residential IP addresses. This distinction is crucial, as it allows for a level of anonymity and reliability that is unparalleled in the world of web scraping. These distinctions are evident in:

- Residential IP Network: NetNut’s strength lies in its extensive network of residential IPs. Unlike data center proxies, residential IPs mimic real user behavior, making it difficult for websites to detect and block scraping activities.

- Anonymity and Security: NetNut ensures user anonymity by routing requests through residential IPs, providing a secure environment for web scraping. This not only protects the user’s identity but also safeguards against IP bans or restrictions.

Key Features that Make NetNut Ideal for Auto Web Scraper

- High Performance: NetNut boasts a high-performance infrastructure that translates into exceptional speed when using auto web scraper for data extraction. This is a game-changer for users dealing with large datasets or requiring real-time information retrieval.

- Global Reach: With servers strategically located around the world, NetNut offers a global reach. This means users can access geo-restricted content, ensuring that their auto web scraping efforts are not hindered by geographical limitations.

- Rotating IPs: NetNut’s dynamic IP rotation feature adds an extra layer of sophistication. It automatically rotates IPs during scraping sessions, mimicking organic user behavior and reducing the risk of detection.

- Scalability: NetNut is designed to meet the evolving needs of users. Whether you’re using an auto web scraper as a small business with modest requirements or an enterprise dealing with extensive data, NetNut’s scalability ensures that it can cater to a diverse range of users.

How NetNut Enhances Data Extraction Speed and Accuracy For Auto Web Scraper

To a great extent, there are game-changing features that propel NetNut to the forefront, revolutionizing its speed and accuracy for an auto web scraper.

- Reduced Latency: By utilizing a vast network of residential IPs, NetNut minimizes latency, ensuring that data is retrieved swiftly. This is particularly advantageous for users who require real-time data from an auto web scraper or are dealing with time-sensitive information.

- Reliability in Handling Blocks: NetNut’s residential IPs provide a level of reliability in handling blocks. Websites are less likely to flag or block requests, enhancing the overall accuracy and success rate of data extraction processes.

- Optimized for Auto Web Scraper: Unlike general-purpose proxies, NetNut is specifically optimized for web scraping. Its features are tailored to meet the unique demands of automated data extraction, making it a specialized and efficient tool for this purpose.

With the points highlighted above, it becomes evident that NetNut service is not just a means to an end; it’s a catalyst for elevating the activities of an auto web scraper. The strategic integration of NetNut into the auto web scraper enhances not only speed and accuracy but also the reliability and sustainability of data extraction endeavors.

Setting Up Auto Web Scraper using NetNut

Step-by-step Guide to Setting up NetNut for Scraping

Getting started on your journey into using an auto web scraper with NetNut is an exciting endeavor, and a proper setup is key to a seamless experience. Let’s walk through the essential steps:

- Create a Netnut Account:

- Visit the Netnut website and sign up for an account.

- Choose a plan that aligns with your auto web scraping needs.

- Receive API Credentials: Upon registration, you’ll receive API credentials. These credentials are essential for authenticating your requests and ensuring a secure connection with an auto web scraper.

- Integrate NetNut into Your Auto Web Scraper Script: Incorporate NetNut’s API into the script of the auto web scraper. This involves making API calls using the provided credentials to route your requests through NetNut’s residential IP network.

- Configure-Request Headers: Next, adjust the script of the auto web scraper to include appropriate headers to mimic human-like behavior. NetNut’s rotating IPs work optimally when combined with headers that resemble those of a genuine user.

- Handle Rate Limiting: Implementing rate-limiting mechanisms in an auto web scraper can prevent overwhelming the target website. NetNut provides guidelines on optimal request rates to ensure smooth scraping operations.

Configuring NetNut for Auto Web Scraper Optimal Performance

- Choose Proxy Pools Wisely: NetNut offers various proxy pools optimized for different purposes. Select a pool that aligns with your specific auto web scraping requirements for the best performance.

- Understand IP Rotation Options: Familiarize yourself with NetNut’s IP rotation options. Select either static or rotating IPs to integrate with an auto web scraper.

- Monitor Usage and Statistics: Leverage NetNut’s dashboard to monitor your usage and access valuable statistics. This helps in understanding how an auto web scraper works and optimize its performance accordingly.

- Utilize Global Server Locations: If your auto web scraping targets are geographically diverse, take advantage of NetNut’s global server locations. This ensures that the auto web scraper can access data from different regions without any geographical limitations.

Troubleshooting Common Issues in the Setup Process

Highlighted below are common issues that most users encounter while setting up an auto web scraper with NetNut.

- API Authentication Issues: Double-check your API credentials to ensure accurate authentication. Authentication issues can often be the root cause of connection problems.

- Handling CAPTCHAs: In scenarios where CAPTCHAs are encountered, consider implementing CAPTCHA-solving services. NetNut provides recommendations on third-party services compatible with its infrastructure.

- Adjusting Request Rates: In case of blocks or restrictions, revisit the script to adjust the request rates of the auto web scraper. Adjust them to align with the guidelines provided by NetNut to avoid triggering anti-scraping measures.

- Proxy Pool Selection: If you experience performance issues, review your choice of proxy pool. Different pools cater to specific use cases, so selecting the most suitable pool for an auto web scraper is essential.

By following this step-by-step guide, configuring NetNut for optimal performance, and troubleshooting common issues, you lay a solid foundation for a successful use of an auto web scraper. The nuanced understanding of NetNut’s setup intricacies ensures that you harness its full potential in enhancing the speed, accuracy, and reliability of your automated data extraction processes.

Real-world Applications Of Auto Web Scraper

An auto web scraper offers profitable services in various industries. Some of these industries implement an auto web scraper in their daily activities. They include:

Industries Benefiting from Auto Web Scraper

- E-commerce and Retail: Price monitoring, product information aggregation, and competitor analysis empower businesses to stay competitive in dynamic markets.

- Finance and Investment: Gathering real-time financial data, tracking market trends, and sentiment analysis contribute to informed investment decisions and portfolio management.

- Healthcare and Pharmaceuticals: Monitoring medical research, tracking drug prices, and analyzing healthcare trends assist professionals in making data-driven decisions for improved patient outcomes.

- Travel and Hospitality: Scraping for pricing data, customer reviews, and competitor offerings helps travel agencies and hospitality businesses optimize services and pricing strategies.

- Marketing and Advertising: Extracting data on consumer behavior, social media trends, and advertising strategies aids marketers in developing targeted and effective campaigns.

Future Trends in Auto Web Scraper

With the innovation of the auto web scraper, it is safe to say that the future of auto web scraping is safe. An auto web scraper plays a crucial role through:

- Machine Learning Integration: The integration of machine learning algorithms into an auto web scraper enhances pattern recognition, making them more adaptive to evolving website structures.

- Increased Use of APIs: The reliance on APIs for data exchange is likely to grow, providing a more standardized and efficient way to access and retrieve data.

From revolutionizing industries to adapting to emerging technologies, the journey of automated data retrieval is one of constant evolution, with tools like NetNut playing a pivotal role in shaping its trajectory.

Conclusion

Without doubt, the significance of an auto web scraper cannot be overstated. It serves as a linchpin for businesses and individuals seeking to harness the power of data for strategic decision-making. From market intelligence and competitor analysis to real-time insights, the ability to extract and analyze vast amounts of data efficiently is a cornerstone of success in today’s information-driven world.

The ability to extract actionable insights from the vast ocean of online data is within reach, and NetNut is poised to be your ally in this endeavor. Embrace the transformative power of an auto web scraper, not just as a technological tool, but as a strategic enabler for your business or personal ventures.

The journey into the world of data-driven decision-making awaits, and with NetNut the possibilities are boundless. Explore, implement, and navigate the future with confidence in the realm of automated data retrieval.

Frequently Asked Questions And Answers

Is web scraping legal, and how can I ensure compliance with regulations?

Web scraping itself is not illegal, but the legality depends on how it’s done. Always review and adhere to the terms of service of the websites you are scraping. Respect robots.txt guidelines and ensure your scraping activities comply with applicable laws and regulations.

How does NetNut handle IP bans and ensure anonymity with an auto web scraper?

NetNut’s global server locations allow users to access geographically restricted content. By leveraging its extensive residential IP network, NetNut enables scraping from different regions without encountering geographical limitations. For this, NetNut employs a vast network of residential IPs, reducing the likelihood of detection and bans. Its dynamic IP rotation and strategic scheduling mimic natural user behavior, enhancing anonymity and minimizing the risk of IP bans.

What makes NetNut ideal for handling dynamic content on websites?

NetNut’s infrastructure is optimized for web scraping, including sites with dynamic content loaded through JavaScript. Its high-performance design ensures efficient handling of dynamic elements, making it suitable for a wide range of scraping tasks.