Introduction to Scraping Google Flights Data

Google Flights is a handy tool for travelers worldwide. It offers a user-friendly platform to search for flights, compare prices, and explore various travel options. Since its launch in 2011, Google Flights has become popular for its intuitive interface and search capabilities. It gathers flight information from multiple airlines and travel agencies, allowing users to find the best deals and plan their trips efficiently.

Google Flights is convenient for searching flights manually, but scraping its data lets you gather large volumes of flight information efficiently, which opens up many more possibilities. That is why it’s worth understanding how to scrape Google Flights with Python.

In this article, we’ll take a closer look at how to scrape Google Flights with Python. We will cover everything from setting up the development environment to handling challenges and implementing advanced techniques. Let’s set out to unlock the flight data waiting to be discovered!

What Are the Benefits of Scraping Google Flights Data?

Scraping Google Flights data gives you direct access to real-time travel insights that would otherwise require hours of manual research. Whether you’re building a fare comparison tool, analyzing travel trends, or simply trying to track the cheapest flight routes, programmatically collecting flight data opens the door to powerful automation and competitive intelligence.

For businesses in the travel or data analytics industry, having fresh flight data means you can monitor airline pricing behavior, detect shifts in demand, and tailor services to customers in real time. Researchers and developers also benefit by feeding this data into forecasting models or dynamic pricing engines. Ultimately, scraping Google Flights empowers you with a continuous stream of up-to-date flight information—fueling smarter decisions and more efficient solutions.

What to Know About Web Scraping

Web scraping, also known as web harvesting or web data extraction, refers to the process of extracting data from websites. The purpose of web scraping is to gather structured information from web pages, which can then be analyzed, processed, or stored for various purposes.

The key components of web scraping include:

- Data Extraction: This involves extracting specific data elements such as text, images, or links from web pages.

- Data Transformation: Data transformation means converting the extracted data into a structured format, such as JSON or CSV, for further analysis or storage.

- Automation: This means using scripts or programs to retrieve and parse web pages without manual effort, allowing for efficient and scalable data extraction.

Web scraping is commonly used in fields such as:

- Business Intelligence: This involves gathering market data, competitor analysis, and pricing information.

- Research: Collecting data for academic research, sentiment analysis, and trend monitoring.

- E-commerce: Scraping product information, reviews, and pricing data from online retailers.

- Finance: Extracting financial data, stock prices, and market trends from financial websites.

Introduction to Python Libraries for Web Scraping Google Flights

Python offers a rich ecosystem of libraries specifically designed for web scraping. Two popular libraries for web scraping are BeautifulSoup and Requests.

BeautifulSoup

BeautifulSoup is a Python library for parsing HTML and XML documents. It provides a simple and intuitive interface for navigating and searching the parsed tree, making it easy to extract data from web pages. BeautifulSoup’s tag-based approach allows users to target specific elements on a web page using CSS selectors or regular expressions.
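As a quick illustration, the sketch below parses a small, made-up HTML snippet (the markup and class names are hypothetical, not Google Flights’ real structure) and targets elements first with a CSS selector through `select()` and then with a regular expression passed to `find_all()`:

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup standing in for a downloaded page
HTML = """
<ul id="results">
  <li class="flight nonstop"><span class="price">$320</span></li>
  <li class="flight one-stop"><span class="price">$275</span></li>
</ul>
"""

soup = BeautifulSoup(HTML, "html.parser")

# CSS selector: prices inside result items that carry the "nonstop" class
nonstop_prices = [tag.get_text() for tag in soup.select("li.nonstop span.price")]

# Regular expression: <li> elements with a class value ending in "stop"
stop_items = soup.find_all("li", class_=re.compile(r"stop$"))

print(nonstop_prices)   # ['$320']
print(len(stop_items))  # 2
```

CSS selectors tend to read more naturally when you copy a path from the browser’s developer tools, while regular expressions help when class names share a common pattern.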

Requests

Requests is a Python library for making HTTP requests. It simplifies the process of sending HTTP requests and handling responses, making it an essential tool for web scraping. With requests, users can retrieve the HTML content of web pages, which can then be parsed and analyzed using other libraries like BeautifulSoup.

Legal and Ethical Considerations for Web Scraping Google Flights

Although web scraping offers valuable insights and opportunities, it’s essential to consider the legal and ethical implications of scraping data from websites.

Respect Website Terms of Service

Many websites have terms of service or terms of use that explicitly prohibit web scraping or impose restrictions on the use of automated tools. It’s crucial to review and comply with these terms to avoid legal consequences.

Copyright and Intellectual Property

Web content is often protected by copyright laws and intellectual property rights. Scraping copyrighted material without permission may infringe on these rights and lead to legal action.

Rate Limiting and Throttling

Some websites implement rate-limiting or throttling mechanisms to prevent excessive scraping and protect their servers from overload. Therefore, respect these limitations and avoid making too many requests in a short period.
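On the client side, one simple way to avoid making too many requests in a short period is to enforce a minimum gap between them. The sketch below uses only Python’s standard library; the `Throttle` class and the two-second interval are illustrative choices, not limits published by Google:

```python
import time


class Throttle:
    """Keep consecutive calls at least `min_interval` seconds apart."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._last_call = None  # time.monotonic() value of the previous call

    def wait(self):
        """Sleep only as long as needed to honor the minimum interval."""
        if self._last_call is not None:
            elapsed = time.monotonic() - self._last_call
            if elapsed < self.min_interval:
                time.sleep(self.min_interval - elapsed)
        self._last_call = time.monotonic()


# Hypothetical setting: at most one request every two seconds
throttle = Throttle(min_interval=2.0)
```

Call `throttle.wait()` immediately before each request. Using `time.monotonic()` rather than wall-clock time keeps the spacing correct even if the system clock is adjusted.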

Data Privacy

Be mindful of data privacy concerns when scraping personal information or sensitive data from websites. Avoid collecting or using data in ways that may violate privacy laws or regulations.

By understanding and adhering to legal and ethical considerations, web scrapers can mitigate risks and ensure responsible and respectful scraping practices.

Setting Up Your Environment for Web Scraping Google Flights

Before getting into the exciting world of web scraping Google Flights, it’s essential to ensure your environment is properly configured. Follow these steps to set up your environment for successful scraping:

Installing Python

Python is one of the most widely used languages for web scraping and the one this guide uses, so you’ll need it installed on your system before you can start scraping web data. Follow these steps to install Python:

- Download Python: Visit the official Python website and download the latest version of Python for your operating system (Windows, macOS, or Linux).

- Install Python: Run the downloaded installer and follow the on-screen instructions to install Python on your system. Make sure to check the option to add Python to your system PATH during installation.

- Verify Installation: After installation, open a command prompt (Windows) or terminal (macOS/Linux) and type the following command to verify that Python is installed correctly:

python --version

You should see the installed Python version displayed in the output. On some macOS and Linux systems, the command is python3 instead of python.

Installing Required Libraries (BeautifulSoup, requests)

Once Python is installed, you’ll need to install the required libraries for web scraping: BeautifulSoup and requests. Follow these steps to install the libraries using Python’s package manager, pip:

- Open Command Prompt or Terminal: Open a command prompt (Windows) or terminal (macOS/Linux).

- Install BeautifulSoup: Type the following command to install the BeautifulSoup library:

pip install beautifulsoup4

- Install requests: Type the following command to install the requests library:

pip install requests

These commands will download and install the BeautifulSoup and requests libraries along with any dependencies they require.

Setting Up the Development Environment

With Python and the required libraries installed, you’re ready to set up your development environment for web scraping. Here are some recommended steps:

- Choose a Code Editor: Select a code editor or integrated development environment (IDE) for writing and editing your Python scripts. Popular options include Visual Studio Code, PyCharm, and Sublime Text.

- Create a Project Directory: Create a dedicated directory for your web scraping project. Keeping your files and scripts in a separate directory makes the project easier to manage.

- Create a Python Script: Use your chosen code editor to create a new Python script for web scraping. Save the script in your project directory with a meaningful name, such as scrape_google_flights.py.

- Import Libraries: In your Python script, import the BeautifulSoup and requests libraries at the beginning of the file using the following import statements:

from bs4 import BeautifulSoup

import requests

With your environment set up, you’re now ready to start writing Python code to scrape data from Google Flights. In the next section, we’ll explore the process of scraping Google Flights and extracting flight data using Python.

How To Scrape Google Flights

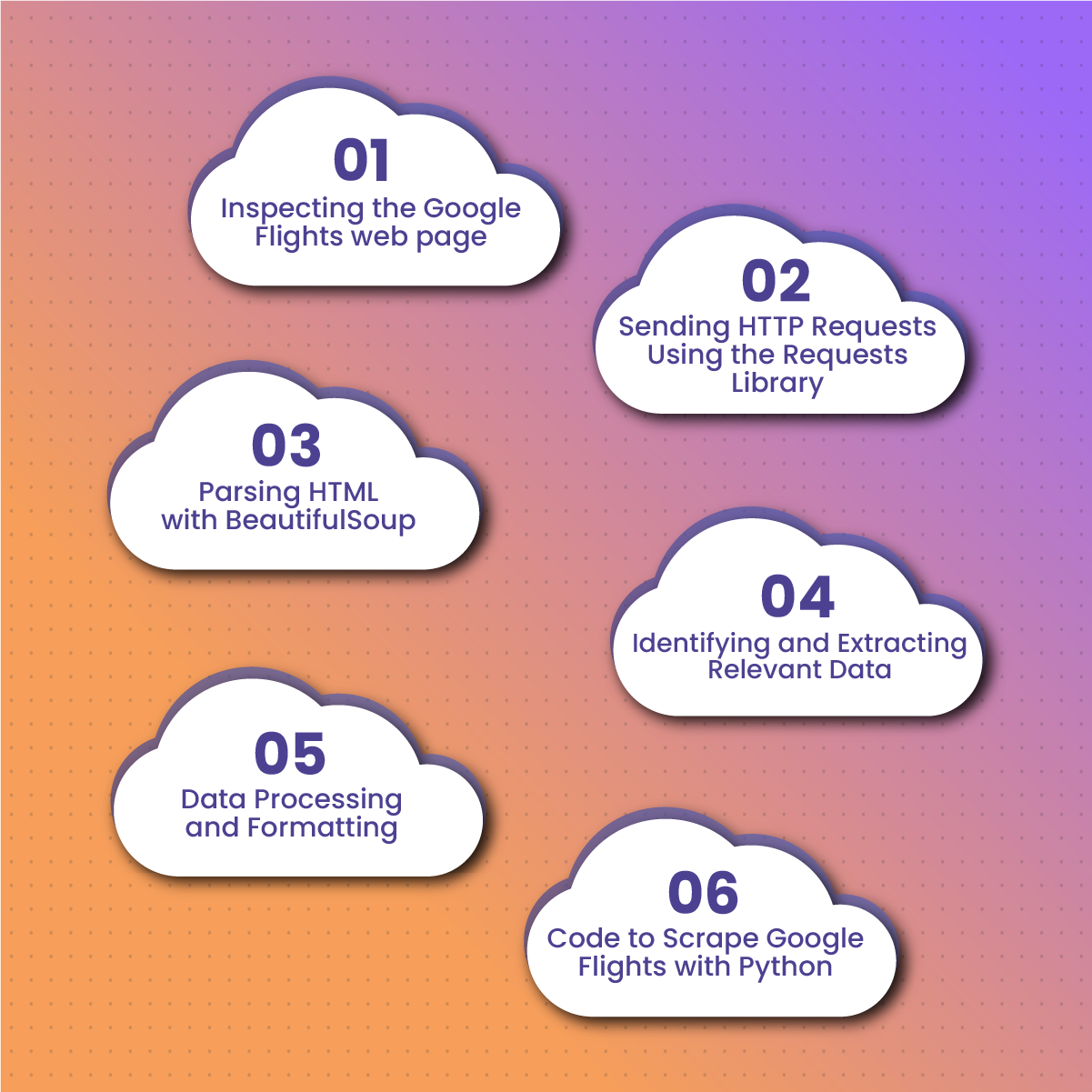

Here, we’ll walk through the steps to scrape data from Google Flights using Python. Follow along to extract valuable flight information for your analysis or automation needs.

Inspecting the Google Flights Web Page

Before scraping data from Google Flights, it’s essential to inspect the structure of the webpage to identify the HTML elements containing the data you want to extract. Follow these steps to inspect the Google Flights webpage:

- Open Google Flights: Visit the Google Flights website in your web browser.

- Open Developer Tools: Right-click on any part of the webpage and select “Inspect” or “Inspect Element” from the context menu. This will open the browser’s Developer Tools panel, allowing you to view the HTML source code of the webpage.

- Explore HTML Structure: Use the Developer Tools panel to explore the HTML structure of the webpage. Look for elements such as <div>, <span>, and <table> that contain the flight data you’re interested in scraping.

- Identify Data Attributes: Pay attention to the attributes of HTML elements, such as class names, IDs, and data attributes, that uniquely identify the data you want to extract. These attributes will be useful when writing your scraping script.

Sending HTTP Requests Using the Requests Library

Once you’ve identified the relevant HTML elements on the Google Flights webpage, you can use the requests library in Python to send HTTP requests and retrieve the HTML content of the webpage. Follow these steps to send an HTTP request to Google Flights:

Import the requests Library

At the beginning of your Python script, import the requests library using the following import statement:

import requests

Send a GET Request

Use the requests.get() function to send a GET request to the Google Flights URL. Replace URL_HERE with the URL of the Google Flights search page you want to scrape:

response = requests.get("URL_HERE")

Check Response Status

Verify that the request was successful by checking the status code of the response. A status code of 200 indicates that the request was successful:

if response.status_code == 200:
    print("Request successful")
else:
    print("Request failed")
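Putting these three steps together, a small helper might look like the sketch below. The `fetch_html` name, the ten-second timeout, and the optional `session` argument are assumptions added for illustration: the timeout keeps a stalled connection from hanging the script, and accepting a session makes it possible to reuse connections or test the function without touching the network.

```python
import requests

# Placeholder header; substitute the User-Agent string you want to send
HEADERS = {"User-Agent": "Mozilla/5.0"}


def fetch_html(url, session=None, timeout=10.0):
    """Return the response body as text, or None if the status is not 200.

    `session` may be a requests.Session (to reuse connections); by default
    the module-level requests.get() is used.
    """
    http = session if session is not None else requests
    response = http.get(url, headers=HEADERS, timeout=timeout)
    if response.status_code == 200:
        print("Request successful")
        return response.text
    print(f"Request failed with status {response.status_code}")
    return None


# Usage (replace URL_HERE with a real search URL):
# html = fetch_html("URL_HERE")
```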

Parsing HTML with BeautifulSoup

After sending an HTTP request and receiving the HTML content of the webpage, you can use the BeautifulSoup library in Python to parse the HTML and extract the relevant data. Follow these steps to parse HTML with BeautifulSoup:

Import the BeautifulSoup Library

At the beginning of your Python script, import the BeautifulSoup library using the following import statement:

from bs4 import BeautifulSoup

Create a BeautifulSoup Object

Use the BeautifulSoup() constructor to create a BeautifulSoup object from the HTML content of the webpage:

soup = BeautifulSoup(response.content, "html.parser")

Find HTML Elements

Use BeautifulSoup’s methods such as find() and find_all() to find HTML elements that contain the data you want to extract. Pass the name of the HTML element and any attributes as arguments to these methods.
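For example, with some made-up markup (the `results` id, the class names, and the `data-airline` attribute are hypothetical), `find()` and `find_all()` can filter by tag name, `id`, CSS class, or any other attribute:

```python
from bs4 import BeautifulSoup

# Hypothetical markup; real Google Flights class names and attributes will differ
HTML = """
<div id="results">
  <div class="result" data-airline="AA"><span class="price">$320</span></div>
  <div class="result" data-airline="UA"><span class="price">$275</span></div>
</div>
"""

soup = BeautifulSoup(HTML, "html.parser")

# find(): the first <div> whose id is "results"
container = soup.find("div", id="results")

# find_all(): every <div> with the CSS class "result" (class_ avoids the Python keyword)
results = container.find_all("div", class_="result")

# attrs={...}: needed for attribute names that are not valid Python identifiers
united = container.find("div", attrs={"data-airline": "UA"})

print(len(results))                              # 2
print(united.find("span", class_="price").text)  # $275
```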

Identifying and Extracting Relevant Data

Once you’ve parsed the HTML with BeautifulSoup, you can identify and extract the relevant data from the webpage. Follow these steps to identify and extract data using BeautifulSoup:

- Inspect HTML Elements: Refer back to the HTML structure of the webpage you inspected earlier to identify the HTML elements containing the data you want to extract.

- Use BeautifulSoup Methods: Use BeautifulSoup’s methods such as find() and find_all() to locate the HTML elements containing the desired data.

- Extract Data: Once you’ve identified the HTML elements, read their contents with BeautifulSoup: the .text attribute or the get_text() method returns an element’s text, and get() returns the value of an attribute such as href.
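The sketch below (again using made-up markup) reads an element’s text with `get_text()` and an attribute with `get()`. It checks for `None` first, because `find()` returns `None` when nothing matches and calling `.text` on `None` raises an `AttributeError`:

```python
from bs4 import BeautifulSoup

# Hypothetical markup with extra whitespace, as often found in real pages
HTML = """
<div class="result">
  <a class="details" href="/flights/abc123">Details</a>
  <span class="price">
     $1,240
  </span>
</div>
"""

soup = BeautifulSoup(HTML, "html.parser")
result = soup.find("div", class_="result")

# get_text(strip=True) drops the surrounding whitespace
price_tag = result.find("span", class_="price")
price_text = price_tag.get_text(strip=True) if price_tag else None

# get() returns an attribute value, or None if the attribute is absent
link = result.find("a", class_="details")
href = link.get("href") if link else None
missing = link.get("data-missing") if link else None

print(price_text)  # $1,240
print(href)        # /flights/abc123
print(missing)     # None
```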

Data Processing and Formatting

After extracting the data from the webpage, you need to process and format it for further analysis or storage. Follow these steps to process and format the extracted data:

- Clean Data: Remove any unnecessary whitespace, HTML tags, or special characters from the extracted data.

- Convert Data Types: Convert the extracted data into appropriate data types, such as integers or floats, if necessary.

- Format Data: Format the extracted data into a structured format such as JSON or CSV for further analysis or storage.
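The three steps above can be sketched with Python’s standard library alone. The raw records, field names, and the helpers `clean_price` and `duration_minutes` are hypothetical, and the price parser assumes US-style formatting (a comma for thousands, a dot for decimals):

```python
import csv
import io
import json
import re

# Hypothetical raw strings, as they might come out of get_text()
raw_flights = [
    {"airline": "  Example Air ", "price": "$1,240", "duration": "5 hr 30 min"},
    {"airline": "Sample Airways", "price": "$98", "duration": "1 hr 5 min"},
]


def clean_price(text):
    """Turn a US-formatted price such as '$1,240' or '$98.50' into a float."""
    number = re.sub(r"[^\d.]", "", text)
    return float(number) if number else None


def duration_minutes(text):
    """Convert a string such as '5 hr 30 min' into a total number of minutes."""
    hours = re.search(r"(\d+)\s*hr", text)
    minutes = re.search(r"(\d+)\s*min", text)
    return (int(hours.group(1)) * 60 if hours else 0) + (int(minutes.group(1)) if minutes else 0)


# Clean whitespace and convert types
cleaned = [
    {
        "airline": f["airline"].strip(),
        "price": clean_price(f["price"]),
        "duration_min": duration_minutes(f["duration"]),
    }
    for f in raw_flights
]

# Format as JSON
as_json = json.dumps(cleaned, indent=2)

# Format as CSV (in memory here; use open("flights.csv", "w", newline="") for a file)
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=["airline", "price", "duration_min"])
writer.writeheader()
writer.writerows(cleaned)
as_csv = buffer.getvalue()
```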

Code to Scrape Google Flights with Python

Use the following Python code as a starting point for extracting flight information from Google Flights:

import requests
from bs4 import BeautifulSoup


def text_of(parent, class_name):
    # Return the stripped text of the first <div> with this class, or None if it is missing
    tag = parent.find('div', class_=class_name)
    return tag.text.strip() if tag else None


def scrape_google_flights(origin, destination, date):
    url = f'https://www.google.com/flights?hl=en#flt={origin}.{destination}.{date}'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.3'
    }
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # raise an exception for 4xx/5xx responses
    soup = BeautifulSoup(response.content, 'html.parser')

    flights = []
    # Extract flight information (these class names may no longer match the live page)
    for result in soup.find_all('div', class_='gws-flights-results__result-item'):
        # Extract specific details (price, departure time, airline, duration) into a dictionary
        flight_details = {
            'price': text_of(result, 'gws-flights-results__price'),
            'departure_time': text_of(result, 'gws-flights-results__times'),
            'airline': text_of(result, 'gws-flights-results__carriers'),
            'duration': text_of(result, 'gws-flights-results__duration'),
        }
        # Append flight details to the list
        flights.append(flight_details)
    return flights

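Finally, test and adjust the code against the structure of Google Flights’ HTML at the time you scrape, because that structure changes over time and the class names above may no longer match the live page. Two further caveats: everything after the # in the URL is a fragment, which is never sent to the server, so the route and date in it do not reach Google; and Google Flights builds its results with JavaScript, so the HTML that requests downloads may not contain the result elements at all. If the function returns an empty list, inspect response.text to see what the server actually sent.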

The Role of NetNut Proxies in Scraping Google Flights with Python

Accessing and extracting data from websites like Google Flights can be a game-changer for industries ranging from travel agencies seeking competitive pricing insights to researchers analyzing flight trends.

However, web scraping Google Flights comes with its challenges, including IP blocking, CAPTCHA challenges, and rate limiting. This is where NetNut proxies step in as a powerful solution to facilitate smooth and uninterrupted scraping operations.

NetNut provides proxies that serve as a comprehensive solution to these challenges. As a leading provider of residential proxies, ISP proxies, and other proxy types, NetNut offers a vast network of IP addresses sourced from real users.

This provides anonymity, reliability, and scalability for scraping operations. Here’s how NetNut proxies play a pivotal role in the web scraping Google Flights process:

- Anonymity and IP Rotation: NetNut proxies route scraping requests through a diverse pool of residential IP addresses. This promotes anonymity and helps avoid detection by target websites. By rotating IP addresses, NetNut proxies reduce the risk of IP blocks and enable continuous scraping operations.

- CAPTCHA Solving: Also, NetNut proxies integrate with CAPTCHA-solving services, automating the process of bypassing CAPTCHA challenges encountered during scraping. This seamless integration saves time and resources, allowing scraping operations to proceed without interruption.

- Bypassing Rate Limiting: In addition, NetNut residential proxies enable scraping at scale by distributing requests across multiple IP addresses, effectively bypassing rate limits and throttling mechanisms imposed by websites. This ensures that scraping operations can handle large volumes of data without encountering restrictions.

- Geographical Diversity: Furthermore, NetNut offers proxies, including mobile proxies, located in many different countries and regions. This allows scrapers to access region-specific content and bypass regional restrictions, which supports global data collection efforts.
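From the scraper’s side, routing traffic through a proxy with requests takes a `proxies` dictionary that maps URL schemes to a proxy URL. The sketch below shows the general pattern; the host, port, and credentials are placeholders rather than NetNut’s real connection details, so substitute the values your proxy provider gives you:

```python
import requests

# Placeholder endpoint: replace host, port, and credentials with your provider's values
PROXY_URL = "http://USERNAME:PASSWORD@proxy.example.com:8080"


def build_proxies(proxy_url):
    """Send both http and https traffic through the same proxy endpoint."""
    return {"http": proxy_url, "https": proxy_url}


def fetch_via_proxy(url, proxy_url=PROXY_URL, timeout=15.0):
    """Send a GET request through the proxy and return the requests.Response."""
    return requests.get(url, proxies=build_proxies(proxy_url), timeout=timeout)


# Usage (hypothetical):
# response = fetch_via_proxy("URL_HERE")
```

Whether a given endpoint rotates IP addresses per request or keeps a sticky session depends on how the provider configures it, not on this code.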

With NetNut proxies at your disposal, you can unlock the full potential of web scraping Google Flights and extract valuable insights to drive innovation across various industries.

Final Thoughts on Scraping Google Flights Data

In conclusion, learning how to scrape Google Flights with Python opens up a wealth of opportunities for travelers, businesses, researchers, and developers alike. With web scraping, you can analyze flight prices and trends, automate fare alerts, integrate flight data with other applications, and derive valuable insights to inform decision-making.

In this guide, we’ve covered the essential steps and techniques for scraping Google Flights responsibly and effectively. Above all, approach web scraping with respect, responsibility, and ethics. By respecting website terms of service, implementing delays and timeouts, logging and handling errors gracefully, and testing and debugging your scraping scripts thoroughly, you can scrape data responsibly while minimizing the risk of being detected or blocked by target websites.

Frequently Asked Questions and Answers

Will scraping Google Flights get me banned or blocked?

Scraping Google Flights excessively or in violation of website policies may result in your IP address being blocked or banned. It’s essential to scrape responsibly, respect website terms of service, and implement techniques like rate limiting, delays, and proxies to avoid detection and mitigate the risk of being banned.
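Beyond fixed delays, it helps to back off when the server signals that you are going too fast. The sketch below retries on HTTP 429 (Too Many Requests) and common 5xx statuses with exponential backoff and logs each attempt. The `fetch` callable, the status set, and the delay values are assumptions for illustration; `fetch` could, for example, wrap `requests.get` and return `(response.status_code, response.text)`.

```python
import logging
import time

logger = logging.getLogger("flight_scraper")

# Statuses treated as temporary (an illustrative choice)
RETRYABLE = {429, 500, 502, 503, 504}


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=2.0, sleep=time.sleep):
    """Call fetch(url) -> (status_code, body), retrying temporary failures.

    The wait doubles after each failed attempt: base_delay, 2 * base_delay, ...
    Returns the body on success, or None when retries run out or the
    status is not retryable.
    """
    for attempt in range(max_attempts):
        status, body = fetch(url)
        if status == 200:
            return body
        if status not in RETRYABLE:
            logger.error("Giving up on %s: status %s", url, status)
            return None
        if attempt < max_attempts - 1:  # no point sleeping after the last attempt
            delay = base_delay * (2 ** attempt)
            logger.warning("Status %s for %s; retrying in %.1fs", status, url, delay)
            sleep(delay)
    logger.error("Giving up on %s after %d attempts", url, max_attempts)
    return None
```

Passing `sleep` as a parameter is a small design choice that lets tests substitute a fake sleep instead of actually waiting.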

How often should I scrape Google Flights for up-to-date data?

The frequency of scraping depends on your specific use case and requirements. Some users scrape Google Flights daily to track price changes and trends, while others may scrape less frequently based on their needs. It’s essential to balance the need for up-to-date data with considerations like server load and website policies.

What are the best practices for storing and managing scraped data from Google Flights?

Best practices for storing and managing scraped data include ensuring data security, privacy compliance, and data integrity. Store scraped data securely, encrypt sensitive information, and implement access controls to protect data from unauthorized access or misuse. Additionally, consider data retention policies and regularly review and update stored data to ensure relevance and accuracy.
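As one illustration of data integrity and retention, the sketch below stores results in SQLite through Python’s built-in `sqlite3` module, using `NOT NULL` constraints, one transaction per batch, a UTC timestamp per scrape, and a cleanup function for old rows. The table layout and function names are hypothetical, and encryption and access control are not shown here:

```python
import sqlite3
from datetime import datetime, timedelta, timezone


def open_store(path="flights.db"):
    """Open the database and create the quotes table if it does not exist yet."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS flight_quotes (
               origin      TEXT NOT NULL,
               destination TEXT NOT NULL,
               depart_date TEXT NOT NULL,
               airline     TEXT NOT NULL,
               price       REAL,
               scraped_at  TEXT NOT NULL
           )"""
    )
    return conn


def _utc_stamp(moment):
    # Fixed-width ISO 8601 UTC timestamps compare correctly as plain strings
    return moment.astimezone(timezone.utc).isoformat(timespec="microseconds")


def save_quotes(conn, origin, destination, depart_date, quotes):
    """Insert one scrape's quotes in a single transaction with a shared timestamp."""
    scraped_at = _utc_stamp(datetime.now(timezone.utc))
    rows = [(origin, destination, depart_date, q["airline"], q["price"], scraped_at) for q in quotes]
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany("INSERT INTO flight_quotes VALUES (?, ?, ?, ?, ?, ?)", rows)


def purge_older_than(conn, days):
    """Simple retention policy: delete rows scraped more than `days` days ago."""
    cutoff = _utc_stamp(datetime.now(timezone.utc) - timedelta(days=days))
    with conn:
        conn.execute("DELETE FROM flight_quotes WHERE scraped_at < ?", (cutoff,))
```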