Introduction

Data-driven decision-making has become mainstream in recent years, and Yelp is a rich source of business information. More than 5 million businesses are listed on Yelp, and the platform attracts over 178 million visitors every month, which makes it a valuable source of data about these businesses.

There are several ways to extract data from this platform. However, manually reviewing all the information on Yelp can be time-consuming and frustrating. Therefore, this guide will focus on how to scrape Yelp data with Python for quick and effective data retrieval.

Let us dive in!

What is Yelp?

Yelp is one of the largest online business directories. Through its website and mobile app, users can search for and review local businesses such as restaurants, bars, shops, professionals, and more. Founded in San Francisco in 2004, Yelp aims to connect people with local businesses.

Learning how to scrape Yelp data is useful for researchers and marketers who want to identify market trends and analyze competitors. Since Yelp holds thousands of reviews and ratings, businesses can scrape them to gauge customer sentiment and learn how to serve customers better.

Understanding the Structure of the Yelp Website

Yelp, like many modern websites, has a structure and design that allow it to cater effectively to its large user base. The core of Yelp’s functionality is its search mechanism, a system designed to return relevant results for each query.

In addition, Yelp uses various front-end technologies to provide an interactive user experience. Understanding these technologies is a crucial part of learning how to scrape Yelp data with Python. They include:

- HTML: HTML defines the content of Yelp pages. In other words, it determines how information is organized such that data returned appears in a structured manner.

- jQuery: This is a lightweight JavaScript library that optimizes activities like AJAX requests, DOM manipulation, and event handling. Yelp uses jQuery for its front-end interactivity to ensure a responsive and simple user interface.

- CSS: Working together with HTML, CSS is responsible for the visual presentation of content on Yelp. It determines visual elements like color, fonts, and layout to keep the design consistent and aesthetically pleasing.

- React: React is another front-end technology used by Yelp. Its component-based architecture allows Yelp to build reusable UI components. This streamlines the development process and ensures a consistent user experience across different parts of the website.

Structure of the Yelp search result page

When you visit Yelp and search for a restaurant, it provides a list of relevant results. Before you dive into how to scrape Yelp data with Python, let us examine the main elements of this page:

Search bar

The search bar is located at the top of the page, and you can enter the business or service you are looking for. For example, you can enter “Mexican restaurants” or “bars,” and it will return a list of businesses that fit the search tag.

Filters

You will find the filters next to the search bar. Filters narrow your search so that the results match what you are looking for. For example, you can search “Mexican restaurants” but filter it to “New York City.” The search result page will then only contain Mexican restaurants located in New York City.

List of results

Once you have added all your filters and selected “Search,” a list of results will be returned on your screen. The businesses displayed will come with a name, image, rating, and short description.

Map

The Yelp search result page also includes a map. Once you have found the ideal choice, you can use the map to locate the business, estimate how far it is from you, and check its opening and closing hours.

Other details

When you click on a business name or image on the search result page, it takes you to another page with additional information. It could include addresses, phone numbers, reviews from customers, and more.

Why Should You Scrape Yelp Data in Python?

We have mentioned in the earlier parts of this guide that Yelp contains a vast amount of data. However, what are the applications of the data to individuals and businesses?

Let us find out!

Competitive analysis

Collecting data from Yelp, especially reviews, allows you to identify competitors’ strengths and weaknesses. This gives you an insight into their performance and strategies. Analysis of reviews and ratings allows you to understand where your business stands in comparison to the competitors.

With access to this data, you can find out the chief complaints of their users and their strongest points of service. Other business metrics you can identify from scraping Yelp data include product quality, reliability, and service. This presents a unique opportunity for you to provide a solution to customers’ unmet needs. You can also learn from the things that customers like about competitors and improve those aspects of your own business.

Lead generation

Another significant aspect of scraping Yelp data is lead generation. The core of marketing is obtaining new leads as well as nurturing warm leads. However, manually sourcing leads may be time-consuming and effort-intensive.

Since Yelp has a massive business directory, you can leverage it to generate quality leads to complement your marketing strategies. Addresses, emails, and phone numbers are some of the information you can scrape from Yelp to build a cold list.

Learning how to scrape Yelp data with Python is a cost-effective option that provides a steady stream of leads. In other words, you can generate a large volume of leads from multiple pages effectively and efficiently.

Sentiment analysis

Customer sentiment is a powerful signal that every business must understand. Learning how to scrape Yelp data with Python allows businesses to gain insight into customer opinions. Extracting this data helps you identify any issues with your business so they can be promptly addressed, and it shows whether customers’ sentiments towards your business are positive or negative.

Sentiment analysis involves collecting data on what people like or dislike about a company’s products and services. This helps the company create products that customers will appreciate.

Market research

Market research allows companies to collect data that provides insight into current trends, market pricing, optimal points of entry, and competitor activity. Learning how to scrape Yelp data is therefore valuable for the research and development efforts of any organization, because it provides information that supports decisions that could alter the direction of operations. Scraping Yelp data with Python gives you access to a large volume of up-to-date data for market analysis.

Improving user experience

Another significant benefit of scraping data from Yelp with Python is that it allows you to improve user experience. People are increasingly willing to leave reviews, which often contain a detailed account of their experience with your business. Yelp therefore provides an avenue for gathering this feedback with ease.

In addition, customers can find the best solution or alternative on Yelp, which generally improves their experience. The core of every business is profit, and this is directly related to customer satisfaction. When your customers are happy with your products and services, they will not hesitate to recommend you to others, either through word of mouth or with high ratings and positive reviews on your website.

Informing marketing strategies

Learning how to scrape Yelp data with Python can inform marketing strategies in many ways. Once you understand what customers want, you can tailor your product development to it. In addition, Yelp data can inform SEO strategies by providing insights into trending niche-specific keywords. This allows businesses to create targeted content that attracts more traffic and ranks higher on search engine result pages.

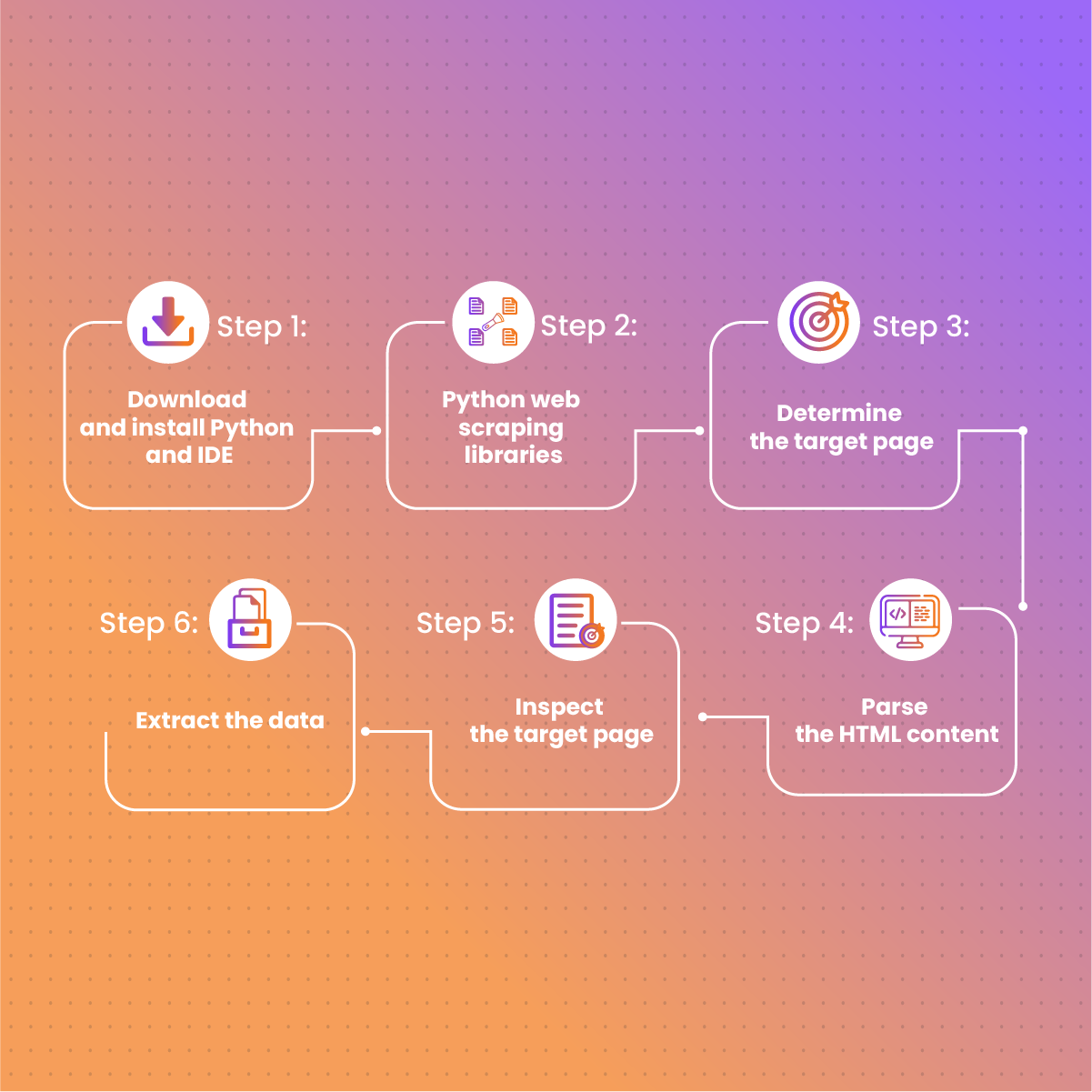

How to Scrape Yelp Data in Python

Python is an excellent language for scraping various websites. This can be attributed to its ease of use, powerful scraping libraries, simple syntax, and active online community. Therefore, in this section, we shall examine how to scrape Yelp data with Python.

Step 1: Download and install Python and IDE

The first step in scraping Yelp data is to download and install Python. Ensure you download the latest version compatible with your device from the official website. In addition, you need an Integrated Development Environment (IDE). An IDE provides an environment in which you can write code, check for errors, and optimize how the code works. Visual Studio Code and PyCharm are among the most popular IDEs for Python.

After installing the software, create a yelp-scraper folder and initialize it as a Python project with a virtual environment via:

mkdir yelp-scraper
cd yelp-scraper
python -m venv env

To activate the virtual environment on macOS or Linux, run:

source env/bin/activate

Alternatively, if you are a Windows user, you can activate it in PowerShell with:

env\Scripts\activate.ps1

Now, add a scraper.py file containing the code print('Hello, World!') to the project folder. This minimal Python script confirms that your Python installation is working.

When you launch the scraper with python scraper.py, the result in the terminal will look like this:

Hello, World!

Now that we are certain that everything works, open the project folder in your preferred Python IDE and get ready to write some Yelp scraping code.

Step 2: Python web scraping libraries

As mentioned earlier, Python has powerful libraries that simplify web scraping. For this guide, we shall focus on the Requests and Beautiful Soup libraries. Requests is one of the most popular Python HTTP client libraries. It handles sending HTTP requests to target websites and receiving their responses.

Beautiful Soup, on the other hand, is an HTML and XML parsing library with extensive features for extracting data from the DOM. Bear in mind that all our coding is happening in the Integrated Development Environment, so you can run the pip command below from its terminal to install the libraries:

pip install beautifulsoup4 requests

At this stage, you can clear the “Hello, World!” code in the scraper.py file. Once that is done, you can import the libraries with the code:

import requests
from bs4 import BeautifulSoup

# scraping logic…

Look out for any error messages from the IDE, as they may affect the performance of the scraper if left unattended. However, you can ignore warnings about unused imports for now, since the imported libraries will be used in the next steps.

Step 3: Determine the target page

Go to Yelp and determine the page from which you want to extract data. For this guide, we shall discuss how to scrape data from the list of Miami’s top-rated Mexican restaurants. Now, you need to assign the URL of the target page to a variable with the following code:

url = 'https://www.yelp.com/search?find_desc=Mexico+Restaurants&find_loc=Miami%2C+FL%2C+United+States'

Next, use requests.get() to make an HTTP GET request to that URL:

page = requests.get(url)
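The query string of the target URL can also be assembled from its parts, which makes it easier to change the search term or the location later. The sketch below assumes only the two parameters visible in the URL above, find_desc and find_loc. The helper name build_search_url is hypothetical and used purely for illustration.

```python
from urllib.parse import urlencode

BASE_URL = 'https://www.yelp.com/search'


def build_search_url(description, location):
    """Build a Yelp search URL from a search term and a location."""
    # urlencode() escapes spaces as '+' and commas as '%2C'
    query = urlencode({'find_desc': description, 'find_loc': location})
    return f'{BASE_URL}?{query}'


url = build_search_url('Mexico Restaurants', 'Miami, FL, United States')
print(url)
```

The resulting string can be passed to requests.get() exactly like the hard-coded URL.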

Step 4: Parse the HTML content

To parse the HTML content, we will use the BeautifulSoup() constructor:

soup = BeautifulSoup(page.text, 'html.parser')

The constructor takes two arguments: the string containing the HTML, and the name of the parser that Beautiful Soup will use (html.parser). Some of the Beautiful Soup methods that we will use include:

- find(): Returns the first HTML element that matches the selector strategy

- find_all(): Returns the list of HTML elements that match the input selector strategy

- select(): Returns the list of HTML elements that match the CSS selector

- select_one(): Returns the first HTML element that matches the CSS selector
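To see how these four methods differ, here is a small sketch that runs them against a made-up HTML snippet. The markup and the demo-card attribute value are assumptions for illustration only and do not reflect the real structure of a Yelp page.

```python
from bs4 import BeautifulSoup

# Made-up HTML used only for illustration; it is not Yelp's real markup.
SAMPLE_HTML = """
<ul>
  <li class="card" data-testid="demo-card"><a href="/biz/one">One</a></li>
  <li class="card" data-testid="demo-card"><a href="/biz/two">Two</a></li>
</ul>
"""

soup = BeautifulSoup(SAMPLE_HTML, 'html.parser')

first_li = soup.find('li')                        # first matching element, or None
all_li = soup.find_all('li')                      # list of every matching element
cards = soup.select('[data-testid="demo-card"]')  # list of every CSS selector match
first_link = soup.select_one('li.card a')         # first CSS selector match, or None

names = [card.get_text(strip=True) for card in cards]
print(names, first_link['href'])
```

Note that find() and select_one() return None when nothing matches, so it is worth checking the result before reading attributes from it.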

Step 5: Inspect the target page

A crucial step in scraping Yelp data with Python is to inspect the target page. Open the page in your browser, right-click anywhere on the screen, and click on Inspect to access the DevTools. One thing that you will notice when examining a Yelp page is that it relies on CSS classes that seem to be randomly generated at build time. Since they might change at any time, you should not base your CSS selectors on them.

However, upon further examination of the DOM, you will realize that the most important elements have distinctive HTML attributes, on which you can base your selector strategy.

Step 6: Extract the data

At this stage, you can extract data from each card on the target page. However, to keep track of the data extracted, you need a structure to store it:

items = []

After inspecting the card HTML element, you can select them all with:

html_item_cards = soup.select('[data-testid="serp-ia-card"]')

The next step is to iterate over them and prepare your script to extract data from each of them, save them in a Python dictionary, and add it to items as shown below:

for html_item_card in html_item_cards:
    item = {}
    # scraping logic…
    items.append(item)

For this guide, we can inspect the image element on the target page and extract its URL with:

image = html_item_card.select_one('[data-lcp-target-id="SCROLLABLE_PHOTO_BOX"] img').attrs['src']

In the above code, we retrieved the HTML element with the select_one() function and accessed its HTML attribute via the attrs member. The same approach retrieves the name of the business and the URL of its detail page:

name = html_item_card.select_one('h3 a').text
url = 'https://www.yelp.com' + html_item_card.select_one('h3 a').attrs['href']

Next, we will extract user review ratings on Yelp:

html_stars_element = html_item_card.select_one('[class^="five-stars"]')
stars = html_stars_element.attrs['aria-label'].replace(' star rating', '')
reviews = html_stars_element.parent.parent.next_sibling.text

In the above code, we use the replace() function to clean the string so that only the rating value remains.
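The cleaned rating is still a string. If you plan to sort or average ratings, it helps to convert it to a number. The sketch below assumes the aria-label follows the pattern implied by the replace() call, for example “4.5 star rating”. The parse_stars helper is hypothetical and not part of the original scraper.

```python
def parse_stars(aria_label):
    """Convert a label such as '4.5 star rating' to a float, or None if it cannot be parsed."""
    cleaned = aria_label.replace(' star rating', '').strip()
    try:
        return float(cleaned)
    except ValueError:
        # the label did not contain a number in the assumed format
        return None


print(parse_stars('4.5 star rating'))
```

Returning None instead of raising an error keeps a single unexpected label from stopping the whole scraping run.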

For the tags, you need to select all the matching elements and iterate over them with the following code:

tags = []
html_tag_elements = html_item_card.select('[class^="priceCategory"] button')
for html_tag_element in html_tag_elements:
    tag = html_tag_element.text
    tags.append(tag)

Similarly, you can use the code below to retrieve the price range on the target Yelp page:

price_range_html = html_item_card.select_one('[class^="priceRange"]')
# the price range info is optional, so fall back to None when it is missing
price_range = None
if price_range_html is not None:
    price_range = price_range_html.text

Another element to extract is the services offered by the restaurant. Again, you need to iterate over every single node with:

services = []
html_service_elements = html_item_card.select('[data-testid="services-actions-component"] p[class^="tagText"]')
for html_service_element in html_service_elements:
    service = html_service_element.text
    services.append(service)

Now, still inside the loop, add the scraped data variables to the Python dictionary, as shown below:

item['name'] = name
item['image'] = image
item['url'] = url
item['stars'] = stars
item['reviews'] = reviews
item['tags'] = tags
item['price_range'] = price_range
item['services'] = services
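Because select_one() returns None when an element is missing, reading .text or .attrs directly can raise an error on cards that lack a field. The sketch below shows one defensive way to structure the per-card extraction. The HTML is made up and only imitates the attributes used in this guide, and the helpers text_or_none and extract_card are hypothetical names.

```python
from bs4 import BeautifulSoup

# Made-up markup that only imitates the attributes used in this guide.
SAMPLE_HTML = """
<div data-testid="serp-ia-card">
  <h3><a href="/biz/taco-place">Taco Place</a></h3>
  <span class="priceRange__abc">$$</span>
</div>
<div data-testid="serp-ia-card">
  <h3><a href="/biz/burrito-bar">Burrito Bar</a></h3>
</div>
"""


def text_or_none(element):
    """Return the stripped text of a tag, or None when the tag is missing."""
    return element.get_text(strip=True) if element is not None else None


def extract_card(card):
    """Collect the fields of one result card into a dictionary."""
    link = card.select_one('h3 a')
    return {
        'name': text_or_none(link),
        'url': 'https://www.yelp.com' + link.attrs['href'] if link is not None else None,
        'price_range': text_or_none(card.select_one('[class^="priceRange"]')),
    }


soup = BeautifulSoup(SAMPLE_HTML, 'html.parser')
items = [extract_card(card) for card in soup.select('[data-testid="serp-ia-card"]')]
print(items)
```

The second card has no price element, so its price_range is stored as None rather than causing a crash.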

Step 7: Save scraped data in CSV format

Saving the data in a readable format is the final yet crucial stage of scraping Yelp data with Python. The code below writes every scraped item to a restaurants.csv file, using the dictionary keys as column headers:

import csv

# …

# column names, matching the keys of each scraped item
headers = ['name', 'image', 'url', 'stars', 'reviews', 'tags', 'price_range', 'services']

# initialize the .csv output file
with open('restaurants.csv', 'w', newline='', encoding='utf-8') as csv_file:
    writer = csv.DictWriter(csv_file, fieldnames=headers, quoting=csv.QUOTE_ALL)
    writer.writeheader()

    # populate the CSV file
    for item in items:
        # transform array fields from "['element1', 'element2', …]"
        # to "element1; element2; …"
        csv_item = {}
        for key, value in item.items():
            if isinstance(value, list):
                csv_item[key] = '; '.join(str(e) for e in value)
            else:
                csv_item[key] = value

        # add a new record
        writer.writerow(csv_item)
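To check the output, you can read the CSV back and split the joined fields into lists again. The sketch below is a minimal round trip under stated assumptions: it uses an in-memory buffer instead of the restaurants.csv file, a shortened column list, and a made-up record.

```python
import csv
import io

headers = ['name', 'tags']  # shortened column list for the example
items = [{'name': 'Taco Place', 'tags': ['Mexican', 'Tacos']}]  # made-up record

buffer = io.StringIO()  # in-memory stand-in for the restaurants.csv file
writer = csv.DictWriter(buffer, fieldnames=headers, quoting=csv.QUOTE_ALL)
writer.writeheader()
for item in items:
    # join list fields with '; ' exactly as the export step does
    writer.writerow({
        key: '; '.join(value) if isinstance(value, list) else value
        for key, value in item.items()
    })

buffer.seek(0)
rows = list(csv.DictReader(buffer))
# split the joined list field back into a Python list
rows[0]['tags'] = rows[0]['tags'].split('; ')
print(rows[0])
```

This only works cleanly if no individual tag contains the “; ” separator, which is worth keeping in mind when choosing the delimiter.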

Limitations Associated with Scraping Yelp Data with Python

This guide will be incomplete if we do not examine common challenges associated with scraping Yelp data with Python. They include:

CAPTCHA

Yelp, like many other big websites, employs CAPTCHAs as one of its anti-bot measures. The purpose of a CAPTCHA is to tell computers apart from humans. Therefore, if the server detects behavior that resembles automated scraping, it triggers the CAPTCHA challenge. Bots are not usually equipped to solve a CAPTCHA, and this could lead to an IP ban.

You can mitigate this challenge by integrating CAPTCHA solvers into your web scraping code. However, this may slow down the process of web data extraction. Using NetNut proxies is a secure and reliable way to reduce the chance of triggering CAPTCHAs.

IP ban

Yelp can ban your IP address if it detects abnormal browsing behavior. Geographical restrictions are another common cause of blocked or limited access. Because search results depend on location, the data available to you can vary with the location of your IP address. This can be quite a challenge when you need to gather data from various locations.

Rotating proxies is a necessity if you want to avoid IP bans when scraping Yelp data with Python.

Rate limiting

Another significant challenge to scraping Yelp data with Python is rate limiting. The website puts a limit on the number of requests you can make within a certain period. This is necessary to avoid overloading the server which could cause it to lag or temporarily become unresponsive. Sending too many requests within seconds can trigger an IP ban which could negatively affect your data extraction efforts. However, you can implement delays between your requests to mimic human browsing behavior.
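One common way to react to rate limiting is to retry with increasingly long pauses. The sketch below assumes the server signals rate limiting with the standard HTTP 429 status code, which this guide does not confirm for Yelp. The function name fetch_with_backoff and its parameters are hypothetical.

```python
import time


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url) and retry with exponentially growing pauses on HTTP 429.

    `fetch` is any callable returning an object with a `status_code`
    attribute, for example `requests.get`.
    """
    for attempt in range(max_attempts):
        response = fetch(url)
        if response.status_code != 429:
            return response
        if attempt < max_attempts - 1:
            # wait base_delay, then twice as long, and so on, before retrying
            sleep(base_delay * 2 ** attempt)
    # every attempt was rate limited; hand back the last response
    return response
```

With the Requests library, a call could look like fetch_with_backoff(requests.get, url). The sleep parameter exists only so the pause can be replaced in tests.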

Dynamic website structure

One of the challenges associated with scraping Yelp data with Python is the dynamic content which is loaded asynchronously via JavaScript. This implies that the data you want to scrape may not be available in the initial HTML code extracted but is loaded at a later time.

In addition, Yelp can change the structure of its website at any time. As a result, your Yelp scraper may be unable to handle the new structure and extract the data you need.

One of the ways to handle this challenge is to use browser automation tools like Selenium or Puppeteer, which can drive a headless browser and render dynamic content. In addition, you may need to regularly update your scraping code to keep up with changes on the website.

Finally, Yelp holds many business details, including contact information, names, reviews, and physical locations. Scraping, organizing, and sorting such a large volume of data can therefore become quite demanding. You need to account for computing power and storage space before you dive into how to scrape Yelp data with Python. In other words, efficient methods of storing, processing, and managing data are a crucial aspect of scraping data from Yelp.

Best Practices for Scraping Yelp Data with Python

Despite the challenges associated with scraping Yelp data with Python, you can still extract the data you need. Here are some practical tips that can help you avoid some of the common pitfalls we discussed above:

Respect the robots.txt file and terms of service

Before you begin to scrape Yelp data with Python, you need to review the robots.txt file. This file indicates which parts of the website automated clients may access. Adhering to these rules is therefore an important part of scraping responsibly.

Apart from the robots.txt file, you can check out Yelp’s Terms of Service. While many may overlook this, it often contains additional information that can be relevant to your data extraction activities.
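Python’s standard library includes urllib.robotparser, which can evaluate robots.txt rules for you. The sketch below parses a made-up rule set so that it runs without network access. The rules, the my-scraper user agent, and the example.com URLs are assumptions and do not describe Yelp’s actual robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Made-up rules for illustration; they are NOT Yelp's actual robots.txt.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS_TXT.splitlines())

# can_fetch() reports whether the given user agent may request the URL
allowed = parser.can_fetch('my-scraper', 'https://www.example.com/search')
blocked = parser.can_fetch('my-scraper', 'https://www.example.com/private/page')
print(allowed, blocked)
```

For a live site, calling parser.set_url() with the address of the robots.txt file and then parser.read() downloads and parses the real rules instead.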

Throttle requests

One of the practices that you must not overlook when learning how to scrape Yelp data with Python is throttling requests. You can achieve this by implementing delays between requests. Python’s time.sleep() function allows you to add such a delay.

Make requests at a slower pace to avoid triggering the anti-bot measures or overloading the server, which can be perceived as a DDoS attack.
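A minimal sketch of throttling with time.sleep() is shown below. The delay range of two to five seconds is an arbitrary assumption, not a limit published by Yelp, and fetch_with_delay is a hypothetical helper that accepts any fetching function such as requests.get.

```python
import random
import time


def fetch_with_delay(urls, fetch, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between calls."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # a randomized pause looks less mechanical than a fixed one
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```

For example, fetch_with_delay(page_urls, requests.get) would request each URL in a hypothetical page_urls list with a pause between consecutive requests.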

Use headers

Websites can easily identify bots when requests lack the headers that a browser normally sends. Therefore, your scraper should send appropriate headers with each request. This helps your scraper mimic a human user, which significantly reduces the chance of IP bans or blocks.

User-agent string

Another practice that can make a difference in your Yelp scraping efforts is setting a realistic User-Agent string. In addition, you need to rotate the user agent and IP address to avoid getting detected. A convenient way to implement this is to use a rotating proxy service that can automatically change your IP address and user agent with each request.
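If you manage the rotation yourself, the idea can be sketched as follows. The user-agent strings are examples only, the proxy endpoints are hypothetical placeholders, and next_request_settings is an illustrative helper. The returned keys match the headers, proxies, and timeout arguments of requests.get().

```python
from itertools import cycle

# Example user-agent strings; keep your own list in line with current browsers.
USER_AGENTS = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
    '(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 '
    '(KHTML, like Gecko) Version/17.0 Safari/605.1.15',
]

# Hypothetical proxy endpoints; replace them with the ones from your provider.
PROXY_URLS = [
    'http://user:pass@proxy1.example.com:8080',
    'http://user:pass@proxy2.example.com:8080',
]

_user_agents = cycle(USER_AGENTS)
_proxy_urls = cycle(PROXY_URLS)


def next_request_settings():
    """Return keyword arguments for requests.get() with a rotated header set and proxy."""
    proxy_url = next(_proxy_urls)
    return {
        'headers': {
            'User-Agent': next(_user_agents),
            'Accept-Language': 'en-US,en;q=0.9',
        },
        'proxies': {'http': proxy_url, 'https': proxy_url},
        'timeout': 10,
    }

# Usage with requests (not executed here):
# page = requests.get(url, **next_request_settings())
```

Each call hands out the next user agent and proxy in round-robin order, so consecutive requests do not share the same fingerprint.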

Be ethical

Ethical data scraping is a crucial aspect of gathering data from the website. Do not scrape personal data without consent, as this may be an infringement on privacy or copyright laws. In addition, be considerate of the impact of your scraping activities on the server’s performance.

Choosing the Best Proxy to Scrape Yelp Data with Python: NetNut

Since Yelp has strong anti-scraping measures, it becomes necessary to use proxies to avoid IP bans. While there are several proxies in the market, choosing a premium provider like NetNut optimizes your scraping activities. Here are the different types of proxies you can use for scraping:

- Residential proxies: These proxies use IP addresses associated with an actual physical location. Since the addresses are provided by Internet service providers (ISPs), they are less likely to be detected as proxies and, in turn, less likely to be blocked by Yelp. This reliability makes them a bit more expensive than other types of proxies.

NetNut rotating residential proxies are your automated proxy solution that offers high-level anonymity. In addition, you can enjoy a fast connection and low latency, which optimizes Yelp data scraping efforts.

- Datacenter proxies: IP addresses from this type of server originate from datacenters. They are generally less expensive. However, they can easily be identified by sophisticated websites that can track IPs from hosting companies. NetNut offers high-performance datacenter proxies that allow you to easily bypass geographic restrictions.

- Mobile proxies: These proxies rely on actual mobile IP addresses, so they route your traffic through mobile devices. Similar to residential proxies, they have a high trust coefficient because they align with devices used by real humans. NetNut Mobile proxies provide security, privacy, and anonymity as you extract data from Yelp.

In summary, NetNut stands out for its competitive and transparent pricing model that guarantees you only pay for data that you receive. We are customer-oriented so we ensure you get 24/7 support via email or live chat.

Conclusion

In this guide, we have discussed how to scrape Yelp data with Python. With a few lines of code, you can build a powerful scraper to extract Yelp data that can be used for competitive analysis, lead generation, sentiment analysis, market research, and more.

You have to remember that the Yelp website undergoes regular updates to its structure and interface to optimize user experience. Therefore, you need to regularly update your Python scraper to accommodate these changes for effective data retrieval. An alternative to the expense of regularly maintaining your scraper is the NetNut Scraper API.

While there are several challenges associated with collecting data from Yelp, including rate limiting and CAPTCHAs, following the best practices suggested in this guide will improve your results.

Finally, you can optimize the Yelp scraper’s performance by integrating it with NetNut proxies. Contact us today to get started!

Frequently Asked Questions

What is the legal status of scraping Yelp data?

The legal status of web scraping remains a gray area in many countries. Therefore, before scraping Yelp, you need to review the Terms of Service and robots.txt file. Subsequently, you can get useful insights into what is permissible on the platform.

Although Yelp’s content is publicly accessible, the way you extract it can be categorized as malicious or ethical. In addition, scraping personal data from users’ reviews or profiles may violate data protection regulations depending on your location. Therefore, you need to understand the platform’s guidelines as well as the laws of the land.

What is Yelp scraping?

Yelp scraping is the process of gathering data from the website. Data collected may include the name of the business, address, phone number, ratings, customer reviews, opening hours, and more. Scraping Yelp is essential for various reasons, such as market research, sentiment analysis, competitor analysis, and so much more.

What data can be extracted from Yelp?

Before you can scrape Yelp data with Python, you need to understand the type of data you can collect. These data points allow you to gather all the necessary information, which makes your data scraping more efficient. Here is a breakdown of the data you can retrieve from Yelp:

- Business name: The name of the business- whether it is a restaurant, bar, or service provider.

- Address: You can also access the physical location(s) of the business including building number, street, and city.

- Contact information: Yelp allows access to a business’s contact information, including phone numbers, emails, or social media accounts as provided by the business.

- Images: Visual representation of a business is a crucial aspect of listing on Yelp. Therefore, you can get access to food images, service images, and others.

- Ratings: This is the average score given by users, often shown as stars, to represent their level of satisfaction.

- Reviews: Apart from ratings, customers can leave reviews, which provides an opportunity for them to leave actual comments regarding their experience with a business. Subsequently, you can gather insight into the reputation of a business from the reviews.

- Opening hours: This information is necessary to give you an overview of which business is open or closed.

- Website URL: Some businesses often include a link to their main business website. If you are curious, you can follow the link to learn more about their operations and the services they offer.

- Price range: You can determine how expensive a business is from its price category, which ranges from $ to $$$$.