Introduction

Node-Fetch is a popular HTTP library that simplifies making asynchronous fetch requests. Unlike the browser’s Fetch API, it runs in the backend of Node.js applications and scripts, which makes it a good option for web scraping. It also adds Node.js-specific functionality, such as support for Node.js HTTP agents. An HTTP agent manages connection pooling, which lets you reuse connections across HTTP requests.

However, it faces the same challenge as other web scraping tools: websites with robust anti-bot measures can easily block it. It is therefore worth learning how to use a proxy with Node-Fetch to mask your IP address and improve your online anonymity.

This guide explores how to use proxies with the Node-Fetch library. You will also learn about the NetNut proxy service and the solutions it offers for your web scraping needs.

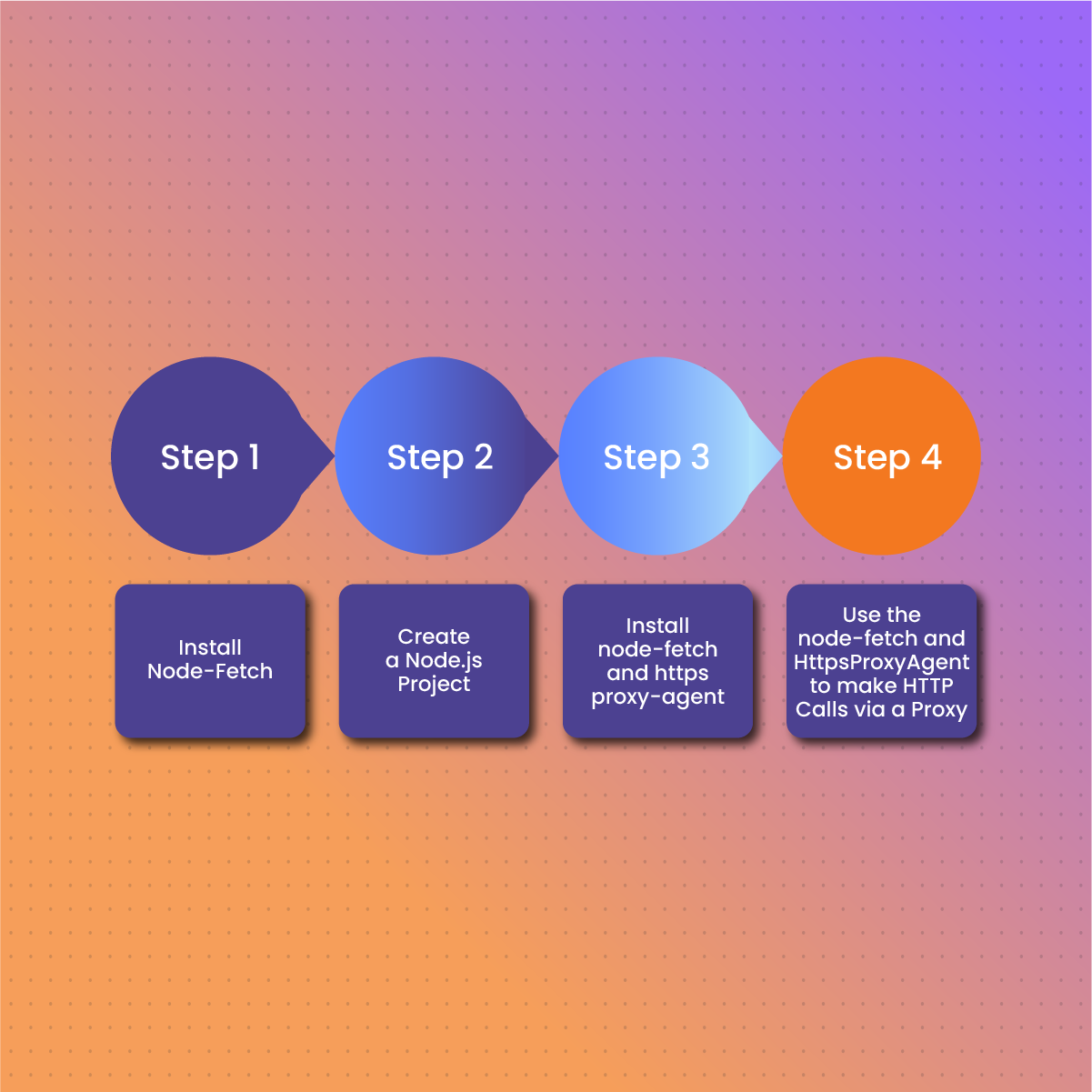

Integrating proxies with Node-Fetch

Step 1: Install Node-Fetch

The first step is to install the latest Node.js version from the official website. Next, you need to install Node-Fetch via the following command in the terminal:

npm i node-fetch

Occasionally, you may encounter an error message when using the Node-fetch library:

Error: Cannot find module 'node-fetch'

This error occurs when module resolution cannot find an installed Node-Fetch module. You can usually resolve it by installing the project’s dependencies with the following command in the project directory:

npm install

Step 2: Create a Node.js Project

Once you have a working installation of Node.js and access to a premium proxy, you can begin the integration. Create a Node.js project and initialize it with npm using the commands below:

mkdir node-fetch-proxy

cd node-fetch-proxy

npm init -y

These commands create a folder called node-fetch-proxy, navigate into it, and create a package.json file that contains some default values.

Step 3: Install node-fetch and https-proxy-agent

The next step involves installing the node-fetch and https-proxy-agent libraries as dependencies for your project:

npm install --save node-fetch https-proxy-agent

The above command installs the libraries to your project’s node_modules folder and updates your package.json file accordingly.

Step 4: Use the node-fetch and HttpsProxyAgent to make HTTP Calls via a Proxy

Once you have installed the node-fetch and https-proxy-agent libraries, you can use the HttpsProxyAgent class from https-proxy-agent with the fetch function from node-fetch to make HTTP calls through a proxy. Create a file called proxy.mjs in your project folder (the .mjs extension tells Node.js to treat the file as an ES module, which the import statements require) and add the following code:

import fetch from 'node-fetch';
import { HttpsProxyAgent } from 'https-proxy-agent';

// Replace <proxy_url> with your actual proxy URL
const agent = new HttpsProxyAgent('<proxy_url>');

// Use fetch with the agent option to make an HTTP request through the proxy
// Replace <target_url> with the URL you want to request
fetch('<target_url>', { agent })
  .then((response) => response.text())
  .then((text) => console.log(text))
  .catch((error) => console.error(error));

Here is a breakdown of how the code works:

- It imports the fetch function from the node-fetch library that provides a browser-compatible Fetch API for Node.js.

- It imports the HttpsProxyAgent class from the https-proxy-agent library, which provides an HTTP agent that routes requests through a proxy.

- It creates an HttpsProxyAgent instance with your proxy URL. Replace <proxy_url> with your actual proxy URL, which should have the following format: https://username:password@host:port. If your provider’s proxy endpoint is plain HTTP, use the http:// scheme instead.

- It calls the fetch function with the agent option to make an HTTP request through the proxy. Replace <target_url> with the URL you want to request, which can be any valid HTTP or HTTPS URL.

- Finally, the code prints the response text and logs any errors that may occur.
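Proxy credentials sometimes contain characters such as @ or : that would break the username:password@host:port format if pasted in directly. The sketch below shows one way to assemble the proxy URL with percent-encoded credentials. The buildProxyUrl helper, the hostname, and the credentials are hypothetical names and placeholders used only for illustration; they are not part of node-fetch or https-proxy-agent.

```javascript
// Hypothetical helper (not part of any library): builds a proxy URL from its parts.
// Credentials are percent-encoded so that characters such as "@" or ":" in a
// password cannot be mistaken for URL delimiters.
function buildProxyUrl({ protocol = 'http', username, password, host, port }) {
  const hasCredentials = username !== undefined && password !== undefined;
  const auth = hasCredentials
    ? `${encodeURIComponent(username)}:${encodeURIComponent(password)}@`
    : '';
  return `${protocol}://${auth}${host}:${port}`;
}

// Placeholder values, not real credentials or a real proxy host.
const proxyUrl = buildProxyUrl({
  username: 'user',
  password: 'p@ss:word',
  host: 'proxy.example.com',
  port: 8080,
});

console.log(proxyUrl);
```

The resulting string could then be passed to new HttpsProxyAgent(...) in place of <proxy_url>.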

At this stage, you can save the file. Run the command below to execute the code and make an HTTP request through the proxy. You should see the response text in the terminal, or an error message if the request fails:

node proxy.mjs

Use a Rotating Proxy with Node-Fetch

Many modern websites have advanced strategies aimed at preventing bot traffic. These strategies often include IP bans, rate limiting, or CAPTCHAs. If you send too many requests from a single IP address, you can trigger these anti-bot measures and get that IP banned.

However, you can bypass these limitations by rotating your proxy IPs. Premium proxy server providers like NetNut offer automatic IP rotation to ensure you enjoy uninterrupted access to target websites. Each request then appears to come from a different address, which makes it less likely to trigger the anti-bot measures.

The first step to using a rotating proxy with Node-Fetch is to get a pool of proxies from your provider. The proxy list is usually supplied as a CSV file, which you can load into your script so that each request goes through a different proxy.
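If your provider supplies the list as CSV, you will need to turn it into an array of proxy objects first. The sketch below assumes a simple two-column layout (host,port) with a header row; the parseProxyCsv helper and the column layout are assumptions for illustration, so adjust them to match the file your provider actually sends.

```javascript
// Assumed CSV layout: a header row followed by one "host,port" pair per line.
// In a real script this text would come from reading the provider's file.
const csvText = [
  'host,port',
  '100.69.108.78,5959',
  '94.29.96.146,9595',
].join('\n');

// Hypothetical helper: converts the CSV text into [{ host, port }, ...].
function parseProxyCsv(text, { hasHeader = true } = {}) {
  const rows = text
    .split(/\r?\n/)
    .map((line) => line.trim())
    .filter((line) => line !== ''); // ignore blank lines
  const dataRows = hasHeader ? rows.slice(1) : rows;
  return dataRows.map((row) => {
    const [host, port] = row.split(',').map((cell) => cell.trim());
    return { host, port: Number(port) };
  });
}

const parsedProxies = parseProxyCsv(csvText);
console.log(parsedProxies);
```

The resulting array has the same shape as a hand-written proxy list of host and port pairs.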

Step 1: Import the necessary dependencies and define the Node-Fetch proxy list

import fetch from 'node-fetch';
import { HttpsProxyAgent } from 'https-proxy-agent';

const proxyList = [
  { host: '100.69.108.78', port: 5959 },
  { host: '94.29.96.146', port: 9595 },
  { host: '172.204.58.155', port: 5959 },
];

Step 2: Define a function that takes your proxy list array and target URL as arguments. You can use a for...of loop that constructs a proxy URL, creates a proxy agent, and makes a request through each proxy in the array. The code below prints the response body returned by the target URL for each proxy.

async function RotateProxy(proxyList, targetUrl) {
  for (const proxy of proxyList) {
    try {
      const proxyUrl = `https://${proxy.host}:${proxy.port}`;
      const proxyAgent = new HttpsProxyAgent(proxyUrl);
      const response = await fetch(targetUrl, { agent: proxyAgent });
      const html = await response.text();
      console.log(html);
    } catch (error) {
      console.error(error);
    }
  }
}

Step 3: Define the target URL and call the function

const targetUrl = 'https://ident.me/ip';

await RotateProxy(proxyList, targetUrl);
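The loop above sends the request through every proxy in turn. If you would rather pick a single proxy at random for each request, a small selection helper is enough. The sketch below is one possible approach; pickRandomProxy is a hypothetical name, and the random source is passed in as a parameter only so the selection can be made predictable when testing.

```javascript
// Same shape as the proxy list from Step 1.
const proxies = [
  { host: '100.69.108.78', port: 5959 },
  { host: '94.29.96.146', port: 9595 },
  { host: '172.204.58.155', port: 5959 },
];

// Hypothetical helper: returns one proxy chosen at random.
// `random` must return a number in [0, 1), like Math.random.
function pickRandomProxy(list, random = Math.random) {
  if (list.length === 0) {
    throw new Error('Proxy list is empty');
  }
  const index = Math.floor(random() * list.length);
  return list[index];
}

const chosen = pickRandomProxy(proxies);
const chosenUrl = `https://${chosen.host}:${chosen.port}`;
console.log(chosenUrl);
```

The chosen URL could then be passed to new HttpsProxyAgent(...) exactly as in the loop, once per request.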

How to use Node-Fetch Proxy User Agents

Setting a realistic User-Agent header helps your scraper avoid being flagged as a bot. You can set it through the options argument of your Node-Fetch call. For comparison, a simple fetch request without options looks like this:

const scrape = await fetch('https://www.example.com/');

To send custom headers, pass the options argument after the URL, as shown below:

const scrape = await fetch('https://www.example.com/', { headers: { /** request headers here **/ } });

Apart from the user agent, you can also add other headers. Below is an example of using a Node-Fetch proxy with a custom user agent:

import fetch from 'node-fetch';
import { HttpsProxyAgent } from 'https-proxy-agent';

(async () => {
  // Replace the placeholder with your own proxy URL and credentials
  const proxyData = new HttpsProxyAgent('https://username:password@hostname:port');
  const options = {
    agent: proxyData,
    headers: {
      'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15'
    }
  };
  const scrape = await fetch('https://www.example.com/', options);
  const html = await scrape.text();
  console.log(html);
})();
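A single fixed user agent can itself become a fingerprint when it is reused for many requests. As a rough sketch, you could keep a small pool of User-Agent strings and pick one per request. The buildRequestHeaders helper is a hypothetical name, and the strings in the pool are illustrative examples only; in practice you would maintain your own up-to-date list.

```javascript
// Illustrative pool of User-Agent strings (examples only).
const userAgents = [
  'Mozilla/5.0 (Macintosh; Intel Mac OS X 13_0_1) AppleWebKit/605.1.15 (KHTML, like Gecko) Version/16.1 Safari/605.1.15',
  'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
];

// Hypothetical helper: builds a headers object with a randomly chosen
// User-Agent plus one additional header as an example.
// `random` must return a number in [0, 1), like Math.random.
function buildRequestHeaders(agents, random = Math.random) {
  const userAgent = agents[Math.floor(random() * agents.length)];
  return {
    'User-Agent': userAgent,
    'Accept-Language': 'en-US,en;q=0.9',
  };
}

const headers = buildRequestHeaders(userAgents);
console.log(headers);
```

The returned object can be used as the headers property of the options argument, next to the agent property.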

Benefits of using Proxies with Node-Fetch

Whatever your reason for using proxies with Node-Fetch, there are several benefits you can enjoy. Here we focus on the main advantages for web scraping:

Anonymity

Proxies work by masking your IP address, which makes it seem like the request is coming from another location. This makes it harder for websites to identify and block your scraper, so you can maintain anonymity when browsing online. Since proxies hide your actual IP address, the website cannot determine your actual location, which allows you to bypass geo-restrictions and access data from other parts of the world. Proxies have also become useful to data scientists and machine learning teams that need to collect unbiased data.

Security

Another significant advantage for individuals and organizations is security. As technology advances, cybercrime rises with it, and attackers often attempt to intercept network traffic. Because a proxy hides your IP address from the sites you visit, it makes it harder for third parties to obtain identifying information about your device. If you spend a lot of time online and want to protect your data, proxies are a useful layer of protection.

Optimized scalability and concurrency

Another benefit of using proxies is improved scalability and concurrency. Because proxies let you distribute your scraping requests across multiple IP addresses simultaneously, you can scale up your data collection and gather data faster. You should still pace your requests so that you do not overload the target website’s server. Overloading a server can cause it to lag, which degrades the experience for real users.
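As a minimal sketch of what distributing requests across IP addresses can look like, the helper below pairs each target URL with a proxy in round-robin order. The assignProxies name and the example URLs are hypothetical; the proxies reuse the host and port shape from the rotation example earlier in this guide.

```javascript
// Hypothetical helper: pairs each URL with a proxy in round-robin order,
// so consecutive requests leave from different IP addresses.
function assignProxies(urls, proxies) {
  if (proxies.length === 0) {
    throw new Error('Proxy list is empty');
  }
  return urls.map((url, index) => ({
    url,
    proxy: proxies[index % proxies.length],
  }));
}

// Placeholder inputs for illustration.
const pool = [
  { host: '100.69.108.78', port: 5959 },
  { host: '94.29.96.146', port: 9595 },
];
const pages = [
  'https://www.example.com/page/1',
  'https://www.example.com/page/2',
  'https://www.example.com/page/3',
];

const jobs = assignProxies(pages, pool);
console.log(jobs);
```

Each job could then be fetched through its own proxy agent, for example in small concurrent batches with Promise.all, keeping the batch size low enough to stay gentle on the target server.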

Optimized data accuracy

Using proxies improves data accuracy because you can access data from various locations without geo-restrictions limiting what you see. It also increases the chances of successful web scraping. Rotating proxy IPs help you avoid per-IP rate limits so that you can collect the data you need to make informed decisions.

Market research

Using proxies with Node-Fetch also supports market research, which covers competitor analysis, price monitoring, brand monitoring, and sentiment analysis. This data helps businesses gain an advantage in a highly competitive market.

Choosing the Best Proxies for Node-Fetch: NetNut

IP blocks and website blocks are common challenges on the internet. It is therefore crucial to choose a reputable proxy provider with an extensive network of IPs in countries around the world.

NetNut is an industry-leading provider with an extensive network of over 85 million rotating residential proxies in 200 countries and over 1 million mobile IPs in over 100 countries. It offers various proxy solutions to cater to your web scraping needs, including:

Residential proxies

These IP addresses are associated with actual physical locations. They are more expensive, but they have a lower chance of being blocked. Residential proxies can be either static or rotating, depending on your use case. You can opt for static residential proxies if you need to maintain the same IP for a particular session. For web scraping, however, you need rotating residential proxies. NetNut offers automated IP rotation to ensure the highest level of anonymity.

Datacenter proxies

Datacenter IP addresses originate from data centers. They are commonly used because they are less expensive. They also offer high speed and scalability, which makes them an excellent choice for gamers who require fast download speeds. However, there is a high chance of getting blocked if the target website has a sophisticated system that can identify IPs from a data center. With NetNut datacenter proxies, you can enjoy high speed and performance that optimize your scraping efforts.

Mobile Proxies

NetNut mobile proxies use actual mobile IP addresses linked to an ISP. Since your traffic is routed through these IPs, the chances of being blocked are significantly reduced. Mobile proxies can be more expensive than datacenter IPs because they have a higher confidence rating. In addition, these proxies come with smart features that help your scraper bypass CAPTCHAs.

Why NetNut Proxy Servers?

Here are some reasons why NetNut proxies are the best choice if you prioritize security, privacy, and anonymity:

- NetNut proxies guarantee high-level anonymity, which is necessary to avoid IP blocks and prevent cyber theft.

- If you want fast connection speed and low latency, then NetNut is the perfect solution. In addition, NetNut guarantees 99% uptime for uninterrupted scraping activities.

- NetNut stands out because it has an extensive network that covers a wide range of locations. In addition, the dashboard is user-friendly, so you can manage your proxies with ease.

- NetNut proxies make it straightforward to bypass geographical restrictions.

- NetNut also offers excellent customer support. You can get prompt support via email or live chat on the website.

Conclusion

This guide has examined how to use proxies with Node-Fetch, a lightweight and powerful library for Node.js. The library allows developers to make HTTP requests from the server side, and it is a fast and efficient option when you need to make frequent requests to a target website. It can be used with a proxy to improve the security and privacy of web requests.

We also examined the NetNut proxy service, which offers several proxy solutions for your web scraping needs. Using proxies with Node-Fetch allows you to bypass network restrictions, mask your actual IP address, and access blocked websites. Premium proxies are worth considering if you need advanced features such as IP rotation and geo-targeting.

An alternative for web data collection and accessing blocked content is to use NetNut Scraper API or Website Unblocker. Feel free to contact us to get started today!

Frequently Asked Questions

What are the applications of Node-Fetch proxy?

The main application of Node-Fetch is making asynchronous HTTP requests from Node.js to load content. On the server side, a common use is web scraping: Node-Fetch sends a request to a web server and receives the response, and your script can then parse the HTML, extract the data, and store it in a database. This makes it possible to build a web scraper that stores data from a website in a database.

In addition, Node-Fetch can make HTTP requests to external APIs and receive the data in JSON format. This data can be processed and stored in a database, which makes it easy to build a web application that pulls data from external APIs.

What is a Node-fetch proxy?

A Node-Fetch proxy is a proxy server that your Node-Fetch requests are routed through, typically by passing a proxy agent in the fetch options. The proxy forwards each request to the target server and relays the response back, so the target sees the proxy’s IP address rather than yours. It can be used for requests to both HTTP and HTTPS URLs.

What are the best practices for using Node-Fetch proxy for web scraping?

Here are some of the best practices to optimize web scraping via Node-Fetch proxy:

- Rotate your proxy IP to avoid being detected by websites with advanced anti-bot features.

- Randomize the timing and order of your request to mimic the behavior of humans.

- Randomize user agent headers to simulate requests coming from regular browsers.

- Use browser automation tools like Selenium and Puppeteer alongside Node-Fetch for pages that need a real browser, and to support other automation tasks.

- Implement error-handling techniques to deal with any possible challenges like network failures.

- Frequently update your scraping script to accommodate changes in the website structure.
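Two of the practices above, randomized timing and error handling, can be combined in a retry helper that waits a random, growing delay between attempts. The following is a minimal sketch under stated assumptions: backoffDelayMs, sleep, and withRetries are hypothetical names, the default delay values are arbitrary, and task stands for any async function of your own, such as one that calls fetch through a proxy agent.

```javascript
// Delay before retry number `attempt` (0-based): the ceiling doubles on each
// attempt up to maxMs, and the actual delay is a random value below that
// ceiling so that requests do not follow a fixed rhythm.
function backoffDelayMs(attempt, { baseMs = 500, maxMs = 10000, random = Math.random } = {}) {
  const ceiling = Math.min(maxMs, baseMs * 2 ** attempt);
  return Math.floor(random() * ceiling);
}

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Runs `task` and retries it when it throws, up to `retries` extra attempts.
// The last error is rethrown if every attempt fails.
async function withRetries(task, { retries = 3, ...delayOptions } = {}) {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await task(attempt);
    } catch (error) {
      if (attempt >= retries) {
        throw error;
      }
      await sleep(backoffDelayMs(attempt, delayOptions));
    }
  }
}

// Example delays with a fixed random source, for illustration.
console.log(backoffDelayMs(0, { random: () => 0.5 }));
console.log(backoffDelayMs(3, { random: () => 0.5 }));
```

A call such as withRetries(() => fetch(targetUrl, { agent })) would then retry network failures with randomized pauses; whether to also retry on specific HTTP status codes is a design choice left to your script.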