Web scraping has become an essential tool for businesses and researchers to gather data from the internet. It involves extracting data from websites and storing it in a structured format for analysis or other purposes. One of the most popular formats for storing web scraped data is JSON (JavaScript Object Notation). This article serves as a comprehensive guide to understanding the process of converting data from HTML to JSON, covering various aspects of web scraping, data structuring, and best practices.

Web Scraping and Data Extraction from HTML

Websites are built using HTML (HyperText Markup Language), which structures the content so that a web browser can display it. To scrape data from a website, you need to understand its HTML structure and identify the elements that contain the desired information. This usually means inspecting the HTML source code and working out how the data is organized within the HTML tags.

One way to extract information from a web page’s HTML is to use string methods. For instance, you can use .find() to search through the text of the HTML for specific tags and extract the text within those tags. Another approach is to use libraries that provide more advanced functionalities for parsing and extracting data from HTML.
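As a minimal sketch of the string-method approach, the snippet below uses `str.find()` to pull the text out of a `<title>` tag. The sample HTML and the helper name `text_between` are made up for illustration, and the approach only holds up on simple, predictable markup.

```python
from typing import Optional

# Hypothetical page content used only for illustration.
html = "<html><head><title>Example Store</title></head><body></body></html>"


def text_between(source: str, start_tag: str, end_tag: str) -> Optional[str]:
    """Return the text between the first start_tag and the next end_tag."""
    start = source.find(start_tag)
    if start == -1:
        return None  # opening tag not present
    start += len(start_tag)
    end = source.find(end_tag, start)
    if end == -1:
        return None  # closing tag not present
    return source[start:end].strip()


title = text_between(html, "<title>", "</title>")
print(title)
```

Because this matches tags as literal text, it breaks as soon as a tag carries attributes or different casing, which is the main reason to move to a real parser.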

For example, the mlscraper library allows automatic extraction of structured data from HTML without manual specification of nodes or selectors. This can be particularly useful when dealing with complex or irregular HTML structures.

In addition to understanding the HTML structure, it’s important to be familiar with HTTP methods like GET and POST, which are used to request data from a web server. GET is used for retrieving data, while POST is used for submitting data. These methods are essential for interacting with web servers and retrieving the HTML content of web pages.
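The difference between the two methods can be sketched with Python’s standard library. The URL and parameters below are placeholders, and the requests are only constructed, not sent, so the example runs without network access.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical endpoint and parameters, used only for illustration.
BASE_URL = "https://example.com/search"
params = {"q": "laptops", "page": 1}

# GET: the parameters travel in the URL's query string.
get_request = Request(BASE_URL + "?" + urlencode(params))

# POST: the parameters travel in the request body, encoded as bytes.
post_request = Request(BASE_URL, data=urlencode(params).encode("utf-8"), method="POST")

print(get_request.get_method(), get_request.full_url)
print(post_request.get_method(), post_request.data)

# Sending either one would look like: urllib.request.urlopen(get_request)
```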

Customizing Data Extraction with HTML Agility Pack

When extracting data from HTML, you often need to customize the extraction process to capture specific elements or attributes. In Html Agility Pack, for instance, each node exposes properties such as InnerText (the node’s text with the markup stripped) and InnerHtml (the markup inside the node), so you can choose which form of the data to extract.

For more complex extraction tasks, especially when dealing with malformed or intricate HTML structures, Html Agility Pack (a .NET library) can be a valuable tool. It parses HTML into a document tree that can be queried with XPath and modified, allowing for more precise data extraction. It does not execute JavaScript, so content that a page generates dynamically in the browser has to be rendered first, for example with a headless browser.

Libraries and Tools for Web Scraping

Python

Python is a popular language for web scraping due to its extensive libraries and ease of use. Here’s a table summarizing some of the most popular Python libraries for web scraping:

| Library | Description | Strengths | Weaknesses |
|---|---|---|---|
| Beautiful Soup | Parses HTML and XML documents | Easy to use, good for beginners | Not as efficient for large-scale projects |
| Scrapy | A complete web scraping framework | Powerful, efficient, handles complex projects | Steeper learning curve |
| Selenium | Controls a web browser | Useful for dynamic websites, handles JavaScript | Resource-intensive |
| Requests | Simplifies HTTP requests | Easy to use, versatile | Primarily for fetching content, not parsing |
| Urllib3 | Powerful HTTP client | Thread-safe, supports connection pooling | More complex than Requests |
| Lxml | Processes XML and HTML documents | Fast, supports XPath and CSS selectors | Can be less user-friendly |
| MechanicalSoup | Automates website interactions | Simulates human browsing behavior | Can be slower than other libraries |

When choosing a Python web scraping library, consider the complexity of your project, the type of website you are scraping, and your experience with Python. For example, Beautiful Soup is a good choice for beginners due to its simplicity, while Scrapy is more suited for complex projects that require handling large amounts of data and complex website structures.

JavaScript

JavaScript, typically run on Node.js, is another popular language for web scraping, especially for sites that render their content client-side. Here’s a table summarizing some of the most popular JavaScript libraries for web scraping:

| Library | Description | Strengths | Weaknesses |
|---|---|---|---|
| Cheerio | Parses HTML | Fast, flexible, jQuery-like syntax | Doesn’t handle dynamic content |
| Puppeteer | Controls headless Chrome/Chromium | Powerful, handles JavaScript, good for dynamic websites | Resource-intensive |
| Playwright | Controls headless Chromium, Firefox, and WebKit | Versatile, supports multiple browsers, modern features | Steeper learning curve |
| Selenium | Controls web browsers | Supports multiple browsers, WebDriver API | Can be complex to set up |

When choosing a JavaScript web scraping library, consider the type of website you are scraping, your experience with JavaScript, and the specific features you need. For instance, Cheerio is lightweight and fast but doesn’t handle dynamic content, whereas Puppeteer is more powerful but resource-intensive.

Headless Browsers in Web Scraping

Headless browsers are browsers without a graphical user interface, used for automating tasks and running web scrapers without the need for a visual display. They are becoming increasingly popular in web scraping as a way to deal with JavaScript-rendered content and scraper blocking.

Many websites use complex front-end frameworks that generate data on demand, either through background requests or through JavaScript functions. Accessing this data requires a JavaScript execution environment, which headless browsers provide. Libraries like Playwright and Selenium are commonly used to control headless browsers for web scraping in Python.

JSON Data Format and Structure

JSON (JavaScript Object Notation) is a lightweight data-interchange format that is easy for humans to read and write and easy for machines to parse and generate. It is a text-based format that uses a specific syntax to represent data as key-value pairs, arrays, and nested objects.

JSON is widely used for data transmission on the web, especially in web APIs (Application Programming Interfaces). It is a popular choice for storing web scraped data because it is human-readable, machine-readable, lightweight, and flexible.

JSON is language-independent, meaning it can be used with various programming languages. It was originally derived from JavaScript, but many modern programming languages include code to generate and parse JSON-format data.

JSON Structure

JSON data is structured using the following elements:

- Objects: Objects are enclosed in curly braces {} and contain a collection of key-value pairs. Keys are strings enclosed in straight double quotes ("), and values can be any valid JSON data type: strings, numbers, booleans, null, arrays, or other objects.

- Arrays: Arrays are enclosed in square brackets [] and contain an ordered list of values. Values in an array can be of different data types.

- Key-value pairs: Key-value pairs are the basic building blocks of JSON objects. Each key-value pair consists of a key (a string) and a value, separated by a colon (:).

Here’s an example of a simple JSON object:

```json
{
  "name": "John Doe",
  "age": 30,
  "occupation": "Software Engineer"
}
```

This JSON object represents a person with the name “John Doe”, age 30, and occupation “Software Engineer”.
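To show how such an object looks once a program has read it, here is a small sketch that parses the same data with Python’s built-in `json` module. The variable names are illustrative only.

```python
import json

# The example object as a JSON string (JSON requires straight double quotes).
raw = '{"name": "John Doe", "age": 30, "occupation": "Software Engineer"}'

person = json.loads(raw)  # a JSON object becomes a Python dict

print(type(person).__name__)
print(person["name"], person["age"])
```

The JSON number `30` arrives as a Python `int`, not a string, so it can be used in arithmetic without conversion.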

JSON Web Signatures (JWS)

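JSON itself is plain text and offers no protection against tampering, but it can be combined with cryptographic signatures. A JSON Web Signature (JWS) is a JSON-based structure that carries a payload together with a signature or message authentication code, computed with either a shared secret or a public/private key pair. The signature lets the recipient check the integrity and authenticity of the data; it does not encrypt the payload, so the content remains readable to anyone who receives it.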
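The following is a rough sketch of the shared-secret case, assuming the HS256 algorithm (HMAC with SHA-256) and the compact `header.payload.signature` layout. The function names, the payload, and the secret are invented for illustration, and the verifier deliberately skips checks a real one needs (such as inspecting the `alg` header), so a maintained library is the safer choice in practice.

```python
import base64
import hashlib
import hmac
import json


def b64url(data: bytes) -> str:
    """Base64url-encode without padding, as the compact form requires."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def sign_hs256(payload: dict, secret: bytes) -> str:
    """Return header.payload.signature for the given payload."""
    header = {"alg": "HS256"}
    signing_input = ".".join(
        b64url(json.dumps(part, separators=(",", ":")).encode("utf-8"))
        for part in (header, payload)
    )
    signature = hmac.new(secret, signing_input.encode("utf-8"), hashlib.sha256).digest()
    return signing_input + "." + b64url(signature)


def verify_hs256(token: str, secret: bytes) -> bool:
    """Recompute the signature and compare it in constant time."""
    try:
        signing_input, signature = token.rsplit(".", 1)
    except ValueError:
        return False  # no "." separator at all
    expected = hmac.new(secret, signing_input.encode("utf-8"), hashlib.sha256).digest()
    return hmac.compare_digest(b64url(expected).encode("utf-8"), signature.encode("utf-8"))


# Hypothetical payload and secret.
token = sign_hs256({"source": "example.com", "items": 2}, b"demo-secret")
print(verify_hs256(token, b"demo-secret"))
print(verify_hs256(token, b"wrong-secret"))
```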

JSON Formatting and Debugging Tools

Tools like JSON Formatter and JSONLint can be helpful for validating and formatting JSON data. These tools help to ensure that the JSON data is correctly formatted and adheres to the JSON specification, making it easier to read and debug.
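The same kind of check can be scripted. The sketch below uses Python’s built-in `json` module to validate a string and report where parsing failed, then pretty-prints valid data. The helper name `check_json` is hypothetical.

```python
import json


def check_json(text: str):
    """Return (True, parsed value) for valid JSON, else (False, error message)."""
    try:
        return True, json.loads(text)
    except json.JSONDecodeError as exc:
        return False, f"line {exc.lineno}, column {exc.colno}: {exc.msg}"


valid = check_json('{"name": "John Doe", "age": 30}')
invalid = check_json("{'name': 'John Doe'}")  # single quotes are not valid JSON

print(valid)
print(invalid)

# Formatting: indent and sort keys for readability.
print(json.dumps(valid[1], indent=2, sort_keys=True))
```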

How To Convert HTML to JSON

Converting HTML data to JSON involves extracting the relevant information from the HTML document and structuring it into a JSON format. This process typically involves the following steps:

- Fetching the HTML content: Use a library like requests in Python or fetch in JavaScript to retrieve the HTML content of the web page you want to scrape. For example, in Python, you can use the requests library to send an HTTP GET request to the URL of the webpage you want to scrape, which will respond with HTML content.

- Parsing the HTML: Use an HTML parsing library like Beautiful Soup in Python or Cheerio in JavaScript to parse the HTML content and create a structured representation of the document.

- Extracting data: Use CSS selectors or XPath expressions to locate the specific elements in the HTML that contain the data you want to extract.

- Structuring the data: Organize the extracted data into a JSON object or array, using appropriate keys and values to represent the information.

- Outputting the JSON: Use the json.dumps() function in Python or JSON.stringify() function in JavaScript to convert the structured data into a JSON string.
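The steps above can be sketched end to end. To keep the example self-contained, it skips the network fetch and parses an inline HTML string, and it swaps in the standard library’s `html.parser` where a project would more likely use Beautiful Soup. The markup, class names, and `ProductParser` are all assumptions made for illustration.

```python
import json
from html.parser import HTMLParser

# Stand-in for HTML that would normally be fetched over HTTP.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Laptop</span> <span class="price">999.99</span></li>
  <li class="product"><span class="name">Mouse</span> <span class="price">19.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects the name and price spans inside each product list item."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._current = None  # product dict currently being filled
        self._field = None    # "name" or "price" while inside that span

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "li" and css_class == "product":
            self._current = {}
        elif tag == "span" and self._current is not None and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field is not None:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "li" and self._current is not None:
            self.products.append(self._current)
            self._current = None


# Parse and extract.
parser = ProductParser()
parser.feed(SAMPLE_HTML)

# Structure: convert the price text to a number.
products = [{"name": p["name"], "price": float(p["price"])} for p in parser.products]

# Output as a JSON string.
json_text = json.dumps(products, indent=2)
print(json_text)
```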

Libraries and Tools for JSON Conversion

There are various libraries and tools available to help with the HTML to JSON conversion process.

- Python: The html-to-json library in Python provides a convenient way to convert HTML to JSON. Another option is the aspose.cells library, which allows you to load an HTML file and save it as a JSON file.

- JavaScript: In JavaScript, you can use a library like html-to-json, or the browser’s built-in DOMParser API to parse HTML into a DOM tree that your code then walks and serializes as JSON. You can also convert selected HTML to JSON using JavaScript and store it in a database for later use.

It’s important to note that HTML and JSON are two distinct formats that serve different purposes. HTML structures web page content for display, whereas JSON represents data for storage and transmission. As a result, there is no single canonical way to “convert” HTML into JSON. Generic converters mirror the tag tree, but for scraping you normally extract the data you need from the HTML and then represent it in JSON.
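To make the distinction concrete, here is one possible shape for a generic conversion: a sketch that mirrors the element tree as nested JSON objects. The node layout (`tag`, `attrs`, `children`) is an assumption chosen for this example, not the format any particular library produces, and the list of void tags is deliberately short.

```python
import json
from html.parser import HTMLParser

# Elements that never have a closing tag (partial list, for illustration).
VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}


class TreeBuilder(HTMLParser):
    """Builds a nested dict that mirrors the HTML element tree."""

    def __init__(self):
        super().__init__()
        self.root = {"tag": "document", "attrs": {}, "children": []}
        self._stack = [self.root]

    def handle_starttag(self, tag, attrs):
        node = {"tag": tag, "attrs": dict(attrs), "children": []}
        self._stack[-1]["children"].append(node)
        if tag not in VOID_TAGS:
            self._stack.append(node)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag, tolerating unclosed elements.
        for index in range(len(self._stack) - 1, 0, -1):
            if self._stack[index]["tag"] == tag:
                del self._stack[index:]
                break

    def handle_data(self, data):
        text = data.strip()
        if text:
            self._stack[-1]["children"].append(text)


builder = TreeBuilder()
builder.feed('<div id="main"><p>Hello <b>world</b></p><br></div>')
tree_json = json.dumps(builder.root, indent=2)
print(tree_json)
```

The result is valid JSON, but it describes markup rather than data, which is why targeted extraction is usually the more useful route for scraping.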

Example: Converting HTML to JSON in Python

Here’s an example of how to convert HTML source code to a JSON file on the local system using Python:

```python
import json

# Load the HTML file
with open("sample.html", mode="r", encoding="utf-8") as html_file:
    html_content = html_file.read()

# Parse the HTML content (using a library like Beautiful Soup)
# …

# Extract the desired data
# …

# Structure the data into a JSON object
data = {
    "title": "Example Title",
    "content": "Example Content"
}

# Output the JSON data to a file
with open("output.json", "w", encoding="utf-8") as json_file:
    json.dump(data, json_file, indent=4)
```

This example demonstrates the basic steps involved in converting HTML to JSON in Python. You would need to replace the placeholder comments with the actual code for parsing the HTML and extracting the data based on the specific structure of the HTML file.

Choosing the Right Tools and Techniques

The choice of tools and techniques for converting HTML to JSON depends on the specific website and data being scraped. For dynamic websites that load content using JavaScript, you might need to use tools like Selenium or Puppeteer to handle JavaScript rendering. For static websites, libraries like Beautiful Soup or Cheerio might be sufficient.

Best Practices for Structuring Data into JSON

When structuring extracted data into JSON, it’s essential to follow best practices to ensure that the data is well-organized, consistent, and easy to use. Here are some key recommendations:

- Use meaningful keys: Choose descriptive and informative keys that accurately represent the data being stored. For example, instead of using generic keys like “field1” or “value”, use more specific keys like “productName” or “price”.

- Maintain consistent structure: Use a consistent structure for similar data elements across the JSON document. This makes it easier to parse and process the data.

- Use arrays for lists of data: When extracting multiple data points of the same type, use JSON arrays to store them. This keeps the data organized and makes it easier to iterate over the elements.

- Avoid unnecessary nesting: Keep the JSON structure as flat as possible to avoid excessive nesting, which can make the data harder to read and process.

- Validate the JSON: Use a JSON validator to ensure that the generated JSON conforms to the JSON specification. This helps to identify and fix any syntax errors or inconsistencies.
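A short sketch of these recommendations in practice: descriptive keys, the same shape for every record, an array for the list of products, shallow nesting, and a round-trip parse as a basic validity check. The rows and key names are made up for illustration.

```python
import json

# Hypothetical rows as a scraper might produce them: (name, price, tags).
scraped_rows = [
    ("Laptop", "999.99", ["electronics", "computers"]),
    ("Mouse", "19.50", ["electronics"]),
]

# Meaningful keys and an identical structure for every record.
products = [
    {"productName": name, "price": float(price), "tags": tags}
    for name, price, tags in scraped_rows
]

# One top-level object holding an array; no deeper nesting than needed.
document = {"products": products}
json_text = json.dumps(document, indent=2)

# Parsing the output back confirms it is syntactically valid JSON.
round_trip = json.loads(json_text)
print(json_text)
```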

JSON Syntax Rules

JSON has specific syntax rules that need to be followed to ensure valid JSON data. These rules include:

- Objects: Objects are enclosed in curly braces {}.

- Arrays: Arrays are enclosed in square brackets [].

- Key-value pairs: Data is represented in key-value pairs, where the key is a string enclosed in straight double quotes ("), and the value can be any valid JSON data type.

- Data types: JSON supports various data types, including strings, numbers, booleans, null, objects, and arrays.
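When the JSON is generated from Python, the `json` module applies these rules for you. The sketch below, with an invented record, shows how each Python type maps to a JSON type.

```python
import json

record = {
    "title": "Example",   # str   -> string
    "price": 19.5,        # float -> number
    "stock": 3,           # int   -> number
    "available": True,    # bool  -> true / false
    "discount": None,     # None  -> null
    "tags": ["a", "b"],   # list  -> array
    "seller": {"id": 7},  # dict  -> object
}

encoded = json.dumps(record)
print(encoded)
# {"title": "Example", "price": 19.5, "stock": 3, "available": true, "discount": null, "tags": ["a", "b"], "seller": {"id": 7}}
```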

Documenting JSON File Structure

It’s important to document the structure of your JSON data to ensure maintainability and clarity. You can use schemas and tools like JSON Schema to define and validate the structure of your JSON data. This helps to ensure that the data is consistent and conforms to the expected format.
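As an illustration of what a schema expresses, the sketch below describes a product record using JSON Schema keywords (`type`, `required`, `properties`) and checks records against it with a small hand-written function. That function understands only those three keywords and is not a JSON Schema implementation; a real project would more likely rely on a dedicated validator such as the third-party `jsonschema` package. The schema contents are hypothetical.

```python
# Hypothetical schema for one scraped product record.
PRODUCT_SCHEMA = {
    "type": "object",
    "required": ["productName", "price"],
    "properties": {
        "productName": {"type": "string"},
        "price": {"type": "number"},
        "tags": {"type": "array"},
    },
}

# Python types accepted for each JSON type name used above.
JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "boolean": bool,
    "array": list,
    "object": dict,
}


def find_problems(record, schema):
    """Check required keys and property types only (a tiny subset of JSON Schema)."""
    if not isinstance(record, dict):
        return ["record is not an object"]
    problems = []
    for key in schema.get("required", []):
        if key not in record:
            problems.append(f"missing required key: {key}")
    for key, rule in schema.get("properties", {}).items():
        if key not in record:
            continue
        value = record[key]
        # bool is a subclass of int in Python, so reject it for "number".
        bool_as_number = rule["type"] == "number" and isinstance(value, bool)
        if bool_as_number or not isinstance(value, JSON_TYPES[rule["type"]]):
            problems.append(f"{key} should be of type {rule['type']}")
    return problems


print(find_problems({"productName": "Laptop", "price": 999.99}, PRODUCT_SCHEMA))
print(find_problems({"productName": "Laptop", "price": "999.99"}, PRODUCT_SCHEMA))
```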

Designing JSON Schema for Intended Use

When designing a JSON schema, consider the intended use of the data. Different schema designs might be appropriate for different purposes, such as data visualization, database storage, or API integration. For example, a schema for data visualization might focus on hierarchical relationships, while a schema for database storage might prioritize data normalization.

Challenges and Issues in Web Scraping and Data Structuring

Web scraping and data structuring can present various challenges and issues that you need to be aware of. Some common challenges include:

- Website structure changes: Websites frequently update their structure and design, which can break your web scraping scripts. You need to be prepared to adapt your scripts to handle these changes.

- Anti-scraping measures: Some websites implement anti-scraping measures to prevent bots from extracting data. These measures can include IP blocking, CAPTCHAs, and rate limiting. IP-based blocking, where the website blocks requests from specific IP addresses, is a common method used to prevent web scraping. You may need to use techniques like rotating proxies or CAPTCHA solving services to overcome these challenges.

- Dynamic content: Many websites use JavaScript to load content dynamically, which can make it difficult to scrape the data using traditional methods. You may need to use tools like Selenium or Puppeteer to handle JavaScript rendering.

- Data quality issues: Web scraped data can sometimes be inconsistent or incomplete. You need to implement data validation and cleaning processes to ensure the accuracy and reliability of the data. This includes implementing data governance practices, which involve establishing policies and procedures to manage data throughout its lifecycle.
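For the data quality point, a cleaning pass might look like the following sketch. It trims whitespace, pulls a number out of free-form price text, and drops records that are missing a name or have no parseable price. The sample records and the `clean` helper are invented for this example, and the regular expression assumes prices written with a dot as the decimal separator.

```python
import re

# Hypothetical raw records with typical scraping defects.
raw_records = [
    {"productName": "  Laptop ", "price": "$1,299.00"},
    {"productName": "Mouse", "price": "19.50"},
    {"productName": "", "price": "5.00"},          # missing name
    {"productName": "Cable", "price": "call us"},  # no numeric price
]


def clean(record):
    """Return a normalized record, or None if it cannot be repaired."""
    name = record.get("productName", "").strip()
    # Digits with optional thousands separators and an optional decimal part.
    match = re.search(r"\d[\d,]*(?:\.\d+)?", record.get("price", ""))
    if not name or match is None:
        return None
    return {"productName": name, "price": float(match.group().replace(",", ""))}


cleaned = [item for item in map(clean, raw_records) if item is not None]
print(cleaned)
```

Whether to drop or flag unusable records is a design decision; logging the rejected rows is often worth it so that upstream markup changes do not go unnoticed.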

Common Challenges and Issues in Data Structuring

In addition to the challenges in web scraping, there are also common challenges and issues specifically related to data structuring. These challenges include:

- Understanding complex concepts: Data structures and algorithms can involve complex concepts that require careful study and understanding.

- Avoiding memorization: It’s important to understand the underlying principles of data structures and algorithms rather than simply memorizing solutions.

- Practicing consistently: Mastering data structures and algorithms requires consistent practice and application of the concepts.

- Overcoming the fear of math and logic: Data structures and algorithms often involve mathematical and logical thinking, which can be intimidating for some learners.

Ethical and Legal Considerations

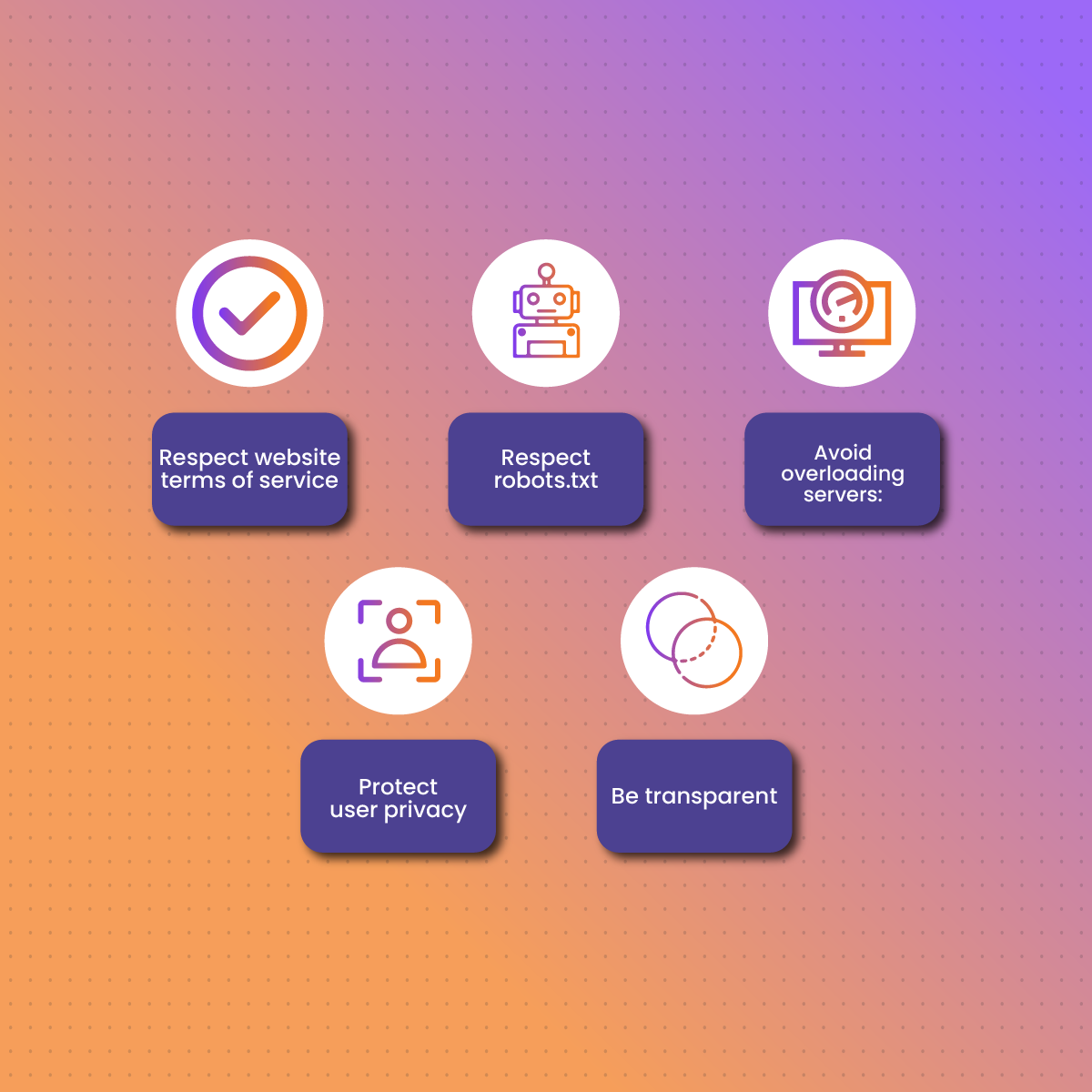

Web scraping raises ethical and legal considerations that you need to be mindful of. Here are some key points to consider:

- Respect website terms of service: Always check the website’s terms of service to ensure that web scraping is allowed. Some websites explicitly prohibit scraping, and violating their terms can have legal consequences.

- Respect robots.txt: The robots.txt file provides instructions to web crawlers about which parts of the website should not be accessed. Always check the robots.txt file and adhere to its directives.

- Avoid overloading servers: When scraping a website, be mindful of the load you are putting on their servers. Avoid making too many requests too quickly, which can disrupt the website’s performance or lead to your IP address being blocked.

- Protect user privacy: If you are scraping personal data, ensure that you comply with relevant privacy laws and regulations. Obtain consent where necessary and handle the data responsibly.

- Be transparent: If you are using web scraped data for research or commercial purposes, be transparent about your data sources and methods.
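Two of these points, honoring robots.txt and pacing requests, can be sketched with Python’s standard `urllib.robotparser`. The robots.txt content, user agent name, and URLs below are invented, and the rules are parsed from a string so the example runs offline; a real scraper would load the site’s actual file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally loaded from the site itself.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # placeholder name

rules = RobotFileParser()
rules.parse(ROBOTS_TXT.splitlines())

urls = [
    "https://example.com/products",
    "https://example.com/private/orders",
]

# Keep only the URLs the rules allow this user agent to fetch.
allowed = [url for url in urls if rules.can_fetch(USER_AGENT, url)]

# Use the site's requested delay, falling back to one second if none is given.
delay = rules.crawl_delay(USER_AGENT) or 1

print(allowed)
print(delay)
# A scraper would then call time.sleep(delay) between consecutive requests.
```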

Legal Implications of Web Scraping

Scraping publicly available data is lawful in many circumstances, but the answer depends on the jurisdiction, the kind of data, and how it is accessed and used. Key legal implications to consider include:

- Selling web scraped data: The legality of selling web scraped data depends on the specific data and the terms of service of the website. It’s crucial to ensure that you have the right to sell the data and that you are not violating any copyright or other legal restrictions.

- Scraping Google search results: Scraping Google search results can be legally problematic, as it may violate Google’s terms of service.

- Copyright considerations: Scraping copyrighted content without permission can be a violation of copyright law. However, fair use provisions, such as those in United States law, allow limited use of copyrighted material for purposes such as criticism, commentary, news reporting, teaching, scholarship, or research.

- Relevant laws: In the United States, laws like the Computer Fraud and Abuse Act (CFAA) and the Digital Millennium Copyright Act (DMCA) are relevant to web scraping activities. The CFAA prohibits unauthorized access to computer systems, while the DMCA addresses copyright infringement in the digital environment.

Consequences of Unethical Web Scraping

Unethical web scraping can have various consequences, including:

- Legal action: Websites can take legal action against scrapers who violate their terms of service or infringe on their intellectual property rights.

- Reputational damage: Unethical scraping practices can damage your reputation and credibility.

- Website blacklisting: Websites can blacklist IP addresses associated with unethical scraping, preventing you from accessing their content.

Final Thoughts on HTML to JSON Conversion

Converting data from HTML to JSON is a crucial process in web scraping. By understanding the principles of web scraping, the JSON data format, and best practices for data structuring, you can effectively extract and organize data from websites for various purposes.

JSON offers several advantages for storing web scraped data, including its human-readable format, compatibility with various programming languages, lightweight nature, and flexibility in representing different data structures. When structuring data into JSON, it’s essential to follow best practices such as using meaningful keys, maintaining a consistent structure, and validating the JSON data.

Web scraping and data structuring can present challenges, including website structure changes, anti-scraping measures, dynamic content, and data quality issues. It’s crucial to be aware of these challenges and implement appropriate strategies to overcome them.

Finally, ethical and legal considerations are paramount in web scraping. Always respect website terms of service, robots.txt directives, and user privacy. Avoid overloading servers and be transparent about your data sources and methods. Unethical web scraping can lead to legal consequences, reputational damage, and website blacklisting. By adhering to ethical practices and legal guidelines, you can ensure responsible and compliant data collection.