Discover how machine learning for web scraping revolutionizes data extraction and analysis, with practical examples to enhance your projects.

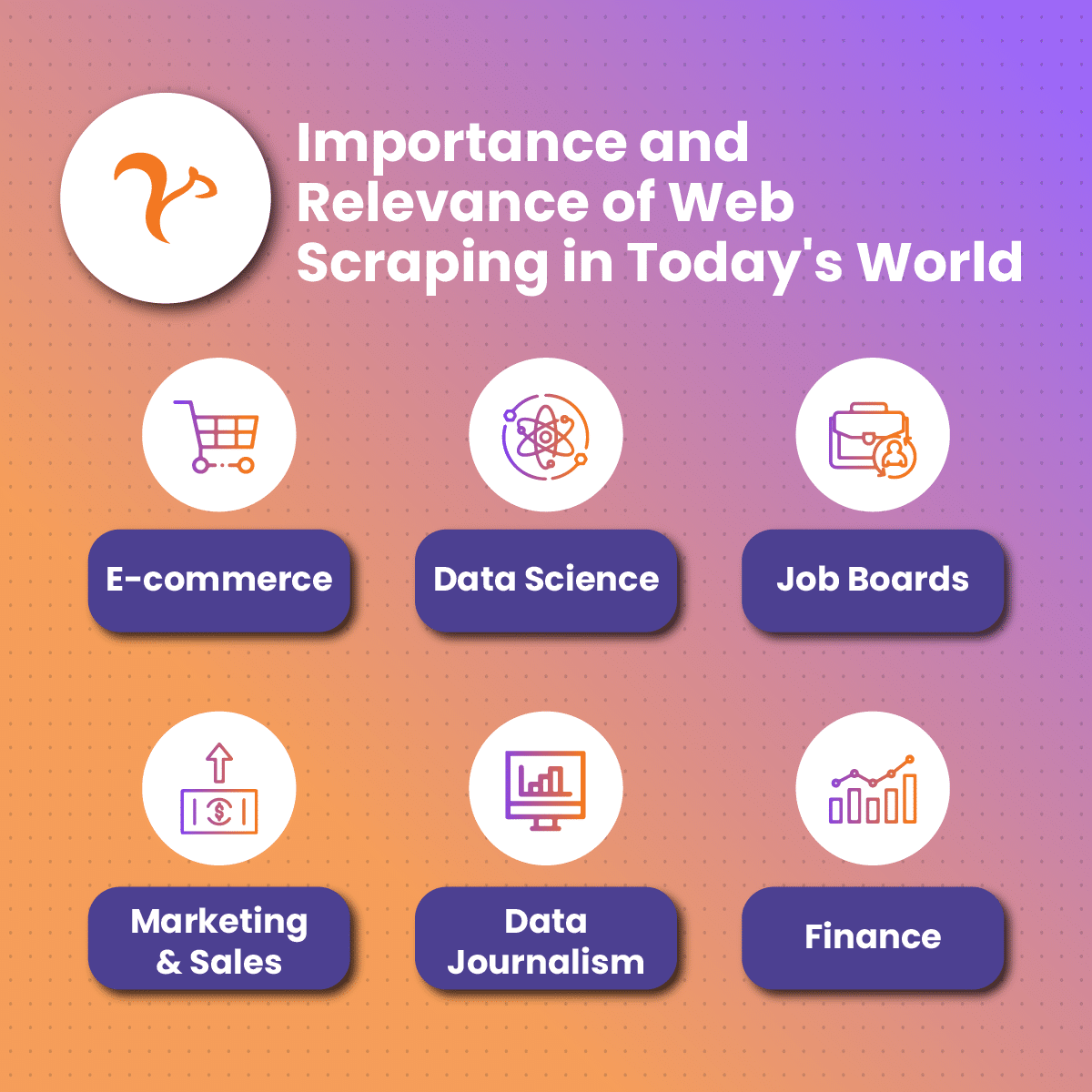

Importance and Relevance of Web Scraping in Today’s World

Automation and Scheduling

One of the significant advantages of web scraping is the potential for automation and scheduling. The process can run on a schedule, continually updating the data set with the most recent information. This feature of automation is further enhanced when we introduce machine learning for web scraping, as the system can learn and improve over time, becoming more efficient and accurate.

Cost-effectiveness

Web scraping is also cost-effective. Instead of manually gathering data, which is time-consuming and costly, web scraping automates this process, reducing costs and increasing efficiency.

Competitive Advantages

Web scraping provides competitive advantages in many industries. For instance, in e-commerce, companies can scrape competitor prices and adjust their pricing strategies accordingly. When paired with machine learning, the insights derived can be more precise and actionable.

Customer Understanding

Finally, web scraping provides valuable insights into customer behavior and preferences, enabling businesses to customize their offerings and marketing strategies. Machine learning can help make sense of this data, identifying patterns and trends that may not be immediately apparent.

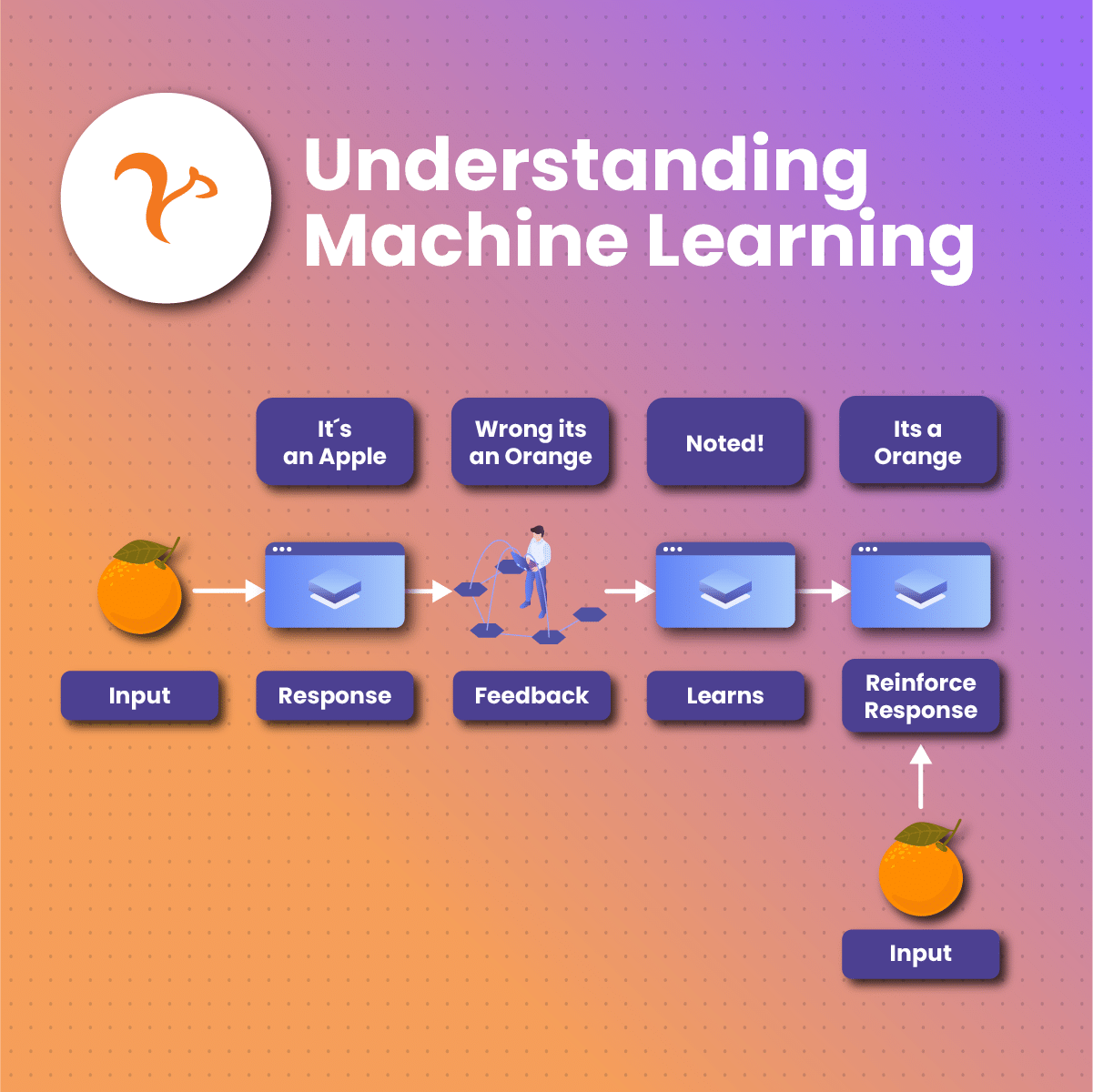

Understanding Machine Learning

Machine learning is a branch of artificial intelligence (AI) that enables computers to learn from experience and improve their performance without being explicitly programmed.

Definition and Core Features

Machine learning algorithms use statistical models to identify patterns in data. These patterns can then be used to make predictions or decisions without hand-written rules for each case. The core feature of machine learning is its ability to learn and adapt over time as it is exposed to new data.

Real-life Applications

- Customer Service Chatbots: Machine learning algorithms power many customer service chatbots, enabling them to learn from interactions and improve their responses over time.

- AI-powered Proxy Solutions: AI-powered proxy solutions use machine learning to optimize their performance, learning from the traffic patterns and adjusting their behavior to provide the most efficient and secure service.

- Computer Vision: Machine learning plays a crucial role in computer vision, enabling systems to recognize images, identify objects, and even understand human emotions.

- Stock Trading Automation: In finance, machine learning algorithms are used to predict stock market trends and automate trading decisions.

Role of Web Scraping in Machine Learning

Web scraping plays an essential role in machine learning by providing high-quality, real-world data for algorithms to learn from.

Focus on Data Quality

The quality of the data used in machine learning is crucial. Scheduled web scraping helps keep a dataset up to date, but its accuracy still depends on validating and cleaning what is collected.

Importance of External Data Sources

Web scraping provides a way to gather external data sources, which can provide additional insights and improve the accuracy of machine learning models.

The Necessity of Data Cleanup in Web Scraping

Before being used in machine learning algorithms, the data gathered through web scraping often needs to be cleaned and preprocessed. This cleanup process is crucial to ensure the data is in a format that the machine learning algorithm can use.

Using Machine Learning for Web Scraping

The application of machine learning for web scraping enhances the effectiveness of the data extraction process. Machine learning can be used to identify patterns in the data, recognize changes in website structures, and adapt scraping strategies accordingly, providing a more flexible and robust approach to data extraction.

Explanation of a Python-based Web Scraping Project

A Python-based web scraping project involves using Python libraries to extract data from websites. Machine learning for web scraping can significantly streamline this process.

Project Requirements: Python Version and Necessary Libraries

For a successful web scraping project, a recent Python 3 release is recommended, along with several libraries that aid in data extraction. Key libraries include Requests for fetching URLs, BeautifulSoup or lxml for parsing HTML and XML documents, and Scrapy, a full crawling framework that handles both fetching and parsing.

Data Extraction Process

The data extraction process in a Python-based web scraping project involves sending a GET request to the target URL and then parsing the response to extract the desired data. Machine learning can be used to improve the accuracy and efficiency of this process.

Utilizing Jupyter Notebook for Machine Learning Projects

Jupyter Notebook is an open-source web application that allows the creation and sharing of documents containing both code and narrative text. It is an ideal tool for machine learning projects, including machine learning for web scraping.

Importing Necessary Libraries

To start, we import the necessary Python libraries for our project. This often includes pandas for data manipulation, NumPy for numerical operations, Matplotlib for data visualization, and scikit-learn (imported as sklearn) for machine learning.

Creating a Session and Getting a Response from the Target URL

Next, we create a session using the requests library and use this session to send a GET request to the target URL. The server’s response, containing the website’s HTML, is stored and parsed for data extraction.
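The following is a minimal sketch of this step, assuming the third-party `requests` package is installed. The helper names `make_session` and `fetch_html`, the default User-Agent string, and the timeout value are illustrative choices, not part of any particular project.

```python
import requests


def make_session(user_agent: str = "example-scraper/0.1") -> requests.Session:
    """Create a reusable session; headers and pooled connections persist across requests."""
    session = requests.Session()
    # Identify the client; this User-Agent string is a hypothetical placeholder.
    session.headers.update({"User-Agent": user_agent})
    return session


def fetch_html(session: requests.Session, url: str, timeout: float = 10.0) -> str:
    """Send a GET request through the session and return the response body as text."""
    response = session.get(url, timeout=timeout)
    # Raise requests.HTTPError for 4xx/5xx responses instead of parsing an error page.
    response.raise_for_status()
    return response.text
```

Calling `fetch_html(make_session(), url)` with a URL you are permitted to scrape returns the page HTML as a string. Reusing one session keeps headers and pooled connections across repeated requests to the same site.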

Using XPath to Select Desired Data

XPath, a query language for selecting nodes in an XML document, can be used to pinpoint the data we wish to scrape from the website’s HTML. lxml supports XPath directly; BeautifulSoup does not support XPath, but it offers CSS selectors and its own search methods (such as find_all and select) for the same purpose.
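As a hedged illustration, the sketch below uses the standard library's `xml.etree.ElementTree`, which implements only a limited subset of XPath and requires well-formed markup. The HTML fragment, its values, and the `extract_rows` helper are made up for demonstration. For real-world, often malformed HTML, lxml (`lxml.html.fromstring(...)` followed by `.xpath(...)`) supports full XPath 1.0 expressions.

```python
import xml.etree.ElementTree as ET

# A small, well-formed HTML fragment standing in for a scraped page (hypothetical values).
SAMPLE_HTML = """
<html><body>
  <table id="prices">
    <tr><td class="date">2023-01-02</td><td class="close">100.50</td></tr>
    <tr><td class="date">2023-01-03</td><td class="close">101.25</td></tr>
  </table>
</body></html>
"""


def extract_rows(markup: str) -> list[tuple[str, str]]:
    """Select (date, close) pairs using ElementTree's limited XPath subset."""
    root = ET.fromstring(markup.strip())
    rows = []
    # './/table[...]' searches at any depth; the attribute predicate picks the right table.
    for tr in root.findall(".//table[@id='prices']/tr"):
        # Attribute predicates like [@class='date'] are part of the supported subset.
        date = tr.findtext("td[@class='date']")
        close = tr.findtext("td[@class='close']")
        rows.append((date, close))
    return rows
```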

Data Cleaning and Preprocessing

After extracting the data, the next step is data cleaning and preprocessing. This stage is crucial for ensuring that the data is in a suitable format for machine learning algorithms.

Transforming Data into Suitable Format for Machine Learning

The data extracted from the website needs to be converted into a format suitable for machine learning. This involves several steps, including data cleaning, feature selection, and feature scaling.

Cleaning Data: Converting Data Types and Handling Null Values

Data cleaning involves converting data types to formats that can be handled by machine learning algorithms, replacing or removing null values, and handling outliers. In the context of machine learning for web scraping, this process is essential for ensuring the accuracy of the model’s predictions.
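A minimal pandas sketch of these cleaning steps, assuming prices were scraped as strings such as "$1,000.50". The column names and sample rows are hypothetical, and dropping incomplete rows is only one option; filling missing values is another.

```python
import pandas as pd

# Hypothetical raw scraper output: every value arrives as a string or None.
raw = pd.DataFrame(
    {
        "date": ["2023-01-02", "2023-01-03", "2023-01-04", "2023-01-05"],
        "close": ["$1,000.50", "$1,010.25", None, "N/A"],
    }
)


def clean_prices(df: pd.DataFrame) -> pd.DataFrame:
    """Convert text columns to proper dtypes and drop rows without a usable price."""
    out = df.copy()
    # Parse ISO date strings into datetime values.
    out["date"] = pd.to_datetime(out["date"])
    # Strip currency symbols and thousands separators, then convert to float;
    # errors="coerce" turns unparseable entries such as "N/A" into NaN.
    out["close"] = pd.to_numeric(
        out["close"].str.replace(r"[$,]", "", regex=True), errors="coerce"
    )
    # Remove rows whose price is missing after conversion.
    return out.dropna(subset=["close"]).reset_index(drop=True)
```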

Visualizing the Data

Data visualization is a critical step in understanding the data you’re working with. Libraries like Matplotlib and Seaborn can help with creating plots to visualize the data.

- Plotting Key Features: By plotting key features, you can gain insights into relationships between variables, which is crucial when building a machine-learning model.

- Adjusting the Closing Price Trend: In cases where the scraped data involves time-series data, like stock prices, adjustments may need to be made to account for trends. Detrending can help make the data more suitable for machine learning algorithms.
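One simple way to detrend, shown here as a sketch under stated assumptions rather than the project's own method, is to fit a straight line to the series by least squares with NumPy and subtract it. The helper name `linear_detrend` is illustrative.

```python
import numpy as np


def linear_detrend(values) -> tuple[np.ndarray, np.ndarray]:
    """Remove a least-squares straight-line trend; return (residuals, trend)."""
    y = np.asarray(values, dtype=float)
    t = np.arange(len(y))
    # Fit y ~ slope * t + intercept.
    slope, intercept = np.polyfit(t, y, deg=1)
    trend = slope * t + intercept
    return y - trend, trend
```

Fitting the line on the full series uses values from the future; in a forecasting setup, estimate the trend on the training portion only and add it back to the model's predictions.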

Preparing Data for Machine Learning

Once the data is cleaned and visualized, the next step is preparing it for machine learning. This involves feature selection, feature scaling, and splitting the data into training and test sets.

Feature Selection and Feature Scaling

Feature selection involves choosing which features to include in the machine learning model. Feature scaling, on the other hand, involves standardizing the range of features so that they can be compared on a common scale.
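The sketch below assumes tabular data in a pandas DataFrame. It selects features with a simple correlation filter (one of many possible criteria, chosen here for brevity) and applies min-max scaling using statistics computed from the training set only, which mirrors what scikit-learn's `MinMaxScaler` does when fitted on training data. The function names and the 0.3 threshold are illustrative.

```python
import pandas as pd


def select_features(df: pd.DataFrame, target: str, min_abs_corr: float = 0.3) -> list[str]:
    """Keep numeric columns whose absolute Pearson correlation with the target meets a threshold."""
    corr = df.corr(numeric_only=True)[target].drop(target)
    return corr[corr.abs() >= min_abs_corr].index.tolist()


def min_max_scale(train: pd.DataFrame, test: pd.DataFrame) -> tuple[pd.DataFrame, pd.DataFrame]:
    """Scale to [0, 1] using the training set's min and max, applied to both sets."""
    lo = train.min()
    span = train.max() - lo
    # Guard against constant columns to avoid division by zero.
    span = span.replace(0, 1)
    return (train - lo) / span, (test - lo) / span
```

Test values can fall outside [0, 1] because the scaler never looks at them; that is intentional, since using test statistics would leak information into training.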

Splitting the Data into Training and Test Sets

To evaluate the performance of the machine learning model, the dataset is typically split into a training set and a test set. The training set is used to train the model, while the test set is used to evaluate its performance.
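For time-ordered data such as scraped prices, a random split would leak future observations into training. A minimal sketch of a chronological split follows; the 80/20 ratio and the name `chronological_split` are assumptions. scikit-learn's `train_test_split(..., shuffle=False)` produces the same kind of split.

```python
import numpy as np


def chronological_split(data, train_fraction: float = 0.8):
    """Split ordered data so every test row comes after every training row."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be between 0 and 1")
    cut = int(len(data) * train_fraction)
    # No shuffling: the model must not see observations from the test period.
    return data[:cut], data[cut:]
```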

Handling Time-series Data

Time-series data, which is data that is collected over time, presents unique challenges for machine learning. These challenges can be handled by using techniques like differencing and seasonality adjustment.
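Differencing replaces each value with its change from an earlier value: lag 1 removes a linear trend, and a lag equal to the seasonal period (for example 7 for daily data with a weekly pattern) removes a stable seasonal pattern. A small NumPy sketch with illustrative function names, including the inverse step needed to turn forecasted differences back into price levels:

```python
import numpy as np


def difference(values, lag: int = 1) -> np.ndarray:
    """Return y[t] - y[t - lag] for every t >= lag."""
    if lag < 1:
        raise ValueError("lag must be at least 1")
    y = np.asarray(values, dtype=float)
    return y[lag:] - y[:-lag]


def invert_first_difference(first_value: float, diffs) -> np.ndarray:
    """Rebuild the series after its first element from lag-1 differences."""
    return first_value + np.cumsum(diffs)
```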

Training the Machine Learning Model

Once the data has been prepared, the next step is to train the machine learning model. This involves selecting a suitable algorithm, fitting the model to the training data, and then making predictions.

Creating a Neural Network with LSTM Layer

For certain types of data, a neural network with a Long Short-Term Memory (LSTM) layer can be effective. LSTM networks are a type of recurrent neural network that is capable of learning long-term dependencies, making them suitable for time-series data.
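An LSTM consumes sequences, so a single price series is usually cut into fixed-length windows. The sketch below builds those windows with NumPy, assuming the model predicts the next value from the previous `window` values; the helper name is illustrative. The result has shape (samples, timesteps, features), which is the input layout Keras's `tf.keras.layers.LSTM` expects; the window length is a tunable assumption.

```python
import numpy as np


def make_windows(series, window: int) -> tuple[np.ndarray, np.ndarray]:
    """Turn a 1-D series into (samples, timesteps, 1) inputs and next-step targets."""
    y = np.asarray(series, dtype=float)
    if len(y) <= window:
        raise ValueError("series must be longer than the window")
    # Each row holds `window` consecutive values; the target is the value right after them.
    X = np.stack([y[i : i + window] for i in range(len(y) - window)])
    targets = y[window:]
    # Add a trailing feature axis: one feature (the value itself) per timestep.
    return X[..., np.newaxis], targets
```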

Training the Model and Making Predictions

The model is trained by feeding it the training data and allowing it to adjust its parameters to minimize the difference between its predictions and the actual values. Once trained, the model can make predictions on unseen data.
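Training an LSTM requires a deep-learning framework, so the sketch below swaps in a deliberately simple model: a linear autoregressive predictor fitted by ordinary least squares with NumPy. It is not an LSTM, but it follows the same train-then-predict pattern, with parameters chosen to minimize the squared difference between predictions and actual values. The function names are hypothetical.

```python
import numpy as np


def fit_linear_ar(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Fit weights w (last entry is a bias) minimizing ||[X, 1] w - y||^2."""
    design = np.column_stack([X, np.ones(len(X))])
    weights, *_ = np.linalg.lstsq(design, y, rcond=None)
    return weights


def predict_linear_ar(weights: np.ndarray, X: np.ndarray) -> np.ndarray:
    """Apply the fitted weights to new rows of lagged values."""
    design = np.column_stack([X, np.ones(len(X))])
    return design @ weights
```

If windows are stored as a 3-D array of shape (samples, timesteps, 1), pass X[..., 0] so each row is one window. A baseline like this is also useful for judging whether a more complex LSTM actually improves on it.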

Comparing Actual Values and Predicted Values

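By comparing the model’s predictions with the actual values, we can evaluate its performance. For a regression task such as forecasting a closing price, common metrics are mean absolute error (MAE) and root mean squared error (RMSE); accuracy, precision, recall, and the F1 score apply when the model performs classification, for example predicting whether the price will rise or fall.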
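For a regression target such as a closing price, the comparison is typically summarized with error metrics rather than classification scores. A minimal NumPy sketch of mean absolute error (MAE) and root mean squared error (RMSE), with an illustrative function name:

```python
import numpy as np


def regression_metrics(actual, predicted) -> dict[str, float]:
    """Return MAE and RMSE between actual and predicted values."""
    a = np.asarray(actual, dtype=float)
    p = np.asarray(predicted, dtype=float)
    errors = p - a
    return {
        "mae": float(np.mean(np.abs(errors))),
        "rmse": float(np.sqrt(np.mean(errors**2))),
    }
```

Both metrics are in the units of the target, and RMSE penalizes large errors more heavily than MAE.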

Importance of Proxies and Advanced Data Acquisition Tools in Web Scraping

Proxies and advanced data acquisition tools play a crucial role in web scraping, particularly when machine learning is involved. These tools can help bypass restrictions, increase scraping speed, and ensure data privacy.

Real-life Examples of Web Scraping Use Cases

Web scraping, particularly when enhanced by machine learning, has a myriad of real-life use cases. These range from sentiment analysis and market research to data mining and competitive analysis. The adaptability and efficiency of machine learning for web scraping make it a powerful tool in many industries.

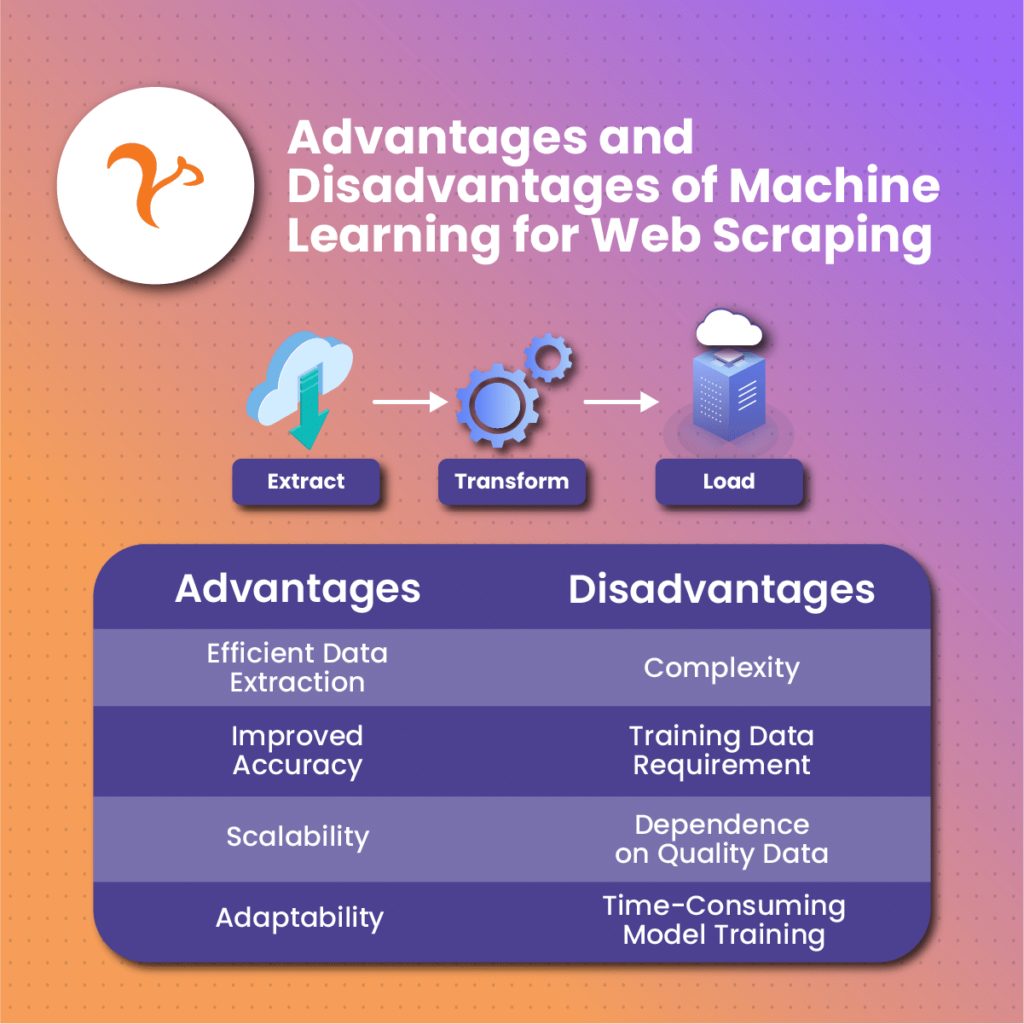

Advantages and Disadvantages of Machine Learning for Web Scraping

Machine learning has transformed the landscape of web scraping, enabling more efficient and accurate data extraction. However, like any technology, it has both strengths and weaknesses.

Advantages of Machine Learning for Web Scraping

- Efficient Data Extraction: Machine learning algorithms can be trained to recognize and extract specific types of data, making the process faster and more efficient than manual extraction.

- Improved Accuracy: Machine learning algorithms can learn and improve over time, reducing errors and increasing the accuracy of data extraction.

- Scalability: Machine learning models can handle large amounts of data, making them suitable for large-scale web scraping projects.

- Adaptability: As machine learning models learn from the data they process, they can adapt to changes in the structure of web pages, making them more resilient to changes in website design.

Disadvantages of Machine Learning for Web Scraping

- Complexity: Machine learning models can be complex to set up and require a certain level of expertise to implement and maintain.

- Training Data Requirement: For machine learning models to be effective, they require a substantial amount of training data, which can be time-consuming and costly to acquire.

- Dependence on Quality Data: The performance of machine learning models is heavily dependent on the quality of the data they are trained on. Poor quality data can lead to poor results.

- Time-Consuming Model Training: Training machine learning models can be a time-consuming process, especially for complex models or large datasets.

Comparison Table of Advantages and Disadvantages

| Aspect | Advantage | Disadvantage |
| --- | --- | --- |
| Efficiency | Machine learning enables efficient data extraction | Complex models can be time-consuming to set up |
| Accuracy | Improves accuracy and reduces errors | Depends heavily on the quality of training data |
| Scalability | Can handle large datasets effectively | Requires substantial amounts of training data |
| Adaptability | Can adapt to changes in web page structures | Complex models may require expertise to implement and maintain |
