Introduction

In the bustling world of ecommerce, data is supreme. It’s the lifeblood that fuels business decisions, shapes marketing strategies, and drives growth. Every click, purchase, and review generates valuable information that businesses can leverage to stay ahead in the game.

However, with the sheer volume of data available online, how do ecommerce businesses make sense of it all? This is where web scraping ecommerce websites steps in as a powerful tool in their arsenal.

Businesses can leverage web scraping ecommerce websites to collect data on products, prices, customer reviews, and more with ease. It’s a game-changer for market research, competitor analysis, and price monitoring.

Now, you might be thinking, “How do I unlock the power of web scraping for my ecommerce business?” This guide will provide a comprehensive breakdown of what is involved with scraping ecommerce websites.

So, let’s dive in and uncover the secrets of mastering ecommerce data scraping.

What is Web Scraping?

Web scraping, in simple terms, is the process of extracting information from web pages, turning unstructured data into structured, usable formats. Imagine you’re browsing an online store, and you want to compile a list of all the product names and prices. Instead of copying and pasting each item into a spreadsheet manually, web scraping automates this task, collecting the data in a fraction of the time.

How Web Scraping Works

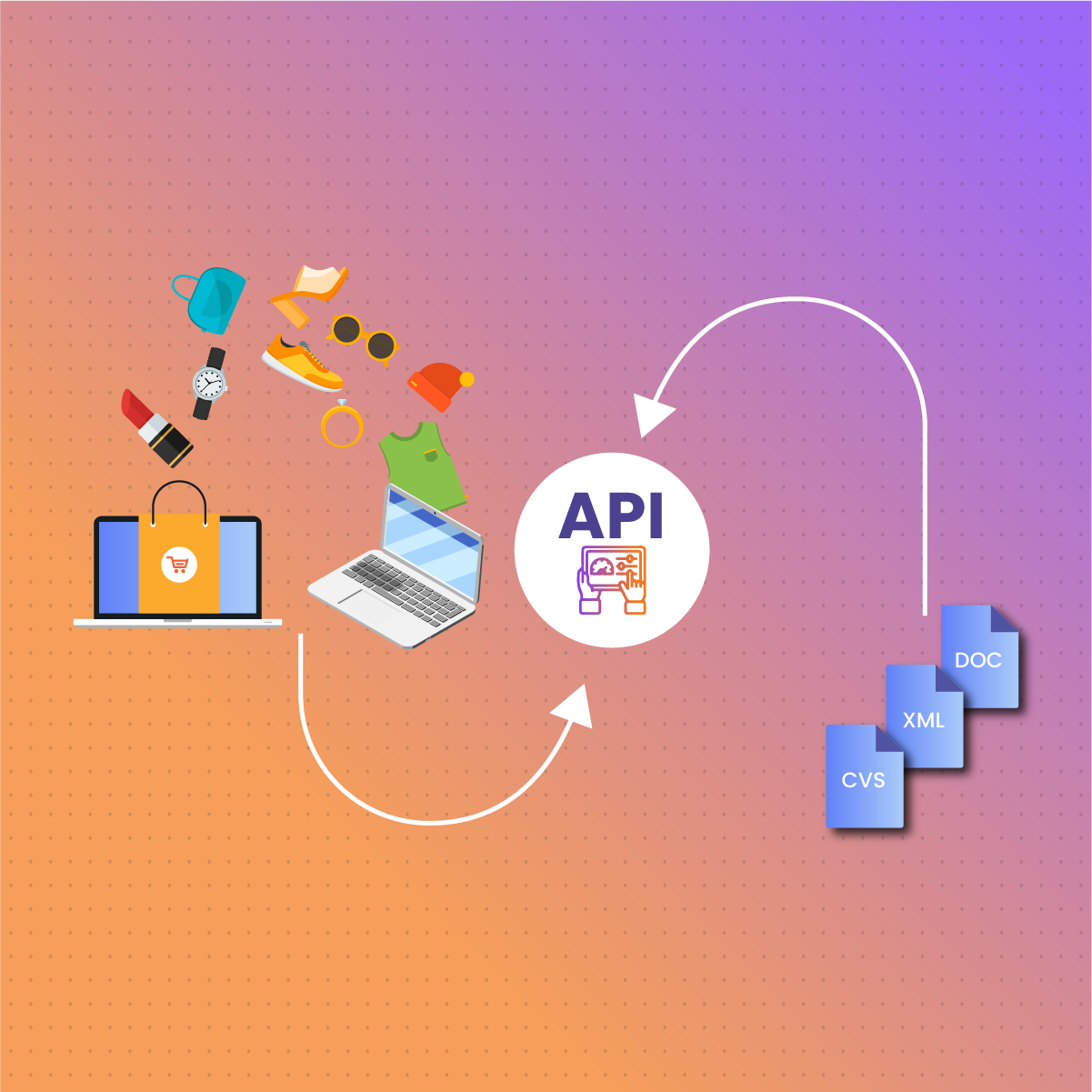

For easy implementation, web scraping involves three main steps:

- Sending a Request: The scraping tool sends a request to the target website’s server, asking for the HTML code of a specific web page.

- Parsing the HTML: Once the HTML code is received, the scraping tool parses it, extracting the relevant data based on predefined rules or patterns.

- Outputting the Data: Finally, the scraped data is converted into a structured format, such as CSV or JSON, making it easy to analyze and manipulate.

Legal and Ethical Considerations before Web Scraping Ecommerce Websites

While web scraping ecommerce websites offers a wealth of benefits, it is essential to understand the legal and ethical protocols of websites carefully. Before scraping any website, consider the following:

- Respect Website Policies: Many websites have terms of service or robots.txt files that dictate how their data can be accessed. It’s crucial to abide by these rules to avoid legal repercussions.

- Copyright and Intellectual Property: Be mindful of copyright laws and respect the intellectual property rights of website owners. Avoid scraping copyrighted content without permission.

- Data Privacy: Consider the privacy implications of scraping personal data, such as customer information or user-generated content. Ensure compliance with data protection laws, such as GDPR or CCPA, when handling sensitive data.

- Ethical Use of Data: Use scraped data responsibly and ethically. Avoid engaging in activities that could harm users, deceive consumers, or undermine the integrity of the web.

By understanding and adhering to legal and ethical considerations, businesses can harness the power of web scraping responsibly, leveraging data to drive innovation and growth while maintaining respect for privacy and intellectual property rights.

Tools and Technologies for Web Scraping Ecommerce Websites

When it comes to web scraping ecommerce websites, having the right tools at your disposal can make all the difference. Here’s a brief description of some popular web scraping tools:

- BeautifulSoup: This Python library is a favorite among beginners for its simplicity and ease of use. It allows you to parse HTML and XML documents, making it ideal for extracting data from static web pages.

- Scrapy: If you’re looking for a more powerful and scalable solution, Scrapy is the way to go. It’s a high-level web crawling and scraping framework for Python, designed for efficiency and extensibility. Scrapy is great for scraping large websites or crawling multiple pages in parallel.

- Selenium: Unlike BeautifulSoup and Scrapy, Selenium is not specifically designed for web scraping. Instead, it’s a web browser automation tool that allows you to simulate user interactions on web pages. Selenium is useful for scraping content rendered using JavaScript or for handling complex web forms.

Therefore, the right tool for the job is the one that meets your specific needs and fits within your technical capabilities. With the right tool in hand, you’ll be well-equipped to tackle any web scraping challenge that comes your way.

Techniques for Effective Ecommerce Web Scraping

Web scraping ecommerce websites is not just about extracting data; it’s about doing it efficiently and effectively. Here are some techniques to enhance your scraping process:

Basic Scraping Techniques

- HTML Parsing: Use libraries like Beautiful Soup or lxml to parse HTML and extract relevant data from web pages.

- CSS Selectors: Leverage CSS selectors to target specific elements on a web page, such as product names, prices, or links.

- Regular Expressions: Employ regex patterns to extract data that follows a specific format or pattern, such as phone numbers or email addresses.

- Request Headers: Mimic browser requests by setting user-agent strings and headers to avoid being detected as a bot and bypass proxy block.

Advanced Scraping Techniques

- Handling Dynamic Content: Use tools like Selenium or Puppeteer to scrape websites with dynamic content generated by JavaScript. These tools simulate user interactions and can scrape content that’s loaded dynamically after the initial page load.

- Pagination Handling: Implement logic to navigate through paginated results and scrape data from multiple pages. This may involve extracting pagination links and iterating through them to collect all desired data.

- Proxy Rotation: You can rotate IP addresses and use proxy servers to avoid being blocked by websites that impose restrictions on scraping activities.

- Session Management: This involves maintaining a persistent session when scraping websites that require authentication or use cookies to track user sessions.

Dealing with Common Challenges and Obstacles

While web scraping ecommerce websites, certain issues might come. Hence, it is important to understand what they are and how to resolve them easily on your own. Some of the issues to look out for include but not limited to:

- Anti-Scraping Measures: Many websites implement anti-scraping measures to detect automated scraping activities. To bypass these measures, consider using techniques like rotating user agents, delaying requests, or using headless browsers.

- CAPTCHA Challenges: Some websites use CAPTCHA challenges to prevent automated scraping. To overcome CAPTCHA challenges, you can use CAPTCHA-solving services or implement human-solving strategies.

- Data Cleaning and Validation: Scraped data may contain inconsistencies, errors, or irrelevant information. Perform data cleaning and validation to ensure that the scraped data is accurate, consistent, and reliable.

- Robust Error Handling: Implement robust error handling mechanisms to deal with connection issues, timeouts, and other potential errors that may occur during scraping. Retry failed requests, log errors, and handle edge cases gracefully to avoid disruptions in the scraping process.

By mastering these techniques, you can tackle a wide range of scraping tasks and overcome common challenges and obstacles encountered in the scraping process.

Prerequisites to Writing a Web Scraping Script for Ecommerce Websites

There are some things you must understand before you go into writing the code for scraping a website .

Identify Data Sources

The first step in the web scraping process is to identify the data sources from which you want to extract information. For ecommerce, this could include competitor websites, product listings, pricing information, customer reviews, and more.

Understand Website Structure

Before writing a scraping script, it’s crucial to understand the structure of the target website. Analyze the HTML structure of web pages to identify the location of relevant data elements such as product names, prices, descriptions, and reviews.

Choose the Right Tool

Select a suitable web scraping tool or library based on the complexity of the scraping task and your programming proficiency. Popular choices for web scraping in Python include BeautifulSoup, Scrapy, and Selenium.

Develop Scraping Logic

Once you’ve chosen a scraping tool, develop the logic for your scraping script. This involves writing code to send HTTP requests to the target website, parse HTML content, extract desired data elements using CSS selectors or XPath expressions, and handle pagination if necessary.

Handle Dynamic Content

Many ecommerce websites use dynamic content loaded via JavaScript, such as product prices or reviews. If scraping dynamic content, consider using tools like Selenium that can interact with JavaScript-rendered elements.

Implement Error Handling

Implement robust error handling mechanisms to deal with common issues encountered during scraping, such as connection errors, timeouts, or HTTP status code errors. Retry failed requests and log errors to ensure smooth operation of your scraping script.

Test and Refine

Test your scraping script thoroughly to ensure it extracts data accurately and efficiently. Iterate on your code and make refinements as needed to improve its performance and reliability.

How to Write a Web Scraping Script for Ecommerce Websites

Web scraping has become a vital tool for ecommerce businesses seeking to gain valuable insights, optimize strategies, and stay competitive in the crowded online marketplace. Here, we will look into the web scraping process for ecommerce. Shall we?

Writing a Ecommerce Web Scraping Script

Below is a basic example of a web scraping script written in Python using the BeautifulSoup library to extract product names and prices from an ecommerce website:

import requests

from bs4 import BeautifulSoup

# URL of the target website

url = ‘https://example.com/products’

# Send a GET request to the website

response = requests.get(url)

# Parse HTML content

soup = BeautifulSoup(response.text, ‘html.parser’)

# Extract product names and prices

products = soup.find_all(‘div’, class_=’product’)

for product in products:

name = product.find(‘h2′, class_=’product-name’).text.strip()

price = product.find(‘span’, class_=’product-price’).text.strip()

print(f’Product: {name}, Price: {price}’)

By mastering the web scraping process and writing effective scraping scripts, ecommerce businesses can maintain a competitive edge in online commerce.

Integrating NetNut Proxy for Efficient Web Scraping Ecommerce Websites

First, it is important to mention that the success of web scraping lies not only on effective scraping techniques but also on overcoming challenges such as IP blocking and detection. Therefore, to resolve these challenges, the use of NetNut proxy is important.

NetNut is a premium residential proxy service that provides businesses with access to a vast pool of residential IP addresses. Unlike traditional datacenter proxies, residential proxies utilize IP addresses assigned to real residential devices, making them more reliable, secure, and less likely to be detected or blocked by websites.

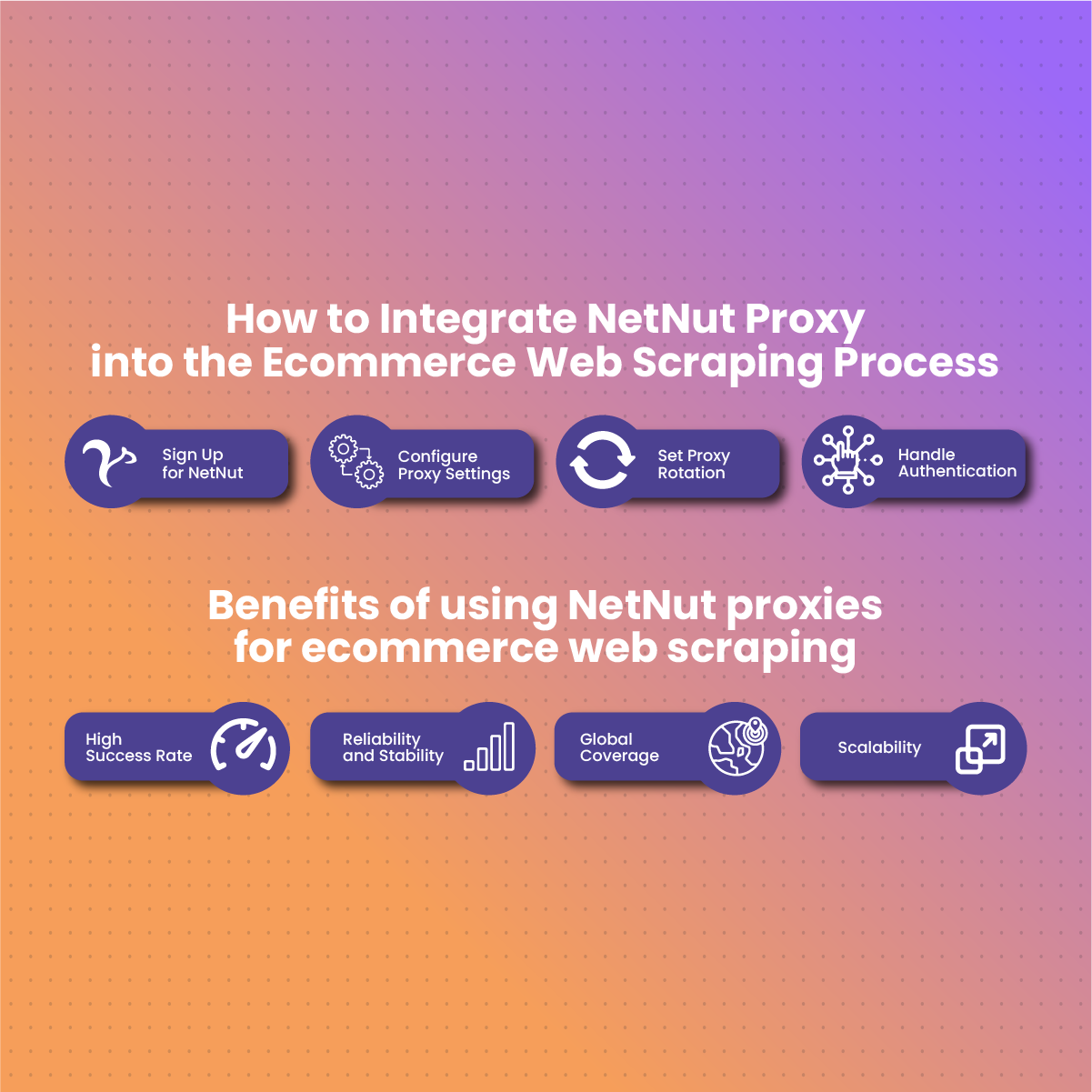

How to Integrate NetNut Proxy into the Ecommerce Web Scraping Process

- Sign Up for NetNut: The first step is to sign up for a NetNut proxy account and choose a suitable plan based on your scraping needs. NetNut offers flexible pricing options and provides access to a global network of residential IP addresses.

- Configure Proxy Settings: Once you’ve signed up for NetNut proxy service, you’ll receive API credentials and instructions for configuring proxy settings in your scraping script. NetNut offers seamless integration with popular scraping libraries such as Scrapy, BeautifulSoup, and Selenium.

- Set Proxy Rotation: NetNut offers automatic IP rotation, allowing you to use rotating residential proxies at regular intervals to avoid detection and ensure uninterrupted scraping. You can configure proxy rotation settings based on your scraping frequency and requirements.

- Handle Authentication: More so, NetNut requires authentication using your API credentials to access the proxy network. Ensure that you include authentication parameters in your scraping script to authenticate with the NetNut API and establish a connection to the proxy network.

Once all these have been completed, you can scrape data from ecommerce websites with confidence. This reduces the risk of IP blocking and improves the reliability and success rate of your scraping efforts.

Benefits of using NetNut proxies for ecommerce web scraping

Some of the advantages of integrating NetNut proxies with ecommerce web scraping process include:

- High Success Rate: NetNut provides access to a large pool of residential IP addresses, static proxies, and mobile proxies. This increases the likelihood of successful data extraction without being detected or blocked by websites.

- Reliability and Stability: Residential proxies offer greater reliability and stability compared to data center proxies, ensuring consistent performance and minimizing disruptions during the scraping process.

- Global Coverage: NetNut offers a global network of residential and ISP proxies. This allows you to scrape data from ecommerce websites located in various regions and geographies.

- Scalability: NetNut offers scalable solutions to accommodate the growing needs of your scraping projects, whether you’re scraping data from a few websites or conducting large-scale scraping operations.

Integrating NetNut proxies into the ecommerce web scraping process enhances the efficiency, reliability, and success rate of data extraction efforts. With NetNut proxies in your tool box, there is nothing for you to worry about while web scraping ecommerce websites.

Conclusion

On a final note, as long as ecommerce is concerned, mastering web scraping is a potent tool for marketing and to improve sales. From this guide, we have been able to look into the fundamental principles attached to web scraping. Also, we have talked about the advanced techniques tailored specifically for ecommerce websites.

Without a doubt, it’s clear that deeply understanding web scraping gives business owners the potential for ecommerce success. By using the power of data, businesses can uncover hidden opportunities, optimize strategies, and enhance customer experiences. However, the journey does not end here; it is important to stay updated on recent trends and innovation.

Now, you can stay committed to improving your skills in the art of web scraping. Improve your activities on ecommerce websites with web scraping techniques today!

Frequently Asked Questions and Answers

How can I ensure ethical scraping practices when extracting data from ecommerce websites?

To ensure ethical scraping practices, respect website terms of service, adhere to legal requirements, and prioritize user privacy. Be transparent about your scraping activities, minimize the impact on target websites, and handle scraped data responsibly.

Is it possible to scrape product reviews from ecommerce sites?

Yes, it’s possible to scrape product reviews from ecommerce sites using web scraping techniques. Tools like Beautiful Soup or Scrapy can be used to extract review data from product pages, allowing businesses to analyze customer feedback and sentiment.

Can web scraping help with market research for ecommerce startups?

Yes, web scraping is a valuable tool for market research for ecommerce startups. By scraping data from competitor websites, online forums, and social media platforms, startups can gain insights into market trends, customer preferences, and competitor strategies.