Introduction

As the world’s largest online marketplace, Amazon holds a vast amount of data, ranging from product details and prices to customer reviews and seller information. With millions of daily transactions, the platform has become a goldmine for businesses and individuals seeking valuable insights. This is where Amazon scraping comes into play.

Amazon scraping is increasingly important as businesses shift toward data-driven decision-making. The ability to gather, analyze, and act on vast amounts of data has revolutionized how companies operate. From small businesses to large corporations, the power of scraping lies in its ability to extract valuable information that would otherwise be time-consuming and tedious to collect manually.

To help you understand how Amazon scraping works and how to use it properly, we’ve got you covered. This guide provides a comprehensive overview of Amazon scraping and the best scrapers in 2024. Whether you’re new to scraping or a professional, it will help you navigate the key aspects of using Amazon scrapers effectively.

Let’s get started!

What is an Amazon Scraper?

An Amazon scraper is a specialized tool or software designed to automate the process of collecting and extracting data from Amazon’s website. Instead of manually browsing Amazon to gather information, an Amazon scraper can retrieve large amounts of data quickly, efficiently, and without human intervention.

These tools work by simulating a user’s interaction with the website, allowing businesses and individuals to pull information directly from Amazon’s product listings, customer reviews, and other key areas. Scrapers use web crawling and data extraction techniques to access and parse HTML structures on Amazon’s web pages.

In short, an Amazon scraper automates the tedious, time-consuming task of manual data collection, giving users access to up-to-date data from one of the largest e-commerce platforms in the world.

How Amazon Scrapers Work

Amazon scrapers automate the collection of data from Amazon’s website by mimicking a user browsing the site. Understanding how these tools function can help businesses use them effectively for competitive analysis, pricing strategies, and market research. Below is a breakdown of how Amazon scrapers work, from inputting target URLs to extracting and storing data.

Inputting Target URLs or Keywords

The first step in using an Amazon scraper is to define what specific data you want to scrape. Users can typically input target URLs or keywords to direct the scraper to the relevant Amazon pages. The scraper then uses the input to identify the Amazon pages it will crawl and extract data from, ensuring the process is targeted and relevant to the user’s needs.
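The keyword-to-URL step can be sketched as follows. This is a minimal illustration, assuming Amazon’s commonly seen public URL patterns (`/s?k=KEYWORD&page=N` for search results and `/dp/ASIN` for product pages), which may change at any time; `search_urls` and `product_url` are hypothetical helper names.

```python
from urllib.parse import urlencode

# Assumed URL patterns based on Amazon's public US site; they may change.
BASE_URL = "https://www.amazon.com"


def search_urls(keyword, pages):
    """Build search-result URLs for the first `pages` pages of a keyword query."""
    return [f"{BASE_URL}/s?{urlencode({'k': keyword, 'page': page})}" for page in range(1, pages + 1)]


def product_url(asin):
    """Build a product-page URL from an ASIN (Amazon Standard Identification Number)."""
    return f"{BASE_URL}/dp/{asin}"


print(search_urls("wireless mouse", 2))
# ['https://www.amazon.com/s?k=wireless+mouse&page=1', 'https://www.amazon.com/s?k=wireless+mouse&page=2']
```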

Web Crawling Process

Once the target URLs or keywords are provided, the web crawling process begins. Web crawling involves scraper bots systematically navigating through the various pages of Amazon’s website to locate the desired data. Here’s how it works:

- Page Navigation: The scraper simulates user behavior by automatically clicking on links, buttons, or pagination (e.g., “Next Page”) to traverse Amazon’s site. This allows the scraper to access multiple product pages, categories, or even customer reviews.

- Finding the Relevant Pages: Web crawlers identify pages that contain the data you want, such as specific product pages, pricing, reviews, or sales rankings. The crawler reads the HTML structure of each page to find relevant sections.

- Dealing with Amazon’s Anti-Scraping Mechanisms: Amazon employs measures such as CAPTCHA challenges, IP blocking, and rate limiting to prevent bots from scraping its data. To get around these, scrapers often use rotating proxies, which route requests through a changing pool of IP addresses so that no single address sends enough traffic to be flagged.

Crawling gathers the necessary web pages so that the next step, data extraction, can begin.
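A crawl loop that follows “next page” links can look like the sketch below. It assumes the pagination link is an `<a>` tag carrying a class named `s-pagination-next` (an assumption about Amazon’s markup, exposed as a parameter) and takes the HTTP fetch as a caller-supplied function, so the example runs offline against two fake pages on example.com. `NextLinkParser`, `crawl`, and `FAKE_SITE` are hypothetical names.

```python
import time
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkParser(HTMLParser):
    """Records the href of the first <a> tag whose class list contains next_class."""

    def __init__(self, next_class):
        super().__init__()
        self.next_class = next_class
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        if tag != "a" or self.next_href is not None:
            return
        attr_map = dict(attrs)
        classes = (attr_map.get("class") or "").split()
        if self.next_class in classes and attr_map.get("href"):
            self.next_href = attr_map["href"]


def crawl(start_url, fetch, next_class="s-pagination-next", max_pages=5, delay_s=2.0):
    """Follow 'next page' links from start_url and return a list of (url, html) pairs.

    fetch(url) -> html is supplied by the caller, so the HTTP client (and any
    proxy or rate-limit handling) stays outside this function.
    """
    pages, seen, url = [], set(), start_url
    while url and url not in seen and len(pages) < max_pages:
        if pages:
            time.sleep(delay_s)  # pause between page requests
        seen.add(url)
        html = fetch(url)
        pages.append((url, html))
        parser = NextLinkParser(next_class)
        parser.feed(html)
        parser.close()
        # Resolve relative hrefs against the current page; stop when there is no next link.
        url = urljoin(url, parser.next_href) if parser.next_href else None
    return pages


# Offline stand-in for two search-result pages (hypothetical markup on example.com).
FAKE_SITE = {
    "https://www.example.com/s?k=mouse&page=1":
        '<a class="s-pagination-item s-pagination-next" href="/s?k=mouse&amp;page=2">Next</a>',
    "https://www.example.com/s?k=mouse&page=2":
        '<span class="s-pagination-next s-pagination-disabled">Next</span>',
}
pages = crawl("https://www.example.com/s?k=mouse&page=1", FAKE_SITE.__getitem__, delay_s=0)
```

Passing `fetch` in keeps the crawler independent of the HTTP client, so proxies, rate limiting, or retries can be layered on without touching the crawl logic.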

Data Extraction

After the scraper crawls through the Amazon pages, the next step is data extraction. This is where the scraper identifies and pulls specific pieces of information from the website’s HTML structure. Here’s how it works:

- Parsing HTML: Each webpage on Amazon is made up of HTML code, which contains the data presented on the page, such as product titles, prices, and descriptions. Scrapers are programmed to recognize the structure of this HTML and locate the data points the user is interested in.

- Locating Data Points: The scraper looks for certain elements, tags, or classes within the HTML to extract the relevant data. For example:
  - Product titles are typically located within `<h1>` or `<h2>` tags.
  - Prices are often found within `<span>` or `<div>` elements marked with specific class names (e.g., “price”).
  - Reviews and ratings are found in dedicated sections of the HTML that the scraper is programmed to identify.

- Handling Dynamic Content: Amazon uses JavaScript to load some data dynamically. Advanced scrapers render these dynamic elements, typically in a headless browser, so that all relevant data is collected, whether it is hidden behind a click or loaded through scripts.

- Real-Time Data Extraction: Many scrapers operate in real time, pulling the current data from Amazon during the scraping session. The extracted information therefore reflects the pages as they appeared at that moment, although prices and stock levels can change soon afterward.

At this stage, the scraper has successfully gathered the necessary data from Amazon’s site and is ready to store it for the user.
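The parsing and locating steps can be sketched with Python’s standard-library `HTMLParser`. The selectors (`id="productTitle"` for the title, class `a-offscreen` for the price) and the US-dollar price format are assumptions about Amazon’s markup for illustration only, and the example parses a hand-written sample string rather than a real page. It does not render JavaScript, so dynamically loaded content would need a browser-based approach.

```python
from decimal import Decimal
from html.parser import HTMLParser

# Tags that never have a closing tag, so they must not change the nesting depth.
VOID_TAGS = {"area", "br", "col", "embed", "hr", "img", "input", "link", "meta", "source", "wbr"}


class ProductParser(HTMLParser):
    """Collects the text of elements matched by simple id/class selectors."""

    # Assumed selectors: Amazon's real markup may differ and changes over time.
    TARGETS = {
        "title": ("id", "productTitle"),
        "price": ("class", "a-offscreen"),
    }

    def __init__(self):
        super().__init__()
        self.fields = {}
        self._current = None  # name of the field currently being captured
        self._depth = 0       # open tags inside the captured element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._current is not None:
            self._depth += 1
            return
        attr_map = dict(attrs)
        for field, (attr, value) in self.TARGETS.items():
            # Keep only the first match per field; split() handles multi-class attributes.
            if field not in self.fields and value in (attr_map.get(attr) or "").split():
                self._current, self._depth = field, 1
                self.fields[field] = ""
                return

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or self._current is None:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace in the captured text.
            self.fields[self._current] = " ".join(self.fields[self._current].split())
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self.fields[self._current] += data


def parse_price(text):
    """Convert a US-style price string such as "$1,299.99" to Decimal (assumed format)."""
    return Decimal(text.replace("$", "").replace(",", ""))


sample_html = (
    '<div><h1><span id="productTitle">  Example   Widget </span></h1>'
    '<span class="a-price"><span class="a-offscreen">$19.99</span></span></div>'
)
parser = ProductParser()
parser.feed(sample_html)
parser.close()
product = {"title": parser.fields.get("title"), "price": parse_price(parser.fields["price"])}
```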

Data Output and Storage

Once the scraper has collected the data, the final step is output and storage. This involves saving the scraped data in a structured and easily accessible format. The most common formats include:

- CSV (Comma-Separated Values)

- JSON (JavaScript Object Notation)

- Excel

- Databases
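As a sketch of the output step, the example below writes the same hypothetical records to CSV, JSON, and an SQLite database using only the standard library. The field names, ASINs, and the `products` table schema are illustrative assumptions, and in-memory buffers stand in for real files. A CSV file can be opened directly in Excel, while writing native `.xlsx` files would need a third-party library.

```python
import csv
import io
import json
import sqlite3

# Hypothetical scraped records; ASINs and prices are made up for illustration.
records = [
    {"asin": "B000000001", "title": "Example Widget", "price": 19.99},
    {"asin": "B000000002", "title": "Sample Gadget", "price": 5.49},
]


def to_csv(rows, fileobj):
    """Write a list of dicts as CSV with a header row."""
    writer = csv.DictWriter(fileobj, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)


def to_json(rows, fileobj):
    """Write a list of dicts as a pretty-printed JSON array."""
    json.dump(rows, fileobj, indent=2, ensure_ascii=False)


def to_sqlite(rows, conn):
    """Insert rows into a products table, replacing rows that share an ASIN."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS products (asin TEXT PRIMARY KEY, title TEXT, price REAL)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO products (asin, title, price) VALUES (:asin, :title, :price)",
        rows,
    )
    conn.commit()


# In-memory targets so the example runs without touching the filesystem;
# use open("products.csv", "w", newline="") and similar for real files.
csv_buf = io.StringIO()
to_csv(records, csv_buf)
json_buf = io.StringIO()
to_json(records, json_buf)
conn = sqlite3.connect(":memory:")
to_sqlite(records, conn)
```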

Once the data is stored, it can be used for a variety of purposes, such as competitive analysis, pricing strategies, inventory management, or market research.

Best Practices for Using an Amazon Scraper

To effectively and ethically use an Amazon scraper, following a set of best practices is essential. These practices ensure not only the smooth functioning of your scraping tool but also help avoid legal issues and maintain a good relationship with Amazon’s platform. Here are the key best practices to keep in mind when scraping Amazon:

Respect Amazon’s Terms of Service

Before beginning any web scraping activities, it’s critical to read and understand Amazon’s Terms of Service (TOS). Amazon’s rules prohibit unauthorized scraping, so scraping the site without permission could expose you to serious legal consequences. It’s important to stay within the boundaries Amazon sets, which often means using its official APIs, such as the Product Advertising API, for legitimate data access.
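Terms of Service are a legal document and cannot be checked by code, but a site’s `robots.txt` file is a machine-readable statement of which paths it asks crawlers to avoid. The sketch below uses Python’s `urllib.robotparser` on a made-up `robots.txt` sample; the rules and the `example-research-bot` user agent are hypothetical. Passing this check does not by itself grant permission under Amazon’s TOS.

```python
from urllib.robotparser import RobotFileParser

# Sample robots.txt content for illustration only; a real check would load the
# site's actual file (for example with RobotFileParser.set_url() and read()).
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /gp/cart
Crawl-delay: 10
"""

USER_AGENT = "example-research-bot"  # hypothetical user agent name


def load_rules(robots_txt):
    """Parse robots.txt text into a RobotFileParser without any network access."""
    rp = RobotFileParser()
    rp.parse(robots_txt.splitlines())
    return rp


rules = load_rules(SAMPLE_ROBOTS_TXT)
cart_allowed = rules.can_fetch(USER_AGENT, "https://www.example.com/gp/cart/view.html")
product_allowed = rules.can_fetch(USER_AGENT, "https://www.example.com/dp/B000000001")
delay = rules.crawl_delay(USER_AGENT)  # seconds the sample file asks crawlers to wait
```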

Set Rate Limits

When scraping Amazon, it’s essential to limit the number of requests you send within a given time frame. Sending too many requests in a short period places unnecessary load on Amazon’s servers and makes your scraper far more likely to be detected and blocked. Rate limits slow requests down to a pace closer to human browsing.
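A minimal rate limiter, assuming a single-threaded scraper, only needs to remember when the last request went out and sleep until the minimum interval has passed. `RateLimiter` is a hypothetical class; the optional random jitter makes request timing less uniform.

```python
import random
import time


class RateLimiter:
    """Enforce a minimum delay (plus optional random jitter) between requests."""

    def __init__(self, min_interval_s, jitter_s=0.0):
        self.min_interval_s = min_interval_s
        self.jitter_s = jitter_s
        self._last = None  # time.monotonic() of the previous request

    def wait(self):
        """Block until the next request is allowed, then record its time."""
        if self._last is not None:
            target = self.min_interval_s + random.uniform(0, self.jitter_s)
            remaining = target - (time.monotonic() - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


limiter = RateLimiter(min_interval_s=0.05)
start = time.monotonic()
for _ in range(3):
    limiter.wait()  # the first call returns immediately; later calls sleep
elapsed = time.monotonic() - start
```

In a scraper, calling `limiter.wait()` immediately before each request is enough. Interval values such as `RateLimiter(min_interval_s=3.0, jitter_s=2.0)` are placeholders to tune, not known-safe limits for Amazon.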

Regularly Test and Update Scraping Scripts

Amazon frequently updates its website structure, which can cause scraping scripts to break or malfunction. As a result, it’s critical to regularly test and update your scraping scripts to ensure they continue functioning properly. If your scraper stops working due to changes in Amazon’s site layout, you’ll need to adjust the code to reflect the new HTML structure or use updated versions of your scraping tool.
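One way to catch layout changes early is to keep saved HTML snapshots alongside the expected values and rerun the extractor against them on a schedule. The sketch below assumes a deliberately simple regex extractor keyed on `id="productTitle"` (an assumption about Amazon’s markup) and two hand-written snapshots; the `new_layout` snapshot simulates a redesign, so the check reports one failure. `extract_title`, `FIXTURES`, and `run_fixture_checks` are hypothetical names.

```python
import re


def extract_title(html):
    """Minimal extractor: text of the element with id="productTitle", or None."""
    match = re.search(r'id="productTitle"[^>]*>(.*?)<', html, re.S)
    return match.group(1).strip() if match else None


# Saved snapshots paired with the value the extractor should return
# (hand-written sample markup, not real Amazon pages).
FIXTURES = {
    "old_layout": ('<span id="productTitle"> Example Widget </span>', "Example Widget"),
    "new_layout": ('<span data-testid="title">Example Widget</span>', "Example Widget"),
}


def run_fixture_checks(extract, fixtures):
    """Return a human-readable message for every snapshot the extractor gets wrong."""
    failures = []
    for name, (html, expected) in fixtures.items():
        got = extract(html)
        if got != expected:
            failures.append(f"{name}: expected {expected!r}, got {got!r}")
    return failures


failures = run_fixture_checks(extract_title, FIXTURES)
```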

Keep Data Storage Secure

When scraping large volumes of data from Amazon, it’s essential to ensure that the storage and handling of the data are secure. Whether storing the scraped data in CSV, JSON, or databases, make sure to follow proper data security protocols, such as encryption and access controls. This is particularly important if the scraped data is being used for business analytics or market research.
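Encryption at rest usually relies on a third-party library or a database feature, but basic access control can be shown with the standard library. This POSIX-oriented sketch creates the output file with owner-only permissions (`0o600`) from the start, rather than tightening them after the data is written; `write_private_json` and the file name are hypothetical, and Windows largely ignores these mode bits.

```python
import json
import os
import stat
import tempfile


def write_private_json(path, records):
    """Create path with owner-only read/write permissions and write records as JSON.

    O_EXCL makes the call fail if the file already exists, so an existing file
    with looser permissions is never reused by accident.
    """
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_EXCL, 0o600)
    with os.fdopen(fd, "w", encoding="utf-8") as f:
        json.dump(records, f)


out_dir = tempfile.mkdtemp()
out_path = os.path.join(out_dir, "prices.json")
write_private_json(out_path, [{"asin": "B000000001", "price": 19.99}])
mode = stat.S_IMODE(os.stat(out_path).st_mode)  # expected 0o600 on POSIX systems
```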

Avoid Scraping Personal Information

Scraping personal information, such as customer emails, contact details, or private messages, is a serious violation of Amazon’s TOS and can lead to legal action under data privacy laws like the General Data Protection Regulation (GDPR). When using an Amazon scraper, focus on public data such as product listings, reviews, prices, and rankings, and keep in mind that even public review data includes reviewer names, which the GDPR still treats as personal data.
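An allowlist is one way to enforce this in code: keep only the fields your analysis needs and drop everything else, including reviewer identities. The field names below are hypothetical, and an allowlist only covers structured fields; free-text review bodies can still contain personal details.

```python
# Allowlist of review fields kept for analysis (hypothetical field names).
ALLOWED_REVIEW_FIELDS = {"asin", "rating", "review_title", "review_text", "review_date"}


def strip_personal_fields(review):
    """Return a copy of a review dict containing only allowlisted fields."""
    return {key: value for key, value in review.items() if key in ALLOWED_REVIEW_FIELDS}


# Sample input with made-up values, including fields that identify the reviewer.
raw_review = {
    "asin": "B000000001",
    "rating": 4,
    "review_title": "Works as described",
    "review_text": "Sturdy and easy to set up.",
    "review_date": "2024-03-01",
    "reviewer_name": "Jane D.",
    "reviewer_profile_url": "https://www.example.com/profile/abc",
}
clean_review = strip_personal_fields(raw_review)
```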

Amazon scrapers can be powerful, but using them responsibly is key to avoiding legal pitfalls and keeping your data extraction efforts sustainable. By following these best practices, you can maximize the benefits of scraping while minimizing risks.

Challenges of Amazon Scraping

While Amazon scraping can provide invaluable insights into market trends, product performance, and competitor analysis, it comes with its own set of challenges. These obstacles often stem from Amazon’s security measures designed to prevent unauthorized scraping and ensure the platform’s stability. Below are some of the most common challenges associated with scraping Amazon and how to address them:

IP Blocking

IP blocking is one of the most common anti-scraping techniques used by Amazon to protect its site from automated bots. When Amazon’s system detects multiple requests originating from the same IP address in a short period, it may flag the activity as suspicious and block the IP. This prevents scrapers from continuing to gather data.
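A scraper should also notice when it is being throttled and slow down rather than keep retrying at full speed. The sketch below assumes blocks show up as HTTP 429 or 503 responses (a site may instead return a normal page containing a CAPTCHA) and applies exponential backoff; `fetch_with_backoff` is a hypothetical helper, and the fetch and sleep functions are injected so the example runs offline.

```python
import time

# Assumption: a block or throttle shows up as one of these HTTP status codes.
BLOCK_STATUSES = {429, 503}


def fetch_with_backoff(fetch, url, max_retries=4, base_delay_s=5.0, sleep=time.sleep):
    """Call fetch(url) -> (status, body), backing off exponentially while blocked.

    Waits base_delay_s, 2 * base_delay_s, 4 * base_delay_s, ... between attempts
    and raises RuntimeError if every attempt looks blocked.
    """
    for attempt in range(max_retries + 1):
        status, body = fetch(url)
        if status not in BLOCK_STATUSES:
            return status, body
        if attempt < max_retries:
            sleep(base_delay_s * 2 ** attempt)
    raise RuntimeError(f"still blocked after {max_retries + 1} attempts: {url}")


# Offline demo: two simulated 503 responses, then a success; waits are recorded, not slept.
_responses = iter([(503, ""), (503, ""), (200, "<html>ok</html>")])
waits = []
result = fetch_with_backoff(lambda url: next(_responses), "https://www.example.com/dp/B000000001",
                            base_delay_s=1.0, sleep=waits.append)
```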

To resolve this issue, scrapers commonly use rotating proxies. A rotating proxy service distributes your requests across many IP addresses, making it harder for Amazon to detect and block your activity. Some advanced scraping tools let users integrate rotating proxies directly, helping to avoid IP bans.
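Round-robin proxy rotation can be sketched with the standard library’s `urllib.request.ProxyHandler`. The proxy URLs and credentials are placeholders, not real endpoints, and `rotating_openers` is a hypothetical helper; managed rotating proxy services often perform the rotation on their side behind a single gateway address instead.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; a proxy provider supplies real hosts, ports, and credentials.
PROXY_URLS = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]


def rotating_openers(proxy_urls):
    """Yield (proxy_url, opener) pairs, cycling through the proxies round-robin."""
    for proxy_url in itertools.cycle(proxy_urls):
        handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
        yield proxy_url, urllib.request.build_opener(handler)


openers = rotating_openers(PROXY_URLS)
used = [next(openers)[0] for _ in range(4)]  # building openers makes no network calls
```

Each opener would then be used as `opener.open(url, timeout=10)`. Rotation spreads requests across addresses, but it does not replace rate limits.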

CAPTCHA Challenges

Another common roadblock in Amazon scraping is the use of CAPTCHAs. CAPTCHAs are designed to determine whether the user interacting with the site is a human or a bot. When scraping bots make multiple requests or exhibit suspicious behavior, Amazon may deploy CAPTCHAs to interrupt the scraping process. CAPTCHAs can stop automated scrapers in their tracks, as bots are typically unable to solve them without human intervention.

To overcome CAPTCHA challenges, several tools and services are available, such as CAPTCHA solvers or anti-CAPTCHA services. These services automatically solve CAPTCHAs, allowing the scraper to continue working without disruption. Some advanced scraping platforms have built-in features that can handle CAPTCHAs.
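Whichever approach is used, the scraper first has to recognize that it received a CAPTCHA page instead of the requested content; otherwise it silently parses the challenge page as if it were product data. The sketch below only detects the page and stops, so the job can pause instead of retrying. The marker strings are assumptions about Amazon’s challenge page and should be checked against pages you actually receive; `looks_like_captcha`, `check_page`, and `CaptchaEncountered` are hypothetical names.

```python
# Assumed markers of a CAPTCHA interstitial (lowercase); verify them against real responses.
CAPTCHA_MARKERS = (
    "/errors/validatecaptcha",
    "enter the characters you see below",
)


class CaptchaEncountered(Exception):
    """Raised so the caller can pause the job instead of retrying immediately."""


def looks_like_captcha(html):
    """Case-insensitive check for any known CAPTCHA marker in the page."""
    lowered = html.lower()
    return any(marker in lowered for marker in CAPTCHA_MARKERS)


def check_page(url, html):
    """Return html unchanged, or raise CaptchaEncountered if it is a challenge page."""
    if looks_like_captcha(html):
        raise CaptchaEncountered(url)
    return html
```

A caller might catch `CaptchaEncountered`, log the URL, and pause the whole job before resuming at a lower request rate.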

Data Consistency Issues

Amazon frequently updates its website layout and underlying HTML structure. These changes can lead to data consistency issues, where scraping scripts that were previously working suddenly break because the data is no longer located in the same place within the HTML code. If a scraper is not updated to account for these changes, it may extract incorrect or incomplete data, rendering the results useless.

The solution is to regularly maintain and update your scraping scripts to adapt to changes in Amazon’s HTML structure. This involves periodically checking if Amazon has made any significant updates to its page layouts and adjusting your scraping logic accordingly. For users who prefer not to handle these technical updates manually, many scraping tools offer automated updates or have communities that share updated templates for Amazon scraping.
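A lightweight runtime check complements script maintenance: validate each extracted record and flag the batch when too many records fail, which usually means the page structure changed. The required fields, the US-dollar price pattern, the 20% threshold, and the function names below are illustrative assumptions.

```python
import re

# Assumptions for illustration: which fields must be present and a US-dollar price format.
REQUIRED_FIELDS = ("title", "price")
PRICE_RE = re.compile(r"^\$[\d,]+(\.\d{2})?$")


def validate(record):
    """Return a list of problems found in one scraped record (empty if it looks fine)."""
    problems = [f"missing {field}" for field in REQUIRED_FIELDS if not record.get(field)]
    price = record.get("price")
    if price and not PRICE_RE.match(price):
        problems.append(f"unexpected price format {price!r}")
    return problems


def layout_probably_changed(records, max_bad_ratio=0.2):
    """Flag a batch when more than max_bad_ratio of its records fail validation."""
    if not records:
        return False
    bad = sum(1 for record in records if validate(record))
    return bad / len(records) > max_bad_ratio
```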

On the bright side, NetNut proxies can help address these challenges proactively: they reduce IP blocks and the CAPTCHAs that heavy traffic from a single IP tends to trigger, while data consistency still depends on maintaining your scraping scripts. Combined with the best practices above, this keeps your scraping efforts efficient and reliable, helping you gain valuable insights into market trends, competitor pricing, and product performance.

Resolving Amazon Scraping Challenges with NetNut Proxies

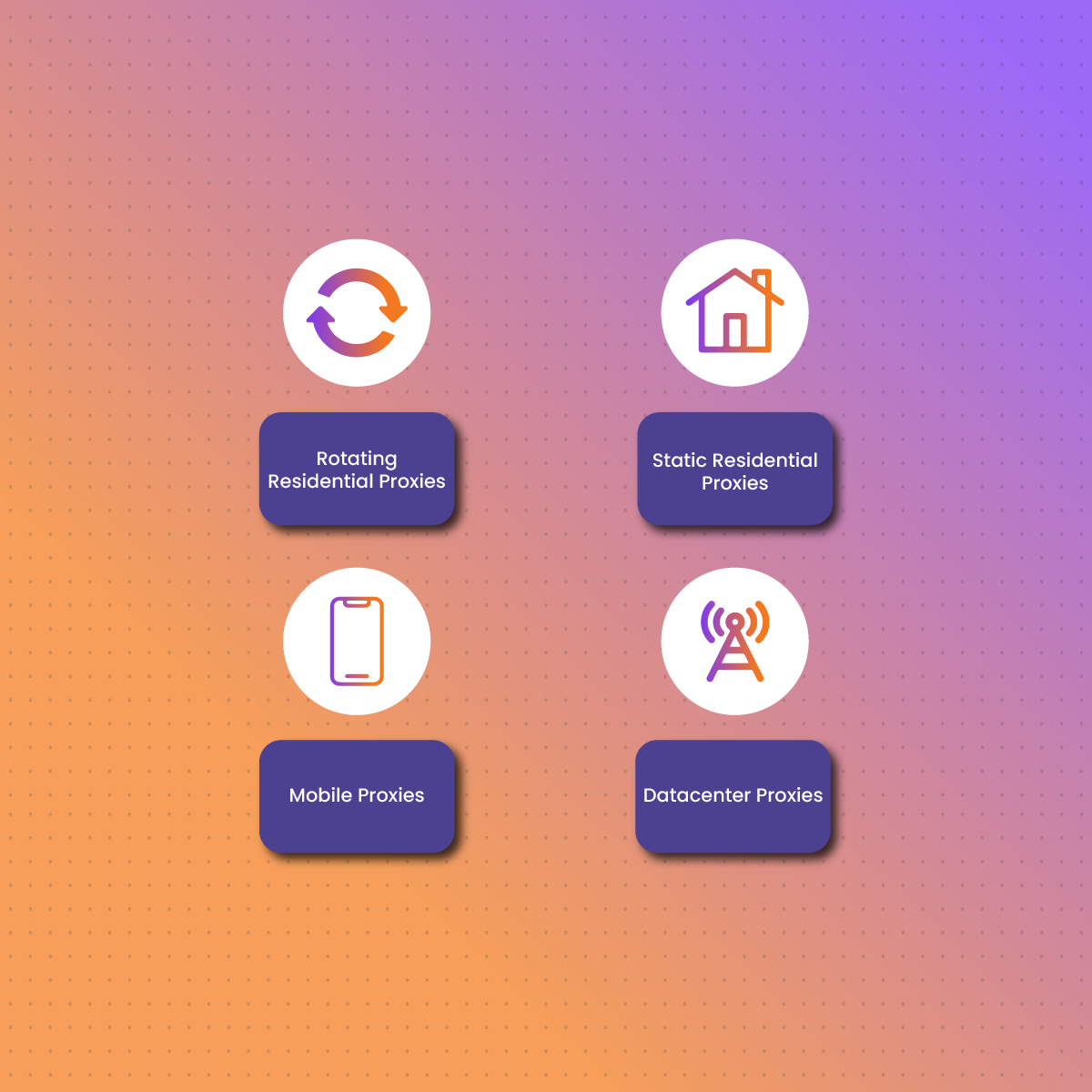

When it comes to scraping data from Amazon, using a reliable proxy service is crucial to ensure smooth and uninterrupted data collection. NetNut proxies stand out as a highly effective solution for this purpose. NetNut offers several types of proxies to help address common Amazon scraping issues. Here’s a breakdown of these proxies:

Rotating Residential Proxies

Rotating residential proxies use IP addresses that Internet Service Providers (ISPs) assign to real residential connections, and they switch to a different IP address periodically or per request. Because their traffic looks like that of regular users, these proxies are highly effective for bypassing geo-restrictions and avoiding detection. For Amazon scraping, residential proxies help achieve a high level of anonymity and reduce the chances of IP bans.

Mobile Proxies

Mobile proxies use IP addresses that mobile carriers assign to devices on their networks. Carriers typically share each of these addresses among many real users, so websites are reluctant to block them, which makes mobile proxies less likely to be flagged. For Amazon scraping, mobile proxies can provide enhanced anonymity and access to data that might be restricted to mobile users.

Static Residential Proxies

Static residential proxies offer a fixed IP address associated with a residential location. This type of proxy provides the benefits of residential proxies with the added stability of an unchanging IP address, which is advantageous for tasks that need the same IP address for the duration of a session.

Datacenter Proxies

Datacenter proxies are hosted on servers in data centers and are often faster and more affordable than residential proxies. While websites may detect them more easily because of their data center origin, they offer high-speed performance and suit scraping tasks that require rapid data extraction. Integrating datacenter proxies with your Amazon scraper can be a cost-effective solution for high-volume data scraping.

Conclusion

In summary, Amazon scraping continues to be an invaluable asset for businesses seeking a competitive edge in the ever-evolving e-commerce space. When executed correctly, it offers insights that can drive smarter decision-making, strengthen market strategies on Amazon, and optimize product offerings.

While challenges such as IP blocking, CAPTCHAs, and data consistency need to be addressed, the benefits of using an Amazon scraper far outweigh them. By employing advanced tools and adhering to best practices, businesses can navigate these challenges effectively and leverage Amazon’s vast data resources to their advantage.

On a final note, Amazon scraping, when performed with precision and compliance, not only enhances operational efficiency but also positions businesses to thrive in a competitive market. It’s a strategic approach that, if utilized thoughtfully, can lead to significant business growth and success.

Frequently Asked Questions and Answers

Can Amazon block me for scraping?

Yes, Amazon can block your IP address if it detects scraping activity. To minimize the risk of being blocked, it’s important to use techniques like rotating proxies and setting appropriate request limits.

Do I need coding skills to use a scraper?

It depends on the tool you choose. Some scraping tools, such as NetNut, are designed for users with no coding experience and offer a user-friendly interface, while writing or customizing your own scraping scripts does require programming knowledge.

What data can be scraped from Amazon?

Common data points that can be scraped from Amazon include product details (such as descriptions and specifications), prices, reviews, sales rankings, and seller information.