Machine learning is only as powerful as the data it learns from. While algorithms and model architectures often get the spotlight, the dataset—the raw material that powers machine learning—plays an equally critical role. In fact, a high-quality dataset can make the difference between a mediocre AI model and one that delivers real-world results.

In today’s data-driven world, the ability to source, structure, and scale training data has become a competitive advantage. That’s especially true when developing models for natural language processing, computer vision, or decision-making systems. But sourcing the right dataset isn’t always straightforward. From finding domain-specific content to accessing real-time or geo-restricted information, developers and data scientists often face major hurdles.

This is where NetNut steps in. As a global provider of residential, mobile, and rotating proxies, NetNut empowers AI teams to collect clean, diverse, and scalable training data from the web—ethically and efficiently. Whether you’re building a sentiment classifier or fine-tuning a large language model, the right dataset (and the right access to it) is key.

What Is a Dataset in Machine Learning?

In machine learning, a dataset is a structured collection of data used to train, validate, and test models. Each entry in a dataset represents an instance or observation that the algorithm will learn from, such as a sentence, an image, or a numeric feature set, depending on the use case.

A dataset typically includes:

- Features (Inputs): The variables or raw data used to make predictions (e.g., text, pixels, numbers).

- Labels (Targets): The desired output the model is trained to predict (e.g., a category, sentiment, or value).

- Metadata: Information about the data source, timestamps, user information, or location.
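
As a rough illustration of how these three parts fit together, here is a minimal sketch of a single dataset entry. The `Example` class, its field names, and the sample URL are hypothetical and chosen only for this illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Example:
    """One dataset entry: features, an optional label, and metadata."""
    features: dict                      # inputs the model sees (text, pixels, numbers)
    label: Optional[str] = None         # target to predict; None for unlabeled data
    metadata: dict = field(default_factory=dict)  # source, timestamp, location, etc.


# A labeled entry for a sentiment task (all values are made up).
example = Example(
    features={"text": "The battery lasts all day."},
    label="positive",
    metadata={"source_url": "https://example.com/review/1", "language": "en"},
)
print(example.label)
```

Leaving `label` as `None` lets the same structure hold unlabeled entries.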

Machine learning datasets can be:

- Labeled (Supervised Learning): Where each data point is tagged with a correct output.

- Unlabeled (Unsupervised Learning): Where the model finds patterns without prior annotations.

- Structured or Unstructured: Depending on the format—structured data fits neatly into rows and columns; unstructured data includes free-form text, audio, or images.

If you’re building datasets from online sources (news sites, forums, product pages), NetNut’s proxy solutions allow you to gather diverse, authentic data without interruptions—ensuring your models are trained on robust, real-world input.

Types of Machine Learning Datasets

There isn’t a one-size-fits-all dataset in machine learning. The type of dataset you need depends on the learning approach and the task at hand. Here’s how they break down:

Supervised Learning Datasets

These datasets include both inputs and labeled outputs. The model learns to predict labels based on input data.

Examples:

- Sentiment-labeled reviews (text → positive/negative)

- Image classification (image → “cat” or “dog”)

- Predicting customer churn (user activity → churned/not churned)

Unsupervised Learning Datasets

These datasets contain unlabeled data, used to identify hidden patterns, groupings, or structures.

Examples:

- Clustering customer behavior

- Topic modeling in large text corpora

- Dimensionality reduction of numeric data

Reinforcement Learning Datasets

These are sequences of states, actions, and rewards, where the model learns by interacting with an environment.

Examples:

- Game AI learning strategies from trial and error

- Robotics tasks like grasping or walking
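
A minimal sketch of what such a sequence could look like in code, assuming a toy grid environment. The `Transition` type, the states, and the reward values are hypothetical.

```python
from typing import NamedTuple


class Transition(NamedTuple):
    """One step of interaction: where the agent was, what it did, what it earned."""
    state: tuple
    action: str
    reward: float


# One short episode in a made-up grid world; the goal is reached on the last step.
episode = [
    Transition(state=(0, 0), action="right", reward=0.0),
    Transition(state=(1, 0), action="right", reward=0.0),
    Transition(state=(2, 0), action="up", reward=1.0),
]

# The total reward over the episode is one simple signal the agent can learn from.
total_reward = sum(step.reward for step in episode)
print(total_reward)
```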

Semi-Supervised and Self-Supervised Learning

- Semi-Supervised: Combines a small labeled dataset with a large volume of unlabeled data.

- Self-Supervised: Uses intrinsic patterns in the data to generate labels automatically (e.g., predicting missing words in sentences).
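
To make the self-supervised idea concrete, the sketch below turns one unlabeled sentence into several (input, target) pairs by hiding one word at a time. The function name and the `[MASK]` token are assumptions for illustration; real pipelines typically mask tokens at random rather than every position.

```python
def masked_examples(sentence, mask_token="[MASK]"):
    """Build (masked_sentence, hidden_word) pairs from one unlabeled sentence."""
    words = sentence.split()
    examples = []
    for i, target in enumerate(words):
        # Replace the word at position i with the mask token.
        masked = words[:i] + [mask_token] + words[i + 1:]
        examples.append((" ".join(masked), target))
    return examples


pairs = masked_examples("data drives models")
for masked, target in pairs:
    print(masked, "->", target)
```

No human annotation is involved: the label for each pair is simply the word that was hidden.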

Components of a High-Quality AI Dataset

Not all datasets are created equal. The quality of your machine learning dataset will have a direct impact on the model’s accuracy, generalization, and ethical behavior. Here are the core attributes that define a high-quality AI dataset:

Relevance

The data must be closely aligned with the problem you’re solving. For example, if you’re training a financial fraud detector, data from healthcare systems won’t help much.

Volume and Diversity

Larger datasets with a wide variety of samples improve a model’s ability to generalize. This includes variation in:

- Language or dialect (for NLP)

- Visual contexts (for computer vision)

- User demographics or locations (for personalization)

Accuracy of Labels

If you’re using supervised learning, the labels must be reliable and consistently applied. Poor labeling can mislead the model and reduce performance.
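
One simple way to spot inconsistent labeling is to look for identical inputs that received different labels. The following is a minimal sketch under that assumption; the function name and sample rows are hypothetical.

```python
from collections import defaultdict


def find_conflicting_labels(rows):
    """Return texts that appear with more than one distinct label.

    `rows` is an iterable of (text, label) pairs. Texts are compared
    case-insensitively after trimming whitespace.
    """
    labels_by_text = defaultdict(set)
    for text, label in rows:
        labels_by_text[text.strip().lower()].add(label)
    return {text: labels for text, labels in labels_by_text.items() if len(labels) > 1}


rows = [
    ("Great phone", "positive"),
    ("great phone", "negative"),   # same text, different label
    ("Slow shipping", "negative"),
]
conflicts = find_conflicting_labels(rows)
print(conflicts)
```

This only catches exact repeats; near-duplicates with conflicting labels would need fuzzier matching.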

Cleanliness

Noise, duplicates, missing values, and irrelevant text/images degrade model performance. Clean data = clean learning.
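
A minimal sketch of two of these checks, dropping rows with missing text and exact duplicates. It assumes each row is a dictionary with a `text` field; the function name and sample rows are hypothetical.

```python
def drop_missing_and_duplicates(rows, text_key="text"):
    """Keep the first occurrence of each non-empty text, ignoring case and outer whitespace."""
    seen = set()
    kept = []
    for row in rows:
        text = (row.get(text_key) or "").strip()
        if not text:
            continue  # missing or empty value
        key = text.lower()
        if key in seen:
            continue  # duplicate of an earlier row
        seen.add(key)
        kept.append(row)
    return kept


rows = [
    {"text": "Great phone"},
    {"text": "great phone "},   # duplicate after normalization
    {"text": None},             # missing value
    {"text": ""},               # empty value
    {"text": "Slow shipping"},
]
clean_rows = drop_missing_and_duplicates(rows)
print(len(rows), "->", len(clean_rows))
```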

Freshness

In fast-moving domains like news, finance, or eCommerce, stale data leads to outdated predictions. A dataset that reflects the current environment is far more valuable.

Popular Datasets for Machine Learning Projects

If you’re just getting started or looking to benchmark your model, here are some well-known, open-source machine learning datasets grouped by application:

Image & Computer Vision

- MNIST – Handwritten digit images (great for beginners)

- CIFAR-10 / CIFAR-100 – Labeled images of objects across multiple categories

- ImageNet – Massive image dataset used for large-scale vision tasks

Text & Natural Language Processing

- IMDB – Sentiment-labeled movie reviews

- SQuAD – Stanford Question Answering Dataset

- CoNLL-2003 – Named entity recognition dataset (PER, LOC, ORG)

Audio & Speech Recognition

- LibriSpeech – Audiobook recordings for speech-to-text tasks

- Common Voice (Mozilla) – Crowdsourced multilingual voice dataset

Structured & Tabular Data

- UCI Machine Learning Repository – Diverse collection of datasets for regression, classification, etc.

- Titanic Dataset (Kaggle) – Predict survival outcomes based on passenger info

- Credit Card Fraud Detection – Often used for anomaly detection and classification

These datasets are helpful for research and learning, but they may not reflect your unique business needs or data requirements. That’s when it’s time to build your own.

Where to Find Datasets for Machine Learning

If you’re not building a dataset from scratch, there are many reputable sources for ready-to-use machine learning datasets. Here’s where to look:

Public Repositories

- Kaggle – Offers thousands of free datasets, often with accompanying notebooks.

- Hugging Face Datasets – NLP-focused hub with plug-and-play integrations.

- UCI Machine Learning Repository – Academic, classic datasets across multiple tasks.

Government & Open Data Portals

- Data.gov (USA)

- EU Open Data Portal

- World Bank Open Data

These portals are great for economic, environmental, and demographic data.

Academic & Research Organizations

- Stanford, MIT, and Berkeley often publish datasets with research papers.

The Web (Custom Scraping)

When public datasets don’t fit the bill, custom scraping is the solution:

- News websites for NLP summarization

- Reddit or Quora for sentiment and opinion mining

- Product pages for building recommendation models

- Legal or financial sites for industry-specific AI

Creating Custom AI Datasets via Web Scraping

While public datasets are useful, they often fall short when it comes to niche domains, industry-specific use cases, or real-time applications. That’s why many teams opt to build custom AI datasets by collecting relevant data directly from the web.

Why Create Your Own Dataset?

- Public datasets may be outdated or irrelevant

- You need data for a low-resource language or underrepresented industry

- You want your AI model to reflect your users, not a generic audience

- Real-time use cases (e.g., stock news, trending products) demand fresh inputs

Data Sources to Scrape:

- News sites (for summarization, sentiment, event detection)

- Social media and forums (for opinion mining, user intent)

- eCommerce platforms (for product descriptions and reviews)

- Legal or technical blogs (for question-answering systems)

- Company websites (for training domain-specific LLMs)

Scraping Tools:

- Scrapy – Powerful framework for large-scale crawls

- Playwright / Puppeteer – For interacting with dynamic JavaScript content

- BeautifulSoup – Great for lightweight HTML parsing

Structuring and Formatting ML Datasets

Once your data is collected—whether from open sources or your own web scrapers—it must be structured in a way that machine learning models can interpret and use efficiently.

Common File Formats:

- CSV/TSV: Widely used for tabular data (structured rows and columns)

- JSON / JSONL: Ideal for NLP tasks, supporting nested data and key-value pairs

- Parquet / Feather: Efficient for large-scale, columnar storage

- TFRecords: TensorFlow’s optimized format for high-performance model training

Best Practices for Structuring Datasets:

- Organize data with clear input/output mappings (e.g., text → label)

- Include metadata such as source_url, language, or timestamp

- Standardize label formats (e.g., choose either “positive”/“negative” or “pos”/“neg” and use it everywhere)

- Break long texts into manageable chunks for training (especially for LLMs)
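
The sketch below shows one way these practices could be combined: it standardizes labels, splits long texts into word-based chunks, and writes one JSON object per line (JSONL). The `LABEL_MAP` values, field names, and chunk size are assumptions for illustration, not a required schema.

```python
import json

# Hypothetical mapping from label variants to one canonical form.
LABEL_MAP = {
    "pos": "positive",
    "positive": "positive",
    "neg": "negative",
    "negative": "negative",
}


def chunk_text(text, max_words=200):
    """Split text into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def to_jsonl(records, max_words=200):
    """Serialize records as JSONL with standardized labels and chunked text."""
    lines = []
    for rec in records:
        label = LABEL_MAP[rec["label"].strip().lower()]
        for chunk in chunk_text(rec["text"], max_words):
            row = {"text": chunk, "label": label, "source_url": rec.get("source_url")}
            lines.append(json.dumps(row))
    return "\n".join(lines)


records = [{"text": "a b c d e", "label": "POS", "source_url": "https://example.com/1"}]
jsonl = to_jsonl(records, max_words=2)
print(jsonl)
```

Chunking by word count is a simplification; for LLM training, chunk sizes are usually measured in tokens from the model's own tokenizer.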

Annotation Tools (For Labeled Datasets):

- Label Studio

- Doccano

- Prodigy

Common Dataset Pitfalls (And How to Avoid Them)

A poorly constructed dataset can derail even the most advanced machine learning models. Here are the most frequent issues—and how to avoid them.

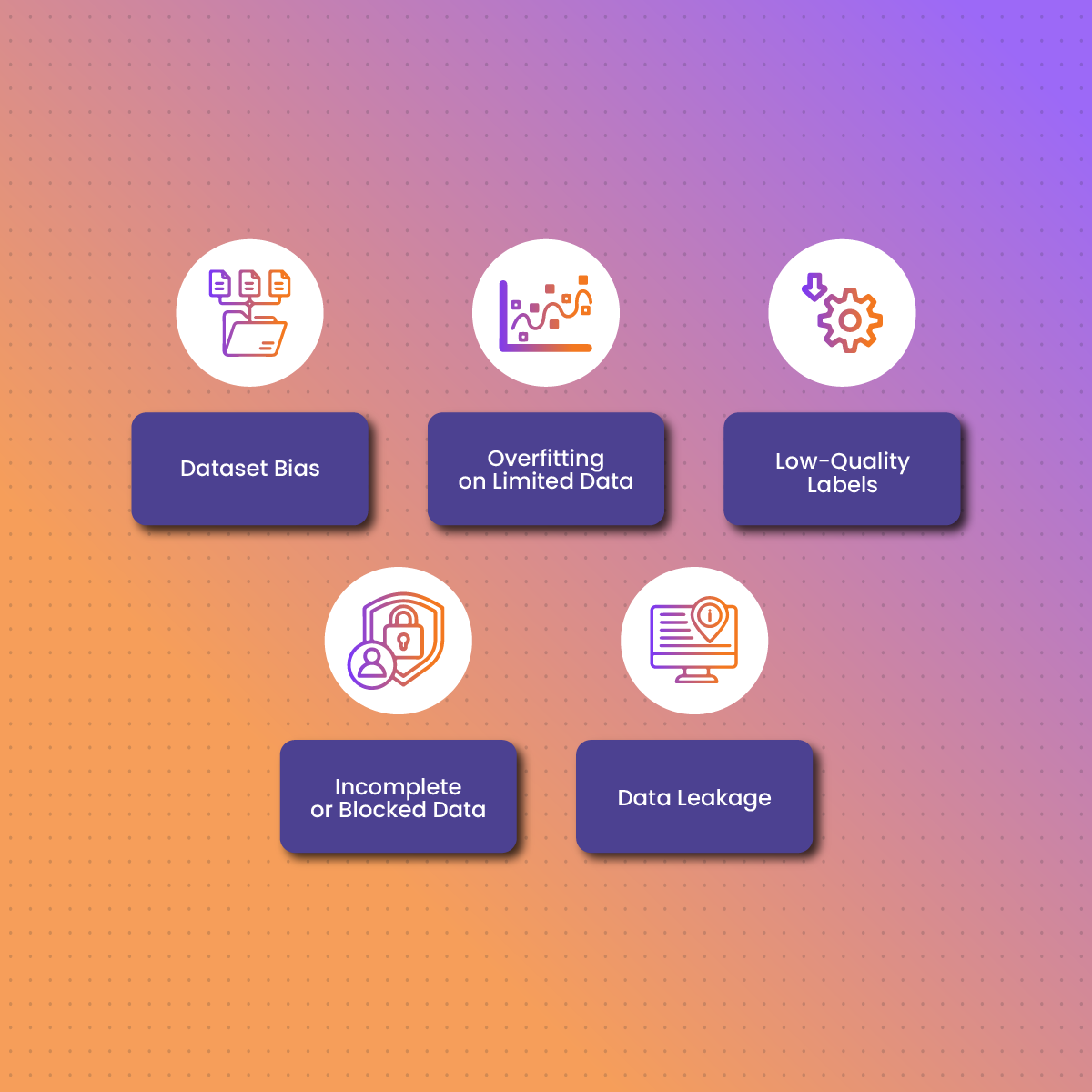

1. Dataset Bias

If your training data lacks diversity, your model will develop blind spots or reinforce harmful biases.

Solution: Collect data from multiple sources and regions. Use NetNut’s geo-targeted proxies to gather more representative content.

2. Overfitting on Limited Data

Relying on a small or repetitive dataset causes your model to perform well in training but fail in real scenarios.

Solution: Increase volume and variation using rotating proxies to scale scraping across the web.

3. Low-Quality Labels

Inconsistent or incorrect labels reduce the value of supervised learning datasets.

Solution: Use clear annotation guidelines and reliable tools. Consider semi-supervised learning to reduce dependency on labeled data.

4. Incomplete or Blocked Data

Web scraping efforts often get blocked midway, returning incomplete pages or dummy content.

Solution: Use NetNut’s residential proxies or mobile proxies to avoid detection, access full-page loads, and maintain session persistence with sticky sessions.

5. Data Leakage

Including future or test data in the training set can result in misleading model accuracy.

Solution: Strictly separate training, validation, and test datasets. Monitor your pipeline for accidental overlap.
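
A minimal sketch of a split that guards against one common form of leakage, the same example landing in more than one split. It removes exact duplicates before shuffling and then checks for overlap. The function names, split fractions, and seed are assumptions; it does not address time-based leakage, which would need a split by date.

```python
import random


def split_dataset(items, val_frac=0.1, test_frac=0.1, seed=42):
    """Deduplicate, shuffle, and split items into train, validation, and test lists."""
    unique = list(dict.fromkeys(items))   # drop exact duplicates, keep first occurrence
    random.Random(seed).shuffle(unique)   # fixed seed for a reproducible split
    n_test = int(len(unique) * test_frac)
    n_val = int(len(unique) * val_frac)
    test = unique[:n_test]
    val = unique[n_test:n_test + n_val]
    train = unique[n_test + n_val:]
    return train, val, test


def find_overlap(a, b):
    """Return items that appear in both splits."""
    return set(a) & set(b)


texts = [f"doc {i}" for i in range(20)] + ["doc 0"]  # "doc 0" appears twice
train, val, test = split_dataset(texts)
print(len(train), len(val), len(test))
print(find_overlap(train, test), find_overlap(train, val), find_overlap(val, test))
```

Running `find_overlap` as a pipeline check after every rebuild of the dataset is a cheap way to catch accidental overlap early.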

The Role of Datasets in AI Model Performance

When building AI models, it’s tempting to focus solely on algorithms and architectures. But in reality, the dataset is often the most important factor in determining a model’s success or failure. A well-curated, balanced, and diverse dataset can outperform a sophisticated model trained on poor data.

Why Datasets Matter More Than You Think:

- Garbage In, Garbage Out: Even the best algorithms fail if trained on noisy, irrelevant, or biased data.

- Real-World Generalization: A model trained on varied, high-quality data is more likely to perform well in unpredictable environments.

- Bias and Fairness: A diverse dataset helps reduce ethical risks and improves the inclusivity of AI outputs.

- Training vs. Validation vs. Testing: Each dataset split has a different role. A good dataset helps ensure your model is learning—not just memorizing.

Ultimately, your model is only as good as the data you feed it. If you want to train AI systems that are reliable, adaptable, and production-ready, you need access to clean, scalable, and real-world data—which is exactly what NetNut helps you collect.

FAQs

What is the difference between a dataset and a database?

A dataset is a structured collection of data used for training or testing a model. A database is a system used to store, manage, and retrieve data—often in real time. You might build a dataset by exporting or querying data from a database.

How large should a machine learning dataset be?

It depends on the task. For small classification problems, thousands of samples may suffice. For training large language models, you may need billions of tokens. The key is quality and diversity, not just quantity.

Can I use scraped data for commercial machine learning?

In many cases, yes—but it depends on the source, jurisdiction, and intended use. Always check terms of service and consider legal consultation when scraping data for commercial purposes.

What proxy is best for collecting training data?

It depends on what you are collecting:

- Residential proxies (for human-like traffic)

- Mobile proxies (for mobile-optimized content)

- Rotating IPs (for large-scale scraping)

- Geo-targeted IPs (for multilingual or region-specific data)

NetNut offers all of these, optimized for AI and data teams.

How do I clean a dataset?

Use standard preprocessing: remove HTML tags, normalize text, filter out irrelevant content, and deduplicate entries. Use annotation tools to label data accurately, and split it into training, validation, and test sets.
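
As a rough sketch of the first two steps, the example below strips HTML tags and normalizes whitespace using only Python's standard library. The `clean_html` function and `_TextExtractor` class are hypothetical names; dedicated parsers such as BeautifulSoup handle messy real-world markup more robustly.

```python
import re
import unicodedata
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collect visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def clean_html(raw):
    """Remove tags, normalize Unicode, and collapse runs of whitespace."""
    parser = _TextExtractor()
    parser.feed(raw)
    parser.close()
    # Joining with spaces keeps words in adjacent tags apart, but it can also
    # split a word that is partly wrapped in inline markup.
    text = " ".join(parser.parts)
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


print(clean_html("<p>Hello <b>world</b></p><script>x = 1</script>"))
```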