Introduction

Artificial intelligence (AI) is a giant leap in the evolution of technology. While it can automate tasks that improve many aspects of human life, it can quickly become a burden in others. As AI bots, crawlers, and scrapers become more prevalent than ever, many website managers are constantly looking for ways to protect their data and the integrity of their sites’ performance.

Although some AI companies clearly identify their web scraping tools, others are less transparent. A recent example is the accusation that Perplexity impersonated human users in order to scrape data. Meanwhile, the value of up-to-date data keeps rising.

So, how can websites gain independence from AI bots, scrapers, and crawlers? Cloudflare has launched a new feature that allows users to block all AI bots with a single click.

Introduction to Cloudflare Anti-Bot Solution

Before we dive into Cloudflare’s one-click solution for achieving “AIndependence,” let us examine some bot statistics.

Here are some findings from Cloudflare’s analysis of AI bot traffic across its network that show the scale of the problem:

- Bytespider, operated by TikTok’s parent company ByteDance, leads in both the number of requests and the number of internet properties it crawls.

- GPTBot, owned by OpenAI, ranks second in both crawling activity and how often it is blocked by websites’ anti-bot mechanisms.

- The AI bots that send the highest number of requests to websites are Bytespider, GPTBot, Amazonbot, and ClaudeBot.

- Popular websites draw large volumes of human traffic, which makes them an ideal target for AI bots. As a result, these websites are more likely to implement robust anti-bot features.

- Although AI bots access 39% of the top one million internet properties using Cloudflare, only 2.98% of these properties actively block or challenge AI bot requests.

Cloudflare has been at the forefront of protecting websites from bots. Recently, it announced a button that lets users block all AI bots, both good and bad. An AI bot is generally considered good when it follows the website’s robots.txt file, does not collect the site’s data for retrieval-augmented generation (RAG), and is not trained on unlicensed content. Even though these good bots behave largely ethically, many Cloudflare users do not hesitate to block them.
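The robots.txt criterion is easiest to see from the bot’s side. Below is a minimal sketch of the check a well-behaved bot might run before each request, using Python’s standard `urllib.robotparser` module. The robots.txt content, the `allowed` helper and the `ExampleBot` user agent are all hypothetical. A real bot would load the live file from the site’s `/robots.txt` path with `read()` rather than parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: blocks OpenAI's GPTBot entirely and keeps
# every other crawler out of /private/.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /private/
"""


def allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts an iterable of lines, so no network request is made here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# A polite bot checks before every request and skips disallowed URLs.
for agent in ("GPTBot", "ExampleBot"):
    for url in ("https://example.com/blog/post", "https://example.com/private/data"):
        print(agent, url, "allowed" if allowed(agent, url) else "disallowed")
```

`can_fetch` matches rules against the product token, the part before the first "/" (for example `GPTBot`). Passing a full browser-style User-Agent string may therefore not select the intended rule group.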

Because it has become clear that many users do not want AI bots visiting their websites at all, Cloudflare added a button that blocks all AI bots. This feature is available to all customers, including those on the free plan.

Why Businesses Choose Cloudflare’s Solution

Here are some reasons why businesses choose Cloudflare:

Ease of use

Probably the biggest advantage of Cloudflare’s one-click solution is its ease of use. It does not require any technical expertise: once you are on the dashboard, you can activate it in fewer than five steps. In addition, it can be deployed rapidly, which significantly reduces the time and effort required to put robust anti-bot measures in place.

High-level protection

Cloudflare uses up-to-date machine learning training data to adapt to evolving threats and provide comprehensive protection against bots. It also provides real-time threat detection by continuously monitoring for suspicious activity. As a result, Cloudflare’s one-click solution is designed to respond to these threats immediately, ensuring optimal protection and data security.

Maintains optimal website performance

One of the biggest challenges of having AI bots access a website is that they can overload the server with requests. This can cause performance issues and a poor experience for human users.

Cloudflare’s one-click solution automates the blocking of AI bots, scrapers, and crawlers, which reduces the need for constant manual monitoring and blocking. It also helps to prevent server overload, minimize downtime, conserve bandwidth, and optimize resource usage for better website performance.

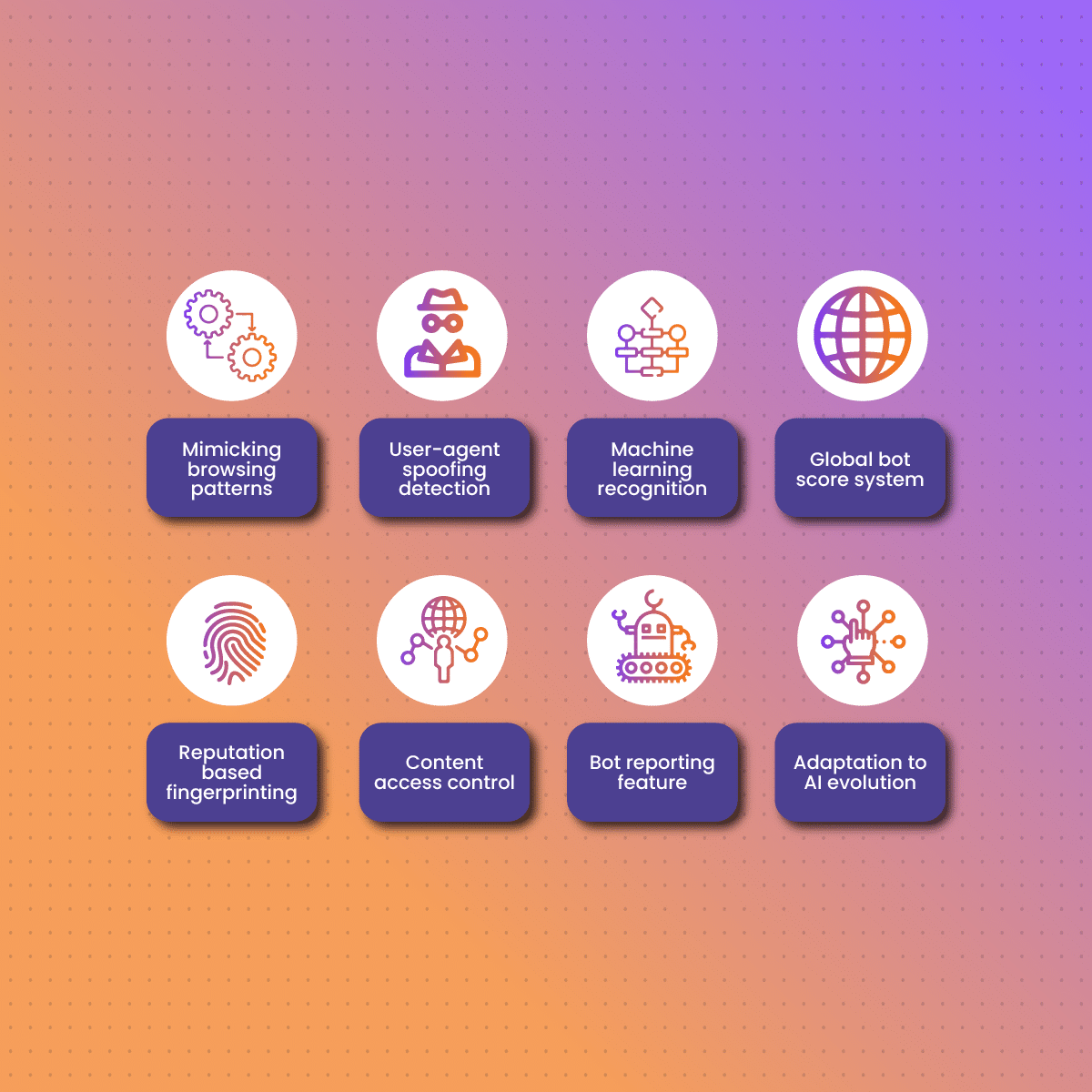

How does Cloudflare find AI bots mimicking human browsers?

Many bots are designed to evade AI bot blockers by mimicking the browsing patterns of human users. Spoofing the User-Agent header is a common technique that AI bots and web crawlers use to appear human. Cloudflare leverages a global machine learning model that can recognize this activity as bot traffic. With Cloudflare’s newest model, changing the user agent therefore does not stop an AI bot from being identified and blocked. That said, NetNut has robust features that ensure your web scraper has access to real-time data.
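A short sketch shows why a user-agent blocklist alone is not enough. The hypothetical `declares_ai_bot` function below flags requests whose User-Agent header contains one of the bot names listed earlier in this article. The example header strings are illustrative. The same client passes the check as soon as it sends a browser-like header. Behavior- and fingerprint-based detection is meant to close exactly that gap.

```python
# Illustrative AI crawler tokens, taken from the bot names listed earlier in
# this article. This is not a complete or authoritative blocklist.
AI_BOT_TOKENS = ("bytespider", "gptbot", "amazonbot", "claudebot")


def declares_ai_bot(user_agent: str) -> bool:
    """Return True if the User-Agent header self-identifies as a listed AI bot."""
    ua = user_agent.lower()
    return any(token in ua for token in AI_BOT_TOKENS)


# Illustrative headers: one that names the bot, one copied from a desktop browser.
honest = "Mozilla/5.0 (compatible; GPTBot/1.0; +https://openai.com/gptbot)"
spoofed = ("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
           "(KHTML, like Gecko) Chrome/126.0.0.0 Safari/537.36")

print(declares_ai_bot(honest))   # True: the bot announces itself and is caught
print(declares_ai_bot(spoofed))  # False: the same bot with a spoofed header gets through
```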

Cloudflare’s global bot score system can accurately detect traffic from AI bots. When malicious actors attempt to crawl websites at large scale, they rely on various tools and frameworks, which the system is able to identify and block.

The Cloudflare system aggregates many signals globally to determine the reputation of a fingerprint. Based on these signals, the system can correctly identify traffic from bots that mimic human users. This is possible because Cloudflare keeps updating the data it already holds on scraping tools and their behavior. In addition, no manual fingerprinting of individual bots is required, which keeps users protected as bot activity grows.
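Cloudflare does not publish its model. The following is therefore only a toy illustration of the general idea of aggregating signals per fingerprint across many sites; it is not Cloudflare’s method.

- Each hypothetical `Observation` pairs a client fingerprint with a per-request "looked automated" flag from some assumed local detector.
- A fingerprint’s reputation is simply the share of its observations that were flagged.
- A score is only reported once a fingerprint has been seen on enough distinct sites.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Observation:
    fingerprint: str        # hypothetical client fingerprint (e.g. a hash of TLS/HTTP traits)
    site: str               # site that reported the request
    looked_automated: bool  # verdict from an assumed per-site detector


def fingerprint_reputation(observations, min_sites=3):
    """Toy reputation: fraction of observations flagged as automated, per fingerprint.

    Fingerprints seen on fewer than `min_sites` distinct sites get no score,
    because a small local sample says little about global behavior.
    """
    flagged = defaultdict(int)
    total = defaultdict(int)
    sites = defaultdict(set)
    for obs in observations:
        total[obs.fingerprint] += 1
        flagged[obs.fingerprint] += obs.looked_automated  # bool counts as 0 or 1
        sites[obs.fingerprint].add(obs.site)
    return {
        fp: flagged[fp] / total[fp]
        for fp in total
        if len(sites[fp]) >= min_sites
    }


obs = [
    Observation("fp-a", "shop.example", True),
    Observation("fp-a", "news.example", True),
    Observation("fp-a", "blog.example", False),
    Observation("fp-a", "docs.example", True),
    Observation("fp-b", "shop.example", False),
]
print(fingerprint_reputation(obs))  # {'fp-a': 0.75}; fp-b was seen on too few sites
```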

This one-click solution gives website owners a way to gain independence. In simpler terms, it gives them better control over which parts of their content can be accessed and collected by bots, which are often used for AI training. It also sends AI companies a clear message about the limits of their activities. It reminds them that they must respect the rights of content creators and obtain the necessary permission before extracting data.

Another notable addition is a feature that allows users to report bad AI bots, scrapers, and crawlers. All Cloudflare users, regardless of subscription tier, can use a dedicated reporting tool to flag bots that extract their data unethically or without permission. Meanwhile, users on the Enterprise Bot Management plan can submit false negative feedback reports through the Bot Analytics feature on the Cloudflare dashboard.

It is also worth bearing in mind that AI continues to evolve at a steady pace. These bots play a crucial role in data collection, especially for training machine learning models. NetNut offers solutions that optimize web scraping so that your business has uninterrupted access to real-time data.

What is the Ethical Solution to Web Data Collection?

However notorious some AI bots may be, the significance of these automated programs for data collection should not be underestimated. The AI era brings both challenges and opportunities for data collection. HR professionals, medical researchers, academics, and many others rely on real-time data for activities such as competitive pricing, market research, ad verification, SEO, and machine learning model training. Therefore, there is a need for ethical web scraping.

NetNut is an industry-leading web data extraction company that focuses on helping individuals and businesses collect data from the web ethically.

NetNut has an extensive network of over 85 million rotating residential proxies and more than 5 million mobile IPs in 195 countries, which helps it provide exceptional data collection services to customers.

NetNut offers various proxy solutions designed to overcome the challenges of web scraping. These proxies also help protect your privacy and security while you extract data from the web.

NetNut’s rotating residential proxies are an automated proxy solution that lets you access websites despite geographic restrictions. As a result, you get real-time data from all over the world to support better decision-making.

Alternatively, you can use our in-house solution, Web Unblocker, to access websites and collect data. If you need customized web scraping solutions for mobile devices, you can use our mobile proxies, which support 4G, 5G, and LTE networks.

While there are several proxy providers, NetNut stands out for several reasons. Here are some reasons to choose the NetNut social scraper:

- Guaranteed 100% success rate

- Bypass advanced anti-bot features

- Pay only for the data you receive

- A customized API that scrapes the specific data points you need

- A user-friendly dashboard interface

- Ultra-fast, on-demand data collection for the freshest social insights

- Highly responsive customer support to help you with any challenges

- Extensive documentation that gives you a comprehensive understanding of relevant concepts

Conclusion

AI bots, scrapers, and crawlers are inevitable in this digital era. These tools can significantly affect website performance, data security, and even user experience. With privacy violations and data breaches on the rise, it has become essential for websites to protect their assets. Usually, this means preventing malicious bots, scrapers, and crawlers from accessing them.

However, many businesses and other sectors would suffer without access to data. AI models require data to build their knowledge and work well, and data-driven decisions are necessary for an organization’s development. The problem with anti-bot measures is that they cannot differentiate good bots from bad ones. NetNut therefore offers a robust solution that gives good bots access to web data.

Frequently Asked Questions

What is the difference between AI bots, scrapers, and crawlers?

AI bots are automated programs designed to carry out specific tasks. Examples include customer service interactions and social media management, which often require 24/7 availability. These tasks are also repetitive, which makes significant errors or negligence more likely when humans perform them. AI bots, by contrast, leverage artificial intelligence to mimic human behavior and increase productivity.

Scrapers, as the name suggests, are automated programs designed to extract data from websites. Since data has become the new gold for many businesses, scrapers have become a valuable tool for collecting it. Web scrapers also play a crucial role in market research, competitive analysis, and SEO monitoring.

Lastly, crawlers, also known as spiders, are bots that search engines use to index website content. They routinely update the search engine’s database, which ensures that users get up-to-date and relevant answers to their queries.
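The core loop of a crawler can be sketched in a few lines:

1. Fetch a page and store it.
2. Extract its links.
3. Queue any links that have not been seen yet.

Below is a minimal breadth-first version built on Python’s standard `html.parser` and `urllib.parse`. To keep it runnable offline, the hypothetical `fetch` argument is any callable that returns HTML for a URL. Here it is a dictionary lookup over a made-up three-page site. The plain `index` dict stands in for a real search index.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags on one page."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def crawl(start_url, fetch, max_pages=50):
    """Breadth-first crawl: store each fetched page, then queue its unseen links.

    `fetch(url)` must return the page's HTML, or None if it is unavailable.
    """
    index = {}
    queue = deque([start_url])
    seen = {start_url}
    while queue and len(index) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # dead link: skip it
        index[url] = html
        extractor = LinkExtractor(url)
        extractor.feed(html)
        for link in extractor.links:
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return index


# Hypothetical in-memory site so the sketch needs no network access.
SITE = {
    "https://example.com/": '<a href="/a">A</a> <a href="/b">B</a>',
    "https://example.com/a": '<a href="/">Home</a> <a href="/b">B</a>',
    "https://example.com/b": '<a href="/missing">Gone</a>',
}
print(list(crawl("https://example.com/", SITE.get)))
```

A production crawler would also check robots.txt, throttle its requests and revisit pages on a schedule. The last of these is what keeps a search engine’s results up to date.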

What are the risks associated with bots getting access to a website?

Although bots automate repetitive tasks, they come with distinct risks and challenges. Some of them include:

- Data security threats: One of the most crucial concerns about bots is the risk of data exposure. AI bots, scrapers, and crawlers can access sensitive information, which could lead to data breaches. In addition, some businesses may use scrapers to obtain proprietary data that gives them an unfair competitive advantage.

- Performance issues: Scrapers often send too many requests within a short time. An influx of bot traffic can significantly degrade a website’s performance and, in the worst cases, cause a temporary crash. Bot traffic can also consume significant bandwidth, which may increase operating costs.

- Legal and ethical concerns: Because bots follow a script, they can collect personal data without consent, which often raises legal and ethical concerns. In addition, unauthorized web data extraction can violate a website’s terms of service, which may lead to legal action.
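One common way to keep request bursts from overwhelming a server, as described under performance issues above, is a token-bucket rate limiter. The sketch below is a minimal single-process version. The `rate` and `capacity` values are illustrative assumptions. The injectable `clock` parameter exists only so the example behaves deterministically.

```python
import time


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so a new client can make a short burst
        self.clock = clock
        self.updated = clock()

    def allow(self) -> bool:
        """Consume one token if available; return False when the client must wait."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


# Simulated clock so the output does not depend on real timing.
fake_now = [0.0]
bucket = TokenBucket(rate=2, capacity=5, clock=lambda: fake_now[0])
print([bucket.allow() for _ in range(8)])  # 5 allowed, then 3 rejected
fake_now[0] = 1.0  # one second later, 2 tokens have been refilled
print([bucket.allow() for _ in range(3)])  # [True, True, False]
```

In practice a site keeps one bucket per client (for example per IP address). It usually enforces the limit at a reverse proxy, CDN or web server rather than in application code.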

How do websites traditionally identify and block bot traffic?

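Website owners use several strategies to identify and block bot traffic. The first is usually the website’s robots.txt file, which tells AI bots, scrapers, and crawlers what they are permitted to access. Compliance is voluntary, however: well-behaved bots read the file before crawling, but nothing forces a bot to obey it.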
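Because robots.txt only states rules and does not enforce them, a site owner can also use it as a detection signal. Requests that hit disallowed paths point to a bot that ignores the file. The sketch below flags such requests with Python’s standard `urllib.robotparser`. It assumes a hypothetical robots.txt and simplified `(ip, user_agent_token, path)` tuples standing in for parsed access-log lines.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for the site being protected.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /checkout/
"""


def robots_violations(log_entries, robots_txt=ROBOTS_TXT):
    """Yield (ip, agent, path) for each logged request that robots.txt disallows.

    log_entries: iterable of (ip, user_agent_token, path) tuples, a simplified
    stand-in for parsed access-log lines.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    for ip, agent, path in log_entries:
        if not parser.can_fetch(agent, path):
            yield ip, agent, path


log = [
    ("203.0.113.5", "ExampleBot", "/blog/post-1"),
    ("203.0.113.5", "ExampleBot", "/admin/settings"),
    ("198.51.100.7", "OtherBot", "/checkout/step-1"),
]
for ip, agent, path in robots_violations(log):
    print(f"{ip} ({agent}) ignored robots.txt: {path}")
```

Flagged IPs could then feed into whatever challenge or blocking mechanism the site already uses. A single hit may be accidental, so a threshold is usually sensible before acting.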

CAPTCHA is another common technique for telling bots apart from humans. If bot activity is suspected, the IP address may then be banned temporarily or permanently. However, modern bots are often programmed to bypass these traditional blocking techniques. In addition, methods like CAPTCHA can inconvenience real users, which can drive away legitimate human traffic.
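The temporary IP ban can be sketched as a sliding-window counter. If one address makes more than `max_requests` requests within `window_seconds`, it is refused for `ban_seconds`. The class name and all thresholds below are hypothetical choices for illustration, not any particular product’s defaults. Timestamps are passed in explicitly to keep the example deterministic.

```python
from collections import defaultdict, deque


class TemporaryBanList:
    """Ban an IP for `ban_seconds` once it exceeds `max_requests` per `window_seconds`."""

    def __init__(self, max_requests=100, window_seconds=60.0, ban_seconds=600.0):
        self.max_requests = max_requests
        self.window = window_seconds
        self.ban_seconds = ban_seconds
        self.history = defaultdict(deque)  # ip -> timestamps of recent requests
        self.banned_until = {}             # ip -> time at which the ban expires

    def check(self, ip: str, now: float) -> bool:
        """Record a request from `ip` at time `now`; return True if it is allowed."""
        if self.banned_until.get(ip, float("-inf")) > now:
            return False  # still banned
        times = self.history[ip]
        times.append(now)
        # Drop timestamps that have slid out of the window.
        while times and times[0] <= now - self.window:
            times.popleft()
        if len(times) > self.max_requests:
            self.banned_until[ip] = now + self.ban_seconds
            times.clear()
            return False
        return True


bans = TemporaryBanList(max_requests=3, window_seconds=10, ban_seconds=60)
print([bans.check("192.0.2.1", t) for t in (0, 1, 2, 3)])  # 4th request triggers the ban
print(bans.check("192.0.2.1", 30))  # False: banned until t=63
print(bans.check("192.0.2.1", 70))  # True: the ban has expired
```

Setting `ban_seconds=float("inf")` turns the temporary ban into a permanent one. As the paragraph above notes, bots that rotate IP addresses can sidestep this kind of per-IP rule.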