Introduction

Data has become an essential part of business operations. Its impact on the growth of ecommerce businesses and on training machine learning models cannot be overemphasized. As technology evolves, web data extraction is increasingly automated, and tools like Playwright make web scraping faster and more reliable.

Web scraping goes beyond copying and pasting data from the internet into an Excel sheet for analysis and interpretation. Many modern websites load content dynamically or deploy anti-bot measures, so you need capable tools to access the data you want. This guide explains everything you need to know about Playwright scraping in 2024.

Let us dive in!

What is Playwright?

Playwright is a powerful open-source automation library that lets you automate browser activities such as navigating pages, clicking links, filling out and submitting forms, extracting data, and taking screenshots. It is gaining widespread adoption thanks to its features and capabilities. It drives all major browser engines: Chromium (used by Chrome and Edge), Firefox, and WebKit (the engine behind Safari). In addition, its asynchronous API lets you work with multiple pages at the same time, so one slow page does not block the others.

One of the primary challenges associated with web scraping is CAPTCHA. Playwright does not solve CAPTCHAs on its own, but because it drives a real browser and can mimic human interactions, it can help reduce how often they are triggered.

Why Use Playwright for web scraping?

Here are some features that make Playwright stand out as a powerful automation library:

Cross-browser compatibility

Playwright has been widely adopted since its release thanks to its cross-browser compatibility. It supports Chromium (the engine behind Google Chrome and Microsoft Edge), Firefox, and WebKit (the engine behind Safari on macOS and iOS), which makes it an excellent choice for web scraping. This range of support lets your scraper adapt to different browser environments, making it more reliable and versatile.

Multiple language compatibility

This library works with several programming languages: JavaScript/TypeScript, Python, Java, and .NET. This flexibility allows easy integration into existing projects without switching programming languages. Developers already familiar with Node.js often use JavaScript, while Python is a popular choice for those who want to combine web scraping with data analysis.

Robust API

Playwright has a robust API that is easy to use, so both beginners and experts can use it for automation, testing, and scraping. It offers simple methods for common tasks such as navigating to a page, locating elements, scrolling, and taking screenshots. In addition, Playwright has extensive documentation that clearly explains its features and how they work.

Dynamic content

Many modern websites are built with JavaScript, which generates content dynamically. This poses a significant challenge for traditional scrapers, which only see the static HTML returned by the server. Playwright handles such pages well because it renders them in a real browser and automatically waits for elements to be ready before interacting with them; you can also wait explicitly for a selector. This reduces failures caused by elements that load asynchronously. In addition, Playwright works with single-page applications, where content changes without full page reloads.

Anti-scraping features

Although web scraping is not inherently illegal, many modern websites take active steps to keep scraping bots off their pages. Scraping bots often send many requests within a few seconds, which can significantly affect the target website's performance. Playwright has several features that help a scraper avoid triggering these anti-scraping measures.

Playwright can run in headless mode, without a graphical user interface (GUI), which saves resources when you run many scrapers at once. Some websites can detect headless browsers, however, so running in headed mode (headless: false, as in several examples below) is sometimes the better choice. You can also use the library to intercept and modify requests and responses, which is often useful for working around these measures. In addition, Playwright can imitate real user interactions such as mouse movements, typing, and scrolling, which makes your scraper less likely to trigger anti-scraping techniques.

Performance and speed

Another advantage of Playwright is its performance. When dealing with large datasets, speed is crucial to effective web data extraction. Playwright was designed as a faster, more modern alternative to older solutions like Selenium, and its architecture allows fast interaction with web pages, which reduces the time required to extract data. In addition, you can run multiple browser instances, contexts, or pages in parallel, which makes data collection faster.
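Running pages in parallel is straightforward with the async API, but it is wise to cap how many run at once so you do not flood the target site. Below is a minimal Python sketch, assuming one shared Chromium instance and a default limit of three concurrent pages; gather_limited and scrape_titles are hypothetical helper names of our own, while page.goto() and page.title() are standard Playwright calls:

```python
import asyncio
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")


async def gather_limited(jobs: List[Callable[[], Awaitable[T]]], limit: int) -> List[T]:
    """Run async jobs concurrently, with at most `limit` in flight at once."""
    semaphore = asyncio.Semaphore(limit)

    async def run(job: Callable[[], Awaitable[T]]) -> T:
        async with semaphore:  # waits here while `limit` jobs are running
            return await job()

    # gather() returns results in the same order as `jobs`
    return await asyncio.gather(*(run(job) for job in jobs))


async def scrape_titles(urls: List[str], limit: int = 3) -> List[str]:
    """Fetch each page's <title> with one browser and up to `limit` open pages."""
    # Imported here so gather_limited() can be reused without Playwright installed
    from playwright.async_api import async_playwright

    async with async_playwright() as p:
        browser = await p.chromium.launch()

        async def title_of(url: str) -> str:
            page = await browser.new_page()
            try:
                await page.goto(url)
                return await page.title()
            finally:
                await page.close()  # free the page even if goto() fails

        titles = await gather_limited([lambda u=u: title_of(u) for u in urls], limit)
        await browser.close()
        return titles
```

For example, asyncio.run(scrape_titles(["https://www.example.com"])) would return a list containing that page's title. The semaphore keeps the load on the target site predictable, which also fits the rate-limiting advice later in this guide.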

Debugging tools

Playwright comes with built-in debugging tools. Web scraping scripts often need testing and debugging, especially for large-scale data retrieval. Tools such as the Playwright Inspector and the Trace Viewer let you step through your script, inspect the DOM, and identify issues that could interfere with data retrieval.

Step-by-step Guide to Playwright Scraping

This section will cover how to use Playwright to extract data from the web with Node.js and Python. We also have a detailed guide on how to scrape Amazon with Python, which you may find enlightening.

If you are using Node.js, create a new project, install the Playwright library, and download the browser binaries with these commands:

npm init -y
npm install playwright
npx playwright install

Here is a basic script that opens a page in each of the three browser engines and saves a full-page screenshot:

const playwright = require('playwright');

(async () => {
  for (const browserType of ['chromium', 'firefox', 'webkit']) {
    const browser = await playwright[browserType].launch();
    const context = await browser.newContext();
    const page = await context.newPage();
    await page.goto('https://amazon.com');
    await page.screenshot({ path: `nodejs_${browserType}.png`, fullPage: true });
    await page.waitForTimeout(1000);
    await browser.close();
  }
})();

Let us examine how the above code works:

The first line imports Playwright. The for loop then launches each of the three browser engines (Chromium, Firefox, and WebKit) in turn. For each one, the script creates a new browser context and opens a page in it. The page.goto() function navigates to the Amazon home page, and page.screenshot() saves a full-page screenshot named after the browser. The script then waits for one second before browser.close() shuts the browser down.

You can write the same code in Python. First, install the Playwright Python package with pip, then download the browser binaries with the playwright install command:

python -m pip install playwright
playwright install

Before we proceed, note that Playwright for Python offers both a synchronous and an asynchronous API. Here is an example of the asynchronous API:

import asyncio
from playwright.async_api import async_playwright

async def main():
    browsers = ['chromium', 'firefox', 'webkit']
    async with async_playwright() as p:
        for browser_type in browsers:
            # Look up p.chromium, p.firefox or p.webkit by name
            browser = await getattr(p, browser_type).launch()
            page = await browser.new_page()
            await page.goto('https://amazon.com')
            await page.screenshot(path=f'py_{browser_type}.png', full_page=True)
            await page.wait_for_timeout(1000)
            await browser.close()

asyncio.run(main())

Although the above code is similar to the Node.js version, there are some differences. Python's asynchronous API runs on the asyncio library, so the main() coroutine is started with asyncio.run(). In addition, browser.new_page() creates a browser context implicitly instead of requiring a separate newContext() call. As in Node.js, each browser launches in headless mode by default.

When writing web-scraping code in Node.js, you can create browser contexts explicitly. A context is an isolated browser session, similar to an incognito profile, with its own cookies and storage. You can open multiple pages in one context, and each page behaves like a new tab:

const context = await browser.newContext();
const page1 = await context.newPage();
const page2 = await context.newPage();

If your code needs the context that a page belongs to, you can get it with the page.context() function.

Locating elements on a page

Before you can extract data from any element on a page, you must first locate it. Playwright supports both CSS and XPath selectors. For example, suppose you open an Amazon category page (https://www.amazon.com/b?node=17938598011) and find that each product card is a div element with two classes, a-section and a-spacing-base, placed directly inside an element with the class s-card-container. (Amazon's markup changes often, so check the current class names in your browser's developer tools.) You can select all of these product divs with a CSS selector and then loop over them:

.s-card-container > .a-spacing-base

Likewise, you can use the XPath selector like this:

//*[contains(@class, "s-card-container")]/*[contains(@class, "a-spacing-base")]
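The contains(@class, ...) tests above match substrings, so they would also match a longer class name such as a hypothetical s-card-container-wide. A common XPath idiom pads the class attribute with spaces so that only whole class names match. Here is a small Python sketch that builds such a selector; has_class and child_by_classes are our own illustrative helpers, not Playwright functions:

```python
def has_class(name: str) -> str:
    """XPath predicate that matches `name` only as a whole class token."""
    # Surround @class with spaces so ' a-spacing-base ' cannot match
    # 'a-spacing-base-plus'; normalize-space() collapses tabs and newlines.
    return f"contains(concat(' ', normalize-space(@class), ' '), ' {name} ')"


def child_by_classes(parent: str, child: str) -> str:
    """XPath equivalent of the CSS selector `.parent > .child`."""
    return f"//*[{has_class(parent)}]/*[{has_class(child)}]"


selector = child_by_classes("s-card-container", "a-spacing-base")
```

The resulting string starts with //, so Playwright treats it as XPath when you pass it to page.query_selector_all() or page.$$eval().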

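To use these selectors in Node.js, the most common page methods are:

- page.$eval(selector, function): selects the first matching element, passes it to the function (which runs inside the browser), and returns the function's result.

- page.$$eval(selector, function): works like $eval, except that it passes an array of all matching elements.

- page.$(selector): returns a handle to the first matching element, or null if none matches (query_selector in Python).

- page.$$(selector): returns handles to all matching elements (query_selector_all in Python).

Inside an $eval or $$eval callback you work with regular DOM elements, so you can call the browser's own querySelector() and querySelectorAll() methods on them.

These methods accept both CSS and XPath selectors: Playwright treats a selector that starts with // as XPath, and you can also make this explicit with the xpath= prefix.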

Scraping text

After the page has loaded, you can use a selector to extract all products with the $$eval function, as shown below:

const products = await page.$$eval('.s-card-container > .a-spacing-base', all_products => {
  // loop over all_products here
});

Inside the callback, you can loop over the matched elements and extract the data you need from each one:

all_products.forEach(product => {
  const title = product.querySelector('.a-size-base-plus').innerText;
});

The innerText property returns each element's visible text. Here is what the complete Node.js code looks like:

const playwright = require('playwright');

(async () => {
  const launchOptions = {
    headless: false,
    proxy: {
      server: 'https://us-netnut.io:5959',
      username: 'USERNAME',
      password: 'PASSWORD'
    }
  };
  const browser = await playwright.chromium.launch(launchOptions);
  const page = await browser.newPage();
  await page.goto('https://www.amazon.com/b?node=17938598011');
  await page.waitForTimeout(1000);

  const products = await page.$$eval('.s-card-container > .a-spacing-base', all_products => {
    const data = [];
    all_products.forEach(product => {
      const titleEl = product.querySelector('.a-size-base-plus');
      const title = titleEl ? titleEl.innerText : null;
      const priceEl = product.querySelector('.a-price');
      const price = priceEl ? priceEl.innerText : null;
      const ratingEl = product.querySelector('.a-icon-alt');
      const rating = ratingEl ? ratingEl.innerText : null;
      data.push({ title, price, rating });
    });
    return data;
  });

  console.log(products);
  await browser.close();
})();

Let us see what the code will look like if it is written in Python.

Python's eval_on_selector and eval_on_selector_all functions mirror $eval and $$eval in Node.js, but the expression you pass to them must be written in JavaScript (as a string). To keep the entire scraper in Python, this example uses them only indirectly.

Instead, we use the query_selector and query_selector_all functions, which return an element and a list of elements, respectively.

import asyncio
from playwright.async_api import async_playwright

async def main():
    async with async_playwright() as pw:
        browser = await pw.chromium.launch(
            headless=False,
            proxy={
                'server': 'https://us-netnut.io:5959',
                'username': 'USERNAME',
                'password': 'PASSWORD'
            }
        )
        page = await browser.new_page()
        await page.goto('https://www.amazon.com/b?node=17938598011')
        await page.wait_for_timeout(1000)

        all_products = await page.query_selector_all('.s-card-container > .a-spacing-base')
        data = []
        for product in all_products:
            result = dict()
            title_el = await product.query_selector('.a-size-base-plus')
            result['title'] = await title_el.inner_text() if title_el else None
            price_el = await product.query_selector('.a-price')
            result['price'] = await price_el.inner_text() if price_el else None
            rating_el = await product.query_selector('.a-icon-alt')
            result['rating'] = await rating_el.inner_text() if rating_el else None
            data.append(result)

        print(data)
        await browser.close()

if __name__ == '__main__':
    asyncio.run(main())

Both scripts produce the same kind of output: a list of products, each with a title, price, and rating (null in Node.js or None in Python when a field is missing).
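Both scripts return raw strings, such as prices with currency symbols. A small post-processing step turns them into numbers you can sort and analyze. Here is a minimal Python sketch, assuming prices look like $1,299.99 and ratings read like 4.5 out of 5 stars; parse_price, parse_rating, and clean are our own illustrative names:

```python
import re
from typing import Optional

# First number in the text, allowing thousands separators and decimals
PRICE_RE = re.compile(r"\d[\d,]*(?:\.\d+)?")
# Assumes wording such as "4.5 out of 5 stars"
RATING_RE = re.compile(r"(\d+(?:\.\d+)?) out of (\d+)")


def parse_price(text: Optional[str]) -> Optional[float]:
    """'$1,299.99' -> 1299.99; None when there is no number."""
    if not text:
        return None
    match = PRICE_RE.search(text)
    return float(match.group().replace(",", "")) if match else None


def parse_rating(text: Optional[str]) -> Optional[float]:
    """'4.5 out of 5 stars' -> 4.5; None when the wording differs."""
    if not text:
        return None
    match = RATING_RE.search(text)
    return float(match.group(1)) if match else None


def clean(product: dict) -> dict:
    """Normalize one scraped record with title/price/rating strings."""
    return {
        "title": (product.get("title") or "").strip() or None,
        "price": parse_price(product.get("price")),
        "rating": parse_rating(product.get("rating")),
    }
```

You could then run [clean(p) for p in data] on the list produced by the Python scraper before exporting it.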

Scraping Images

In this section, we shall examine how to scrape images from a web page with Node.js and Python.

Using Node.js

There are several ways to download images with Playwright in JavaScript. In this tutorial, we use Node's built-in https and fs modules: https sends the request that downloads each image, and fs writes it to a file in a directory. Let us see how they work:

const playwright = require('playwright');
const https = require('https');
const fs = require('fs');
const path = require('path');

(async () => {
  const launchOptions = {
    headless: false,
    proxy: {
      server: 'https://netnut.io:9595',
      username: 'USERNAME',
      password: 'PASSWORD'
    }
  };
  const browser = await playwright.chromium.launch(launchOptions);
  const page = await browser.newPage();
  await page.goto('https://www.example.com');
  await page.waitForTimeout(1000);

  // Collect the src of every image served over HTTPS
  const images = await page.$$eval('img', all_images => {
    const image_links = [];
    all_images.forEach(image => {
      if (image.src.startsWith('https://')) {
        image_links.push(image.src);
      }
    });
    return image_links;
  });

  images.forEach((imageUrl, index) => {
    // Keep the original extension (.png, .jpg, ...) instead of assuming .svg
    const extension = path.extname(new URL(imageUrl).pathname) || '.img';
    const fileName = `image_${index}${extension}`;
    const file = fs.createWriteStream(fileName);
    https.get(imageUrl, response => {
      response.pipe(file);
    });
  });

  console.log(images);
  await browser.close();
})();

In the code above, we launch a browser that routes its traffic through NetNut proxies. After navigating to the website, the $$eval function collects the src URL of every img element served over HTTPS. The forEach loop then processes each collected URL: it builds a file name from the image's index, so the files are saved with a relative path in the current directory, and opens a file object with the createWriteStream method from the fs module.

Next, the https module sends a GET request for the image URL and pipes the response into that file. Once the code is executed, the script has downloaded every HTTPS image found on the target page into the directory. Note that these downloads are made directly from Node.js, not through the browser, so they do not use the proxy configured in launchOptions.

Using Python:

For Python, we will use the requests library to download the images. You can install it from your terminal with the command below:

python -m pip install requests

As in the Node.js code, the first step is to use Playwright to find the images. In this example, the query_selector_all function extracts all the img elements. For each element, a GET request is sent to the image's source URL, and the response content is saved to the current directory. Here is how the code should look:

import asyncio
import os
from urllib.parse import urljoin, urlparse

import requests
from playwright.async_api import async_playwright

async def main():
    async with async_playwright() as pw:
        browser = await pw.chromium.launch(
            headless=False,
            proxy={
                'server': 'https://netnut.io:5959',
                'username': 'USERNAME',
                'password': 'PASSWORD'
            }
        )
        page = await browser.new_page()
        await page.goto('https://www.example.com')
        await page.wait_for_timeout(1000)

        all_images = await page.query_selector_all('img')
        images = []
        for i, img in enumerate(all_images):
            image_url = await img.get_attribute('src')
            # Skip images without a src and inline data: URIs
            if not image_url or image_url.startswith('data:'):
                continue
            # Resolve relative paths such as /logo.png against the page URL
            image_url = urljoin(page.url, image_url)
            content = requests.get(image_url, timeout=30).content
            # Keep the original file extension instead of assuming .svg
            extension = os.path.splitext(urlparse(image_url).path)[1] or '.img'
            with open(f'py_{i}{extension}', 'wb') as f:
                f.write(content)
            images.append(image_url)

        print(images)
        await browser.close()

if __name__ == '__main__':
    asyncio.run(main())

Intercepting HTTP requests with Playwright

Intercepting HTTP requests is often useful in advanced web scraping. It lets you modify responses, customize headers, and stop images from loading. Playwright can intercept HTTP requests in both Python and JavaScript.

Using Python

The first step is to define a function called handle_route, which Playwright calls for each intercepted request. For the HTML document, it fetches the original response, adds a prefix to the page title, and sets the content-type header to text/html. A second route aborts image requests, so when you execute the script, the target website loads without any images and with a modified title. This is shown below:

import asyncio
from playwright.async_api import async_playwright, Route

async def handle_route(route: Route) -> None:
    # Only rewrite the HTML document; pass every other request on to the
    # next matching route (such as the image-blocking one below)
    if route.request.resource_type != 'document':
        await route.fallback()
        return
    response = await route.fetch()
    body = await response.text()
    body = body.replace('<title>', '<title>Modified Response')
    await route.fulfill(
        response=response,
        body=body,
        headers={**response.headers, 'content-type': 'text/html'},
    )

async def main():
    async with async_playwright() as pw:
        browser = await pw.chromium.launch(
            proxy={
                'server': 'https://netnut:5959',
                'username': 'USERNAME',
                'password': 'PASSWORD'
            },
            headless=False
        )
        page = await browser.new_page()
        # Abort image loading
        await page.route('**/*.{png,jpg,jpeg,svg}', lambda route: route.abort())
        # Registered last, so Playwright calls this handler first
        await page.route('**/*', handle_route)
        await page.goto('https://www.example.com')
        await page.wait_for_timeout(1000)
        await browser.close()

if __name__ == '__main__':
    asyncio.run(main())

In the example above, the route() method tells Playwright which handler to call for requests whose URLs match a pattern. The pattern can be a glob string, a regular expression, or a predicate function. The glob "**/*.{png,jpg,jpeg,svg}" matches every URL that ends with one of the given extensions: .png, .jpg, .jpeg, or .svg. When several routes match the same request, Playwright calls the most recently registered one first, and route.fallback() passes the request on to the next matching route.
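Matching on file extensions misses images served from URLs without one. Playwright also reports each request's resource type, which makes a more robust filter. A minimal Python sketch, assuming you only need the page's text; should_block, block_heavy_requests, and the blocked categories are our own illustrative choices:

```python
from urllib.parse import urlsplit

# Resource types that a text-only scraper rarely needs
BLOCKED_TYPES = {"image", "media", "font"}
BLOCKED_EXTENSIONS = (".png", ".jpg", ".jpeg", ".svg")


def should_block(resource_type: str, url: str) -> bool:
    """Return True for requests a text-only scraper can safely skip."""
    # resource_type catches images even when the URL has no file extension
    if resource_type in BLOCKED_TYPES:
        return True
    # Otherwise check the same extensions as the glob, ignoring ?query/#fragment
    return urlsplit(url).path.lower().endswith(BLOCKED_EXTENSIONS)


async def block_heavy_requests(route) -> None:
    """Handler for page.route('**/*', ...): abort heavy requests, pass the rest."""
    request = route.request
    if should_block(request.resource_type, request.url):
        await route.abort()
    else:
        await route.continue_()  # send the request to the network unchanged
```

Register it with await page.route('**/*', block_heavy_requests). If you also keep another '**/*' handler, such as handle_route, replace route.continue_() with route.fallback() so the request still reaches it.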

Using Node.js:

Similarly, you can use Node.js to instruct Playwright to intercept HTTP requests, as shown below:

const playwright = require('playwright');

(async () => {
  const launchOptions = {
    headless: false,
    proxy: {
      server: 'https://netnut.io:5959',
      username: 'USERNAME',
      password: 'PASSWORD'
    }
  };
  const browser = await playwright.chromium.launch(launchOptions);
  const context = await browser.newContext();
  const page = await context.newPage();

  // Abort requests whose URL ends in an image extension
  await page.route(/(png|jpeg|jpg|svg)$/, route => route.abort());

  // Registered last, so Playwright calls this handler first
  await page.route('**/*', async route => {
    // Only rewrite the HTML document; hand other requests to the next route
    if (route.request().resourceType() !== 'document') {
      return route.fallback();
    }
    const response = await route.fetch();
    let body = await response.text();
    body = body.replace('<title>', '<title>Modified Response: ');
    await route.fulfill({
      response,
      body,
      headers: {
        ...response.headers(),
        'content-type': 'text/html'
      }
    });
  });

  await page.goto('https://www.example.com');
  await page.waitForTimeout(1000);
  await browser.close();
})();

In the example above, the page.route method intercepts HTTP requests, modifies the title and headers of the HTML response, and blocks images from loading. Skipping images reduces the amount of data each page downloads, which can speed up page loads and make scraping more efficient.

Use of Proxies in Playwright

You can use proxies with Playwright to improve anonymity and privacy. Before we dive deeper into this section, let us look at how to launch Chromium without a proxy.

With Python:

import asyncio
from playwright.async_api import async_playwright

async def main():
    async with async_playwright() as p:
        browser = await p.chromium.launch()
        await browser.close()

asyncio.run(main())

With Node.js:

const { chromium } = require('playwright');

(async () => {
  const browser = await chromium.launch();
  await browser.close();
})();

The above code needs some modification to work with proxies. In Node.js, the launch function accepts an optional parameter of the LaunchOptions type. This object can carry other settings, such as headless. The setting we need here is proxy, an object with a server property and, optionally, username and password.

So we create an object that specifies these parameters and pass it to the launch method, as shown in the example below:

const playwright = require('playwright');

(async () => {
  for (const browserType of ['chromium', 'firefox', 'webkit']) {
    const launchOptions = {
      headless: false,
      proxy: {
        server: 'https://netnut.io:5959',
        username: 'USERNAME',
        password: 'PASSWORD'
      }
    };
    const browser = await playwright[browserType].launch(launchOptions);
    const page = await browser.newPage();
    await page.goto('https://www.example.com/');
    await page.waitForTimeout(3000);
    await browser.close();
  }
})();

If you are using Python, which is one of the best programming languages for web scraping, there is no need to create an object for LaunchOptions. Instead, the values can be specified as distinct parameters, as shown below:

import asyncio
from playwright.async_api import async_playwright

async def main():
    browsers = ['chromium', 'firefox', 'webkit']
    async with async_playwright() as p:
        for browser_type in browsers:
            # Look up p.chromium, p.firefox or p.webkit by name
            browser = await getattr(p, browser_type).launch(
                headless=False,
                proxy={
                    'server': 'https://netnut.io:5959',
                    'username': 'USERNAME',
                    'password': 'PASSWORD'
                }
            )
            page = await browser.new_page()
            await page.goto('https://www.example.com/')
            await page.wait_for_timeout(3000)
            await browser.close()

asyncio.run(main())

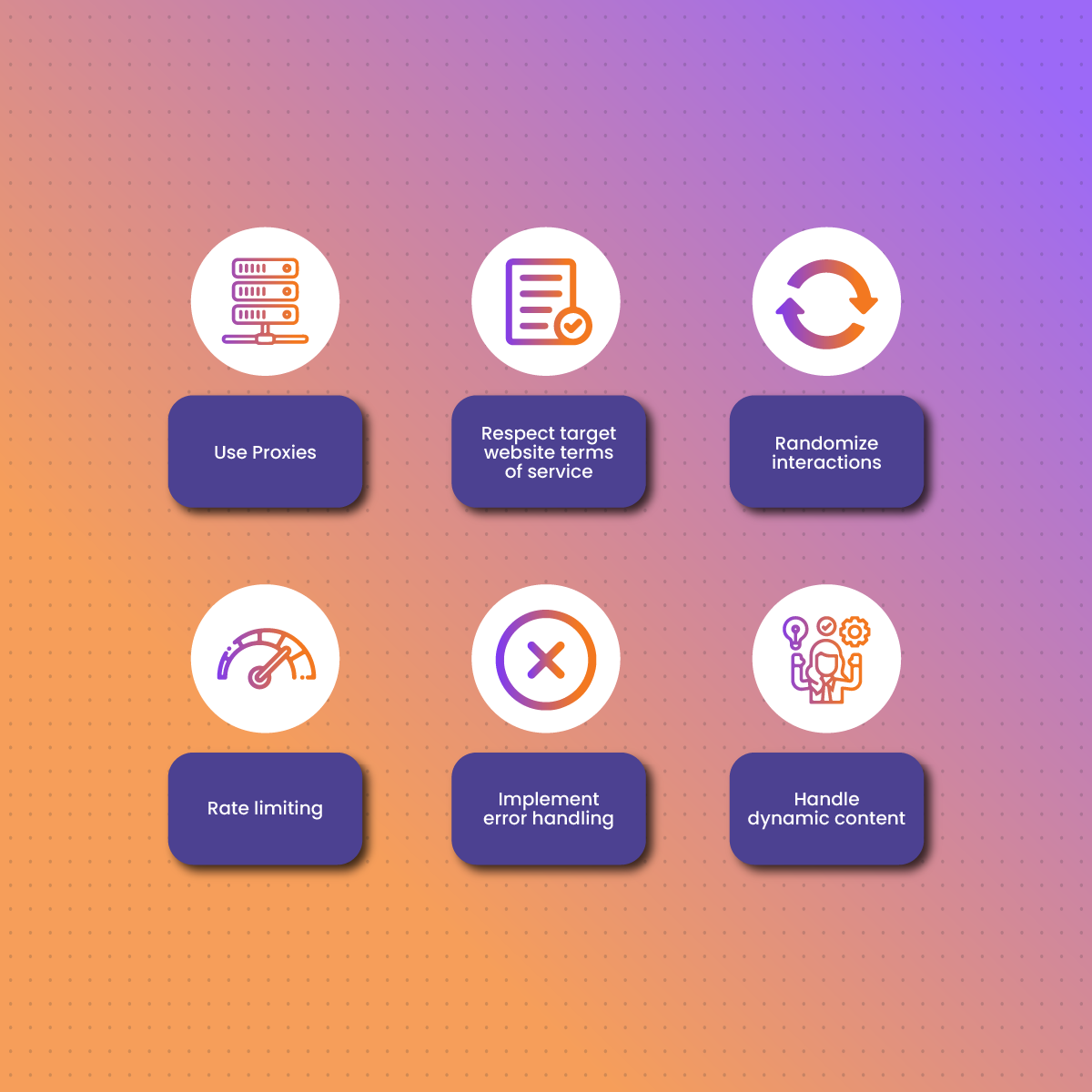
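Hard-coding credentials, as in the examples above, makes them easy to leak. One option is to keep the proxy in a single URL, for example in an environment variable, and split it into the server, username, and password fields that launch() expects. A minimal sketch; proxy_settings is a hypothetical helper of our own and proxy.example.com is a placeholder host:

```python
from urllib.parse import unquote, urlsplit


def proxy_settings(proxy_url: str) -> dict:
    """Turn 'scheme://user:pass@host:port' into Playwright's proxy dict."""
    parts = urlsplit(proxy_url)
    # IPv6 proxy hosts are not handled in this sketch
    if not parts.hostname or not parts.port:
        raise ValueError(f"expected scheme://host:port, got {proxy_url!r}")
    settings = {"server": f"{parts.scheme}://{parts.hostname}:{parts.port}"}
    # Credentials are optional; unquote() restores characters such as '@'
    # that have to be percent-encoded inside a URL.
    if parts.username:
        settings["username"] = unquote(parts.username)
    if parts.password:
        settings["password"] = unquote(parts.password)
    return settings
```

With a hypothetical PROXY_URL environment variable, you could then call await p.chromium.launch(proxy=proxy_settings(os.environ["PROXY_URL"])).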

Best Practices for Web Scraping with Playwright

Web scraping with Playwright opens up many possibilities. However, to avoid common pitfalls, follow these best practices:

Use Proxies

A proxy server acts as an intermediary between your device and the internet. One of the biggest challenges in web scraping is IP bans: if a website detects an unusual amount of traffic from your IP address, it can block it. Rotating proxies change the IP address your requests come from, which minimizes the chances of any single address getting blocked.

There are various types of proxies: residential, datacenter, and mobile. Residential proxies, although expensive, are the most reliable option because they use IP addresses that internet service providers (ISPs) assign to real households. Avoid free proxies, as they are often unreliable and lack strong security and privacy features.
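If your provider gives you a list of proxy endpoints rather than a single rotating gateway, you can rotate through them yourself. A minimal sketch, assuming placeholder server addresses and shared credentials; proxy_pool and titles_via_rotating_proxies are hypothetical helpers, not part of Playwright or NetNut:

```python
import itertools
from typing import Dict, Iterator, List


def proxy_pool(servers: List[str], username: str, password: str) -> Iterator[Dict[str, str]]:
    """Yield Playwright proxy settings, cycling through `servers` forever."""
    for server in itertools.cycle(servers):
        yield {"server": server, "username": username, "password": password}


async def titles_via_rotating_proxies(urls: List[str], pool: Iterator[Dict[str, str]]) -> List[str]:
    """Load each URL through the next proxy in the pool and return page titles."""
    from playwright.async_api import async_playwright  # requires Playwright installed

    titles = []
    async with async_playwright() as p:
        for url in urls:
            # Simple but slow: one browser per URL so each visit uses a new proxy.
            # browser.new_context(proxy=...) is a lighter-weight alternative.
            browser = await p.chromium.launch(proxy=next(pool))
            try:
                page = await browser.new_page()
                await page.goto(url)
                titles.append(await page.title())
            finally:
                await browser.close()
    return titles
```

If your provider already rotates IP addresses behind one gateway address, you can skip this and pass that single address to launch().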

Respect target website terms of service

Although web scraping is not illegal in itself, there are rules you must consider. Because scraping bots can significantly affect a site, websites publish terms of use that govern how they may be accessed. Before you dive into Playwright scraping, get familiar with the website's terms of service. Some websites explicitly prohibit web scraping, while others allow it under specific conditions. In addition, check the website's robots.txt file, which tells bots which parts of the site they may and may not crawl.
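Python's standard library can check robots.txt rules for you. Here is a small sketch using urllib.robotparser; robots_allows and the sample rules are our own illustration, not taken from any real website:

```python
from urllib.robotparser import RobotFileParser


def robots_allows(robots_txt: str, url: str, user_agent: str = "*") -> bool:
    """Check `url` against the rules of an already-downloaded robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Illustrative rules; a real site's file lives at https://<site>/robots.txt
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""
```

To check a live site instead, create a RobotFileParser, call set_url("https://www.example.com/robots.txt") and read(), then use can_fetch() before each page.goto().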

Randomize interactions

Playwright's mouse, keyboard, and scrolling APIs let you introduce randomness into your scraper's behavior, which helps it resemble how a human interacts with a website. Examples include varying the speed and path of mouse movements, pausing for random intervals, and scrolling in uneven steps, all of which make your scraper less likely to be flagged by anti-scraping measures.
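Playwright does not randomize anything by itself, but page.mouse.wheel() and page.mouse.move() make it easy to do so yourself. A minimal sketch, assuming an async Playwright page; the step sizes and pause ranges are arbitrary values chosen for illustration, not tuned against any particular anti-bot system, and both function names are our own:

```python
import asyncio
import random
from typing import List, Optional, Tuple


def human_scroll_plan(total_px: int, rng: Optional[random.Random] = None) -> List[Tuple[int, float]]:
    """Split a scroll of `total_px` pixels into uneven steps with random pauses."""
    rng = rng or random.Random()
    plan, scrolled = [], 0
    while scrolled < total_px:
        step = min(rng.randint(200, 600), total_px - scrolled)  # never overshoot
        pause = rng.uniform(0.3, 1.2)  # seconds to wait after this step
        plan.append((step, pause))
        scrolled += step
    return plan


async def scroll_like_a_human(page, total_px: int = 3000) -> None:
    """Scroll a Playwright page following a randomized plan."""
    for step, pause in human_scroll_plan(total_px):
        await page.mouse.wheel(0, step)
        await asyncio.sleep(pause)
    # Drift the cursor to a random point in a random number of small moves
    await page.mouse.move(random.randint(100, 800), random.randint(100, 600),
                          steps=random.randint(5, 20))
```

Passing a seeded random.Random to human_scroll_plan() makes a run reproducible while you debug.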

Rate limiting

Another crucial tip for Playwright web scraping is to add delays between requests. Delays prevent you from overloading the target server and triggering anti-scraping measures, and they make your scraper's activity look more like human browsing. Avoid sending many requests within a short period, as this can slow the website down for other users.
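One way to implement delays is a shared limiter that every task awaits before loading a page. Here is a minimal asyncio sketch; RateLimiter and timed_run are our own illustrative names, and the interval you choose should depend on the site, not on the sample numbers used here:

```python
import asyncio
import random
import time


class RateLimiter:
    """Keep at least `min_interval` seconds (plus optional random jitter)
    between consecutive calls to wait(), across all tasks sharing it."""

    def __init__(self, min_interval: float, jitter: float = 0.0) -> None:
        self.min_interval = min_interval
        self.jitter = jitter
        self._lock = asyncio.Lock()
        self._last = float("-inf")  # no request has been made yet

    async def wait(self) -> None:
        async with self._lock:  # one task at a time claims the next slot
            gap = self.min_interval + random.uniform(0, self.jitter)
            delay = self._last + gap - time.monotonic()
            if delay > 0:
                await asyncio.sleep(delay)
            self._last = time.monotonic()


async def timed_run(calls: int, min_interval: float) -> float:
    """Illustration only: seconds taken by `calls` rate-limited waits."""
    limiter = RateLimiter(min_interval)
    start = time.monotonic()
    for _ in range(calls):
        await limiter.wait()
    return time.monotonic() - start
```

In a scraper, create one limiter inside your async main(), share it across tasks, and call await limiter.wait() right before each await page.goto(url).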

Implement error handling

Several issues, such as changes in website structure, incorrect proxy details, or network problems, can cause errors during Playwright web scraping. You can manage these situations by adding code that tells your scraper how to respond. Retry logic handles temporary issues like connection downtime, so your scraper does not crash the first time it encounters one. In addition, wrapping risky steps in a try/catch block (try/except in Python) lets you log the errors you encounter for later analysis without stopping the data extraction process.
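The retry logic described above can be sketched as a small async wrapper with exponential backoff and logging. This is one possible approach, not a Playwright feature; backoff_delays and with_retries are our own names, and the default delays are illustrative:

```python
import asyncio
import logging
import random
from typing import Awaitable, Callable, List, TypeVar

T = TypeVar("T")
log = logging.getLogger("scraper")


def backoff_delays(retries: int, base: float = 1.0, cap: float = 30.0) -> List[float]:
    """Exponential backoff schedule: base, 2*base, 4*base, ... capped at `cap`."""
    return [min(cap, base * 2 ** attempt) for attempt in range(retries)]


async def with_retries(action: Callable[[], Awaitable[T]], retries: int = 3, base: float = 1.0) -> T:
    """Await `action()`, retrying failures with exponential backoff plus jitter."""
    delays = backoff_delays(retries, base)
    for attempt in range(retries + 1):
        try:
            return await action()
        except Exception as exc:  # e.g. Playwright's TimeoutError or network errors
            if attempt == retries:
                raise  # out of retries: let the caller decide what to do
            delay = delays[attempt] + random.uniform(0, base)
            log.warning("attempt %d failed (%s); retrying in %.1fs", attempt + 1, exc, delay)
            await asyncio.sleep(delay)
```

For example, response = await with_retries(lambda: page.goto(url)) retries a flaky navigation up to three times and logs each failure before giving up.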

Handle dynamic content

Modern websites often use JavaScript to load content dynamically, which is where a browser-based tool like Playwright shines. Although Playwright automatically waits for elements before interacting with them, methods such as $$eval read the DOM as it is at that moment, so add a line that waits for the content you need before extracting data. The page.waitForSelector() method (page.wait_for_selector() in Python) pauses until an element matching the selector is visible on the page.

The Best Proxy For Playwright Scraping- NetNut

Choosing the best proxy for Playwright scraping involves considering several factors, such as price, coverage, IP pool size, performance, and scalability. Free proxies are easy to find, but they pose security risks. Therefore, it is crucial to choose a reputable proxy provider like NetNut.

With extensive coverage of more than 85 million rotating residential proxies in 195 countries, more than 5 million mobile IPs in over 100 countries, and more than 1 million static IPs, NetNut ensures you can extract data from any part of the world with ease.

NetNut offers various proxy solutions designed to overcome the challenges of web scraping. Integrating NetNut proxies with Playwright for web scraping opens doors for exceptional data collection. A unique feature of NetNut proxies is the advanced AI-CAPTCHA solver, which optimizes your web scraper capabilities.

When you use NetNut proxies for web data extraction, you are guaranteed 99.9% uptime, which is necessary for large-scale operations. In addition, these proxies provide high-level security and anonymity that take your online privacy to the next level.

NetNut rotating residential proxies are an automated proxy solution that lets you access websites despite geographic restrictions, giving you real-time data from all over the world to support decision-making. Alternatively, you can use our in-house solution, the NetNut Scraper API, to access websites and collect data.

Conclusion

This guide has walked through web scraping with Playwright. Web scraping helps businesses make data-driven decisions, and Playwright has become a popular choice for scraping dynamic websites thanks to its robust features. It supports multiple browsers as well as several programming languages, including Python and JavaScript.

Respecting website terms of use, robots.txt file, rate limiting, and error handling are some practical tips for scalable Playwright scraping. Whether you are dealing with single-page applications, JavaScript-reliant websites or complex authentication mechanisms, Playwright has several tools to ensure effective web scraping.

Using a premium proxy plays a significant role in optimizing Playwright scraping. NetNut offers industry-leading proxies that guarantee unlimited access to data across the globe. These proxies come at a competitive price, and NetNut offers a free 7-day trial. Contact us today to speak to an expert.

Frequently Asked Questions

How does Playwright scraping work?

- Select the target website and the specific data you need to extract.

- Download and install a programming environment like Python and an integrated development environment (IDE) such as PyCharm or Visual Studio Code.

- Install Playwright with pip, then download the browser binaries with the playwright install command.

- Write a program that can automate the launching of a web browser, identifying the elements, and collecting data from the web page.

- Parse the HTML or XML data into a format that is easy to read, analyze, and interpret.

- Include a proxy server in the code to mask your actual IP address to avoid IP bans.

- Export the parsed data to a storage folder on your computer for further data manipulation.
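The final export step can be as simple as writing the parsed records to CSV (for spreadsheets) and JSON (for other programs). A minimal sketch using only the standard library; export_products and the output file names are our own choices:

```python
import csv
import json
from pathlib import Path
from typing import List, Tuple


def export_products(products: List[dict], folder: str) -> Tuple[Path, Path]:
    """Write scraped rows to products.csv and products.json inside `folder`."""
    out = Path(folder)
    out.mkdir(parents=True, exist_ok=True)
    csv_path, json_path = out / "products.csv", out / "products.json"

    # Use every key that appears in any row as a column, in a stable order
    fieldnames = sorted({key for row in products for key in row})
    with csv_path.open("w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerows(products)  # keys missing from a row become empty cells

    json_path.write_text(json.dumps(products, indent=2, ensure_ascii=False), encoding="utf-8")
    return csv_path, json_path
```

Calling export_products(data, "scraped_data") after a scraping run leaves both files in a scraped_data folder next to your script.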

Can I get blocked when performing Playwright web scraping?

Yes, there is a possibility that you could experience an IP block/ban when using Playwright for web data extraction. Since many modern websites have robust anti-bot measures to prevent web scraping, IP bans have become a common challenge. However, you can avoid this by using our rotating residential proxies. These proxies ensure that you don’t use one IP address to send several requests, which could trigger an IP ban. In addition, you can implement delays in your script for Playwright scraping.

What are the applications of Playwright scraping?

- Personal and academic research

- Companies can generate leads by scraping publicly available emails

- Gather data on consumer behavior and public sentiment

- Monitor price fluctuation in the market to determine the best prices for customers

- Compile data for training machine learning models

- Market research to determine how to introduce a new product or service.