Introduction to Web Scraping

Data is becoming increasingly significant for the growth of many organizations. Web pages contain relevant data, but how can you get it? This is where web scraping comes into play.

Manually collecting data from multiple web pages every day may seem like an easy task. In practice, this repetitive work quickly becomes tedious and frustrating, and it is prone to human error and IP blocks.

Automated scraping quickly overtook manual scraping because it saved time and resources, which could be channeled into something else. Web scraping tools provide an efficient alternative to quickly collect large volumes of data from the web.

This guide defines web scraping, explains how it works and why it is important to businesses, and introduces NetNut’s solution for optimized performance.

What is Web Scraping?

Web scraping is the process of collecting data from the web. The data is often stored in a local file, where it can be accessed for analysis and interpretation. A simple example of web scraping is copying content from a web page and pasting it into a spreadsheet such as Microsoft Excel or Google Sheets. This is small-scale web data extraction.

This process involves using specialized software or scripts to access web pages, retrieve their content, and convert it into a structured format for analysis. Businesses use web scraping to gather competitive intelligence, monitor market trends, collect pricing information, and aggregate data from various sources. By automating data collection, web scraping enables organizations to efficiently gather and analyze web-based information, driving informed decision-making and strategic planning.

Web scraping involves using software applications, called scrapers or bots, to visit a web page, retrieve data, and store it in a local file. Automating the process instead of copying and pasting becomes critical when you need to collect a large volume of data within a short time.

It is important to note that web scraping may not always be a simple task. This is because websites come in different structures and designs, which could affect the efficiency of web scrapers.

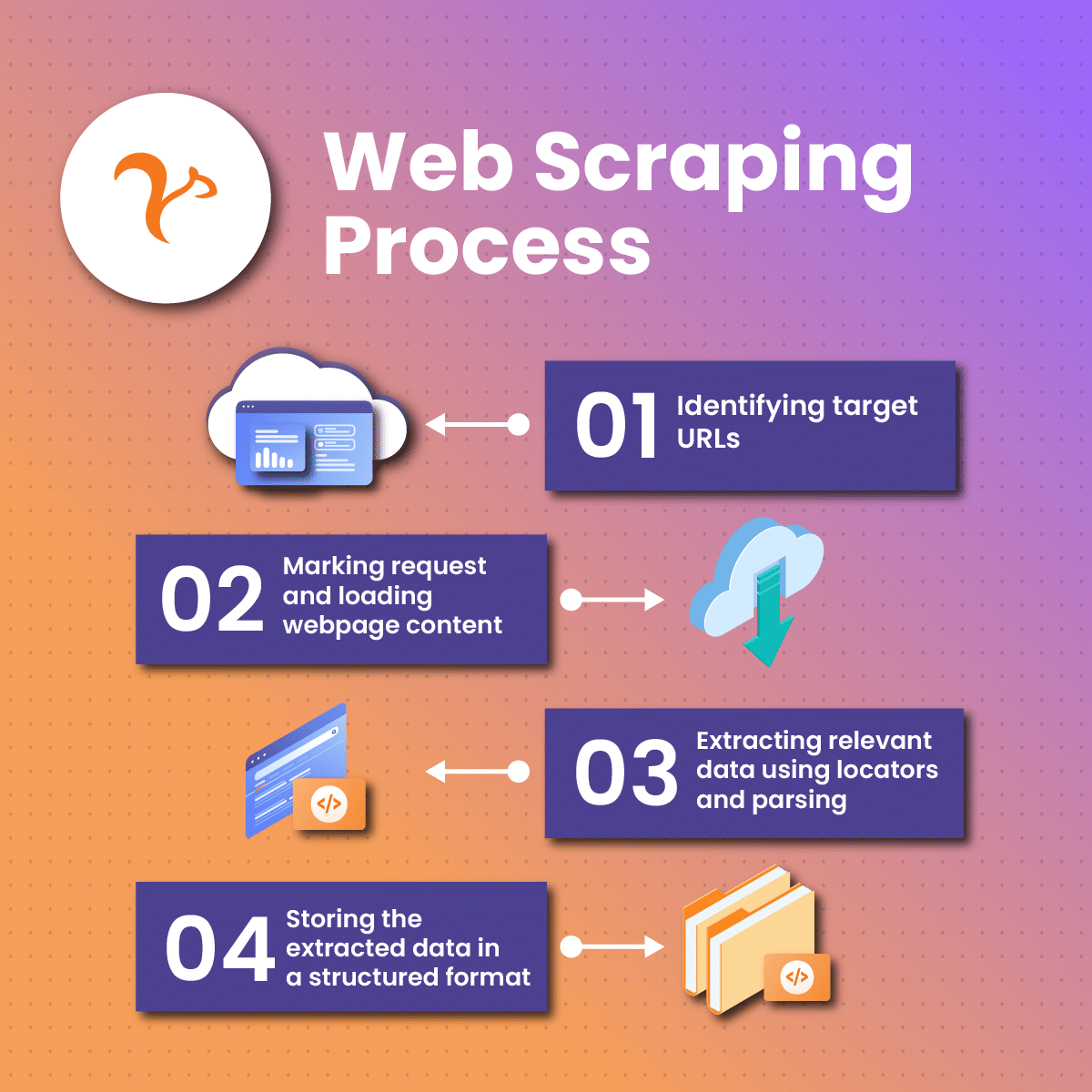

How Does Web Scraping Work?

Web scraping works by using automated tools or scripts to access websites and extract data. The process typically involves several steps: first, the scraper sends an HTTP request to the target website, fetching the HTML content of the page. Next, the scraper parses the HTML, identifying and extracting the relevant data points using predefined rules or patterns. The extracted data is then cleaned and structured into a usable format, such as CSV or JSON. Finally, this data can be analyzed or integrated into databases and applications for further use. Web scraping allows businesses to efficiently collect and utilize vast amounts of web-based information for various strategic purposes.

The precise methods may differ based on the web scraping tool or software type. However, extracting data from websites usually follows these three basic processes.

Sending an HTTP request to the website

When you attempt to visit a website, your browser sends an HTTP request. The response determines whether you can access the content of the website. Consider an analogy: when you visit someone, you knock on the door to announce your presence. This is similar to making a request to a website to access its content. The owners of the house may open the door and let you in, or they may decide to ignore you.

Similarly, when the website approves your request, you can access the content on the page. On the other hand, you may receive an error response if the website does not grant you access for various reasons. Therefore, the first step of web scraping is making an HTTP request to the website you want to access.
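
To make this step concrete, here is a minimal sketch using Python's standard `urllib.request` module. The `User-Agent` value, the helper names `build_request` and `fetch`, and the 10-second timeout are illustrative assumptions, not part of any particular scraping tool. An error status such as 403 (Forbidden) or 429 (Too Many Requests) is returned rather than raised, mirroring the case where the website does not open the door.

```python
from __future__ import annotations

import urllib.error
import urllib.request

# Hypothetical identifier; use a User-Agent that honestly identifies your scraper.
USER_AGENT = "example-scraper/0.1 (+https://example.com/contact)"


def build_request(url: str) -> urllib.request.Request:
    """Prepare a GET request that carries a descriptive User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": USER_AGENT})


def fetch(url: str, timeout: float = 10.0) -> tuple[int, str]:
    """Send the request and return (HTTP status code, decoded body).

    HTTP error statuses are returned with an empty body instead of raising.
    Network failures (DNS errors, refused connections) still raise URLError.
    """
    try:
        with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
            charset = response.headers.get_content_charset() or "utf-8"
            return response.status, response.read().decode(charset, errors="replace")
    except urllib.error.HTTPError as err:
        return err.code, ""


# Usage (requires network access):
# status, html = fetch("https://example.com/")
```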

Extract and parse the website’s code

Once the website grants access to its content, the next step of web scraping is extracting and parsing the website’s code. The code allows the web scraper to determine the website’s structure. The scraper then parses the code, breaking it down into smaller parts to identify and retrieve the elements and objects predefined in the web scraping code. These may include tags, classes, IDs, ratings, text, or other information you want to collect.
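
Parsing can be sketched with Python's built-in `html.parser` module. The `ClassTextExtractor` class below is a hypothetical helper that collects the text inside every element carrying a given class, such as `price`. It assumes reasonably well-formed markup; real scrapers often use a more forgiving library such as BeautifulSoup.

```python
from __future__ import annotations

from html.parser import HTMLParser

# Void elements never get a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text of every element whose class attribute contains target_class."""

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.depth = 0                 # > 0 while inside a matching element
        self.current: list[str] = []   # text fragments of the current match
        self.results: list[str] = []   # one string per matching element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self.depth:
            self.depth += 1            # a nested tag inside the match
            return
        classes = (dict(attrs).get("class") or "").split()
        if self.target_class in classes:
            self.depth = 1
            self.current = []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self.depth:
            return
        self.depth -= 1
        if self.depth == 0:            # the matching element just closed
            self.results.append("".join(self.current).strip())

    def handle_data(self, data):
        if self.depth:
            self.current.append(data)


html = """
<ul>
  <li class="product"><span class="name">Desk lamp</span> <span class="price">$24.99</span></li>
  <li class="product"><span class="name">Office chair</span> <span class="price">$149.00</span></li>
</ul>
"""
extractor = ClassTextExtractor("price")
extractor.feed(html)
print(extractor.results)  # ['$24.99', '$149.00']
```

Tracking nesting depth, rather than just a flag, lets the extractor keep text from tags nested inside the matched element.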

Save data in a preferred format

The last step is saving the data locally. Which data is extracted depends on the instructions you give the web scraping tool. The extracted data is usually stored in a structured format, such as CSV or XLS.
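
As a minimal sketch of this step, Python's standard `csv` module can turn scraped records into CSV text that spreadsheet software opens directly. The helper names and the record fields (`name`, `price`) are illustrative assumptions; writing XLS would require a third-party library and is not shown.

```python
from __future__ import annotations

import csv
import io
from pathlib import Path


def rows_to_csv(rows: list[dict]) -> str:
    """Serialize scraped records (dicts sharing the same keys) to CSV text."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)  # values containing commas are quoted automatically
    return buffer.getvalue()


def save_csv(rows: list[dict], path: Path) -> None:
    """Save records locally; newline='' keeps the csv module's own line endings."""
    with path.open("w", newline="", encoding="utf-8") as f:
        f.write(rows_to_csv(rows))


# Usage:
# save_csv([{"name": "Desk lamp", "price": "24.99"}], Path("products.csv"))
```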

The steps above provide a brief overview of how web scraping works. They may seem simple, but they are often more complicated than they appear. Challenges can arise at any of these steps, making it difficult to extract website data.

Different Types of Web Scraping

Businesses can use different types of web scraping techniques to extract relevant data. Let us explore them to see which one is the best option for extracting web data with ease:

Manual web scraping

This is an old method of collecting data from the web. It is a simple technique that involves copying data from a web page and pasting it into your preferred location. Manual web scraping is time- and effort-intensive; hence, it is not suitable for large volumes of data. In addition, this method may not be ideal if you need regular data updates.

Manual web scraping is best when you need a small volume of data for private use. However, if you need to publish it, you must give the authors credit or rewrite it from your perspective. Failure to do this makes you guilty of plagiarism, which could significantly affect your brand reputation.

Automatic web scraping

Automatic web scraping is a method of collecting data from the web using software. Many organizations prefer this method because it is efficient, quick, and less prone to errors. Here are some techniques used in automatic web scraping:

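- DOM parsing: This technique analyzes a page through its Document Object Model (DOM), the tree of elements a browser builds from the HTML, so the scraper can navigate to specific elements

- Text pattern matching: This technique extracts text that matches a defined pattern, such as a regular expression

- Vertical aggregation: This technique uses platforms that target a specific industry (vertical) and collect large volumes of data with little or no human involvement

- HTML parsing: This technique reads a page’s HTML code to extract links and text.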
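
Text pattern matching is usually done with regular expressions. The sketch below uses Python's `re` module to pull dollar amounts out of page text; `extract_prices` is a hypothetical helper, and the `PRICE_PATTERN` expression is an illustrative assumption that handles amounts like `$1,299.00` but not other currencies or formats.

```python
from __future__ import annotations

import re

# Illustrative pattern: "$", digits with optional thousands separators, optional cents.
PRICE_PATTERN = re.compile(r"\$(\d+(?:,\d{3})*(?:\.\d{2})?)")


def extract_prices(text: str) -> list[float]:
    """Return every dollar amount found in the text, as floats."""
    # findall returns only the captured group (the number without the "$").
    return [float(amount.replace(",", "")) for amount in PRICE_PATTERN.findall(text)]


print(extract_prices("Was $1,299.00, now $999.99 (save $300)"))  # [1299.0, 999.99, 300.0]
```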

Benefits of Web Scraping

Web scraping offers numerous benefits for businesses by providing access to vast amounts of web-based data. It enables companies to gather competitive intelligence, such as monitoring competitors’ prices, product offerings, and customer reviews, which helps in making informed strategic decisions. Web scraping also facilitates market research by collecting data on industry trends and consumer behavior, providing valuable insights for product development and marketing strategies.

Additionally, it automates the data collection process, saving time and reducing the manual effort required to gather information. By leveraging web scraping, businesses can improve their decision-making, optimize operations, and gain a competitive edge in the market.

Below are a few of the most important benefits of web scraping for businesses:

Saves time

One of the advantages of web scraping is that it saves time. You don’t have to comb through various websites to obtain data, which can be time-consuming. Instead, web scraping allows you to extract a large amount of data and store it in your preferred location, often within a few minutes. Since web scraping is automated, it can retrieve data from multiple sources faster than a human.

Reliable data

Another benefit of web scraping is that it can collect accurate and reliable data. When a human is tasked with collecting data from 30 websites in 30 minutes, the work is tedious and the results may contain several errors. While humans are intelligent beings, even the most detail-oriented person can make mistakes. Sometimes, these errors can have significant consequences on business decisions.

However, with web scraping, you can avoid these errors to ensure the data you are working with is accurate and reliable. Web scraping involves algorithms that can identify required data and extract and parse them while reducing the risk of errors. In addition, web scraping can extract web data consistently to ensure uniformity and reliability.

Customization and flexibility

Web scrapers are versatile and have various uses. You can customize them to suit your data extraction needs and maximize their efficiency. This flexibility lets you collect exactly the data you need within a short period.

Automating repetitive tasks

Businesses need up-to-date data to stay ahead of the competition, and web scraping is an excellent way to keep your data current. Since it is an automated process, it frees up your time so you can direct your effort to other activities. In addition, you can build custom web scrapers with a programming language like Python or JavaScript, or use web scraping software, to extract data automatically at a predefined frequency.
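
A minimal in-process sketch of running a scraper at a predefined frequency is shown below. `run_on_schedule` and `scrape_prices` are hypothetical names, and the job you pass in is up to you; in production, a system scheduler such as cron is often a more robust choice.

```python
from __future__ import annotations

import time
from typing import Callable


def run_on_schedule(job: Callable[[], None], interval_seconds: float, runs: int) -> None:
    """Call job() `runs` times, starting each run interval_seconds after the previous start.

    Scheduling against fixed target times (rather than sleeping a fixed amount
    after each run) keeps a slow job from pushing later runs back.
    """
    next_run = time.monotonic()
    for i in range(runs):
        job()
        if i < runs - 1:  # no need to wait after the final run
            next_run += interval_seconds
            # If the job overran its slot, start the next run immediately.
            time.sleep(max(0.0, next_run - time.monotonic()))


def scrape_prices() -> None:
    """Hypothetical job; in practice this would run your scraper and save the results."""
    print("scraping prices...")


# Example: three runs, one hour apart.
# run_on_schedule(scrape_prices, interval_seconds=3600, runs=3)
```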

Speed

Another advantage of web scraping is the speed of execution. A scraping project is often completed within minutes. However, the total time depends on the complexity of the project, the tools, and the resources used for the web scraping.

In addition, web scraping has low maintenance costs, which makes it a cost-effective option. Once you initiate web scraping, data is retrieved from multiple sources. As a result, you get a large volume of data at a low cost and incredibly high speed.

Applications of Web Scraping

Web scraping has many applications across multiple industries. Let us explore some of the ways businesses utilize it:

Price monitoring

One of the most common applications of web scraping is price monitoring. Businesses extract product information and pricing from e-commerce websites, compare it with the prices of their own products, and analyze the impact on profit, which is a primary goal of any business. With this information, companies can devise dynamic pricing that increases sales as well as overall revenue.

Market research

Market research allows companies to collect data that provides insight into current trends, market pricing, optimal points of entry, and competitor monitoring. Therefore, web scraping is a critical aspect of the research and development of any organization. It provides accurate information to facilitate decision-making that could alter the direction of operations. Web scraping provides high-quality, large-volume, and insightful data from across the web for optimal market analysis.

News monitoring

A news headline can either make or mar your brand reputation. Scraping media sites provides insight into current reports about your company. This is especially important for companies that are often in the news or depend on timely news analysis. Web scraping is therefore an ideal way to monitor, gather, and analyze news from your industry.

News monitoring is critical in investment decision-making, online public sentiment analysis, and staying abreast of political trends.

Alternative data for finance

Web scraping provides alternative data for finance. Investors love to see data that indicates the safety of their money. Therefore, companies are increasingly using web scraping to get data that informs their decision-making process. They can extract insights from SEC filings and integrate public sentiments to generate value with web data tailored for investors.

Business Automation

Web scraping is critical in business automation. Imagine you want to get data related to your website or a partner website in a structured format. An easy and efficient way is to use web scrapers to identify and collect the required data. This is a practical alternative to working through internal systems that may be complicated.

Sentiment analysis

Web scraping is a way to obtain data that shows how people are reacting to a brand. You can use Social Scraper to get real-time access to social data. Sentiment analysis involves collecting data from various social media platforms. This data shows what people like or dislike about a brand’s products and services, which helps the brand create products that customers will appreciate.

Furthermore, sentiment analysis can hint at the existence of a counterfeit product. If the reviews from a particular region are highly negative, this may signal a problem that requires immediate action.

Lead generation

Lead generation is a critical marketing strategy that can help businesses thrive in this highly competitive digital era. Therefore, collecting emails from various sites using web scrapers offers a competitive advantage. Subsequently, brands can send promotional emails to help them generate website traffic.

Choosing a Tool for Web Scraping

A web scraping tool is a program built to extract predefined data from the web. Often called a web scraper, it sends HTTP requests to target websites and extracts relevant data. Subsequently, it parses the data and stores it on your computer.

Web scraper providers offer different features and levels of flexibility, so the scraper you choose plays a significant role in the output. Here are some factors to consider before choosing a web scraping service provider:

Handling anti-scraping measures

One of the primary factors to consider before choosing a web scraper is how efficiently it handles anti-scraping measures. Websites are constantly upgrading their anti-scraping technology. Therefore, it is essential that the web scraper can integrate with proxies to unblock websites. In addition, the web scraper should be able to work with a website’s API where one is available.

Data quality and delivery

Web data is often unstructured, messy, and difficult to work with, which can lead to errors. Therefore, look for web scrapers that clean and sort data before returning it to your computer.

Most web scrapers return data in JSON, CSV, SQL, or XML formats. The CSV format is very common because it is compatible with Microsoft Excel. JSON is easy for machines to parse and simple for humans to read. Therefore, instead of manually converting the raw data, choose a tool that can deliver data in a suitable format.
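
For comparison with CSV, Python's standard `json` module can serialize the same kind of records. Unlike CSV, where every value is text, JSON keeps numbers, booleans, and nested lists intact. The helper name `records_to_json` and the sample fields are illustrative assumptions.

```python
from __future__ import annotations

import json


def records_to_json(records: list[dict]) -> str:
    """Serialize scraped records as indented JSON text."""
    # ensure_ascii=False keeps non-ASCII characters (e.g. "é") readable instead of \u escapes.
    return json.dumps(records, indent=2, ensure_ascii=False)


records = [{"name": "Café table", "price": 89.5, "in_stock": True, "tags": ["furniture", "dining"]}]
text = records_to_json(records)
# json.loads(text) gives back the same structure: 89.5 is still a float, True still a bool.
```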

Pricing

Another important factor to consider is pricing. Many people choose a web scraper on affordability alone without paying attention to its performance. Weigh both, and review the pricing scheme carefully to ensure it is transparent and free from hidden fees. Some web scraping service providers offer a trial period to help you determine if their services are suitable for your activities.

Customer support

Regardless of a web scraper’s features, excellent customer support is a priority. You will not always be able to solve every issue on your own, which is where customer support comes into play. Therefore, prioritize a provider that offers reliable customer support and resources.

Challenges Associated with Web Scraping

Web scraping comes with several challenges, especially when you frequently need large volumes of data. Therefore, it is important to understand these challenges and how they can affect your web data extraction efforts. Some of these challenges include:

IP Address Blocks

IP blocks are one of the most common challenges with web scraping. When you send too many requests to a website within a short period, your IP address can be blocked. Subsequently, this halts your web-scraping activities and may put you in a difficult situation if you need timely access to data.

Your IP address can also be blocked due to a geographical restriction on the website. In addition, using an unreliable proxy IP can trigger the website to ban or block your IP address.

However, you can mitigate IP blocks by using a reputable proxy provider like NetNut. In addition, it is beneficial to follow the website’s terms of service and put delays between requests to avoid flooding the site with requests.
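
Putting delays between requests can be as simple as sleeping for a random interval before each new request. In this sketch, `polite_crawl` is a hypothetical helper; the page-downloading function is passed in as `fetch` so any HTTP client can be plugged in, and the 1 to 3 second default delay is an assumption to tune per site (a site's robots.txt may also specify a crawl delay).

```python
from __future__ import annotations

import random
import time
from typing import Callable, Iterable


def polite_crawl(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    min_delay: float = 1.0,
    max_delay: float = 3.0,
) -> dict[str, str]:
    """Fetch each URL in turn, pausing a random min_delay..max_delay seconds between requests."""
    pages: dict[str, str] = {}
    for i, url in enumerate(urls):
        if i > 0:
            # A randomized pause spreads the load and looks less mechanical than a fixed interval.
            time.sleep(random.uniform(min_delay, max_delay))
        pages[url] = fetch(url)
    return pages
```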

CAPTCHA

Completely Automated Public Turing test to tell Computers and Humans Apart, often called CAPTCHA, is a common security measure websites use to restrict web scraping activities. CAPTCHA requires manual interaction to solve a puzzle before accessing specific content. It could be in the form of text puzzles, image recognition, or analysis of user behavior.

One solution is to integrate CAPTCHA solvers into your web scraping code. However, this may slow down web data extraction. Using NetNut proxies is a secure and reliable way to reduce how often you encounter CAPTCHAs.

Browser Fingerprinting

Browser fingerprinting is a technique that collects and analyzes details about your web browser to produce a unique identifier for tracking users. These details may include fonts, screen resolution, keyboard layout, user agent string, cookie settings, browser extensions, and more. The technique combines these small data points into a larger set to generate a unique digital fingerprint.

Bear in mind that clearing your browser history and resetting cookies will not change your digital fingerprint. As a result, a website can often recognize a specific user each time they visit.

This technique is used to optimize website security and provide a personalized experience. However, it can also identify web scrapers with their unique fingerprint.

To prevent browser fingerprinting from interfering with your web data extraction, you can use headless browsers or stealth plugins.

Dynamic Content

Web scraping primarily involves analyzing the HTML source code. However, modern websites are often dynamic, which poses a challenge to web scraping. For example, some websites use client-side rendering technologies such as JavaScript to create dynamic content.

Many modern websites use JavaScript and AJAX to load content after the initial HTML has loaded, so the data you need may not appear in the page source. In such cases, you need a headless browser to render the page before you can extract and parse the required data. Browser automation tools like Selenium, Puppeteer, and Playwright can drive headless browsers to optimize this process.

Website Structure

Many websites undergo routine structural changes to optimize layout, design, and features for a better user experience. However, these changes can become a stumbling block to web data extraction. You may get incomplete data or an error response if these changes are not incorporated into the web scraper.

To keep up with these changes, update your scraper’s parsing rules when a site’s layout changes; a parsing library such as BeautifulSoup makes it easier to extract and parse data and to adjust those rules. Alternatively, you can use AI tools like NetNut Scraper API to get complete data from websites that constantly change their structure.

Scalability

Scalability is another challenge regarding web data extraction. Businesses require a huge amount of data to make informed decisions that help them stay competitive in the industry. Therefore, quickly gathering lots of data from various sources becomes paramount.

However, web pages may fail to respond if they receive too many requests. A human user can simply refresh the page when this happens. A scraper, by contrast, is often significantly affected when a website responds slowly, because it may not be programmed to handle such situations. As a result, you may need to restart the web scraper manually.
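
One common way to keep a scraper from stalling on a slow or unresponsive page is to retry with exponential backoff, waiting longer after each failure. The `with_retries` helper below is a hypothetical sketch; which exceptions count as transient (here `TimeoutError` and `ConnectionError`) and the delay values are assumptions you would adapt to your HTTP client.

```python
from __future__ import annotations

import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    task: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (TimeoutError, ConnectionError),
) -> T:
    """Run task(); on a transient error, wait base_delay * 2**n seconds and try again."""
    for attempt in range(attempts):
        try:
            return task()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the last error
            time.sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... with the defaults
    raise ValueError("attempts must be at least 1")
```

Wrapping a download as `with_retries(lambda: download(url))`, where `download` stands for whatever function fetches a page, restarts the request automatically instead of requiring a manual restart.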

Rate Limiting

Another challenge to web scraping is rate limiting, the practice of limiting the number of requests a client can make within a given period. Many websites implement this technique to protect their servers from large volumes of requests that may cause lagging or, in the worst case, a crash.

Rate limiting slows down web data extraction and reduces the efficiency of your scraping operations, which can be frustrating when you need a large amount of data in a short period.

You can bypass rate limits with proxy servers. Rotating proxies send your requests through different IP addresses, so the website does not identify them as coming from a single source.
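
Alongside proxies, a scraper can also throttle itself so it stays under a site's limit in the first place. The `RateLimiter` class below is a hypothetical sliding-window sketch: it allows at most `max_requests` requests in any rolling window of `period` seconds and sleeps when that budget is used up. The limit values are assumptions, since sites rarely publish their exact limits.

```python
from __future__ import annotations

import time
from collections import deque


class RateLimiter:
    """Allow at most max_requests calls to wait() in any rolling window of `period` seconds."""

    def __init__(self, max_requests: int, period: float) -> None:
        self.max_requests = max_requests
        self.period = period
        self.timestamps: deque[float] = deque()  # start times of recent requests

    def wait(self) -> None:
        """Block until another request is allowed, then record it."""
        now = time.monotonic()
        # Forget requests that have left the window.
        while self.timestamps and now - self.timestamps[0] >= self.period:
            self.timestamps.popleft()
        if len(self.timestamps) >= self.max_requests:
            # Sleep until the oldest request in the window expires.
            time.sleep(self.period - (now - self.timestamps[0]))
            self.timestamps.popleft()
        self.timestamps.append(time.monotonic())


# Usage: call limiter.wait() right before every request.
# limiter = RateLimiter(max_requests=10, period=60.0)
```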

Best Tips for Web Scraping

In this part of the guide, we share some tips to optimize web data extraction and keep your activities ethical and legal. They include:

Refine your target data

Web scraping involves coding; hence, it is crucial to be very specific with the type of data you want to collect from the web. If your instructions are too vague, there is a high chance that your web scraper may return too much data. Therefore, investing some time to develop a clear and concise plan for your web data extraction activities is best. Refining your target data ensures you spend less time and resources cleaning the data you collected.

Read the website’s robots.txt file

Before you dive into web scraping, read the website’s robots.txt file. It tells you which pages crawlers may access and which they should avoid, and this information should guide how you write the code for your web data extraction. For example, a robots.txt file may indicate that scraping content from a certain page is not allowed. Ignoring these instructions is widely considered unethical and may expose you to legal risk.
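
Python's standard library includes `urllib.robotparser` for reading robots.txt rules. In this sketch the rules are inlined so it runs offline, and `example-scraper` is a hypothetical user agent name; against a live site you would call `set_url()` with the site's robots.txt URL and then `read()`.

```python
from urllib.robotparser import RobotFileParser

# Example rules, inlined so this sketch runs offline. For a live site you would use:
#   parser.set_url("https://example.com/robots.txt"); parser.read()
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "example-scraper"  # hypothetical name for your scraper

print(parser.can_fetch(USER_AGENT, "https://example.com/products/lamp"))  # True
print(parser.can_fetch(USER_AGENT, "https://example.com/checkout/cart"))  # False
print(parser.crawl_delay(USER_AGENT))  # 5 (seconds to wait between requests)
```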

Terms and conditions/ web page policies

Reading the robots.txt file is great, but you can take it a step further by reviewing the website’s policies or terms and conditions. Many people overlook the policy pages because they assume the pages align with the robots.txt file. However, they may contain additional information relevant to your web data extraction activities.

Sometimes, the terms and conditions may have a section that clearly outlines what data you can collect from their web page. Therefore, there may be legal consequences if you do not follow these instructions in your web data extraction.

Pay attention to data protection protocols

Although some data are publicly available, collecting and using them may incur legal consequences. Therefore, before you proceed with web data collection, pay attention to data protection laws.

These laws may differ according to your location and the type of data you want to collect. For example, those in the European Union must abide by the General Data Protection Regulation (GDPR), which restricts the collection and processing of personal information. In general, using web scrapers to gather people’s identifying data without a lawful basis, such as their consent, is against the law.

Avoid sending too many requests

There are two primary dangers of sending too many requests to a website. First, the site may become slow, malfunction, or even crash. Second, the website’s anti-scraping measures may be triggered, and your IP address may be blocked.

Big websites like Amazon and Google are built to handle high traffic. On the other hand, smaller sites may not function well with high traffic. Therefore, your scraping activities must not overload the page with too many requests that can cause issues.

We recommend that you avoid aggressive scraping. Instead, leave enough time between requests so that neither the website nor your scraper suffers. In addition, avoid scraping a website during its peak hours.

Use proxy servers

Another essential tip for effective web scraping is the use of proxy servers. A major challenge in web scraping is blocked or banned IPs. You can evade this problem by using proxies to hide your IP address. Rotating proxies distribute your scraping requests across various IP addresses and locations. In addition, proxies help to maintain anonymity and optimize security during web scraping.
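
Client-side proxy rotation can be sketched with `urllib.request.ProxyHandler` and a round-robin cycle. The proxy URLs and credentials below are placeholders, not real endpoints, and `next_opener` is a hypothetical helper. Some providers of rotating residential proxies rotate IPs behind a single gateway address, in which case the client only needs one proxy URL.

```python
from __future__ import annotations

import itertools
import urllib.request

# Placeholder endpoints: substitute the proxy URLs and credentials from your provider.
PROXIES = [
    "http://user:pass@proxy1.example.net:8080",
    "http://user:pass@proxy2.example.net:8080",
    "http://user:pass@proxy3.example.net:8080",
]
_proxy_cycle = itertools.cycle(PROXIES)


def next_opener() -> tuple[str, urllib.request.OpenerDirector]:
    """Pick the next proxy in round-robin order and build an opener that routes through it."""
    proxy = next(_proxy_cycle)
    # Route both plain HTTP and HTTPS traffic through the chosen proxy.
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


# Usage (needs real proxies and network access):
# proxy, opener = next_opener()
# with opener.open("https://example.com/", timeout=10) as response:
#     html = response.read()
```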

Optimizing Web Scraping with NetNut

As mentioned earlier, one tip for optimizing web scraping is using proxies. While several free proxies are available, you don’t want to sacrifice functionality for cost. Therefore, it is critical to choose an industry-leading proxy provider like NetNut.

NetNut has an extensive network of over 52 million rotating residential proxies in 195 countries and over 250,000 mobile IPs in over 100 countries, which helps it provide exceptional data collection services.

NetNut offers various proxy solutions designed to overcome the challenges of web scraping. In addition, the proxies promote privacy and security while extracting data from the web.

NetNut rotating residential proxies are your automated proxy solution that ensures you can access websites despite geographic restrictions. Therefore, you get access to real-time data from all over the world that optimizes decision-making.

Alternatively, you can use our in-house solution, NetNut Scraper API, to access websites and collect data. Moreover, if you need customized web scraping solutions, you can use NetNut’s Mobile Proxy.

Final Thoughts on Web Scraping

This guide has explored the concept of web scraping and how it can be used to collect various types of data from the web, including text, videos, images, and more. Web scraping has applications across many industries, with use cases such as sentiment analysis, market research, lead generation, and more.

Web scraping is a fast and reliable way to get accurate and complete data from the web. In addition, it saves time and helps to automate repetitive tasks. Websites, meanwhile, are constantly upgrading their anti-scraping measures, such as rate limiting, IP bans, CAPTCHAs, and browser fingerprinting.

However, NetNut proxies offer a unique, customizable, and flexible solution to these challenges. In addition, we provide transparent and competitive pricing for all our services. A customer representative is always available to answer your questions.

Kindly contact us if you have any further questions!

Frequently Asked Questions About Web Scraping

What type of data is obtainable with web scraping?

In theory, all the data on a website can be collected via web scraping. The popular types of data that businesses often target are:

- Text

- Images

- Product description

- Reviews

- Videos

- Pricing from competitor websites

- Customer sentiment from sites like Yell, Twitter, Facebook

Is web scraping legal?

Scraping publicly available data is generally legal, but you need to follow some rules to avoid crossing the line. Web scraping for malicious purposes, however, can be illegal. For example, web scraping can become illegal when the aim is to extract data that is not publicly available.

Therefore, before you dive into web scraping, understand the policies of the web page. In addition, get familiar with any laws in your region, state, or country that deal with collecting data from websites.

What are the types of web scrapers?

There are different types of web scrapers based on how they collect and parse web content. They include:

- Basic scrapers, such as HTML scrapers and text scrapers

- Advanced scrapers, such as API scrapers and dynamic content scrapers

- Special-purpose scrapers for e-commerce, social media, real estate, etc.

- Image and media scrapers

- Monitoring and alert scrapers

- Custom-built scrapers