Introduction

With the introduction of websites, blogs, social media, and other online platforms, users found themselves drowning in a sea of information. Traditional search engines, while powerful, often struggled to discern the user’s intent accurately, and the demand for more efficient and intelligent information retrieval became increasingly evident.

As that demand intensified, the limitations of traditional web crawlers became clear. Conventional crawlers were efficient at extracting data from websites but struggled to understand the context, meaning, and relationships embedded within the content. The introduction of AI web crawlers marked a paradigm shift.

An AI web crawler, driven by sophisticated artificial intelligence algorithms, brings a new level of intelligence to the process of web crawling. These algorithms go beyond mere data fetching; they can comprehend the intricacies of language, discern context, and extract meaningful insights from the vast expanse of digital information. This not only improves the accuracy of search results but also opens the door to enhanced AI web scraping activities.

In the subsequent sections, we will explore the workings, benefits, challenges, and prospects of AI web crawlers.

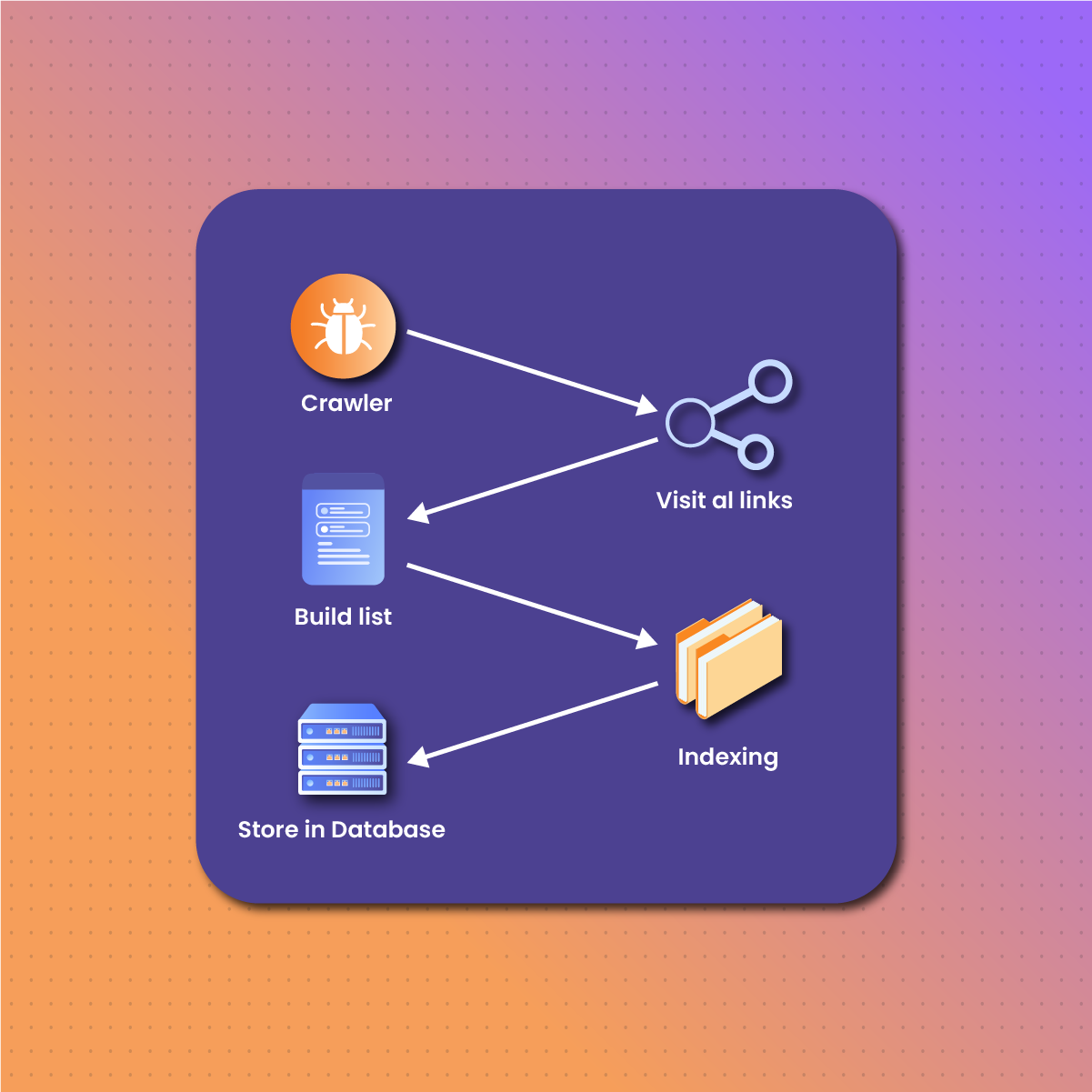

How Does an AI Web Crawler Work?

The AI web crawler workflow is a sophisticated and multi-step process designed to navigate the vast landscape of the internet, gather information, and deliver relevant results. This advanced process is powered by artificial intelligence algorithms that enable the web crawler to go beyond traditional methods and understand context, semantics, and user intent. Here’s an overview of the key stages in the AI web crawler workflow:

Seed URLs and Initial Fetching

The journey of an AI web crawler begins with a set of seed URLs – the starting points for its exploration of the internet. Unlike traditional web crawlers that may fetch data indiscriminately, AI web crawlers leverage advanced algorithms to prioritize URLs based on their perceived relevance. This prioritization involves assessing factors such as the popularity of a page, its authority, and its historical significance. By focusing on the most relevant URLs, the AI web crawler optimizes its efficiency, ensuring that the information gathered aligns with user expectations.
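The prioritization described above can be sketched as a priority queue over candidate URLs. This is a minimal illustration, not how any particular crawler is implemented: the `UrlFrontier` class, the `relevance_score` function, the weights, and the example URLs are all assumptions made for this sketch, and each signal is assumed to be pre-computed on a 0 to 1 scale.

```python
import heapq

# Hypothetical weights for the three factors named above; a real crawler
# would tune or learn these values.
WEIGHTS = {"popularity": 0.5, "authority": 0.3, "history": 0.2}


def relevance_score(popularity, authority, history):
    """Combine the three signals (each assumed to be in [0, 1]) into one score."""
    return (WEIGHTS["popularity"] * popularity
            + WEIGHTS["authority"] * authority
            + WEIGHTS["history"] * history)


class UrlFrontier:
    """Priority queue of URLs; the highest-scoring URL is fetched first."""

    def __init__(self):
        self._heap = []      # entries are (-score, insertion_order, url)
        self._seen = set()   # avoids queueing the same URL twice
        self._counter = 0    # tie-breaker so equal scores pop in insertion order

    def add(self, url, popularity, authority, history):
        if url in self._seen:
            return
        self._seen.add(url)
        score = relevance_score(popularity, authority, history)
        # heapq is a min-heap, so negate the score to pop the largest first.
        heapq.heappush(self._heap, (-score, self._counter, url))
        self._counter += 1

    def pop(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)


frontier = UrlFrontier()
frontier.add("https://example.com/news", 0.9, 0.8, 0.5)
frontier.add("https://example.com/archive", 0.2, 0.1, 0.1)
frontier.add("https://example.com/reference", 0.6, 0.9, 0.9)

# Drain the frontier to see the order in which pages would be fetched.
crawl_order = [frontier.pop() for _ in range(len(frontier))]
print(crawl_order)
```

With these example numbers, the news page is fetched first, the reference page second, and the archive page last.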

Utilization of NLP Techniques for Deeper Understanding

Once the AI web crawler fetches data from a selected URL, it doesn’t stop at a surface-level analysis. Instead, it delves into the realm of natural language processing (NLP), a branch of artificial intelligence that enables machines to understand and interpret human language.

By employing NLP techniques, such as part-of-speech tagging, named entity recognition, and sentiment analysis, the crawler extracts meaningful information from the textual content. This goes beyond mere keyword extraction, allowing the crawler to comprehend the context, relationships, and nuances embedded within the data.

The utilization of NLP not only enhances the accuracy of content extraction but also contributes to a more sophisticated understanding of the information. This deeper comprehension sets the stage for the next crucial steps in the AI web crawler workflow.
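To make two of these techniques concrete, here is a deliberately simplified sketch using only the Python standard library. It is a toy stand-in, not a real NLP pipeline: the word lists, the capitalization-based entity heuristic, and the function names are assumptions for illustration, and a production crawler would more likely rely on a trained model from a dedicated NLP library.

```python
import re

# Tiny hypothetical lexicons; real sentiment models are trained, not hand-listed.
POSITIVE_WORDS = {"fast", "reliable", "excellent", "accurate"}
NEGATIVE_WORDS = {"slow", "broken", "poor", "misleading"}

# Naive heuristic: two or more consecutive capitalized words are treated
# as a candidate named entity (for example "Acme Corp").
ENTITY_PATTERN = re.compile(r"\b[A-Z][a-z]+(?:\s[A-Z][a-z]+)+\b")


def sentiment_score(text):
    """Return (# positive words) - (# negative words) found in the text."""
    words = re.findall(r"[a-z']+", text.lower())
    positive = sum(word in POSITIVE_WORDS for word in words)
    negative = sum(word in NEGATIVE_WORDS for word in words)
    return positive - negative


def candidate_entities(text):
    """Return multi-word capitalized phrases as candidate entities."""
    return ENTITY_PATTERN.findall(text)


page_text = ("The crawler found that Acme Corp offers fast and reliable "
             "hosting in New York.")
print(candidate_entities(page_text))  # ['Acme Corp', 'New York']
print(sentiment_score(page_text))     # 2
```

The heuristic misses single-word entities and is fooled by title-cased headings, which is exactly the kind of gap that trained named entity recognition is meant to close.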

Indexing and Storage

With the extracted and analyzed information in hand, the AI web crawler moves on to the indexing and storage phase. Unlike traditional indexing based solely on keywords, AI web crawlers create structured databases that capture the semantic relationships within the content. This structured storage facilitates more efficient retrieval of information during subsequent searches.

The indexing process involves categorizing and organizing the extracted data in a way that allows for quick and accurate retrieval. By creating a structured index, the AI web crawler ensures that relevant information is readily available, contributing to a faster and more responsive search experience.
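One way to picture such a structured index is an inverted keyword index stored alongside a small table of relationships between entities. The sketch below assumes the relationships have already been extracted as (subject, relation, object) triples; the `SemanticIndex` class, its method names, and the sample documents are hypothetical.

```python
import re
from collections import defaultdict


class SemanticIndex:
    """Keyword index plus a store of (subject, relation, object) triples."""

    def __init__(self):
        self._terms = defaultdict(set)       # term -> set of document ids
        self._relations = defaultdict(list)  # subject -> [(relation, object, doc_id)]

    def add_document(self, doc_id, text, relations=()):
        # Classic inverted index: every term points back to its documents.
        for term in re.findall(r"[a-z0-9]+", text.lower()):
            self._terms[term].add(doc_id)
        # Relationship store: keeps the links between entities found in the page.
        for subject, relation, obj in relations:
            self._relations[subject.lower()].append((relation, obj, doc_id))

    def search(self, query):
        """Return ids of documents that contain every term in the query."""
        terms = re.findall(r"[a-z0-9]+", query.lower())
        if not terms:
            return set()
        result = set(self._terms.get(terms[0], set()))
        for term in terms[1:]:
            result &= self._terms.get(term, set())
        return result

    def related(self, subject):
        """Return the stored relationships for an entity."""
        return list(self._relations.get(subject.lower(), []))


index = SemanticIndex()
index.add_document(
    "doc1",
    "Acme Corp opens a data center in Berlin",
    relations=[("Acme Corp", "located_in", "Berlin")],
)
index.add_document("doc2", "Berlin hosts a web crawling conference")

print(index.search("berlin"))        # both documents
print(index.search("berlin data"))   # only doc1
print(index.related("acme corp"))    # [('located_in', 'Berlin', 'doc1')]
```

Keeping the relationships separate from the keyword postings lets a query such as "where is Acme Corp located" be answered from the triples rather than from keyword overlap alone.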

Adaptive Algorithms Based on User Interactions

One of the distinguishing features of AI web crawlers is their ability to learn and adapt based on user interactions. As users engage with the search results – clicking, spending time on pages, or refining their queries – the AI web crawler employs adaptive algorithms. These algorithms analyze user behavior to discern patterns and preferences, allowing the crawler to refine its understanding of relevance.
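A simple way to model this feedback loop is to nudge a page's relevance score toward an interaction signal each time a user sees it in the results. The sketch below uses an exponential moving average; the signal values, the 30-second dwell threshold, and the learning rate are assumptions chosen for illustration, not values taken from any real system.

```python
def interaction_signal(clicked, dwell_seconds, refined_query):
    """Map one user interaction to a value in [0, 1] (hypothetical scale)."""
    if not clicked:
        return 0.0   # result was shown but ignored
    if refined_query:
        return 0.25  # clicked, but the user then rephrased the search
    return 1.0 if dwell_seconds >= 30 else 0.5


def update_relevance(current, signal, learning_rate=0.2):
    """Move the stored score a fraction of the way toward the new signal."""
    return (1 - learning_rate) * current + learning_rate * signal


score = 0.5
interactions = [
    (True, 45, False),   # clicked and stayed on the page
    (True, 5, False),    # clicked but left quickly
    (False, 0, False),   # not clicked
]
for clicked, dwell_seconds, refined_query in interactions:
    score = update_relevance(
        score, interaction_signal(clicked, dwell_seconds, refined_query)
    )

print(round(score, 3))  # 0.464
```

A small learning rate keeps one unusual session from swinging the score, while repeated behavior still shifts it over time.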

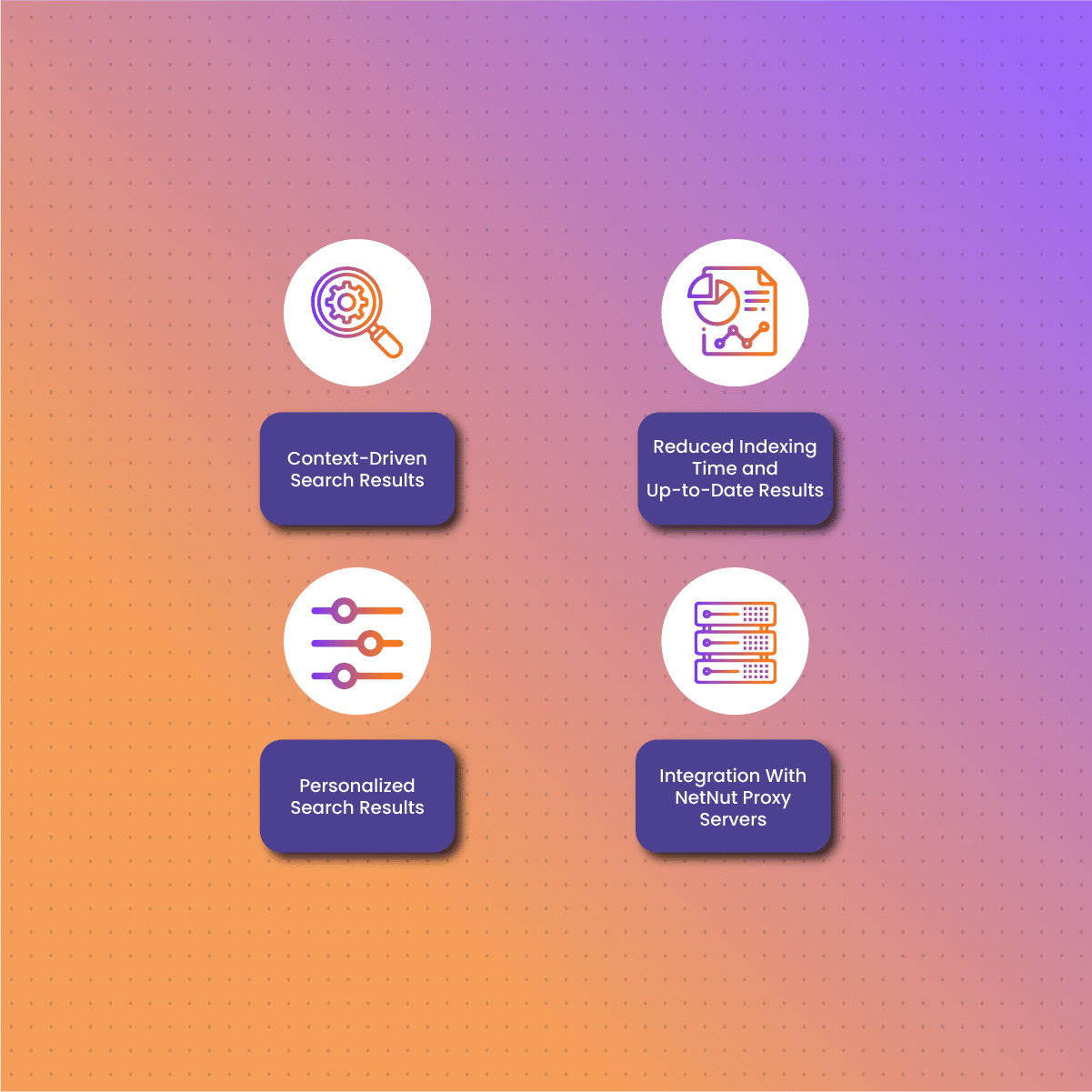

Benefits of AI Web Crawler

The benefits of an AI web crawler are extensive and transformative, representing a significant leap in the field of information retrieval. Here are the key advantages:

Context-Driven Search Results

The hallmark of AI web crawlers lies in their ability to deliver search results that go beyond mere keyword matching. Through their contextual understanding capabilities, these crawlers ensure that the results are driven by the context of the user’s query. Traditional search engines may retrieve pages based on the presence of specific keywords, but AI web crawlers take it a step further. They interpret the semantics of the content, discern the relationships between words, and understand the broader context in which information is presented.

This contextual awareness leads to search results that are more relevant and meaningful to the user. Whether it’s understanding the intent behind a question, recognizing synonyms, or identifying related concepts, an AI web crawler excels in providing a deeper level of relevance. As a result, users experience a more refined and accurate search experience, finding information that aligns more closely with their needs and expectations.

Reduced Indexing Time and Up-to-Date Results

The efficiency of AI web crawlers is a game-changer in information retrieval. Unlike traditional crawlers that may take considerable time to index new content, AI web crawlers operate with reduced indexing times. The machine learning capabilities embedded in these crawlers enable them to prioritize and fetch data more efficiently, ensuring that the search engine remains up-to-date with the latest information on the internet.

The accelerated indexing process not only benefits users by providing more current results but also contributes to the overall responsiveness of the search engine. As the AI web crawler continuously learns from user interactions and refines its algorithms, the efficiency gains become even more pronounced over time. Users experience a search engine that is not only fast but also adaptive to the evolving landscape of the web.

Personalized Search Results

One of the most compelling advantages of an AI web crawler is its ability to deliver personalized search results. Through continuous learning from user interactions, these crawlers adapt to individual preferences, tailoring search results to match the unique needs and interests of each user.

The customization goes beyond surface-level personalization. An AI web crawler considers factors such as search history, click patterns, and even the semantics of previous queries to anticipate and understand user intent. This level of personalization enhances the overall search experience, making it more intuitive and user-centric.
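As a rough sketch of how click patterns might feed into ranking, the example below builds an interest profile from the topics a user has clicked on and uses it to boost matching results. The topic labels, the `boost` factor, and both function names are hypothetical, and the sketch assumes each result already carries a base relevance score and a topic label.

```python
from collections import Counter


def build_profile(clicked_topics):
    """Turn a click history into topic weights that sum to 1."""
    counts = Counter(clicked_topics)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {topic: count / total for topic, count in counts.items()}


def personalize(results, profile, boost=0.5):
    """Re-rank (url, base_score, topic) tuples using the user's profile."""
    def adjusted(result):
        _url, base_score, topic = result
        return base_score + boost * profile.get(topic, 0.0)
    return sorted(results, key=adjusted, reverse=True)


profile = build_profile(["python", "python", "travel", "python"])
results = [
    ("https://example.com/snake-care", 0.70, "pets"),
    ("https://example.com/python-tutorial", 0.60, "python"),
    ("https://example.com/city-guide", 0.55, "travel"),
]

ranked_urls = [url for url, _score, _topic in personalize(results, profile)]
print(ranked_urls)
```

For this user, the programming tutorial moves ahead of the pet-care page even though its base score is lower, because most of the recorded clicks were on the "python" topic.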

Integration With NetNut Proxy Servers

NetNut is a proxy service provider that offers residential proxies for various online activities, including web crawling, data mining, and online anonymity. Integrating NetNut with an AI web crawler is a game-changer for information retrieval.

NetNut’s Proxy allows for a straightforward connection, enabling AI web crawlers to harness the power of a diverse and reliable network of residential proxies. This integration optimizes the crawling process, ensuring efficiency and accuracy in data extraction.

The diversified residential proxies provided by NetNut, including mobile proxies, contribute to improved reliability, reducing the chances of encountering blocks or restrictions during crawling. This translates to faster and more consistent data fetching, reducing indexing time and helping an AI web crawler deliver the most up-to-date and relevant search results.
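In general terms, routing a crawler's requests through a pool of proxies can look like the sketch below, which uses only Python's standard `urllib`. The hostnames, port, and credentials are placeholders rather than real NetNut endpoints, and the helper names are invented for this example; the actual connection details come from the provider's own documentation.

```python
import itertools
import urllib.request
from urllib.parse import quote


def build_proxy_url(username, password, host, port):
    """Build an authenticated proxy URL, percent-encoding the credentials."""
    return (f"http://{quote(username, safe='')}:{quote(password, safe='')}"
            f"@{host}:{port}")


def make_opener(proxy_url):
    """Create a urllib opener that sends HTTP and HTTPS traffic via the proxy."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Placeholder endpoints: replace with the values supplied by your provider.
PROXY_URLS = [
    build_proxy_url("USERNAME", "PASSWORD", "proxy-1.example.com", 8080),
    build_proxy_url("USERNAME", "PASSWORD", "proxy-2.example.com", 8080),
]
proxy_cycle = itertools.cycle(PROXY_URLS)


def fetch(url, timeout=10):
    """Fetch a page through the next proxy in the rotation."""
    opener = make_opener(next(proxy_cycle))
    with opener.open(url, timeout=timeout) as response:
        return response.read()
```

Rotating through the pool spreads requests across several exit addresses, which is the property the text credits with reducing blocks during crawling.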

AI Web Crawler: Challenges and Considerations

Some of the challenges associated with AI web crawlers are highlighted below:

Balancing Data Collection with User Privacy

While AI web crawlers offer significant advancements in information retrieval, they also raise concerns about user privacy. The process of crawling the web involves collecting vast amounts of data from various sources. Striking the right balance between effective data collection and respecting user privacy is a critical challenge.

An AI web crawler needs to adopt transparent data collection practices and robust privacy measures. This involves providing users with clear information about the data being collected and its purpose, and offering options for consent. Anonymous proxies can also help mitigate privacy concerns.

Algorithmic Biases

AI algorithms, including those used in web crawlers, are susceptible to biases that may inadvertently impact the fairness and neutrality of search results. Biases can emerge from various sources, including biased training data, algorithm design choices, or unintentional correlations in the data.

It is crucial for developers and organizations to actively address algorithmic biases in an AI web crawler. This involves conducting regular audits of algorithms, diversifying training datasets to avoid skewed representations, and implementing corrective measures when biases are identified. Transparency in the algorithmic decision-making process and open dialogues with the user community contribute to the ongoing efforts to minimize biases in search results.
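As one small, concrete form such an audit could take, the sketch below measures how much of the top of a result list each source occupies and flags any source above a chosen share. This is only one narrow check among many possible ones; the 50% threshold, the source labels, and the function names are assumptions for illustration.

```python
from collections import Counter


def exposure_share(ranked_sources, top_k=10):
    """Return each source's share of the top_k ranked results."""
    top = ranked_sources[:top_k]
    if not top:
        return {}
    counts = Counter(top)
    return {source: count / len(top) for source, count in counts.items()}


def flag_overexposed(shares, threshold=0.5):
    """List sources whose share of the top results exceeds the threshold."""
    return sorted(source for source, share in shares.items() if share > threshold)


# Source of each result, in ranked order, for one hypothetical query.
ranked_sources = ["siteA", "siteA", "siteB", "siteA", "siteC"]

shares = exposure_share(ranked_sources)
print(shares)                    # {'siteA': 0.6, 'siteB': 0.2, 'siteC': 0.2}
print(flag_overexposed(shares))  # ['siteA']
```

A flag here does not prove bias on its own; it marks a query where a reviewer may want to look at why one source dominates.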

Responsible AI Usage in Information Retrieval

The integration of AI into information retrieval poses ethical considerations that demand careful attention. As an AI web crawler becomes more sophisticated, issues related to misinformation, manipulation, and the responsible use of AI technology come to the forefront.

Ensuring responsible AI usage involves adopting ethical frameworks and guidelines in the development and deployment of AI web crawlers. This includes transparent communication about the capabilities and limitations of the technology, as well as establishing mechanisms for accountability in case of unintended consequences.

Conclusion

The future of web search lies in the seamless synergy between artificial intelligence and web crawling technologies. The advent of AI web crawlers has ushered in a new era of intelligence in web search, bringing a level of understanding and relevance that goes beyond simple data fetching.

As AI continues to evolve, so too will the capabilities of AI web crawlers, creating a symbiotic relationship that propels the field of information retrieval into new frontiers. Their ability to interpret context, analyze semantics, and continuously learn from user interactions results in search experiences that are not only more accurate but also tailored to the individual needs and preferences of users.

Discover the future of web search with AI web crawlers today!

Frequently Asked Questions And Answers

Are there ethical considerations associated with the use of an AI web crawler?

Yes, ethical considerations include privacy concerns, potential biases in algorithms, and the responsible use of artificial intelligence in information retrieval.

How does an AI web crawler differ from traditional ones?

An AI web crawler goes beyond data fetching, using advanced algorithms for context-aware results. NetNut enhances this by optimizing data retrieval and prioritizing relevance for a more efficient search experience.

What are the types of web crawlers?

The types of web crawlers include:

- General purpose crawler

- Focused crawler

- Incremental crawler

- Deep web crawler