Data is one of the world’s most sought-after assets. Every big business knows this, and most go out of their way to safeguard their data and information. You cannot fully protect all of it, since some data is cached and publicly accessible online, but you will often want to keep it away from competitors who use web scraping to extract it in bulk. If so, you are not alone: many businesses and websites have adopted anti scraping techniques to protect their data.

Of course, you are probably not reading this article to learn how to stop web scrapers. In case you are in the wrong place, let’s clarify something first: this article is for web scrapers who are up against the tough measures website owners put in place to thwart them. We will walk you through the measures and techniques most developers use to fight web scraping, and show you how to get around each one. Even if you are new to web scraping, this is an excellent place to start learning.

What Is Web Scraping?

For newcomers, let’s define some terms you will see frequently in this post. Web scraping is the extraction of data from websites such as e-commerce sites, social media platforms, and so on. Most web scraping is done with software. Web scrapers collate data from different web sources to build a data set, usually for research and analysis. This is not an in-depth explanation of web scraping; for that, read our full article on the topic.

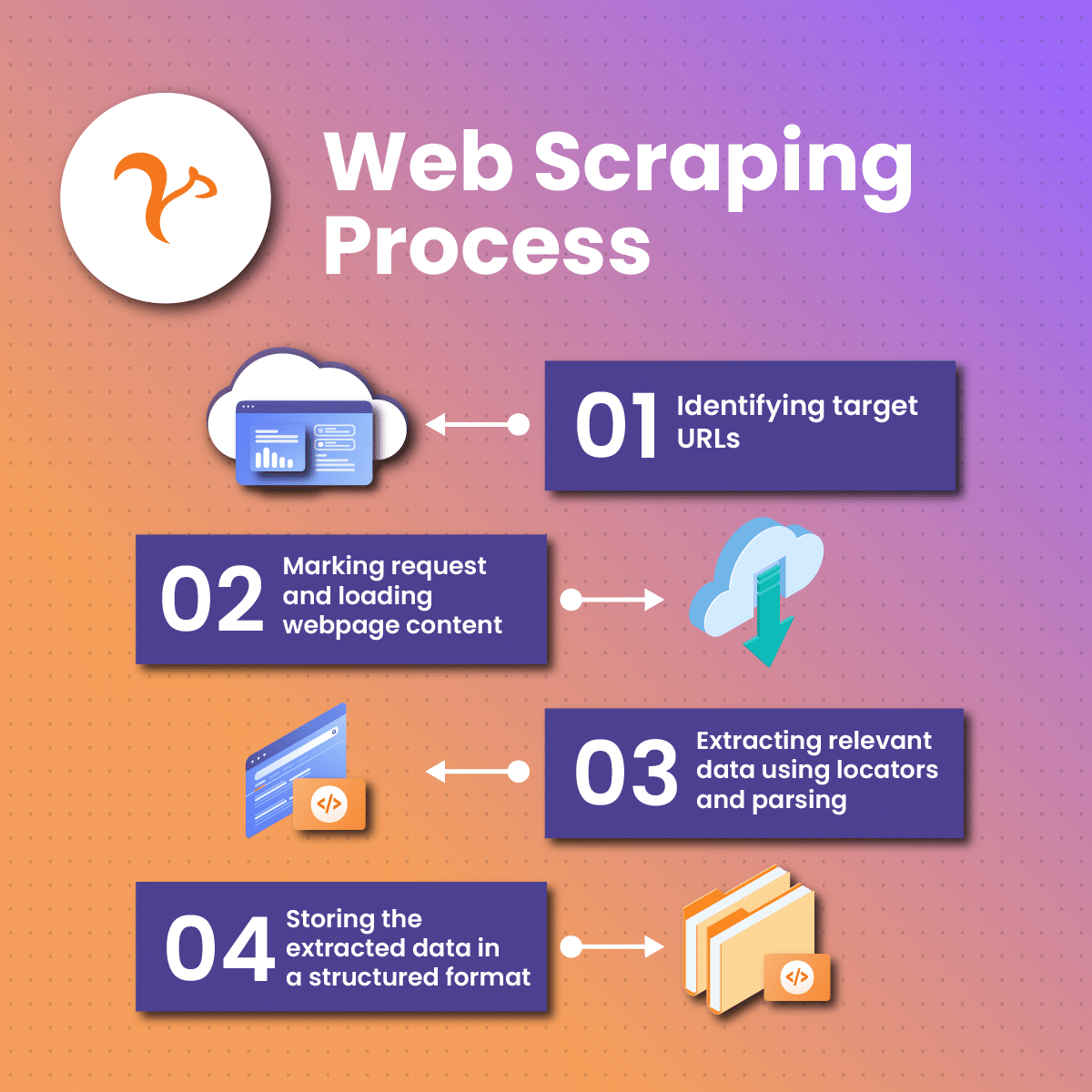

How Web Scraping Works

Again, this is only a brief look at how web scraping works, since we cover it in depth in a separate post. Here, we will go over the basics.

Web scraping can be very complex, depending on your knowledge and the tools at your disposal. Web scrapers must first understand the structure of the site they intend to scrape and what type of data they need to extract.

Scrapers are usually given a specific URL, or a list of URLs, to extract data from. From there, they can either extract all the data on each page or narrow the extraction to specific types of data.
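The following is a minimal sketch of narrowing a scrape to specific data. It uses only Python's standard-library `html.parser` to collect the text of every element that carries a given CSS class. The sample markup and the class names `name` and `price` are hypothetical. Inspect your target page in DevTools to find the real ones.

```python
from html.parser import HTMLParser

# Elements that never have a closing tag, so they must not affect nesting depth.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class ClassTextExtractor(HTMLParser):
    """Collect the text inside every element whose class list contains target_class."""

    def __init__(self, target_class: str):
        super().__init__()
        self.target_class = target_class
        self.results: list[str] = []
        self._depth = 0          # > 0 while inside a matching element
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1     # nested element inside a match
        elif self.target_class in (dict(attrs).get("class") or "").split():
            self._depth = 1      # entering a new match
            self._buffer = []

    def handle_startendtag(self, tag, attrs):
        pass                     # self-closing tags (<br/>) don't change nesting

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._depth:
            return
        self._depth -= 1
        if self._depth == 0:     # just left the matching element
            self.results.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_by_class(html: str, css_class: str) -> list[str]:
    parser = ClassTextExtractor(css_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical product markup for illustration.
page = """
<div class="product"><span class="name">Desk lamp</span><span class="price">$24.99</span></div>
<div class="product"><span class="name">Office chair</span><span class="price">$<b>129</b>.00</span></div>
"""
print(extract_by_class(page, "name"))   # ['Desk lamp', 'Office chair']
print(extract_by_class(page, "price"))  # ['$24.99', '$129.00']
```

Parsing libraries such as Beautiful Soup or lxml make this shorter. The standard library is used here only to keep the sketch dependency-free.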

Finally, the scraping software lets the user export the extracted data to an Excel spreadsheet or another file format.
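The export step can be sketched with Python's built-in `csv` module. This assumes each scraped record has already been collected into a dictionary; the field names here are hypothetical. CSV files open directly in Excel.

```python
import csv
import io


def rows_to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize a list of records (one dict per row) to CSV text with a header row."""
    if not rows:
        return ""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


records = [
    {"name": "Desk lamp", "price": "$24.99"},
    {"name": "Office chair", "price": "$129.00"},
]
csv_text = rows_to_csv(records)
print(csv_text)

# To save a file Excel can open, write the text with newline="" so the
# csv module's line endings are kept as-is:
# with open("products.csv", "w", newline="", encoding="utf-8") as f:
#     f.write(csv_text)
```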

Benefits Of Web Scraping

In case you missed it earlier, web scraping provides a resource for research and analysis. Let’s briefly go over some of its benefits.

The following are some reasons why companies use web scraping to scale their businesses.

- Social media data can be scraped to carry out sentiment analysis.

- Real estate businesses scrape property listings.

- Scraped product data can be used to build product reviews and comparison tools.

- Scraped data can be used as new lead information.

- Stock market analysis makes use of data scraped from websites.

- Web scraping helps when migrating content during a website transition.

We cover the benefits of web scraping, and how you can use it to scale your business, in more detail in a separate article.

What Is Anti Scraping?

Now that you have a solid foundation in what web scraping is, the rest of this article should be easy to follow.

If web scraping is extracting data from the internet, anti scraping would mean the opposite, right? Well, not quite.

Although web and data scraping have supplied businesses with the data they need to scale at almost no cost, there is an ongoing, quiet coding battle between anti scraping systems and scraping spiders. One reason is that when scraping is misused or done carelessly, the targeted websites can slow down and, in the worst case, crash. To prevent this, some websites and platforms have taken drastic anti scraping measures to block all forms of scraping. However, many scrapers are legitimate and use the extracted data for its intended purpose. Should they be penalized because of a few bad eggs in the web scraping industry? We think not, and that is why we wrote this article.

Anti scraping describes all the techniques and measures, including tools, software, and code, that are implemented against web and data scraping. Put simply, anti scraping makes it harder to extract data from a web page automatically.

How Anti Scraping Works

Anti scraping works by first identifying requests from scraping bots or malicious users and then blocking them. Many sites rely on anti-bot protection tools or other software that can block and restrict web scrapers. Anti-bot tools are designed to block unwanted bots. However, as we explained earlier, not all bots are bad. For instance, Google’s crawlers visit your web pages to index them; they are not malicious and cause no harm. So we must be careful not to throw the baby out with the bathwater when fighting malicious web scraping.

The Differences Between Scraping And Anti Scraping

Scraping and anti scraping mean different things. The former is the process of extracting data and information from the web, particularly web pages, using bots and scripts. The latter involves the measures put in place to protect the information and data on those pages.

The two concepts are interconnected. Anti scraping measures are shaped mainly by the methods web scrapers use to extract data, while scraping methods keep evolving to stay ahead of those measures. Scrapers must constantly find new ways to keep their spiders from being recognized and blocked.

Common Anti Scraping Techniques and How to Bypass Them

This section explores the most common techniques anti scraping technologies use against both legitimate and illegitimate web scraping, along with ways to bypass each of them.

Login Or Auth Wall

This is one of the most common anti scraping techniques. If you have ever visited a website that asks you to log in before you can continue, you already have an idea of how it works.

Say, for instance, you try to browse LinkedIn and hit a login wall. Login or authentication pages like this are used to safeguard a company’s data. Most social media platforms, such as Facebook and Twitter, use this measure to hide their data from malicious scrapers. A user can access the website or platform only after providing valid login credentials.

How To Bypass Anti Scraping Login Walls

Servers authenticate requests through their HTTP headers. Cookies, small pieces of data stored locally by the browser, hold the values that are sent back to the server for this purpose. When a user logs in, the server’s response sets these cookies. The browser then maintains the login session by sending their values with every subsequent request.

To scrape a site that protects its data behind a login wall, your crawler or bot needs access to the cookies that store the logged-in user’s session, because their values are sent as HTTP headers. After logging in, you can copy these cookie values by inspecting a request in your browser’s DevTools.
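The following minimal Python sketch uses only `urllib` from the standard library. It attaches cookie values you have copied from DevTools to a request as a `Cookie` header. The URL and the cookie names `sessionid` and `csrftoken` are placeholders, since every site names its session cookies differently. Session cookies also expire, so expect to refresh them.

```python
import urllib.request


def build_authenticated_request(url: str, cookies: dict[str, str]) -> urllib.request.Request:
    """Attach session cookies (copied from DevTools after logging in) to a request."""
    cookie_header = "; ".join(f"{name}={value}" for name, value in cookies.items())
    return urllib.request.Request(url, headers={"Cookie": cookie_header})


# Hypothetical URL, cookie names, and values; use the ones your target site sets.
req = build_authenticated_request(
    "https://example.com/account/data",
    {"sessionid": "abc123", "csrftoken": "xyz789"},
)
print(req.get_header("Cookie"))  # sessionid=abc123; csrftoken=xyz789

# Sending it is then a normal request:
# with urllib.request.urlopen(req, timeout=10) as resp:
#     html = resp.read().decode("utf-8", errors="replace")
```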

Alternatively, you can use a headless browser to simulate the login process and then navigate the site as a logged-in user. This makes the logic of your scraper considerably more complex. However, you are in luck, as the NetNut API can take care of headless browsers for you.
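When the login form is a plain HTML form that doesn't depend on JavaScript, you can sometimes simulate the login without a headless browser at all. The sketch below swaps in that lighter technique. It POSTs the form fields through a `urllib` opener backed by an `http.cookiejar.CookieJar`, which stores the session cookies from the response and replays them automatically. The login URL and field names are hypothetical. Many real login forms also require a CSRF token or run JavaScript, and in those cases a headless browser is still needed.

```python
import http.cookiejar
import urllib.parse
import urllib.request


def make_session() -> tuple[urllib.request.OpenerDirector, http.cookiejar.CookieJar]:
    """An opener that stores cookies from responses and sends them on later requests."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def build_login_request(login_url: str, form_fields: dict[str, str]) -> urllib.request.Request:
    """POST the login form; keys must match the form's <input name="..."> values."""
    body = urllib.parse.urlencode(form_fields).encode("utf-8")
    return urllib.request.Request(login_url, data=body, method="POST")


# Hypothetical URL and field names; inspect the real login form to find them.
# opener, jar = make_session()
# opener.open(build_login_request("https://example.com/login",
#                                 {"username": "me", "password": "secret"}))
# # The session cookies now live in `jar` and are sent automatically:
# html = opener.open("https://example.com/account").read().decode()
```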

Keep in mind that you need valid credentials for your target website or platform to scrape the content behind its login wall.

IP Address Reputation

IP tracking is one of the most common ways websites detect scraping. A site can observe the behavior of each IP address and judge whether it belongs to a robot. A typical signal is an unusually large number of requests from the same IP address within a short period. When that happens, the anti scraping mechanism blocks the IP address. So, when building a crawler, you need to consider both how many requests you send and how frequently you send them.
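The following is a simplified model of rate-based detection that shows why request volume matters. It uses a sliding window that flags an IP once it sends more than `max_requests` within `window_seconds`. Real anti scraping systems combine many more signals. The class name and the thresholds here are illustrative assumptions, not any real site's settings.

```python
from collections import defaultdict, deque


class SlidingWindowDetector:
    """Flag an IP once it sends more than max_requests within window_seconds."""

    def __init__(self, max_requests: int = 10, window_seconds: float = 1.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._recent = defaultdict(deque)  # ip -> timestamps inside the window

    def is_flagged(self, ip: str, timestamp: float) -> bool:
        window = self._recent[ip]
        window.append(timestamp)
        # Forget requests that have fallen out of the window.
        while timestamp - window[0] > self.window_seconds:
            window.popleft()
        return len(window) > self.max_requests


detector = SlidingWindowDetector(max_requests=5, window_seconds=1.0)
# Ten requests 0.1 s apart from one IP: flagged from the sixth request on.
burst = [detector.is_flagged("203.0.113.7", 0.1 * i) for i in range(10)]
# Ten requests 2 s apart from another IP: never flagged.
paced = [detector.is_flagged("198.51.100.2", 2.0 * i) for i in range(10)]
print(burst)  # [False, False, False, False, False, True, True, True, True, True]
print(paced)  # [False, False, False, False, False, False, False, False, False, False]
```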

How To Bypass IP Address Anti Scraping Measures

Case 1: Multiple Visits in A Very Short Time

A real human cannot visit a website’s pages in rapid succession within a few seconds. So, if your scraping tool sends many requests in a short period, the anti scraping system will flag your IP as a robot and block it.

Solution

Slow down your scraping tool. Adding a delay, such as a “sleep” call, between consecutive requests increases the waiting period between them and usually does the trick.
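A minimal sketch of a fixed delay between requests in Python follows. The `fetch` argument is a stand-in for whatever function your scraper uses to download a page, which keeps the sketch independent of any HTTP library. The 2-second default is an illustrative assumption.

```python
import time
from typing import Callable, Iterable


def fetch_all_with_delay(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    delay_seconds: float = 2.0,
) -> list[str]:
    """Fetch URLs one at a time, sleeping delay_seconds between consecutive requests."""
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            time.sleep(delay_seconds)  # pause before every request except the first
        pages.append(fetch(url))
    return pages


# `fetch` can be any function that returns a page's HTML, for example:
# def fetch(url):
#     with urllib.request.urlopen(url, timeout=10) as resp:
#         return resp.read().decode("utf-8", errors="replace")
```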

Case 2: Opening a Website at The Same Frequency

Anti scraping tools can tell when visitors enter a site in a fixed, repetitive pattern. They do this by monitoring request frequencies. If requests always arrive at fixed intervals and in the same pattern, such as exactly once or twice per second, the anti scraping measure is activated.

Solution

Randomize your request frequency. Use a random wait period for each step of your crawler; an irregular scraping speed and frequency makes the activity look more like that of a real visitor.
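The following is a minimal sketch of randomized waits. It uses Python's `random.uniform` to pick each delay from a range around a base value. The base of 2 seconds and jitter of 1.5 seconds are illustrative assumptions; tune them to the site you are scraping.

```python
import random
import time
from typing import Optional


def jittered_delay(base_seconds: float = 2.0,
                   jitter_seconds: float = 1.5,
                   rng: Optional[random.Random] = None) -> float:
    """Pick a wait time uniformly from [base - jitter, base + jitter], floored at zero."""
    rng = rng or random.Random()
    low = max(0.0, base_seconds - jitter_seconds)
    return rng.uniform(low, base_seconds + jitter_seconds)


def random_pause(base_seconds: float = 2.0, jitter_seconds: float = 1.5) -> float:
    """Sleep for a randomized interval and return how long we slept."""
    delay = jittered_delay(base_seconds, jitter_seconds)
    time.sleep(delay)
    return delay


# Successive waits vary instead of repeating a fixed sleep(2):
print([round(jittered_delay(), 2) for _ in range(5)])
```

Call `random_pause()` between steps of your crawler in place of a fixed `time.sleep`.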

Case 3: Advanced Anti Scraping Tools Track Requests from Many IPs and Compare Their Behavior

This means the anti scraping tool can observe the behavior of different IP addresses across the website and compare them with each other. If it finds an IP engaging in unusual activity, such as sending the same volume of requests or visiting the same pages at the same time every day, it will flag and block it.

Solution

Rotate your IP address periodically. NetNut can provide you with rotating IPs as well as mobile proxy services. When your requests go out through rotating IPs, the web scraper looks less like a robot and is less likely to be blocked.

NetNut also has a tutorial on how to configure and use its different kinds of proxies. By rotating your web scraper’s IP, you can prevent your IP from being blocked.
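The following is a minimal round-robin proxy rotation sketch built on `urllib.request.ProxyHandler`. The proxy URLs and credentials are placeholders, not real endpoints; substitute the details from your own provider. Many providers can also rotate IPs for you behind a single gateway endpoint. In that case you would point `ProxyHandler` at that one endpoint instead of cycling through a list.

```python
import itertools
import urllib.request

# Placeholder proxy endpoints; replace host, port, and credentials with your own.
PROXIES = [
    "http://user:pass@proxy1.example.net:8080",
    "http://user:pass@proxy2.example.net:8080",
    "http://user:pass@proxy3.example.net:8080",
]

_proxy_cycle = itertools.cycle(PROXIES)


def next_proxy_opener() -> tuple[str, urllib.request.OpenerDirector]:
    """Return the next proxy in round-robin order and an opener routed through it."""
    proxy = next(_proxy_cycle)
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    return proxy, urllib.request.build_opener(handler)


# Each call sends the next request through a different proxy:
# proxy, opener = next_proxy_opener()
# with opener.open("https://example.com/products", timeout=10) as resp:
#     html = resp.read().decode("utf-8", errors="replace")
```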

User-Agent And HTTP Headers

User-Agent (UA) is a request header that identifies the visitor’s client to the website. It carries information such as the browser type and version, the operating system, and the CPU type. Related headers, such as Accept-Language, carry details like the browser language.

This is what a UA string looks like: Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36

If your scraper does not send a User-Agent header that looks like a browser’s, it identifies itself as a script. Websites with anti scraping measures are likely to block such script requests. So the scraper has to present itself as a browser by sending a browser UA header, so that anti scraping tools grant it access.
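The following Python sketch shows the difference. By default, `urllib` announces itself with a `Python-urllib/<version>` User-Agent, which is easy to spot as a script. Setting the header explicitly makes the request present a browser UA instead. The Chrome-on-Windows string below is just an example.

```python
import urllib.request

# An example Chrome-on-Windows User-Agent string.
BROWSER_UA = (
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/76.0.3809.100 Safari/537.36"
)

# Without an explicit header, urllib identifies itself as a script.
default_ua = dict(urllib.request.build_opener().addheaders)["User-agent"]
print(default_ua)  # e.g. Python-urllib/3.12

# With the header set, the request presents a browser User-Agent instead.
req = urllib.request.Request("https://example.com/", headers={"User-Agent": BROWSER_UA})
print(req.get_header("User-agent"))
```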

Often, even for the same URL, a website shows different information or pages to different browsers and browser versions. This means a request allowed from one browser may be flagged from another. You may therefore need to try several browser versions to reach the right web page.

Bypassing User-Agent Anti Scraping Technique

Keep changing the User-Agent until you find one that works. Websites that use advanced anti scraping tools may even block access if they see the same UA over a long period. This is why you should periodically change your scraper’s User-Agent.
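The following is a minimal sketch of User-Agent rotation. Each request picks a UA at random from a small pool. The pool entries are example desktop strings that go out of date as browsers update, and stale versions may themselves look suspicious, so refresh the list periodically. Sending an `Accept-Language` header alongside the UA is an assumption on our part, meant to make the request resemble a browser's more closely.

```python
import random
import urllib.request
from typing import Optional

# Example desktop User-Agent strings; refresh this pool as browsers update.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.1 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def request_with_random_ua(url: str, rng: Optional[random.Random] = None) -> urllib.request.Request:
    """Build a request whose User-Agent is picked at random from USER_AGENTS."""
    choose = rng.choice if rng else random.choice
    headers = {
        "User-Agent": choose(USER_AGENTS),
        "Accept-Language": "en-US,en;q=0.9",  # browsers send this header too
    }
    return urllib.request.Request(url, headers=headers)


for _ in range(3):
    req = request_with_random_ua("https://example.com/products")
    print(req.get_header("User-agent"))
```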

Honeypots

Honeypots are traps that anti scraping systems set to catch bots and crawlers. To an automated crawler they look like ordinary page content, but they are deliberately placed to detect scrapers. In a honeypot setup, websites add hidden forms or links to their web pages. These forms or links are invisible to human users but can still be reached by scrapers. When unsuspecting scrapers fill in or click these traps, they are led to dummy pages with no valuable information. At the same time, the anti scraping tool is triggered to flag or block them.

Bypassing Honeypots Anti Scraping Technique

A web scraper can be coded to detect honeypots. It can analyze the elements of each web page, link, or form, inspect properties that hide them from human visitors, and search for suspicious patterns in links or forms. Using a proxy, such as NetNut’s static residential proxies, can also help you get around honeypots, although how effective this is depends on the sophistication of the honeypot system.
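The following is a minimal sketch of the "inspect hidden properties" idea, using Python's standard-library `html.parser`. It sorts links into visible ones and suspected traps. A link counts as a suspected trap when it, or any element it sits inside, is hidden by inline markup: a `hidden` attribute, `aria-hidden="true"`, or an inline `display:none` / `visibility:hidden` style. It does not evaluate external stylesheets, JavaScript, or off-screen positioning, so it will miss traps hidden those ways. The sample page is hypothetical.

```python
from html.parser import HTMLParser

VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}
HIDDEN_STYLES = ("display:none", "visibility:hidden")


def looks_hidden(attrs: dict) -> bool:
    """True if the element is hidden by its own inline markup."""
    style = (attrs.get("style") or "").replace(" ", "").lower()
    return ("hidden" in attrs
            or attrs.get("aria-hidden") == "true"
            or any(marker in style for marker in HIDDEN_STYLES))


class HoneypotAwareLinkParser(HTMLParser):
    """Split <a href> links into visible ones and suspected honeypot traps."""

    def __init__(self):
        super().__init__()
        self.visible_links: list[str] = []
        self.suspected_traps: list[str] = []
        self._stack: list[tuple[str, bool]] = []  # (tag, hidden) of open elements

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        parent_hidden = self._stack[-1][1] if self._stack else False
        hidden = parent_hidden or looks_hidden(attr_map)
        if tag == "a" and attr_map.get("href"):
            target = self.suspected_traps if hidden else self.visible_links
            target.append(attr_map["href"])
        if tag not in VOID_TAGS:
            self._stack.append((tag, hidden))

    def handle_endtag(self, tag):
        # Pop back to the matching open tag; tolerates unclosed tags.
        for i in range(len(self._stack) - 1, -1, -1):
            if self._stack[i][0] == tag:
                del self._stack[i:]
                break


page = """
<ul>
  <li><a href="/products?page=2">Next page</a></li>
  <li><a href="/trap-1" style="display: none">Special offer</a></li>
  <li><div hidden><a href="/trap-2">Hidden link</a></div></li>
  <li><a href="/about">About</a></li>
</ul>
"""
parser = HoneypotAwareLinkParser()
parser.feed(page)
parser.close()
print(parser.visible_links)    # ['/products?page=2', '/about']
print(parser.suspected_traps)  # ['/trap-1', '/trap-2']
```

The same visibility checks can be applied to form fields before your scraper fills them in.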

The downside is that checking every link and form on a web page for honeypots can be tedious. Also, a website can simply change its code, making whatever the scraper previously learned about its traps obsolete. If web scraping is critical to your business, relying on this method alone is not recommended.

JavaScript Challenges

Anti scraping mechanisms use JavaScript challenges to prevent crawlers from accessing their information.

A protected page may present one or more JS challenges to every visitor. Any JavaScript-enabled browser executes these challenges automatically.

A challenge typically adds a wait of a few seconds, during which the anti scraping system runs its check. Once the check passes, the page loads automatically, often without the user even noticing.

This means any crawler that cannot execute JavaScript will fail the challenge. And because scrapers usually send plain server-to-server requests without a browser, the anti scraping system can detect them.

Bypassing JavaScript Challenge Anti Scraping Technique

To overcome a JS challenge, your scraper needs a browser that executes JavaScript, which in practice usually means a headless browser. Pairing a headless browser with a proxy, such as an ISP proxy, can give you strong protection against JS challenges.
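Headless browsers are much heavier than plain HTTP requests. One common pattern is to try a plain request first and fall back to a browser only when the response looks like a challenge page. The Python sketch below shows that decision logic. The marker strings and status codes are heuristic guesses, so tune them against what your target site actually returns. `fetch_plain` and `fetch_with_browser` are hypothetical callables you would supply; the latter might drive a headless browser such as Playwright or Selenium.

```python
from typing import Callable, Tuple

# Heuristic markers; real challenge pages differ by vendor.
CHALLENGE_MARKERS = ("enable javascript", "checking your browser", "<noscript")
CHALLENGE_STATUSES = {403, 429, 503}


def looks_like_js_challenge(status: int, body: str) -> bool:
    """Guess whether a plain HTTP response is a JS challenge rather than real content."""
    text = body.lower()
    has_marker = any(marker in text for marker in CHALLENGE_MARKERS)
    # Challenge pages tend to be short or arrive with an error-like status.
    return has_marker and (status in CHALLENGE_STATUSES or len(text) < 5000)


def fetch_html(url: str,
               fetch_plain: Callable[[str], Tuple[int, str]],
               fetch_with_browser: Callable[[str], str]) -> str:
    """Try a cheap plain request first; fall back to a headless browser on a challenge."""
    status, body = fetch_plain(url)
    if looks_like_js_challenge(status, body):
        return fetch_with_browser(url)
    return body


# Stub fetchers for illustration only.
challenge_page = "<html><body><noscript>Please enable JavaScript to continue.</noscript></body></html>"
html = fetch_html("https://example.com/",
                  fetch_plain=lambda url: (503, challenge_page),
                  fetch_with_browser=lambda url: "<html>rendered by browser</html>")
print(html)  # <html>rendered by browser</html>
```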

CAPTCHAs

Have you ever been asked to tick a checkbox confirming that you are not a robot before a website grants you access? That’s an example of a CAPTCHA. Other CAPTCHAs show a grid of images and ask you to select the ones that match a prompt at the top.

CAPTCHA stands for Completely Automated Public Turing test to tell Computers and Humans Apart. It is an automated program that determines whether a visitor is a human or a robot. It does this by presenting challenges, like those described above, that are designed to be easy for humans but hard for machines.

Many websites use CAPTCHAs as an anti scraping technique. CAPTCHAs used to be very difficult to solve automatically, but today several open-source tools can solve some types quickly. However, they may require advanced coding skills.

Bypassing CAPTCHAs Anti Scraping Technique

When scraping, avoiding a CAPTCHA is often easier than solving one.

For most users, the best approach is to slow down or randomize the extraction process so that no CAPTCHA test is triggered. You can do this by adjusting the delay between requests. Using proxies can also reduce the chance of triggering a CAPTCHA.
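One way to adjust the delay automatically is an adaptive delay. It doubles the wait whenever the site starts pushing back and shrinks it gradually while responses stay clean. In this sketch, "pushback" means an HTTP 429 (Too Many Requests) status or a page containing the word "captcha". That text check is a crude, hypothetical heuristic, and all numeric defaults are illustrative assumptions.

```python
class AdaptiveDelay:
    """Back off quickly when the site pushes back; speed up slowly when it doesn't."""

    def __init__(self, initial=2.0, minimum=1.0, maximum=60.0, factor=2.0, relax=0.9):
        self.current = initial
        self.minimum = minimum
        self.maximum = maximum
        self.factor = factor  # multiply the delay by this on pushback
        self.relax = relax    # multiply by this (< 1) after a clean response

    def record(self, status: int, body: str) -> float:
        """Update and return the delay to wait before the next request."""
        pushback = status == 429 or "captcha" in body.lower()
        if pushback:
            self.current = min(self.maximum, self.current * self.factor)
        else:
            self.current = max(self.minimum, self.current * self.relax)
        return self.current


delay = AdaptiveDelay(initial=2.0, minimum=1.0, maximum=10.0, relax=0.5)
print([
    delay.record(429, ""),                            # rate-limited
    delay.record(200, "<div class='g-recaptcha'>"),  # CAPTCHA page
    delay.record(200, "<html>ok</html>"),             # clean
    delay.record(200, "<html>ok</html>"),             # clean
])  # [4.0, 8.0, 4.0, 2.0]

# In a crawl loop: time.sleep(delay.record(status, body)) after each response.
```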

Anti Scraping Frequently Asked Questions

Why Do Businesses Use Anti Scraping Measures?

Businesses use their websites to store the information and data that power their operations. To keep the competition at bay, they use various anti scraping techniques to protect their data and stay ahead. If you object to this and feel everyone in the marketplace should compete freely, we hear you! That is where the bypass techniques described above can help you beat the competition.

What Is Web Scraping?

Web scraping is the process of extracting data from a website or social media platform using dedicated software, often called bots, scrapers, or crawlers. Once extracted, the data is usually exported to a more useful format, such as JSON or an Excel spreadsheet.

How Do I Bypass Anti Scraping Techniques?

Depending on the anti scraping technique your target website uses, you can apply countermeasures such as random user agents, rotating proxies, and delayed requests.

Final Thoughts On Anti Scraping

Web scraping has become increasingly challenging. Websites are constantly looking for better ways to detect and ban crawlers and bots: altering their HTML, spotting request patterns, replacing static content with dynamic content, setting rate limits, using CAPTCHAs, and the list goes on. You can’t afford to let your guard down; you must match their energy or get trampled. Delaying requests, rotating user agents, and the other techniques covered above help you evade the prying eyes of anti scraping tools.

As mentioned throughout this article, NetNut is a dedicated proxy server provider that has been working behind the scenes to make web and data scraping as hassle-free as possible. We have a wide range of proxies, APIs, and integrations that can help you get past network and website firewalls. Get in touch with us today and say goodbye to the anti scraping measures set up by companies averse to healthy competition in the marketplace.