Introduction

Over the years, technology has evolved rapidly, and much of that progress is driven by machine learning. At the heart of machine learning lies a critical element: machine learning data. Machine learning data refers to the raw information, observations, or inputs that fuel the training and operation of machine learning algorithms.

Machine learning data is the foundation on which intelligent systems learn to make predictions or decisions. It comes in a variety of forms, including structured and unstructured data as well as labeled and unlabeled data, and each serves a distinct purpose in the machine learning ecosystem.

It is therefore worth understanding what machine learning data is, how it is processed, how to obtain it, the main types of data, and strategies for optimizing its collection.

Do you want to explore the concept of machine learning data? Let us dive in!

About Machine Learning Data

The Foundation of Machine Learning

The availability and quality of data are the foundation of machine learning. Data is the lifeblood that allows machine learning algorithms to learn patterns, make predictions, and continuously improve their performance. The value of data in machine learning cannot be overemphasized; it is the engine that drives the entire learning process. Its role covers the following areas:

- Training Models:

Machine learning models are trained using historical data to recognize patterns and relationships. The more diverse and representative the data, the better equipped the model is to generalize and make accurate predictions on new, unseen data.

- Decision-Making:

In supervised learning, where models are trained on labeled data, the machine learns to associate inputs with specific outputs. This learned knowledge is then applied to make decisions or predictions when presented with new, similar inputs.

- Continuous Learning:

Machine learning models are built to learn and improve over time. The model can be retrained when new data becomes available to remain relevant and effective in changing settings.

- Quality Assurance:

The quality of the data directly influences the performance of machine learning models. Inaccurate or biased data can lead to flawed predictions and compromised model reliability. Data cleaning and preprocessing play a crucial role in ensuring the quality of input data.
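
To make the quality-assurance point concrete, here is a minimal Python sketch of basic data cleaning. It assumes records arrive as hypothetical (age, income, label) tuples and applies three common rules: drop rows with missing values, drop values outside an assumed plausible range, and drop duplicates after normalizing the label text. Real pipelines often use a library such as pandas, and the right rules depend on your data.

```python
def clean(records, max_age=120.0):
    """Drop incomplete rows, implausible values, and duplicates (order preserved).

    Each record is a hypothetical (age, income, label) tuple; None marks a gap.
    """
    seen = set()
    cleaned = []
    for age, income, label in records:
        # Rule 1: skip rows with any missing field.
        if age is None or income is None or label is None:
            continue
        # Rule 2: skip values outside an assumed plausible range.
        if (not 0 <= age <= max_age) or income < 0:
            continue
        # Normalize so that "Yes" and " yes" count as the same label.
        row = (float(age), float(income), label.strip().lower())
        # Rule 3: skip exact duplicates after normalization.
        if row in seen:
            continue
        seen.add(row)
        cleaned.append(row)
    return cleaned


raw = [
    (34, 52000, "yes"),
    (34, 52000, " Yes"),   # duplicate once normalized
    (None, 41000, "no"),   # missing age
    (250, 38000, "no"),    # implausible age
    (29, 61000, "no"),
]
print(clean(raw))  # [(34.0, 52000.0, 'yes'), (29.0, 61000.0, 'no')]
```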

Sources of Machine Learning Data

Machine learning data can be sourced from many channels, reflecting the diversity of today's digital landscape. Understanding and tapping into these sources is essential for building comprehensive datasets that capture the complexity of real-world scenarios. The main sources are highlighted below:

- Structured Data Sources: Traditional databases, spreadsheets, and organized repositories are rich sources of structured data. These sources provide a well-defined format, making machine learning data processing and analysis easier.

- Unstructured Data Sources: Unstructured machine learning data, including text, images, audio, and video, can be harvested from sources such as social media, customer feedback, and multimedia platforms. Unstructured data, while harder to interpret, can provide significant insights and a more detailed understanding of user behavior.

- Real-time Data Streams: In some environments, real-time data streams from sensors, IoT devices, and online platforms contribute to up-to-the-minute insights. Integrating real-time data enables models to quickly adjust to changing conditions.

- External Datasets: Leveraging external datasets from open-source repositories or collaborative partnerships can enhance the diversity and richness of the machine learning data. This approach is particularly beneficial when dealing with niche or specialized domains.
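
Structured sources are the easiest to feed into a model because the columns are already defined. As a rough sketch, the Python below reads a small CSV export (a hypothetical housing table standing in for a spreadsheet or database dump) with the standard csv module and splits it into a feature matrix X and a target vector y. The column names and values are illustrative only.

```python
import csv
import io

# Hypothetical stand-in for a spreadsheet or database export.
CSV_TEXT = """sqft,bedrooms,price
850,2,155000
1200,3,210000
1600,4,289000
"""


def load_xy(text, target):
    """Split a CSV table into a feature matrix X and a target vector y."""
    reader = csv.DictReader(io.StringIO(text))
    X, y = [], []
    for row in reader:
        y.append(float(row.pop(target)))            # remove the target column
        X.append([float(v) for v in row.values()])  # remaining columns are features
    return X, y


X, y = load_xy(CSV_TEXT, target="price")
print(X[0], y[0])  # [850.0, 2.0] 155000.0
```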

In summary, machine learning data rests on two pillars: the importance of the data itself and the diverse sources from which it is drawn.

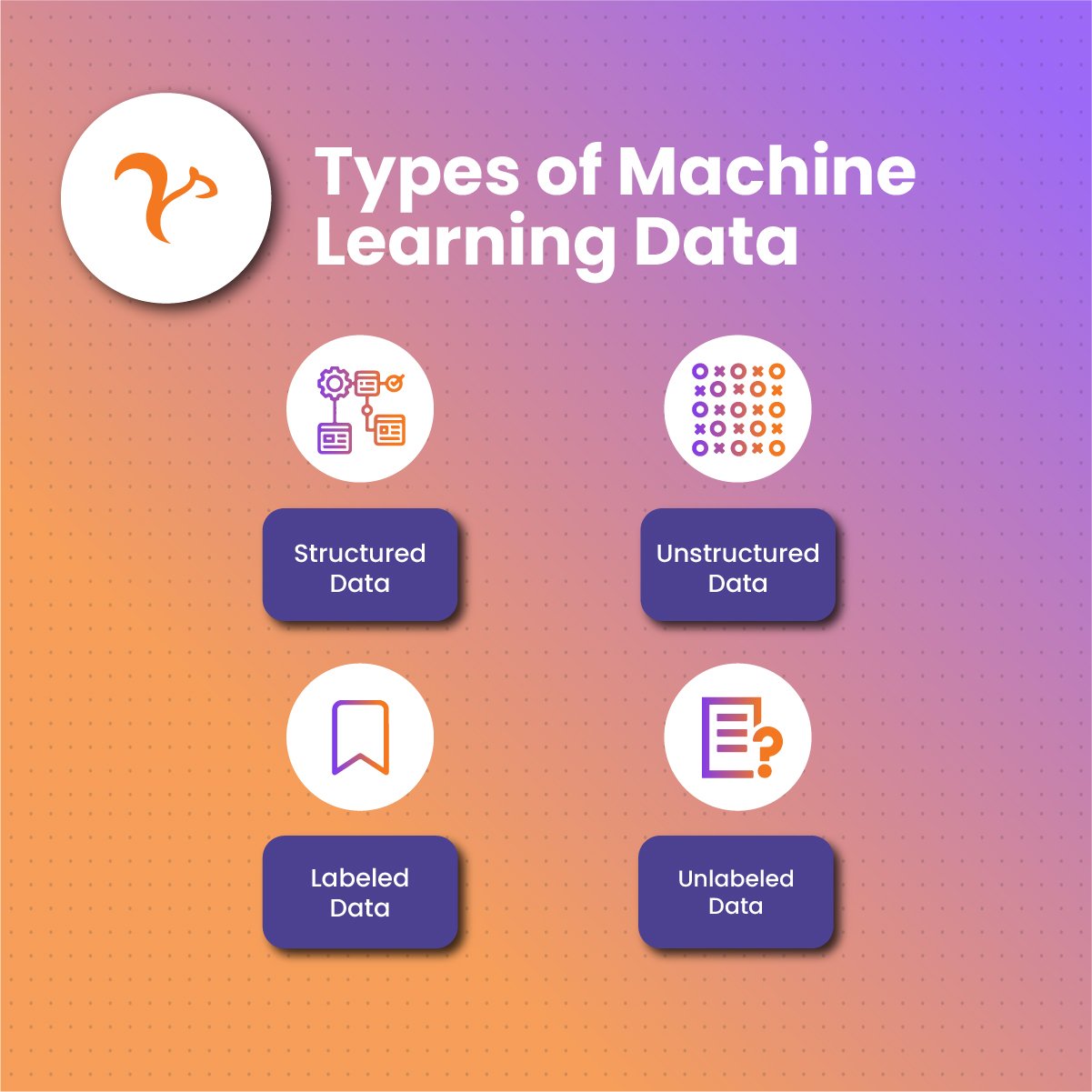

Types of Machine Learning Data

Machine learning data can be broadly classified into several forms based on its structure, content, and role in the learning process. The main forms are:

Structured Data

Structured machine learning data is organized, typically residing in tabular formats with rows and columns. This form of machine learning data is highly regimented, and each data element has a clear and defined relationship with others.

Structured data is frequently used for tasks that require straightforward processing, such as business analytics, reporting, and decision-making. It is the basis of relational databases, which allow efficient data retrieval and management.

Unstructured Data

Unstructured machine learning data does not fit a tabular format and lacks a predefined data model. It is more complex and includes text, images, audio, and video.

Because of its intrinsic complexity, unstructured data is harder to process and analyze. Techniques such as natural language processing (NLP), image recognition, and audio analysis are critical for extracting useful insights from it.
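
As an illustration of how NLP turns unstructured text into something a model can consume, the following sketch builds simple bag-of-words count vectors. The tokenizer (lowercase, letters only) and the example sentences are assumptions; production systems usually rely on richer tokenization and representations such as TF-IDF or learned embeddings.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and keep alphabetic word tokens only."""
    return re.findall(r"[a-z]+", text.lower())


def bag_of_words(docs):
    """Turn free-form documents into fixed-length word-count vectors."""
    counts = [Counter(tokenize(d)) for d in docs]
    vocab = sorted(set().union(*counts))  # shared, deterministic column order
    vectors = [[c[word] for word in vocab] for c in counts]
    return vocab, vectors


docs = ["Great product, great price!", "Terrible support."]
vocab, vectors = bag_of_words(docs)
print(vocab)    # ['great', 'price', 'product', 'support', 'terrible']
print(vectors)  # [[2, 1, 1, 0, 0], [0, 0, 0, 1, 1]]
```

Each document becomes a row of the same length, which is the tabular shape most learning algorithms expect.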

Labeled Data

Labeled machine learning data is data that has been annotated or categorized with specific labels or tags. Each data point in labeled datasets is associated with a known outcome or class. In supervised learning, the model is trained on labeled data to make predictions based on input features.

Applications of labeled data include sentiment analysis, image classification, and spam detection, among others.
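
A toy version of the spam-detection example shows how labels drive supervised learning. The sketch below trains a multinomial naive Bayes classifier, one common choice among many, on four hypothetical labeled messages and then predicts labels for new text. The tiny dataset and the add-one (Laplace) smoothing are illustrative assumptions, not a recommended setup.

```python
import math
import re
from collections import Counter


def tokenize(text):
    return re.findall(r"[a-z]+", text.lower())


def train_nb(examples):
    """Fit a multinomial naive Bayes model on (text, label) pairs."""
    word_counts = {}          # label -> Counter of word frequencies
    label_counts = Counter()  # label -> number of training examples
    vocab = set()
    for text, label in examples:
        tokens = tokenize(text)
        label_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(tokens)
        vocab.update(tokens)
    return word_counts, label_counts, vocab


def predict_nb(model, text):
    """Return the label with the highest log-posterior (add-one smoothing)."""
    word_counts, label_counts, vocab = model
    total = sum(label_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in label_counts.items():
        score = math.log(n / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for tok in tokenize(text):
            score += math.log((word_counts[label][tok] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


training = [
    ("win a free prize now", "spam"),
    ("claim your free reward", "spam"),
    ("meeting moved to monday", "ham"),
    ("please review the report", "ham"),
]
model = train_nb(training)
print(predict_nb(model, "free prize inside"))       # spam
print(predict_nb(model, "report for the meeting"))  # ham
```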

Unlabeled Data

Unlabeled machine learning data is data that lacks predetermined classifications or tags. Unsupervised learning uses this type of machine learning data, in which the model investigates the underlying structure or patterns in the data without explicit instruction.

Unlabeled data is useful for tasks that involve discovering patterns, correlations, or clusters within the data. Where labeled machine learning data is scarce or expensive to obtain, unsupervised learning on unlabeled data becomes a viable approach to model training.
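
Clustering is the classic example of finding structure in unlabeled data. Here is a minimal k-means (Lloyd's algorithm) sketch in Python: it alternates between assigning each point to its nearest centroid and moving each centroid to the mean of its points. Seeding with the first k points keeps the example deterministic; practical implementations usually use randomized or k-means++ initialization.

```python
import math


def assign(points, centroids):
    """Index of the nearest centroid for every point."""
    return [min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points]


def kmeans(points, k, max_iter=100):
    """Lloyd's algorithm; the first k points seed the centroids (deterministic)."""
    centroids = [tuple(p) for p in points[:k]]
    for _ in range(max_iter):
        labels = assign(points, centroids)
        new_centroids = []
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                # Move the centroid to the mean of its assigned points.
                new_centroids.append(tuple(sum(axis) / len(members) for axis in zip(*members)))
            else:
                new_centroids.append(centroids[i])  # keep an empty cluster's centroid
        if new_centroids == centroids:
            break  # centroids stopped moving, so assignments are stable
        centroids = new_centroids
    return centroids, assign(points, centroids)


# No labels are given; the algorithm discovers the two groups on its own.
points = [(1.0, 1.0), (8.0, 8.0), (1.5, 2.0), (8.5, 9.0), (1.0, 0.5), (9.0, 8.5)]
centroids, labels = kmeans(points, k=2)
print(labels)  # [0, 1, 0, 1, 0, 1]
```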

Each type of machine learning data presents its own set of difficulties and opportunities, and treating these various data types appropriately is vital for constructing successful and accurate machine learning models.

Strategies for Maximizing Machine Learning Data

Maximizing machine learning data involves employing various strategies to enhance the quality, quantity, and efficiency of data usage. Here are key strategies for optimizing machine learning data:

Data Augmentation

Data augmentation involves creating new training samples by applying various transformations to existing data. For image data, this could include rotations, flips, and zooms, while for text data, it might involve paraphrasing or introducing slight variations.

Benefits

- Increased Diversity: By expanding the dataset through augmentation, models are exposed to a more extensive range of scenarios, enhancing their ability to handle diverse inputs.

- Improved Generalization: Augmentation helps models generalize better to unseen data by reducing overfitting, especially in situations where the original dataset is limited.
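
For image data, the flips and rotations described above can be sketched in a few lines. This example assumes an image is represented as a list of pixel rows (a simplification of real image arrays) and produces six variants of each training sample, all sharing the original label. Whether a transformation preserves the label depends on the task; rotating a handwritten 6 by 180 degrees, for instance, makes it look like a 9.

```python
def hflip(img):
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def vflip(img):
    """Mirror the image top to bottom (rows are copied, not shared)."""
    return [row[:] for row in img[::-1]]


def rotate90(img):
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def augment(img, label):
    """Return the original plus five transformed copies, all with the same label."""
    variants = [img, hflip(img), vflip(img)]
    rotated = img
    for _ in range(3):  # 90, 180 and 270 degrees
        rotated = rotate90(rotated)
        variants.append(rotated)
    return [(v, label) for v in variants]


image = [[1, 2],
         [3, 4]]
for pixels, label in augment(image, "cat"):
    print(pixels, label)
```

One labeled image becomes six, which is how augmentation grows a small dataset without new collection.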

Transfer Learning

Transfer learning takes knowledge that a model learned on one task and applies it to a new, related task. This is frequently accomplished by taking a pre-trained model (for example, one trained on a large image dataset) and fine-tuning it on a smaller, task-specific dataset.

Benefits

- Knowledge Transfer: Pre-trained models capture generic features and patterns from large datasets, saving time and resources compared to training a model from scratch.

- Efficient Training: Fine-tuning allows the model to adapt quickly to the specifics of a new task, requiring less labeled data for effective training.
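
The sketch below illustrates the idea with the feature-extraction form of transfer learning: the "pre-trained" layer is frozen and only a new output layer (here a logistic-regression head) is trained on a small task-specific dataset. The pre-trained weights are hard-coded placeholders standing in for a model trained on a large dataset; with a real network you would load saved weights and, for full fine-tuning, also update some of the earlier layers.

```python
import math

# Hypothetical "pre-trained" layer. In practice these weights would be loaded
# from a model trained on a large dataset; here they are hard-coded placeholders.
PRETRAINED_W = [[1.0, -1.0], [-1.0, 1.0]]


def extract_features(x):
    """Frozen feature extractor: ReLU(W @ x). These weights are never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in PRETRAINED_W]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_head(data, lr=0.5, epochs=200):
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [(extract_features(x), y) for x, y in data]
    w, b = [0.0] * len(feats[0][0]), 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for f, y in feats:
            err = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) - y
            grad_w = [g + err * fi for g, fi in zip(grad_w, f)]
            grad_b += err
        # Batch gradient descent step on the head parameters only.
        w = [wi - lr * g / len(feats) for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / len(feats)
    return w, b


def predict(head, x):
    w, b = head
    f = extract_features(x)
    return int(sum(wi * fi for wi, fi in zip(w, f)) + b > 0)


# Small, hypothetical task-specific dataset: label 1 when x[0] > x[1].
small_data = [((2, 0), 1), ((3, 1), 1), ((0, 2), 0), ((1, 3), 0)]
head = train_head(small_data)
print(predict(head, (5, 2)), predict(head, (2, 4)))  # 1 0
```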

Active Learning

Active learning is a method in which the model iteratively asks an annotator (often a human expert) to label the data points it finds most informative or uncertain. This process optimizes the labeling effort by prioritizing the instances expected to contribute the most to improving model performance.

Benefits

- Efficient Labeling: By actively selecting the most informative samples, active learning reduces the amount of labeled data needed for model training.

- Resource Utilization: Labeling resources are allocated more efficiently because effort is concentrated on the instances that have the most impact on improving the model's performance.
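
Uncertainty sampling is one common way to choose the "most informative" points. In this minimal sketch, a hypothetical current model returns a probability for each unlabeled item, and the items whose probability is closest to 0.5 are sent for labeling. The model, the annotator function, and the budget are all stand-ins.

```python
import math


def predict_proba(x):
    """Stand-in for the current model's P(label = 1 | x); hypothetical fixed weights."""
    return 1.0 / (1.0 + math.exp(-2.0 * (x - 5.0)))


def uncertainty_sample(pool, proba, budget):
    """Return the `budget` pool items the model is least sure about (p closest to 0.5)."""
    return sorted(pool, key=lambda x: abs(proba(x) - 0.5))[:budget]


def ask_annotator(x):
    """Hypothetical oracle; in practice a human labels each queried item."""
    return int(x > 5.0)


pool = [0.0, 2.0, 4.8, 5.3, 7.0, 10.0]
queries = uncertainty_sample(pool, predict_proba, budget=2)
labeled = [(x, ask_annotator(x)) for x in queries]
pool = [x for x in pool if x not in queries]  # queried items leave the pool
print(labeled)  # [(4.8, 0), (5.3, 1)]
```

In a full loop, the newly labeled items are added to the training set, the model is retrained, and the query step repeats until the labeling budget runs out.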

Optimizing With NetNut

NetNut is a premium proxy service that stands out for its reliability, speed, and comprehensive global coverage. With a vast network of residential IPs, NetNut provides a scalable and efficient solution for data collection, making it an invaluable asset for machine learning practitioners.

Integrating NetNut into your machine learning data pipeline offers several benefits:

- Enhanced Data Diversity: NetNut’s global coverage and residential IPs allow machine learning practitioners to access data from different geographic locations and demographics. This diversity enhances the representativeness of datasets, improving model generalization.

- Efficient Scraping and Crawling: The high-speed capabilities of NetNut proxies accelerate the process of web scraping and crawling. This efficiency is particularly crucial when dealing with large datasets, contributing to quicker model training and development.

- Ensuring Compliance and Ethical Data Practices: NetNut’s proxies provide anonymity and privacy during data acquisition, which supports ethical considerations and compliance requirements. This helps machine learning practitioners adhere to regulatory frameworks and maintain responsible data practices.

These strategies play a crucial role in maximizing the use of machine learning data and addressing common challenges in data availability and quality.

Conclusion

The strategic management of machine learning data is not just a present-day consideration; it lays the groundwork for the future of artificial intelligence (AI). As technology advances, the importance of ethical data practices, robust security measures, and efficient data utilization will only increase.

Organizations that proactively address data challenges and adopt effective strategies will be well positioned to lead in the field of artificial intelligence as data becomes an ever more valuable asset.

Feel free to contact us if you have any questions regarding the best proxy solution for your needs.

Frequently Asked Questions And Answers

How can you maintain privacy when collecting machine learning data?

Privacy is a major concern when collecting machine learning data from multiple sources. NetNut proxy servers help by enhancing your anonymity and strengthening data security.

How can you improve the diversity of machine learning datasets?

One of the challenges of obtaining data for machine learning is geo-restrictions. NetNut is a global proxy provider whose rotating residential IPs allow you to bypass these restrictions with ease. As a result, you can build a more diverse dataset, which improves the performance of your machine learning model.

What is the significance of machine learning data?

Just as humans rely on food and water, machine learning models rely on data to perform well. The success of a model is therefore strongly influenced by the quality of its machine learning data. In addition, machine learning data is essential because it is the model's source of knowledge and experience.