Introduction

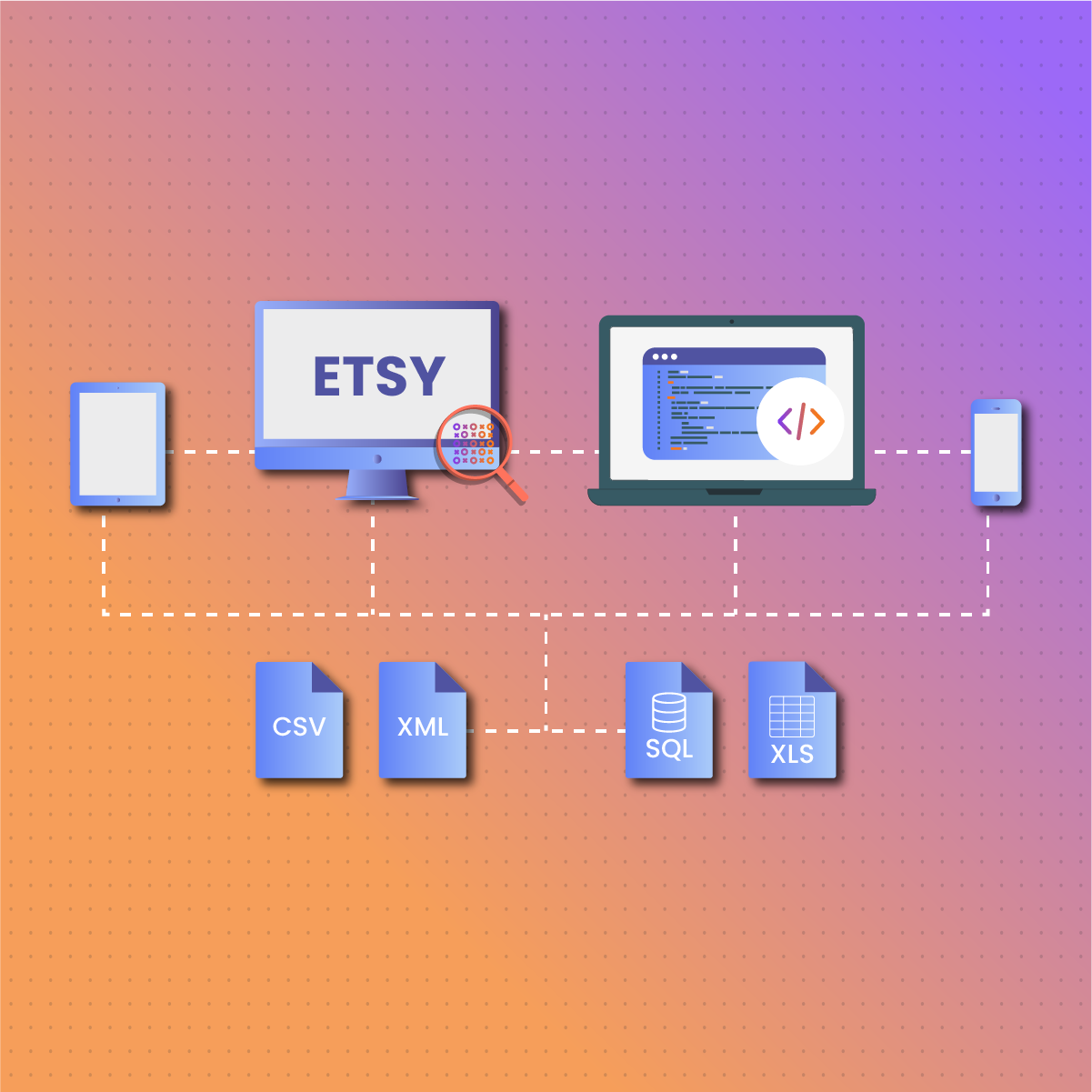

Ecommerce has made buying and selling online easier, and Etsy is one of its best-known platforms. Etsy isn’t just your average online marketplace; it’s crowded with artisans, crafters, and creative entrepreneurs who showcase their unique wares to a global audience.

With so much data available on Etsy, some individuals might want to gather information from the platform. This is where an Etsy scraper comes in.

Imagine sifting through thousands of product listings, analyzing pricing trends, and identifying top-selling items without manually clicking through endless pages. That’s the beauty of an Etsy scraper: it automates the tedious process of gathering data from the web, which lets you focus on what matters most: gaining insights and making informed decisions.

If you are wondering how to go about this process, do not worry; we have you covered here! In this guide, we will break down the complex concepts and walk through easy-to-follow steps and best practices for scraping data on Etsy. So, let’s get into that now!

Understanding Etsy’s Structure

Before learning how to scrape data on Etsy, it is essential to know how the platform is organized. Etsy is more than just a digital storefront; it’s a carefully curated marketplace designed to connect buyers with sellers easily. At its core, Etsy organizes its content into distinct categories, making it easy for users to navigate and discover products that align with their interests and preferences.

When you first land on Etsy’s homepage, you’ll notice categories like themes, jewelry, home décor, clothing, and accessories. These categories serve as entry points into Etsy’s ecosystem, allowing users to explore niche markets and uncover hidden gems within each specialized area.

Within each category, you’ll find a series of individual product listings uploaded by sellers showcasing their unique offerings. Listings typically include essential details such as product images, titles, descriptions, prices, and seller information, giving buyers a comprehensive overview of what’s available.

Clicking on a listing takes you to the product page, where you can look into the item’s features, read reviews from other buyers, and, if you’re ready to commit, make a purchase. Product pages serve as virtual storefronts for sellers, providing a platform to showcase their craftsmanship and connect with potential customers.

In essence, understanding Etsy’s structure isn’t just about navigating the website—it’s about unlocking the full potential of its data-rich ecosystem. With this knowledge, you’ll be well-equipped to embark on your scraping journey and uncover hidden data within Etsy’s digital marketplace.

Getting started: setting up your Etsy scraper environment

Setting up your environment is the first crucial step for scraping Etsy data with Python. By ensuring that your development environment is configured correctly and equipped with the necessary tools and libraries, you’ll be ready to embark on your scraping journey confidently. In this section, we’ll walk you through setting up your Etsy scraper environment step by step.

Installing Python

Before going into Etsy web scraping, you must ensure that Python is installed on your system. Python is a versatile programming language widely used for web development, data analysis, and automation—making it the perfect tool for our scraping adventure.

Installing Python is a straightforward process. You can download the latest version of Python from the official website and follow the installation instructions for your operating system. Once installed, you’ll have access to Python’s powerful features and libraries, paving the way for your scraping journey.

Installing Necessary Libraries (BeautifulSoup and Requests)

Now that Python is up and running, it’s time to install the essential libraries for web scraping: BeautifulSoup and Python Requests. These libraries are indispensable tools for parsing HTML content and making HTTP requests—critical components of any scraping endeavor.

Using Python’s package manager, pip, you can easily install BeautifulSoup and Requests with two commands:

pip install beautifulsoup4

pip install requests

With these libraries installed, you’ll have everything you need to scrape Etsy’s website and extract valuable data.
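To confirm that the installation worked, you can check that both modules are importable. The following is a minimal sketch using only the standard library; the helper name `missing_packages` is made up for this example. Note that the beautifulsoup4 package is imported under the name `bs4`.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


# "bs4" is the import name of the beautifulsoup4 package.
missing = missing_packages(["bs4", "requests"])
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("BeautifulSoup and Requests are both importable.")
```

If either module is reported as missing, rerun the corresponding pip command in the same Python environment you use to run the script.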

Importance of environment setup for successful scraping

Setting up your Etsy scraper environment lays the foundation for a successful scraping process. Installing Python and the necessary libraries equips you with the tools and resources needed to navigate the intricacies of web scraping easily.

In addition, a well-configured environment ensures access to the latest features and updates, maximizing the efficiency and reliability of your scraping efforts. Moreover, a correctly set environment streamlines the development process, allowing you to focus on writing code and extracting insights from Etsy’s data-rich pages.

How to identify your target data for the Etsy scraper process

Before web scraping, defining your objectives and deciding what specific information you want to extract from Etsy is essential. This step is crucial for guiding your scraping efforts and ensuring that you focus on gathering the most relevant and valuable data for your purposes.

Are you interested in analyzing pricing trends for a particular category of products? Or do you want to compile a database of top-selling items to inform your product development strategy? Whatever your goals, taking the time to identify your target data upfront will help streamline your scraping process and ensure that you extract the information you need efficiently.

Importance of defining data requirements before writing the Etsy scraper

Defining your data requirements before writing the scraper is key to success in web scraping. Without a clear understanding of what information you’re seeking to gather, you run the risk of wasting time and resources on extraneous data or, worse, missing out on critical insights altogether.

By establishing precise data requirements upfront, you can tailor your scraping process to focus on extracting the most relevant and actionable information from Etsy’s website. This ensures that you achieve your objectives more effectively and helps avoid unnecessary complexity and confusion during the scraping process.

Examples of potential data to extract (product names, prices, descriptions, seller information)

When it comes to scraping Etsy, the types of data you can extract are virtually endless. Here are a few examples of the kinds of information you might consider targeting:

- Product Names: Extracting product names allows you to identify and categorize specific items for analysis or further processing.

- Prices: Scraping prices enables you to analyze pricing trends, identify outliers, and compare prices across different sellers and categories.

- Descriptions: Extracting product descriptions provides additional context and detail about each item, helping you better understand its features and benefits.

- Seller Information: Scraping seller information allows you to identify top sellers, analyze seller ratings and reviews, and track seller activity over time.

These are just a few examples of the data types you can extract from Etsy, depending on your specific objectives and use cases. By identifying your target data upfront and understanding its significance, you can ensure that your scraping efforts are focused, efficient, and, ultimately, successful.
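One way to pin down your data requirements is to write them as a small record type before writing any scraping code. The sketch below is only an illustration: the `Listing` class, its field names, and the `parse_price` helper are assumptions made for this example, and the price parsing assumes a period as the decimal separator.

```python
import re
from dataclasses import dataclass, asdict
from decimal import Decimal
from typing import Optional


@dataclass
class Listing:
    """One scraped product listing; every field name here is illustrative."""
    name: str
    price: Optional[Decimal] = None
    description: str = ""
    seller: str = ""


def parse_price(text):
    """Convert a displayed price such as '$1,299.50' to a Decimal.

    Returns None when the text contains no number. Assumes ',' separates
    thousands and '.' marks the decimal part.
    """
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if match is None:
        return None
    return Decimal(match.group(0).replace(",", ""))


# Example record built from hypothetical scraped strings.
item = Listing(name="Ceramic mug", price=parse_price("$18.00"), seller="ExampleShop")
print(asdict(item))
```

Defining the fields first makes it obvious which page elements the scraper actually needs to locate, and which it can ignore.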

Writing your Etsy scraper

Now that you’ve identified your target data and set up your environment, it’s time to write your Etsy scraper. Here, we’ll guide you through creating a Python script to scrape data from Etsy’s website using the BeautifulSoup and Requests libraries.

Importing libraries and setting up the script

The first step in writing your scraper is to import the necessary libraries and set up the script environment. In this case, you’ll need to import BeautifulSoup for HTML parsing and Requests for making HTTP requests to Etsy’s website.

Here’s how you can import the required libraries and set up the script:

import requests

from bs4 import BeautifulSoup

Once you’ve imported the libraries, you can move on to the next step—sending HTTP requests to Etsy’s website.

Sending HTTP requests to Etsy’s website

To scrape data from Etsy, you’ll need to send HTTP requests to the relevant pages on the website. This involves specifying the URL of the page you want to scrape and using the Requests library to retrieve the HTML content of that page.

Here’s an example of how you can send an HTTP request to Etsy’s website and retrieve the HTML content:

url = 'https://www.etsy.com'

response = requests.get(url)

In this example, we’re sending a GET request to Etsy’s homepage and storing the response in a variable called response.
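In practice, it usually helps to set a timeout and to check the HTTP status before using the response. The sketch below wraps the request in a helper; the function name `fetch_html`, the User-Agent string, and the 10-second timeout are assumptions chosen for illustration, not values Etsy requires.

```python
import requests

# Illustrative values: the User-Agent string and the timeout are assumptions.
DEFAULT_HEADERS = {"User-Agent": "etsy-scraper-tutorial/0.1"}


def fetch_html(url, session=None, timeout=10):
    """Return the HTML body of `url`, raising an exception on HTTP error statuses."""
    session = session or requests.Session()
    response = session.get(url, headers=DEFAULT_HEADERS, timeout=timeout)
    response.raise_for_status()  # raises requests.HTTPError for 4xx/5xx responses
    return response.text
```

Calling `fetch_html('https://www.etsy.com')` would then either return the page HTML or raise an exception you can catch, instead of silently handing an error page to the parser.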

Parsing HTML content with BeautifulSoup

Once you’ve retrieved the HTML content of the page, the next step is to parse it using BeautifulSoup. BeautifulSoup allows you to easily navigate and extract data from HTML documents, making it an invaluable tool for web scraping.

Here’s how you can parse the HTML content using BeautifulSoup:

soup = BeautifulSoup(response.text, 'html.parser')

In this example, we’re creating a BeautifulSoup object called soup and passing in the HTML content of the page as well as the HTML parser.

Extracting desired data elements

With the HTML content parsed and stored in a BeautifulSoup object, you can extract the desired data elements from the page. This typically involves identifying specific HTML tags and attributes that contain the data you’re interested in and using BeautifulSoup’s methods to extract that data.

For example, if you wanted to extract the titles of all product listings on the page, you could use BeautifulSoup’s find_all() method like this:

product_titles = soup.find_all('h3', class_='text-gray text-truncate mb-xs-0 text-body')

This code snippet finds all <h3> elements with the specified class and stores them in a variable called ‘product_titles’.
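Because `find_all()` returns tag objects rather than plain strings, you will usually want to pull the text out of each one. The sketch below shows this on a made-up HTML fragment, so it runs without a network connection; the class name `listing-title` and the helper `extract_titles` are hypothetical, and Etsy’s real markup differs and changes over time.

```python
from bs4 import BeautifulSoup

# A made-up HTML fragment standing in for a listings page.
SAMPLE_HTML = """
<ul>
  <li><h3 class="listing-title">  Handmade ceramic mug </h3></li>
  <li><h3 class="listing-title">Knitted wool scarf</h3></li>
  <li><h3 class="other">Not a listing</h3></li>
</ul>
"""


def extract_titles(html, css_class="listing-title"):
    """Return the stripped text of every <h3> element carrying the given class."""
    soup = BeautifulSoup(html, "html.parser")
    tags = soup.find_all("h3", class_=css_class)
    return [tag.get_text(strip=True) for tag in tags]


print(extract_titles(SAMPLE_HTML))
```

If the selector matches nothing, `find_all()` returns an empty list, so an empty result is often the first sign that the page markup has changed.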

Example code snippets and explanations

Here’s a complete example of a simple scraper script that retrieves the titles of all product listings on Etsy’s homepage:

import requests
from bs4 import BeautifulSoup

# Fetch the homepage HTML
url = 'https://www.etsy.com'
response = requests.get(url)

# Parse the HTML and find the listing title elements
soup = BeautifulSoup(response.text, 'html.parser')
product_titles = soup.find_all('h3', class_='text-gray text-truncate mb-xs-0 text-body')

# Print the text of each title
for title in product_titles:
    print(title.text)

In this script, we’re importing the necessary libraries, sending an HTTP request to Etsy’s homepage, parsing the HTML content, finding all product titles on the page, and printing them to the console.

This example is a starting point for building more complex scrapers that extract additional data elements from Etsy’s website. Experiment with different HTML tags and attributes to extract the needed information. Feel free to consult BeautifulSoup’s documentation for more advanced techniques and features.

Handling Etsy scraper pagination

Pagination is a standard technique websites like Etsy use to organize large amounts of content across multiple pages. On Etsy, when search results or category listings exceed a certain number of items, the website divides the content into separate pages, typically displaying a limited number of items per page.

Pagination is essential for improving user experience by reducing page load times and making navigating through large datasets easier. However, pagination presents a challenge for web scrapers as it requires the scraper to navigate through multiple pages to access all the desired data.

Modifying the scraper to handle multiple pages of data

To handle pagination when scraping Etsy, you must modify your scraper to navigate multiple pages of data automatically. This involves identifying and extracting the pagination links from the initial page and then iteratively scraping each subsequent page until all relevant data has been collected.

Here’s a general outline of how you can modify your scraper to handle pagination:

- Identify the pagination links on the initial page.

- Extract the total number of pages or the link to the next page.

- Iterate through each page, sending HTTP requests and scraping data.

- Combine the data from all pages into a single dataset for analysis or storage.
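The outline above can be sketched as a simple loop. In this example, `fetch_page` is a hypothetical callable that you would supply yourself: it takes a 1-based page number and returns the list of items scraped from that page. Treating an empty page as the end of the results is an assumption that may not hold for every site.

```python
def scrape_all_pages(fetch_page, max_pages=5):
    """Collect items page by page until a page is empty or max_pages is reached.

    `fetch_page` is a hypothetical callable that takes a 1-based page number
    and returns a list of items scraped from that page.
    """
    all_items = []
    for page_number in range(1, max_pages + 1):
        items = fetch_page(page_number)
        if not items:  # an empty page is treated as the end of the results
            break
        all_items.extend(items)
    return all_items


# Usage with stand-in data instead of real HTTP requests.
fake_pages = {1: ["mug", "scarf"], 2: ["ring"]}
print(scrape_all_pages(lambda n: fake_pages.get(n, [])))
```

Keeping the page-fetching logic behind a callable lets you test the loop with stand-in data before pointing it at live pages.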

Techniques for navigating through pagination

There are several techniques you can use to navigate through pagination when scraping Etsy:

- Extracting next page links: Look for links or buttons labeled “Next” or “Next Page” on the initial page and extract the URL of the next page. Use this URL to send subsequent requests and continue scraping.

- Parsing page numbers: Some websites include pagination links with page numbers that you can parse to construct the URLs of subsequent pages. Extract the page numbers and generate the corresponding page URLs programmatically.

- Limiting the number of pages: Instead of scraping all pages of data, you may limit the number of pages to scrape based on your requirements or preferences. This can help prevent excessive scraping and speed up the process.

- Handling edge cases: Be prepared to handle edge cases, such as disabled or non-existent pagination links, irregular pagination formats, or variations in pagination behavior across different pages or categories.
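As an illustration of building page URLs programmatically and capping the number of pages, here is a minimal sketch using only the standard library. It assumes the page number is carried in a query parameter named `page`; both that parameter name and the example search URL are assumptions, so check the pagination links on the pages you are scraping.

```python
from urllib.parse import parse_qsl, urlencode, urlparse, urlunparse


def with_page(url, page, param="page"):
    """Return `url` with its page query parameter set to `page`."""
    parts = urlparse(url)
    query = dict(parse_qsl(parts.query))
    query[param] = str(page)  # add the parameter or overwrite an existing one
    return urlunparse(parts._replace(query=urlencode(query)))


def page_urls(first_url, last_page, max_pages=None):
    """Build URLs for pages 1..last_page, optionally capped at max_pages."""
    limit = last_page if max_pages is None else min(last_page, max_pages)
    return [with_page(first_url, number) for number in range(1, limit + 1)]


# Hypothetical search URL used only to show the output shape.
for page_url in page_urls("https://www.etsy.com/search?q=mug", last_page=10, max_pages=2):
    print(page_url)
```

The `max_pages` cap implements the "limiting the number of pages" technique, so the scraper stops early even when the site reports many more pages.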

By using these techniques, you can ensure that your scraper effectively navigates through pagination on Etsy and retrieves all the relevant data across multiple pages. Handling pagination allows you to access a more comprehensive dataset, enabling deeper analysis and insights from Etsy’s wealth of information.

Best practice for the Etsy scraper process: use of NetNut proxies

Scraping data from Etsy using Python can be a powerful way to gather valuable insights, monitor trends, and extract information for various purposes. However, when conducting web scraping activities, it’s essential to adhere to best practices to ensure efficiency. One such practice is the use of a proxy service.

Why should you use NetNut proxy service?

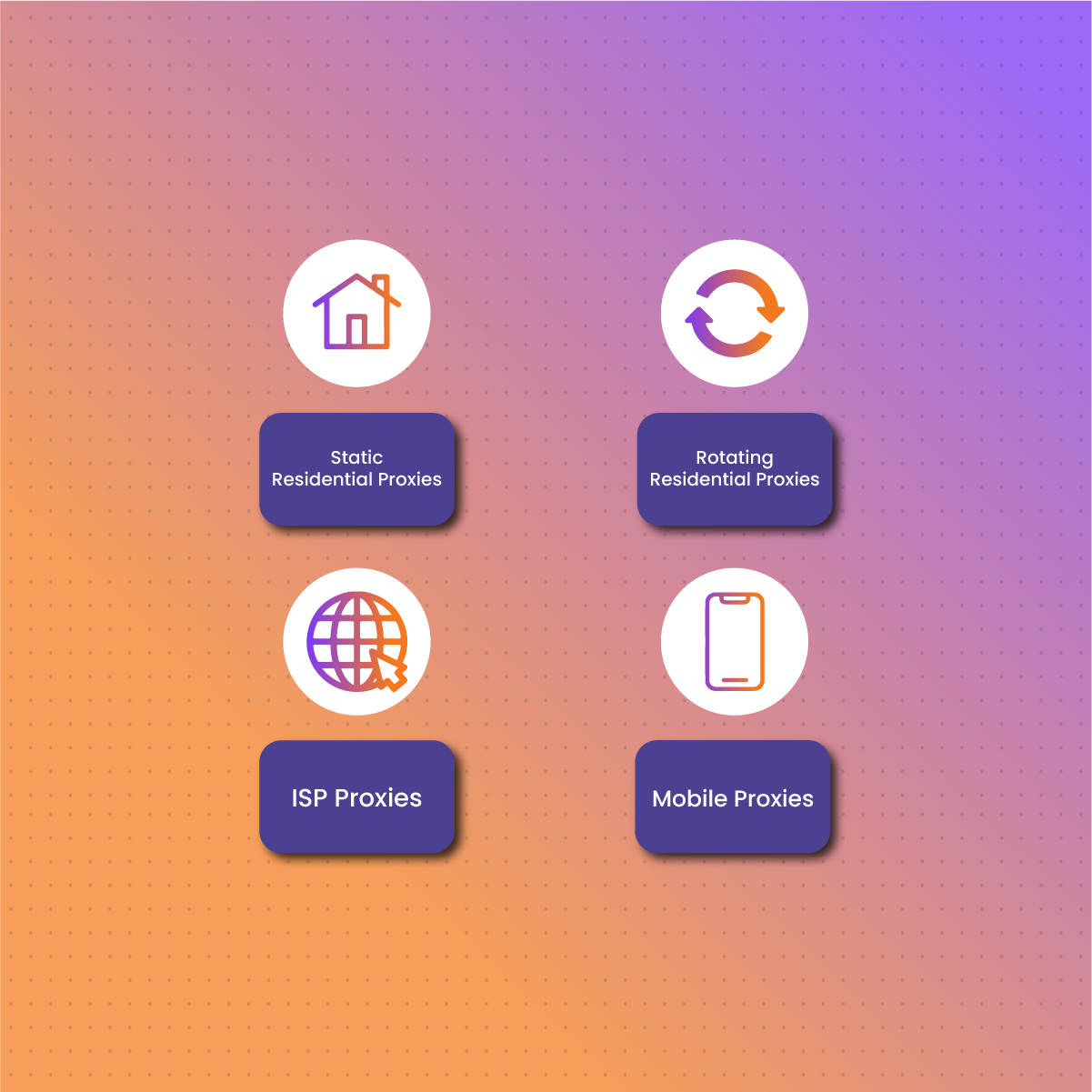

NetNut provides a range of proxy solutions designed to facilitate web scraping activities. These include:

- Static Residential Proxies: Static residential proxies offer consistent IP addresses that remain unchanged throughout the scraping session. They are suitable for tasks that require stable connections and low latency, such as browsing product listings or monitoring prices on Etsy.

- Rotating Residential Proxies: Rotating residential proxies automatically switch between a pool of IP addresses, providing diversity and preventing IP blocking or detection. They are ideal for scraping large amounts of data from Etsy without triggering rate limits or bans.

- ISP Proxies: ISP proxies route traffic through Internet Service Providers (ISPs), mimicking genuine user behavior and reducing the likelihood of detection by anti-scraping measures. They are effective for scraping dynamic content or conducting detailed market research on Etsy.

- Mobile Proxies: Mobile proxies emulate mobile device connections, enabling access to mobile-specific content on Etsy and ensuring compatibility with mobile-responsive features. They are beneficial for scraping mobile listings or capturing mobile user data.

By integrating NetNut proxies effectively, you can enhance your Etsy scraping endeavors’ performance, reliability, and compliance, whether monitoring product prices, analyzing market trends, or extracting valuable data for research purposes. In the long run, integrating proxies can help optimize your Etsy scraping operations and unlock the full potential of Etsy’s data-rich platform.
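Whichever proxy type you choose, the Requests library accepts proxy settings as a dictionary passed through its `proxies` argument. The sketch below builds that dictionary; the host, port, and credentials are placeholders rather than real NetNut endpoints, and the helper name `build_proxies` is made up for this example. Use the connection details from your own provider.

```python
from urllib.parse import quote


def build_proxies(username, password, host, port):
    """Build the mapping that Requests accepts through its `proxies` argument."""
    # Percent-encode credentials so characters like '@' or ':' do not break the URL.
    credentials = f"{quote(username, safe='')}:{quote(password, safe='')}"
    proxy_url = f"http://{credentials}@{host}:{port}"
    return {"http": proxy_url, "https": proxy_url}


# Placeholder values only; substitute the details from your proxy provider.
proxies = build_proxies("USERNAME", "p@ss:word", "proxy.example.com", 8080)
print(proxies["https"])
```

You would then pass the result to a request, for example `requests.get(url, proxies=proxies, timeout=10)`.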

Conclusion

In summary, this guide has covered the essentials of Etsy scraping using Python: understanding Etsy’s website structure, setting up your environment, writing your scraper, and navigating pagination. Together, these steps equip you with the skills needed to extract valuable information from Etsy’s vibrant marketplace.

Throughout, keep ethical and responsible scraping practices in mind. By respecting website policies, adhering to rate limits, and avoiding server overload, you ensure that your scraping process contributes positively to the online marketplace while maintaining the integrity of Etsy.

Whether you’re a seasoned developer, a data enthusiast, or an entrepreneur seeking market insights, the power of Etsy web scraping with Python opens doors to endless possibilities on Etsy and beyond. Enjoy your Etsy scraping process!

Frequently Asked Questions

Is it legal to web scrape data on Etsy?

Web scraping is legal in many jurisdictions as long as it complies with the website’s terms of service and respects copyright laws. However, it’s essential to review Etsy’s terms of use and robots.txt file to ensure compliance.
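Python’s standard library can help with the robots.txt part of that review. The sketch below checks URLs against a made-up robots.txt; the sample rules are for illustration only and are not Etsy’s actual file, and the user agent name `my-scraper` is an assumption. A robots.txt check does not replace reading the terms of use.

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt used purely for illustration; it is not Etsy's actual file.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /cart
Allow: /
"""


def is_allowed(robots_text, user_agent, url):
    """Return True if the given robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://www.example.com/search?q=mug"))
print(is_allowed(SAMPLE_ROBOTS, "my-scraper", "https://www.example.com/cart"))
```

To check a live site, `RobotFileParser` can also load the file itself through its `set_url()` and `read()` methods.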

Can I use scraped data on Etsy for commercial purposes?

While scraping data from Etsy for personal use or research purposes may be permissible, using scraped data for commercial purposes may require permission from Etsy and potentially involve licensing agreements or legal considerations.

Are there risks of getting blocked by Etsy?

Engaging in aggressive or unauthorized scraping practices may result in being blocked or banned from accessing Etsy’s website. Exercise caution, respect website policies, and monitor scraping activity to avoid potential blocks or penalties.
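One simple way to keep scraping activity moderate is to pause between requests. The sketch below is an assumption-laden example: the 2-second default delay is arbitrary, and `fetch` is a hypothetical callable you supply that downloads a single URL.

```python
import time


def polite_fetch_all(urls, fetch, delay_seconds=2.0, sleep=time.sleep):
    """Fetch each URL in turn, pausing between consecutive requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        results.append(fetch(url))
    return results


# Usage with a stand-in fetch function and a recorded (not real) sleep.
pauses = []
pages = polite_fetch_all(["a", "b", "c"], fetch=str.upper, delay_seconds=1.5, sleep=pauses.append)
print(pages, pauses)
```

The `sleep` parameter exists only so the pause can be replaced in tests; in normal use you would leave it at its default.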