Headless Browsers: Maximizing Web Data Scraping with Netnut Proxies

Introduction To Headless Browsers

Extracting data from a static web page differs from extracting it from a dynamic page. A dynamic page has elements that change constantly, often because JavaScript builds or updates them in the browser. Therefore, getting data from these websites usually requires running that code. This creates problems because the code normally runs inside a browser with a graphical interface.

As a result, the browser needs more time and resources to render the web page, which slows down data extraction. This is where headless browsers come in. They offer an efficient way to extract data from a dynamic website without waiting for graphical elements to be drawn on screen.

This article covers web scraping with headless browsers, including what headless browsers are, their benefits, challenges, and use cases, and how to get the most out of them for data scraping.

Let us dive in!

What Is A Headless Browser?

A headless browser is a web browser without a graphical user interface (GUI). It operates programmatically and is commonly used for automated tasks such as web scraping, testing, and monitoring. Unlike standard browsers, which display content for human interaction, headless browsers render web pages in the background, executing JavaScript, handling cookies, and simulating user actions like clicking and form submissions. This capability makes them ideal for tasks where visual feedback isn’t necessary, allowing for faster, more efficient automated interactions with websites.

Because it has no graphical user interface, features like icons and drop-down menus found on regular web browsers are absent. However, it can still perform the functions of a regular web browser, such as clicking page elements, uploading data, downloading files, and navigating between pages.

Headless browsers have two primary functions. They are often used for cross-browser testing, but they are also gaining popularity for their critical role in web scraping. Headless browsers excel at executing JavaScript and mimicking human behavior, making them a valuable tool for evading anti-scraping filters.

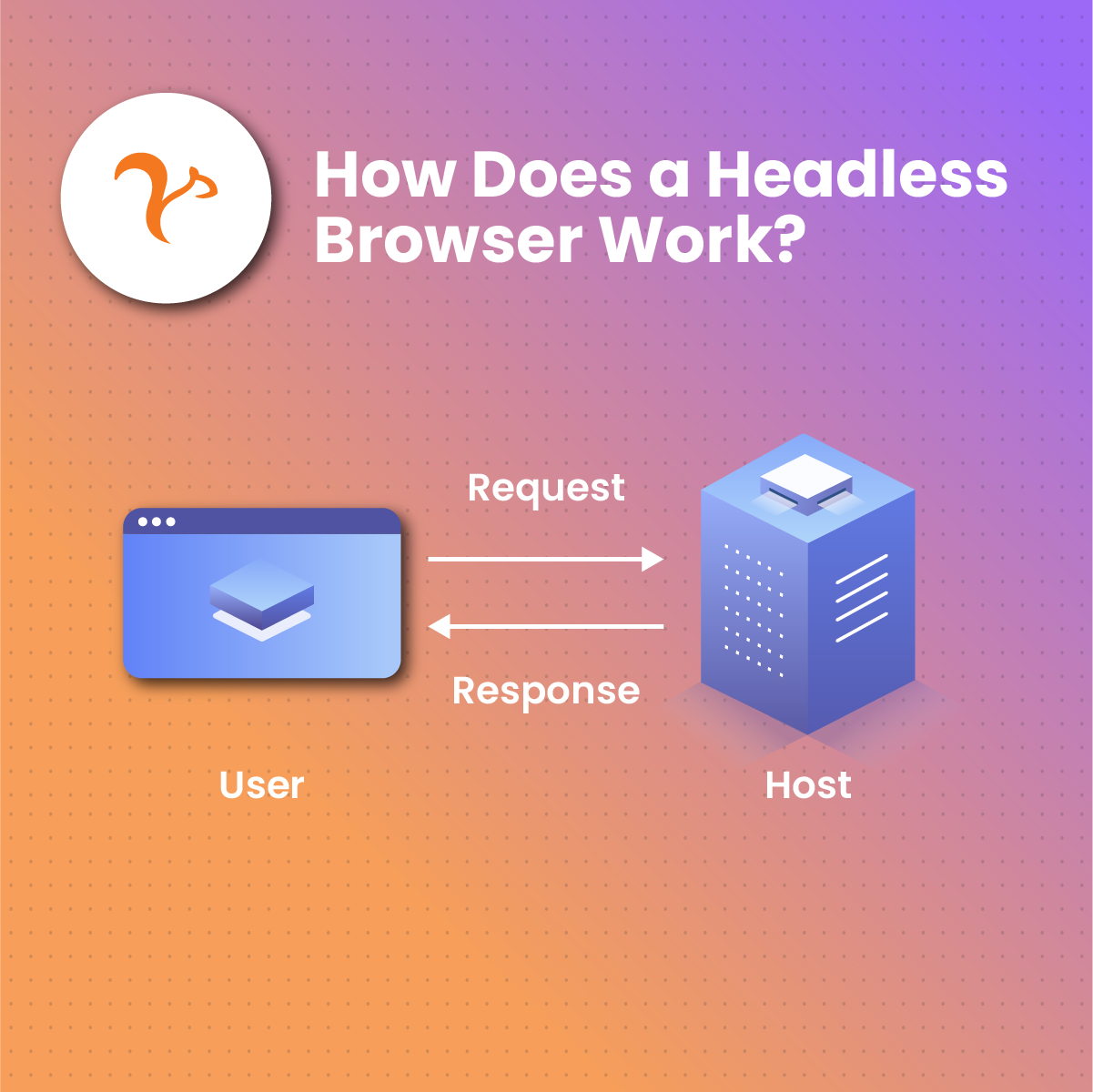

How do headless browsers work?

Headless browsers function by running in the background without a user interface, making them faster and more resource-efficient than traditional browsers. They use a browser engine, such as Blink (used by Chromium) or Gecko (used by Firefox), to load and interact with web content as a typical browser would, but without displaying it on the screen. This means they can execute JavaScript, manage cookies, handle CSS, and even emulate different device settings like screen size or location. Developers and testers use headless browsers to automate workflows such as data extraction, UI testing, and performance monitoring. They can simulate user actions like clicking, scrolling, and filling out forms, allowing scripts to perform a wide range of web interactions programmatically.

A headless browser works like a regular web browser. When it loads a website, it sends a request to the web server, receives the response (HTML document), parses the page, and executes any JavaScript code.
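The request, parse, and execute cycle described above can be seen in a minimal sketch using Playwright's Python API (one of the drivers covered later). It assumes Playwright is installed with `pip install playwright` followed by `playwright install chromium`. The function name `fetch_rendered_html` and the 30-second timeout are illustrative choices, not part of any library.

```python
def fetch_rendered_html(url: str, timeout_ms: int = 30_000) -> str:
    """Load `url` in headless Chromium and return the DOM after its scripts ran.

    Hypothetical helper name; the timeout default is an arbitrary choice.
    """
    # Imported inside the function so this module loads even without Playwright.
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        # headless=True starts the browser engine without opening a window.
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            # goto() sends the request, receives the HTML, parses it, and runs the
            # page's JavaScript; by default it returns once the "load" event fires.
            page.goto(url, timeout=timeout_ms)
            # content() serializes the current DOM, including script-generated nodes.
            return page.content()
        finally:
            browser.close()
```

Calling `fetch_rendered_html("https://example.com")` returns the HTML as it looks after JavaScript has run, which is what distinguishes it from a plain HTTP download.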

One feature that sets headless browsers apart is that they provide access to web page data through an API (Application Programming Interface), such as the Chrome DevTools Protocol or WebDriver. However, you usually do not control headless browsers through these APIs directly. Instead, specialized libraries wrap the browser's API in a format that is easier to work with.

These specialized libraries are also known as drivers, and people often confuse them with the headless browsers themselves.
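To make the difference between the browser's API and a driver concrete, here is a hedged sketch that starts headless Chrome with its DevTools API exposed and reads the `/json/version` endpoint. It assumes a Chrome or Chromium executable named `google-chrome` on your PATH (the name varies by platform); the helper names, port 9222, and profile directory are illustrative assumptions. Drivers such as Puppeteer, or Playwright's `connect_over_cdp`, attach to the browser through the `webSocketDebuggerUrl` this endpoint returns.

```python
import json
import urllib.request


def chrome_debug_command(profile_dir: str, binary: str = "google-chrome", port: int = 9222) -> list[str]:
    """Command line that starts headless Chrome with the DevTools API on `port`."""
    return [
        binary,  # assumption: the executable name differs by OS and installation
        "--headless=new",
        f"--remote-debugging-port={port}",
        # A dedicated profile keeps automation separate from your everyday profile.
        f"--user-data-dir={profile_dir}",
    ]


def devtools_version_url(port: int = 9222) -> str:
    """HTTP endpoint that describes the running browser."""
    return f"http://127.0.0.1:{port}/json/version"


def read_devtools_version(port: int = 9222) -> dict:
    """Query a running browser; the result includes 'webSocketDebuggerUrl'."""
    with urllib.request.urlopen(devtools_version_url(port), timeout=5) as resp:
        return json.load(resp)
```

You could start the browser with `subprocess.Popen(chrome_debug_command("/tmp/chrome-profile"))` (a hypothetical path) and then call `read_devtools_version()` to see the raw API that drivers wrap.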

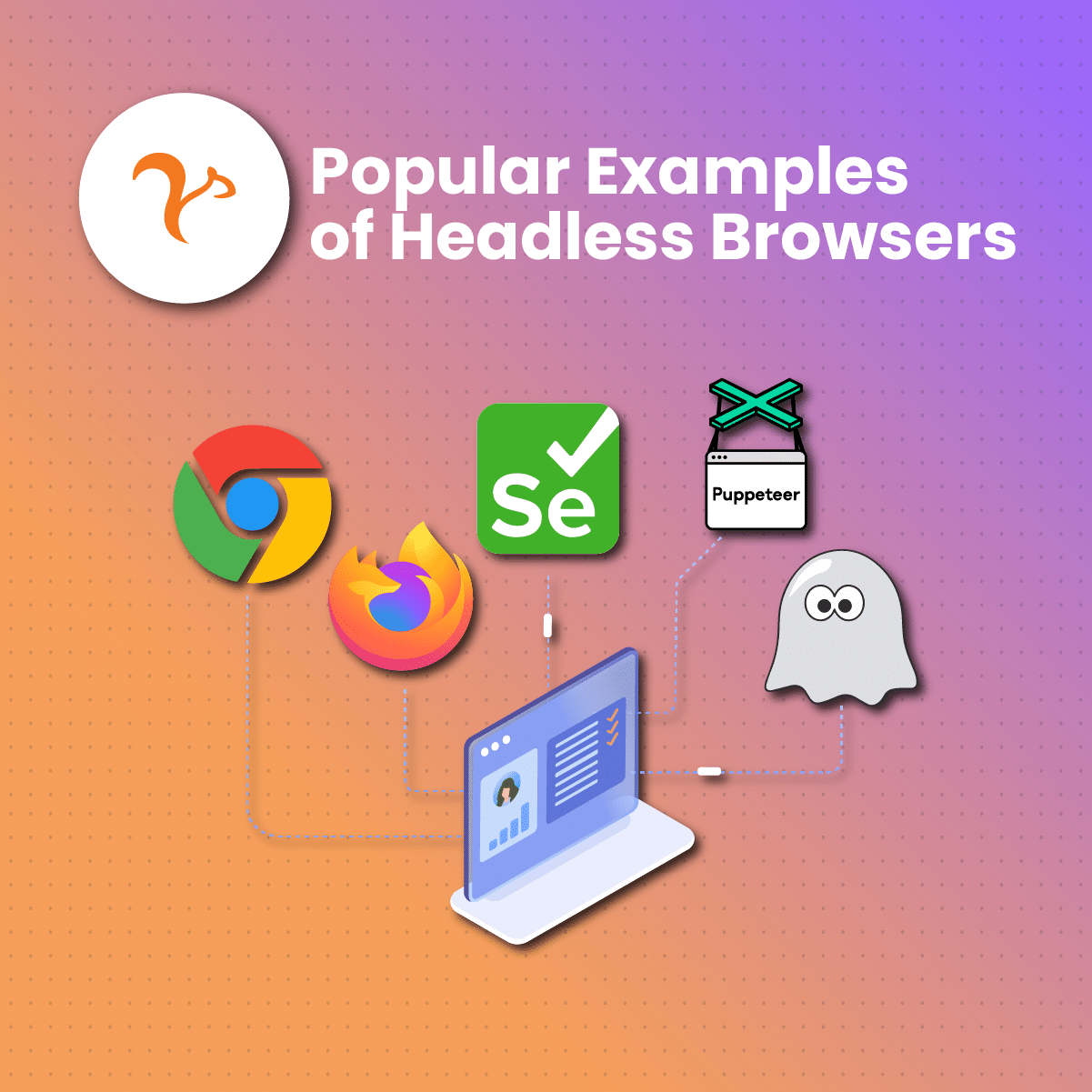

Examples of Headless Browsers

A significant feature of headless browsers is their ability to run on few resources. In simpler terms, the browser should not slow down other applications on the system. Here are some examples of headless browsers:

- Chromium: This is arguably the most popular headless browser. Although it was not the first headless browser, its headless mode brought innovative features. In addition, many other popular browsers, such as Google Chrome, Edge, and Brave, are built on Chromium.

- Firefox: Firefox is another browser that can run headless. Its settings let you configure it for web testing or data scraping, and in headless mode it can be controlled through automation interfaces such as WebDriver. Headless mode is available in version 56 or higher.

- Google Chrome: Google Chrome is built on Chromium, so it can also run headless. One advantage is that, because Chrome is what most real users run, headless Chrome makes it easier to mimic a real user. It also has command-line options for quick data extraction, such as `--dump-dom`, which prints the rendered HTML.

- Splash: Splash is a less popular name. It is a lightweight rendering service written in Python, with an HTTP API, a built-in browser-based interface for testing scripts, and Lua scripting support. It is built on the QtWebKit engine rather than a mainstream browser, but it is fast and usually gets the job done.

- WebKit: WebKit, the open-source browser engine behind Apple Safari, can also run headless. Developers often use this to check how websites or updates behave in a Safari-like engine.
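Several engines in this list can be driven from the same code. As a sketch, assuming Playwright for Python, which ships its own builds of Chromium, Firefox, and WebKit (Playwright's WebKit is close to, but not identical to, Safari), the hypothetical helper below launches any of them headless.

```python
ENGINES = ("chromium", "firefox", "webkit")


def launch_headless(playwright, engine: str = "chromium"):
    """Launch one of Playwright's bundled engines without a window.

    `playwright` is the object yielded by `sync_playwright()`; this helper is a
    hypothetical convenience wrapper, not part of Playwright itself.
    """
    if engine not in ENGINES:
        raise ValueError(f"unknown engine {engine!r}; expected one of {ENGINES}")
    # p.chromium, p.firefox, and p.webkit expose the same launch() method.
    return getattr(playwright, engine).launch(headless=True)
```

Inside `with sync_playwright() as p:`, calling `launch_headless(p, "firefox")` returns a browser you can use and then close. To drive an installed Google Chrome instead of the bundled Chromium, Playwright also accepts `p.chromium.launch(channel="chrome")`.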

Benefits of Headless Browsers

Headless browsers offer numerous benefits, especially for developers and testers. They provide a fast, efficient way to automate web interactions, enabling tasks such as web scraping, automated testing, and continuous integration without the overhead of a visual interface. This results in quicker execution times and reduced resource consumption. Headless browsers are also valuable for performance monitoring, as they can simulate user experiences across different devices and environments.

They help ensure web applications are functional, responsive, and performant by testing how they handle JavaScript execution, page loading times, and dynamic content. Additionally, headless browsers enhance security by automating tests to detect vulnerabilities and ensure compliance with security standards, making them a crucial tool in modern web development and quality assurance processes.

Specialized Libraries/Drivers For Headless Browsers

Data enthusiasts and developers rely on specialized libraries to harness the potential of headless browsers. They empower users to interact with headless browsers and extract data from web pages.

Here are some common drivers that control headless browsers:

Selenium

Selenium is an open-source automation tool. Although it is older and heavier than newer alternatives, it is the most commonly used and has a large community. Selenium supports several browsers on various operating systems. Selenium WebDriver delivers good results, but it can be slower than newer tools.
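A minimal Selenium sketch, assuming Selenium 4.6 or later (whose Selenium Manager locates a matching ChromeDriver automatically) and a local Chrome installation. The helper names are hypothetical, and `--window-size` is an optional extra that gives pages a desktop-sized viewport.

```python
def chrome_headless_args(window_size: str = "1920,1080") -> list[str]:
    """Command-line arguments that make Chrome run headless under Selenium."""
    return ["--headless=new", f"--window-size={window_size}"]


def selenium_page_source(url: str) -> str:
    """Open `url` in headless Chrome via Selenium WebDriver and return the page source."""
    from selenium import webdriver  # lazy import; requires `pip install selenium`

    options = webdriver.ChromeOptions()
    for arg in chrome_headless_args():
        options.add_argument(arg)
    # Selenium Manager (4.6+) finds or downloads a suitable driver binary.
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        return driver.page_source
    finally:
        driver.quit()  # always release the browser process
```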

Playwright

Playwright is another open-source library. Microsoft built it to automate Chromium, Firefox, and WebKit. Playwright may be the preferred library for controlling headless browsers because it is available in multiple programming languages, including Python, JavaScript/TypeScript, Java, and .NET. In addition, Playwright was created by engineers who previously worked on Puppeteer, and it builds on many of Puppeteer's ideas.

Puppeteer

Puppeteer is an open-source Node.js library that has steadily gained popularity as a reliable headless browser driver. It automates Chrome and Chromium and is maintained by Google's Chrome team.

What are the Use Cases of a Headless Browser?

Headless browsers process HTML pages quickly. In addition, they are faster than regular browsers because they skip drawing the page on screen. This makes them popular for website testing. Let us examine the use cases of headless browsers:

Headless Browser Scraping

Headless browser scraping involves using a headless browser to retrieve data from a web page. You can do much more with a headless browser than with plain HTTP clients such as Python's requests library. Headless browsers can run a website's JavaScript, which enables the extraction of dynamic content. You can also program them to imitate human actions, such as clicking or scrolling, interact with elements on the page, and take screenshots.
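Here is a sketch of scraping JavaScript-rendered content with Playwright for Python. The URL, CSS selector, and screenshot path are placeholders you supply, and `scrape_texts` and `clean_texts` are hypothetical helper names.

```python
from typing import List, Optional


def clean_texts(texts: List[str]) -> List[str]:
    """Strip whitespace and drop empty strings from extracted text."""
    return [t.strip() for t in texts if t.strip()]


def scrape_texts(url: str, selector: str, screenshot_path: Optional[str] = None) -> List[str]:
    """Return the visible text of every element matching `selector`."""
    from playwright.sync_api import sync_playwright  # lazy import

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True)
        try:
            page = browser.new_page()
            page.goto(url)
            # Block until JavaScript has inserted at least one matching element.
            page.wait_for_selector(selector)
            if screenshot_path:
                page.screenshot(path=screenshot_path, full_page=True)
            return clean_texts(page.locator(selector).all_inner_texts())
        finally:
            browser.close()
```

Waiting for the selector, rather than reading the page as soon as it loads, is what lets this capture content that scripts add after the initial HTML arrives.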

Automation

Automation is another use case of headless browsers. Automated tests can cover anything repetitive enough to script, saving time and effort. They are used to check mouse clicks and form submissions, and they support development, QA, and installation workflows.
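As an illustration of automating a form submission, the sketch below fills and submits a log-in form on a page object from Playwright for Python. The CSS selectors `#username`, `#password`, and `button[type=submit]` are hypothetical and must be replaced with the ones used by the target page.

```python
def submit_login_form(page, username: str, password: str) -> None:
    """Fill and submit a log-in form on an already-open Playwright page."""
    # Hypothetical selectors; inspect the target page for the real ones.
    page.fill("#username", username)
    page.fill("#password", password)
    page.click("button[type=submit]")
    # Wait until network activity settles so the next step sees the result.
    page.wait_for_load_state("networkidle")
```

In an automated test you would follow this with an assertion about the resulting page, for example that an expected element is now present.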

Website Performance

You can employ a headless browser to test the performance of a website. A browser without a graphical user interface typically loads websites faster, which makes it a suitable option for testing websites that do not require user-interface interaction. However, because it skips on-screen rendering, its timings do not fully reflect what a real user experiences, so it is most reliable for small performance tests such as log-in checks.
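A small performance check of this kind might look like the sketch below, assuming a Playwright `page` object. It shows two measurements: wall-clock time around `page.goto()`, and the browser's own Navigation Timing entry read with `page.evaluate()`. The function names are hypothetical.

```python
import statistics
import time
from typing import Dict, List


def time_page_load(page, url: str) -> float:
    """Seconds until page.goto() returns (by default, after the 'load' event)."""
    start = time.perf_counter()
    page.goto(url)
    return time.perf_counter() - start


def navigation_duration_ms(page) -> float:
    """Load duration measured inside the browser via the Navigation Timing API."""
    return page.evaluate("() => performance.getEntriesByType('navigation')[0].duration")


def summarize(samples: List[float]) -> Dict[str, float]:
    """Median and worst case of repeated measurements."""
    return {"median": statistics.median(samples), "max": max(samples), "runs": len(samples)}
```

Single measurements are noisy, so running `time_page_load` several times and passing the results to `summarize` gives a more dependable figure.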

Advantages of using Headless Browsers

Automation

Headless browsers are powerful tools for automating interactions with websites. They can perform activities such as page navigation, form filling, and clicking buttons, and these capabilities extend to automating tasks during web data extraction. While this saves time, it works reliably only on websites that are not protected by anti-scraping measures or that do not regularly update them.

Efficient Resource Management

Headless browsers excel at resource management. They are designed to operate without extensive resources, so they can load and process the content of web pages without straining the system.

Scalability

The ability of headless browsers to run in the background without causing system slowdown is a testament to their scalability. Since they do not rely on graphical resources, they are ideal for handling multiple processes simultaneously.
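One way to use this scalability is to open many pages concurrently in a single headless browser while capping how many run at once. The sketch below assumes Playwright's async API; `run_limited`, `make_page_fetcher`, and the default limit of 5 are hypothetical choices.

```python
import asyncio
from typing import Awaitable, Callable, Iterable, List


async def run_limited(
    urls: Iterable[str],
    fetch: Callable[[str], Awaitable[str]],
    limit: int = 5,
) -> List[str]:
    """Run fetch(url) for every URL with at most `limit` calls in flight."""
    semaphore = asyncio.Semaphore(limit)

    async def guarded(url: str) -> str:
        async with semaphore:
            return await fetch(url)

    # gather() returns results in the same order as the input URLs.
    return list(await asyncio.gather(*(guarded(u) for u in urls)))


def make_page_fetcher(browser) -> Callable[[str], Awaitable[str]]:
    """Build a fetch() that opens one tab per URL in a shared async Playwright browser."""

    async def fetch(url: str) -> str:
        page = await browser.new_page()
        try:
            await page.goto(url)
            return await page.content()
        finally:
            await page.close()

    return fetch
```

Within `async with async_playwright() as p:`, you would launch a browser with `await p.chromium.launch(headless=True)` and then call `await run_limited(urls, make_page_fetcher(browser))`. Sharing one browser and opening lightweight tabs is usually cheaper than starting a new browser per URL.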

Speed

Headless browsers are usually faster than their graphical counterparts because they skip drawing the interface and consume fewer resources. This speed advantage significantly benefits tasks like data scraping.

Challenges of Headless Browsers

- Compatibility problems: Some websites are built with GUI-based browsers in mind, so a headless browser may not behave correctly on these pages.

- Lack of visual feedback: The primary drawback of using headless browsers is the lack of visual feedback. Therefore, activities like troubleshooting and debugging that require a visual interface become challenging.

- Maintenance: Another drawback to using headless browsers is the need for regular updates to fix bugs and keep up with current technology trends.

- Timing issues with JavaScript: Headless browsers execute JavaScript just like regular browsers, but some websites rely heavily on JavaScript to load their dynamic content after the page itself has loaded. If a script reads the page too early, it gets incomplete or incorrectly rendered content, so headless scrapers must explicitly wait for the elements they need.
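Two of these challenges, missing visual feedback and content that JavaScript loads late, are commonly handled by waiting explicitly for the element you need and saving a screenshot when it never appears. The sketch assumes Playwright for Python; `read_when_ready` and the `debug.png` path are hypothetical. For interactive debugging, Playwright can also run the same script with a visible window via `launch(headless=False, slow_mo=250)`.

```python
from typing import Optional

try:
    from playwright.sync_api import TimeoutError as PlaywrightTimeoutError
except ImportError:  # lets this module load without Playwright installed
    PlaywrightTimeoutError = TimeoutError


def read_when_ready(page, selector: str, timeout_ms: int = 10_000,
                    debug_path: str = "debug.png") -> Optional[str]:
    """Wait for JS-rendered content; on timeout, save a screenshot for debugging."""
    try:
        page.wait_for_selector(selector, timeout=timeout_ms)
        return page.inner_text(selector)
    except PlaywrightTimeoutError:
        # Without a visible window, a full-page screenshot shows what actually rendered.
        page.screenshot(path=debug_path, full_page=True)
        return None
```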

Using Headless Browsers For Data Scraping

Headless browser scraping is the process of extracting data from websites with a headless browser. In other words, it means data scraping without a graphical user interface.

As a result, you skip the step of drawing pages on screen during data extraction. You can use almost any programming language, but you must first install a package or library that can control the headless browser.

Does Headless Data Extraction Promise Speed?

Yes, headless data extraction is typically a faster method of scraping. The speed can be attributed to two factors. First, headless browsers eliminate the process of UI rendering, which enhances the efficiency of data extraction. Secondly, it requires fewer resources to extract data from a website.

Skipping these steps saves time. You can reduce data consumption further by not downloading resources the scraper does not need, such as images and fonts. Therefore, if you use services that bill you based on transferred data volume, you can expect noticeably lower costs than with a full browser setup.
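If you are billed by transferred data, a common way to cut volume is to stop the headless browser from downloading assets the scraper never reads. This sketch uses Playwright's request interception (`page.route`); the choice of blocked resource types is an assumption to tune per site. Stylesheets are left alone because some pages' scripts depend on layout.

```python
BLOCKED_TYPES = frozenset({"image", "media", "font"})


def should_block(resource_type: str) -> bool:
    """True for heavy assets a text scraper usually does not need."""
    return resource_type in BLOCKED_TYPES


def install_resource_filter(page) -> None:
    """Abort requests for blocked resource types on a Playwright page."""

    def handle(route):
        if should_block(route.request.resource_type):
            route.abort()  # never downloaded, so no proxy bandwidth is used
        else:
            route.continue_()

    # "**/*" matches every request the page makes.
    page.route("**/*", handle)
```

Call `install_resource_filter(page)` before `page.goto(...)` so the filter applies from the first request.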

Another strength of headless browsers is their ability to scrape data from dynamic pages. Plain HTTP clients, such as Python's requests library, are fast, but they receive only the HTML the server sends and do not run JavaScript, so dynamic data is often missing. Headless browsers, by contrast, let you interact with the web page, fill out forms, and do various other activities.

Are Headless Browsers Undetectable?

No, they are not undetectable. Some websites use detection technology that can identify scraping activity even when it comes from a headless browser, flagging it as a bot rather than a human. Drivers like Selenium can help by scripting more human-like behavior, such as natural pauses between actions, so the activity looks closer to a real user's.

The trade-off is that this approach sacrifices speed for anonymity.
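Slowing a script down to look less bot-like usually means adding randomized pauses between actions. The sketch below is hypothetical and library-agnostic: each action is any zero-argument callable (for example, a wrapped Selenium or Playwright click), and the 0.8 to 2.5 second range is an arbitrary assumption. Pauses address only timing, which is one of several signals sites combine.

```python
import random
import time
from typing import Callable, List, Optional, Sequence


def pause_schedule(steps: int, min_s: float = 0.8, max_s: float = 2.5,
                   seed: Optional[int] = None) -> List[float]:
    """Random delays (seconds) to insert after each of `steps` actions."""
    rng = random.Random(seed)  # a seed makes the schedule reproducible for testing
    return [rng.uniform(min_s, max_s) for _ in range(steps)]


def run_with_pauses(actions: Sequence[Callable[[], None]],
                    min_s: float = 0.8, max_s: float = 2.5) -> None:
    """Run each action, then sleep for a random, human-like interval."""
    for action, delay in zip(actions, pause_schedule(len(actions), min_s, max_s)):
        action()
        time.sleep(delay)
```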

Some of the scraping detection techniques include:

CAPTCHAs:

CAPTCHAs are used to filter out bots. You may have seen these annoying little guys pop up here and there. While the tasks are easy for humans, machines may struggle to get them right.

CAPTCHAs may seem like a normal part of a website, but they play a key role in identifying and blocking suspected bot scraping. A reliable proxy service can help you trigger them less often.

Request Frequency:

Request frequency is a strong indicator of whether a visitor is a bot. Therefore, the high performance of a headless browser can be both a blessing and a challenge: while you can send many requests simultaneously, websites with anti-scraping measures can quickly detect and block this activity.

Furthermore, it is almost impossible to know how many requests it takes to trigger a website's anti-scraping measures. This can lead to blocked IP addresses and frustration. This is where rotating residential proxies come into play: they let you spread requests across many IP addresses to avoid being blocked.
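Client-side rotation can be sketched as a round-robin assignment of proxy endpoints to URLs. The proxy URLs below are placeholders, and `assign_proxies` is a hypothetical helper. Many rotating residential services instead rotate IPs behind a single gateway address, in which case the provider handles this step. With Playwright, each assigned proxy could be passed to `browser.new_context(proxy={"server": proxy})`.

```python
import itertools
from typing import List, Tuple


def assign_proxies(urls: List[str], proxies: List[str]) -> List[Tuple[str, str]]:
    """Pair each URL with the next proxy in round-robin order."""
    if not proxies:
        raise ValueError("at least one proxy is required")
    # cycle() restarts from the first proxy after the last one is used;
    # zip() stops when the (finite) URL list runs out.
    return list(zip(urls, itertools.cycle(proxies)))
```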

User-agent detection:

When a browser sends a request to a website, it includes a header called the user agent, which identifies the browser type and version. Some web pages use user-agent detection to identify scrapers. If the user agent reveals a headless browser (headless Chrome, for example, has historically reported itself as "HeadlessChrome"), the site may block the request or the IP address.

However, as with most problems associated with data scraping, there is a solution. A residential proxy service can protect your own IP address from being blocked.
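A quick way to check for the telltale token, and to supply a regular-looking user agent instead, is sketched below. It assumes the "HeadlessChrome" marker that headless Chrome has historically included in its default user agent; the helper names are hypothetical. In Playwright, the result can be passed as `browser.new_context(user_agent=...)`. The user agent is only one of several signals, so changing it alone does not make a browser undetectable.

```python
def looks_headless(user_agent: str) -> bool:
    """True if the user agent advertises headless Chrome."""
    return "HeadlessChrome" in user_agent


def normalize_user_agent(user_agent: str) -> str:
    """Replace the headless token so the string matches regular Chrome."""
    return user_agent.replace("HeadlessChrome/", "Chrome/")
```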

Browser fingerprinting:

This technique involves collecting identifying data from your system, such as screen size, installed fonts, time zone, and small differences in how your hardware renders graphics, and combining it into a unique hash.

Details such as the media devices available on your system also help the website build a unique fingerprint. As a result, any future scraping activity from that browser can be easily identified and blocked. Avoiding these anti-scraping measures can be a hurdle for businesses that thrive on data.
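The hashing step can be illustrated with a simplified, hypothetical model of a fingerprint: a few browser attributes serialized in a fixed order and hashed with SHA-256. Real fingerprinting scripts collect far more signals, and the attribute names here are made up for the example. Note that the IP address is not an input, so the same fingerprint follows a browser from one proxy to another.

```python
import hashlib
import json
from typing import Any, Dict


def fingerprint(attributes: Dict[str, Any]) -> str:
    """Stable SHA-256 hex digest of browser attributes."""
    # sort_keys makes the hash independent of the order attributes were collected in.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()
```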

There are two solutions to this problem. The first is to keep trying different methods, also called trial and error, which is time-consuming, frustrating, and has little chance of success.

The second is to use professional services that make the data extraction process simple, easy, and efficient. Even if you need mobile solutions, working with industry-leading experts helps you keep up.

How to Use Rotating Proxies with Headless Browsers: Netnut

Your data extraction activities can be described as successful when you can scrape data using a proxy and headless browser without the server blocking your IP address.

Do you need a reliable proxy for web scraping activities? Netnut is a global solution that provides various proxies to cater to your specific data extraction needs. These proxies serve as intermediaries between your device and the website that holds the data.

Netnut has an extensive network of over 52 million rotating residential proxies in 195 countries and over 250,000 mobile IPs in over 100 countries, which helps it provide exceptional data collection services.

The various proxy solutions are designed to help you overcome the challenges of web scraping for effortless results. These solutions are critical to remain anonymous and prevent being blocked while scraping data from web pages.
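Wiring a proxy into a headless browser is mostly configuration. The sketch below assumes Playwright for Python, whose `launch()` accepts a `proxy` dictionary with `server`, `username`, and `password` keys. The environment variable names are hypothetical, and the actual gateway address and credentials must come from your Netnut account; none are shown here.

```python
import os
from typing import Dict


def proxy_settings(server: str, username: str, password: str) -> Dict[str, str]:
    """Proxy dictionary in the shape Playwright's launch(proxy=...) expects."""
    return {"server": server, "username": username, "password": password}


def proxy_settings_from_env() -> Dict[str, str]:
    # Hypothetical variable names; keeps credentials out of source code.
    return proxy_settings(
        os.environ["PROXY_SERVER"],
        os.environ["PROXY_USERNAME"],
        os.environ["PROXY_PASSWORD"],
    )


def fetch_via_proxy(url: str, proxy: Dict[str, str]) -> str:
    """Load `url` in headless Chromium with all traffic routed through `proxy`."""
    from playwright.sync_api import sync_playwright  # lazy import

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=True, proxy=proxy)
        try:
            page = browser.new_page()
            page.goto(url)
            return page.content()
        finally:
            browser.close()
```

A simple sanity check is to load a page that echoes the visitor's IP address through `fetch_via_proxy` and confirm it shows the proxy's address rather than your own.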

Final Thoughts on Headless Browsers

Data has become a critical part of how organizations operate. Web scraping is a way to obtain this data from various web pages, then analyze and interpret it. Headless scraping extracts data with a browser that runs without a graphical user interface. As a result, it is faster and needs fewer resources to scrape data from multiple websites.

This guide also discussed the advantages and challenges of using a headless browser to scrape data. Instead of depending on trial and error, you can use an API-based solution like Netnut to optimize web data extraction. You get access to proxies that best suit your scraping needs and help you bypass CAPTCHAs and anti-bot protection.

Try Netnut today to enjoy industry-leading data scraping service!

Frequently Asked Questions About Headless Browsers

Why should I use a headless browser with Netnut proxies?

Headless browsers and proxies offered by Netnut provide optimized data scraping activities. Netnut proxy ensures anonymity, so you can extract data from various sources by rotating your IP address to avoid triggering anti-scraping measures on the web page.

Does a headless browser require a specific package?

Yes, you need to install a library that can control the headless browser, such as Puppeteer, Playwright, or Selenium. Some of them, such as Playwright, also download their own browser builds during setup.

How can I select the best proxy plan for data extraction?

Netnut offers various options to cater to your web scraping needs. Factors like degree of anonymity, frequency, scope, and volume of data may play significant roles in deciding the best plan for you. Book a consultation today to get started!

Can Netnut proxy work with other tools apart from headless browsers?

Yes, Netnut proxies can work with other tools aside from headless browsers. Netnut is a versatile platform that offers solutions that can be integrated into various frameworks to optimize web data scraping.
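For example, a plain HTTP client can use the same kind of proxy. This sketch uses only Python's standard library (`urllib.request.ProxyHandler`); the proxy URL, including any `user:password@` credentials, is a placeholder for the values from your account, and `build_proxied_opener` is a hypothetical helper name.

```python
import urllib.request


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """urllib opener that sends both HTTP and HTTPS traffic through `proxy_url`."""
    # Credentials may be embedded as http://user:password@host:port.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)
```

Calling `build_proxied_opener(...).open("https://example.com", timeout=10).read()` then fetches the page through the proxy, although, unlike a headless browser, it does not execute JavaScript.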