Introduction

Google Jobs is a powerful aggregator that gathers job listings from various sources in one place, making it easier for job seekers to find relevant opportunities. The feature is built into Google Search and is part of the platform's broader goal of making information universally accessible to people anywhere in the world.

It also gives job seekers a convenient way to find on-site, remote, and hybrid opportunities from home. When you search for a specific job title, Google Jobs displays relevant listings, and filters such as job type, location, and date posted help you narrow down the results.

This guide examines how to scrape Google Jobs listings with Python and the NetNut Scraper API. We will also explore common challenges and explain why you need NetNut proxies.

Let us dive in!

Benefits of Scraping Google Jobs Listings Data

Here are some reasons why you may want to scrape Google Jobs listings data:

Analyze trends

One of the main reasons to scrape Google Jobs listings is the insight they provide into hiring trends. You can analyze the data to identify trends in job requirements, hiring practices, and in-demand skills in your field. As a job seeker, you can use these findings to upgrade your skills and stand out among applicants from around the world.

Trend analysis is especially useful if you have multiple skills, because it shows which of them are in highest demand.

Competitive edge

Scraping Google Jobs data also gives job seekers a competitive advantage. It shows you what companies are looking for and how they hire, so you can tailor your profile to the role. Popular postings can attract around 100 applicants within 24 hours, so standing out depends on offering what the company wants, and scraped listings are one way to find out what that is.

Job search optimization

Recruiters can also benefit from scraping Google Jobs data. They can optimize their job listings to be visible on the Google search results page, which helps them reach the target audience and fill the role quickly. Scraped listings also reveal the keywords that competing postings use, which can help make a listing more attractive to high-value candidates.

Competitor analysis

Google Jobs data is also useful for competitor analysis. Businesses can gather information such as compensation, benefits, and other details to gain insight into other companies in their industry.

Likewise, Google Jobs data can be used to analyze trends, hiring practices, and market positioning. Businesses and job seekers can also use the salary information in job listings to benchmark salaries for similar roles.

Research

Scraping Google Jobs listings is also useful for research. A company that wants to thrive in a highly competitive environment needs to make data-backed decisions. Job data helps the hiring team understand the demand for a given skill, average pay, job market dynamics, and more.

Automate job hunting

As a job seeker, you can scrape Google Jobs listings with Python to automate your search for suitable employment. The data helps you find in-demand skills and relevant openings, and a scraper lets you collect job data that matches your own criteria.

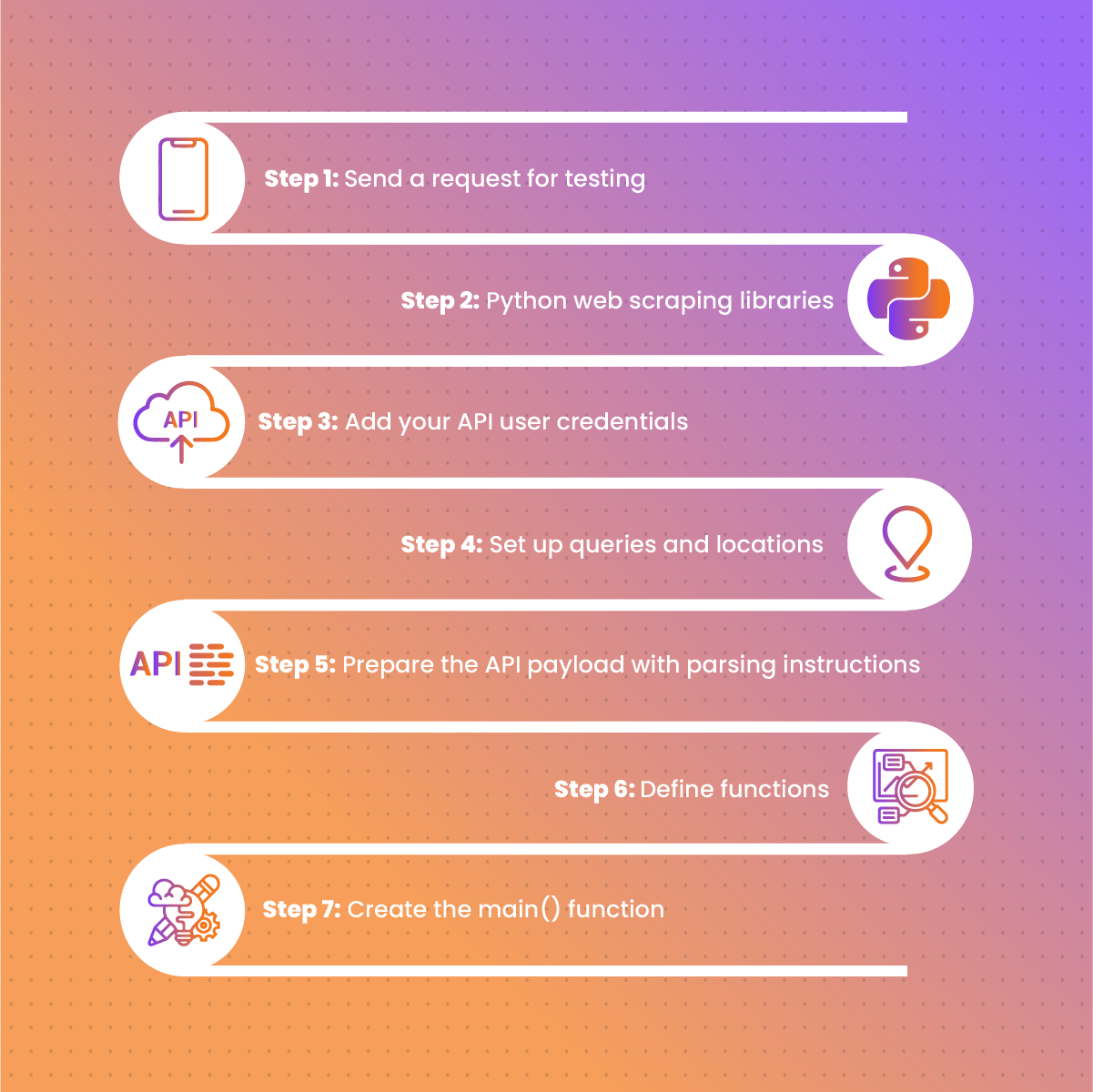

Step-by-Step Guide on How to Scrape Google Jobs Listings With Python

In this section, we examine how to scrape Google Jobs results asynchronously and retrieve publicly available data such as the company name, job title, location, date listed, the platform the job was posted on, and the salary.

Step 1: Send a request for testing

The first step is to visit the NetNut official website and create an account to claim your 1-week free trial. This gives you access to the NetNut SERP Scraper API, which comes with advanced features such as proxy servers, a custom parser, and a headless browser.

Once your NetNut account is set up, download and install Python from its official website.

Copy and save your NetNut API user credentials, as you will need them later for authentication. Then open your terminal and install the Python requests library:

pip install requests

To send a request for testing, run the following code to scrape Google Jobs results and extract the HTML file:

import requests

payload = {
    "source": "google",
    "url": "https://www.google.com/search?q=data%20analyst&ibp=htl;jobs&hl=en&gl=us",
    "render": "html",
}

response = requests.post(
    "https://serp-api.netnut.io/search",
    auth=("USERNAME", "PASSWORD"),  # Replace with your API user credentials (sent as HTTP Basic auth)
    json=payload,
)

print(response.json())
print(response.status_code)

Wait for the code to finish running. You should get a JSON response containing the HTML results, followed by the status code of your request. A status code of 200 means the request succeeded.

Step 2: Python web scraping libraries

There are several Python web scraping frameworks you can use to scrape Google Jobs listings. For this guide, we will use the asyncio and aiohttp libraries to make asynchronous requests to the API, plus the json and pandas libraries to handle JSON data and save the results in CSV format.

asyncio and json ship with Python's standard library, so you only need to install aiohttp and pandas. Open the terminal and run:

pip install aiohttp pandas

Now, you can import them into your Python file via:

import asyncio, aiohttp, json, pandas as pd

from aiohttp import ClientSession, BasicAuth

Step 3: Add your API user credentials

Create the API user credentials variable with BasicAuth, the object aiohttp expects for authentication:

credentials = BasicAuth("USERNAME", "PASSWORD")  # Replace with your API user credentials
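Hard-coding credentials in a script makes them easy to leak. As a minimal sketch, assuming you export them as environment variables named NETNUT_USERNAME and NETNUT_PASSWORD (hypothetical names chosen for this example), you could load them like this:

```python
import os


def load_credentials() -> tuple:
    """Read API credentials from environment variables instead of hard-coding them."""
    # Hypothetical variable names; use whatever names suit your setup.
    username = os.environ.get("NETNUT_USERNAME")
    password = os.environ.get("NETNUT_PASSWORD")
    if not username or not password:
        raise RuntimeError("Set NETNUT_USERNAME and NETNUT_PASSWORD before running the scraper")
    return username, password
```

You would then create the auth object with credentials = BasicAuth(*load_credentials()).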

Step 4: Set up queries and locations

You can form Google Jobs URLs for different queries by changing the q= parameter:

https://www.google.com/search?q=data%20analyst&ibp=htl;jobs&hl=en&gl=us

This lets you scrape job listings for as many search queries as you want. However, we recommend first visiting each URL through a proxy located in your target country to confirm it works there. Google may form URLs differently in some locations, and the resulting pages may not match the CSS and XPath selectors used in this guide.

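These parameters are required for the URL to work:

- q=: the search query

- ibp=htl;jobs: opens the Google Jobs results

- hl=: the interface language (for example, en)

- gl=: the two-letter country code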
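For illustration, here is a small helper (build_google_jobs_url is a hypothetical name, not part of any API) that assembles these parameters with Python's standard urllib.parse module, so queries containing spaces are encoded correctly:

```python
from urllib.parse import urlencode


def build_google_jobs_url(query: str, country_code: str, language: str = "en") -> str:
    """Build a Google Search URL that opens the Jobs results for `query`."""
    params = {
        "q": query,          # search query
        "ibp": "htl;jobs",   # opens the Google Jobs results
        "hl": language,      # interface language
        "gl": country_code,  # country for localized results
    }
    # safe=";" keeps the semicolon in htl;jobs unescaped; spaces become "+"
    return "https://www.google.com/search?" + urlencode(params, safe=";")


print(build_google_jobs_url("data analyst", "us"))
# https://www.google.com/search?q=data+analyst&ibp=htl;jobs&hl=en&gl=us
```

You could call a helper like this from your scraping function instead of building the URL with an f-string.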

URL parameters

Create the URL_parameters list to store your search queries:

URL_parameters = ["data", "analyst"]

Locations

Create the locations dictionary, where each key is a two-letter country code and each value is a list of geo-location parameters. This dictionary is used to form the API payload dynamically and localize Google Jobs results for each location. The two-letter country code also sets the gl= parameter in the Google Jobs URL:

locations = {
    "us": ["United States"],
    "uk": ["United Kingdom"],
    "jp": ["Japan"],
}


Step 5: Prepare the API payload with parsing instructions

The Google Jobs Scraper API takes its scraping instructions from a payload dictionary, which makes it the most important configuration to adjust. The url and geo_location keys are set to None because the scraper fills in these values for each search query and location. The "render": "html" parameter enables JavaScript rendering and returns the rendered HTML:

payload = {
    "source": "google",
    "url": None,
    "geo_location": None,
    "user_agent_type": "desktop",
    "render": "html",
}
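Because the scraper later submits many jobs concurrently, it is safer to fill the None slots on a copy of this template than to modify one shared dictionary in place. A minimal sketch, with BASE_PAYLOAD and payload_for as hypothetical names:

```python
import copy

# Template with the same keys as the payload above; url and geo_location are filled per job.
BASE_PAYLOAD = {
    "source": "google",
    "url": None,
    "geo_location": None,
    "user_agent_type": "desktop",
    "render": "html",
}


def payload_for(url: str, location: str, base: dict = BASE_PAYLOAD) -> dict:
    """Return a fresh payload for one query/location without mutating `base`."""
    job_payload = copy.deepcopy(base)  # deep copy also protects nested parsing_instructions
    job_payload["url"] = url
    job_payload["geo_location"] = location
    return job_payload
```

Each concurrent task then works on its own dictionary, so one task cannot overwrite another task's URL before the request is sent.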

Next, use the Custom Parser to define your parsing logic with CSS or XPath selectors and extract the data you need. You can add as many functions as you like to retrieve more data points than this guide covers.

Open the Google Jobs URL in your browser and open Developer Tools by pressing Ctrl+Shift+I on Windows or Option+Command+I on macOS. To open a search bar in the Elements panel and test selector expressions, press Ctrl+F or Command+F.

The job listings are <li> elements wrapped in a <ul> tag. Since the Google Jobs page contains more than one <ul> list, you can build an XPath selector that points to the div element containing the target list:

//div[@class='nJXhWc']//ul/li
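You can also sanity-check the selector logic offline. The sketch below runs the same expression against a small hypothetical snippet using Python's built-in xml.etree.ElementTree, which supports a limited subset of XPath. ElementTree needs well-formed markup, so for real Google pages you would use an HTML parser such as lxml instead; the nJXhWc class name comes from this guide and may have changed since it was written.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup; real Google Jobs pages are larger and change often.
SAMPLE_HTML = """
<html><body>
  <div class="other"><ul><li>Not a job</li></ul></div>
  <div class="nJXhWc">
    <ul>
      <li>Data Analyst - Acme</li>
      <li>Junior Analyst - Globex</li>
    </ul>
  </div>
</body></html>
"""

root = ET.fromstring(SAMPLE_HTML.strip())
# ElementTree paths are relative, hence the leading "." before the guide's selector.
jobs = root.findall(".//div[@class='nJXhWc']//ul/li")
print([li.text for li in jobs])  # ['Data Analyst - Acme', 'Junior Analyst - Globex']
```

The <li> inside the other div is ignored because the class predicate restricts the search to the target list.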

This selector locates all job listings in the HTML. Set the parse key to True in the payload dictionary and add the parsing_instructions parameter with a jobs function:

payload = {
    "source": "google",
    "url": None,
    "geo_location": None,
    "user_agent_type": "desktop",
    "render": "html",
    "parse": True,
    "parsing_instructions": {
        "jobs": {
            "_fns": [
                {
                    "_fn": "xpath",
                    "_args": ["//div[@class='nJXhWc']//ul/li"],
                }
            ],
        }
    },
}

Next, add the _items iterator, which loops over the jobs list and retrieves the details of each listing:

payload = {
    "source": "google",
    "url": None,
    "geo_location": None,
    "user_agent_type": "desktop",
    "render": "html",
    "parse": True,
    "parsing_instructions": {
        "jobs": {
            "_fns": [
                {
                    "_fn": "xpath",  # You can use CSS or XPath
                    "_args": ["//div[@class='nJXhWc']//ul/li"],
                }
            ],
            "_items": {
                "data_point_1": {
                    "_fns": [
                        {
                            "_fn": "selector_type",  # Placeholder: CSS or XPath
                            "_args": ["selector"],  # Placeholder: your selector
                        }
                    ]
                },
                "data_point_2": {
                    "_fns": [
                        {
                            "_fn": "selector_type",
                            "_args": ["selector"],
                        }
                    ]
                },
            },
        }
    },
}

Alternatively, you can store the full payload, with a separate function for each data point within the _items iterator, in a payload.json file and load it with the following lines of code:

with open("payload.json", "r") as f:
    payload = json.load(f)
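Rather than writing every data point by hand, you could generate the _items block from a mapping of field names to selectors and save it as payload.json. In this sketch the helper names are hypothetical, the field names match the keys save_to_csv reads later in this guide, each field reuses the "xpath" function shown above, and the selectors are placeholders you must replace with ones you have verified in Developer Tools:

```python
import json

# Field names match the keys that save_to_csv reads from each parsed job.
CSV_FIELDS = ("job_title", "company_name", "location", "date", "salary", "posted_via", "URL")


def xpath_fn(selector: str) -> dict:
    """Wrap one XPath selector in the `_fns` structure used by the payload above."""
    return {"_fns": [{"_fn": "xpath", "_args": [selector]}]}


def build_parsing_instructions(list_selector: str, fields: dict) -> dict:
    """Select all listings, then extract each field from every listing via `_items`."""
    jobs = xpath_fn(list_selector)
    jobs["_items"] = {name: xpath_fn(sel) for name, sel in fields.items()}
    return {"jobs": jobs}


# Placeholder selectors: replace each one with a selector you verified yourself.
fields = {name: f".//PLACEHOLDER_{name.upper()}_SELECTOR" for name in CSV_FIELDS}

payload = {
    "source": "google",
    "url": None,
    "geo_location": None,
    "user_agent_type": "desktop",
    "render": "html",
    "parse": True,
    "parsing_instructions": build_parsing_instructions("//div[@class='nJXhWc']//ul/li", fields),
}

with open("payload.json", "w") as f:
    json.dump(payload, f, indent=2)
```

Keeping the field names in one tuple makes it harder for the parser output and the CSV columns to drift apart.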

Step 6: Define functions

Next, define the functions the scraper needs to submit jobs, check their status, retrieve results, and save them.

Submit job

Define an async function called submit_job that takes a ClientSession and the payload and submits a scraping job to the NetNut API with a POST request. It returns the ID of the submitted job:

async def submit_job(session: ClientSession, payload):
    async with session.post(
        "https://serp-api.netnut.io/",
        auth=credentials,
        json=payload,
    ) as response:
        return (await response.json())["id"]

Check job status

Create another async function that takes the job_id and returns the status of the scraping job from the response:

async def check_job_status(session: ClientSession, job_id):
    async with session.get(f"https://serp-api.netnut.io/{job_id}", auth=credentials) as response:
        return (await response.json())["status"]

Get job results

Next, create an async function that retrieves the scraped and parsed results. The response is JSON that contains both the API job details and the scraped content, so you reach the job listings by walking through the nested properties:

async def get_job_results(session: ClientSession, job_id):
    async with session.get(f"https://serp-api.netnut.io/{job_id}/results", auth=credentials) as response:
        return (await response.json())["results"][0]["content"]["jobs"]
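If a job fails or the parser finds nothing, the chained lookups in get_job_results raise KeyError or IndexError. A defensive variant, assuming the same results, content, jobs structure shown above (extract_jobs is a hypothetical helper):

```python
def extract_jobs(response_json: dict) -> list:
    """Return the parsed job list, or an empty list if the expected keys are missing."""
    # Assumes the results -> [0] -> content -> jobs layout used in get_job_results
    try:
        return response_json["results"][0]["content"]["jobs"] or []
    except (KeyError, IndexError, TypeError):
        return []
```

Inside get_job_results you would then return extract_jobs(await response.json()), so a single empty result does not crash the whole batch.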

Save scraped data in CSV format

Define another async function to save the scraped and parsed data to a CSV file. Since pandas is synchronous, use asyncio.to_thread() (available in Python 3.9 and later) to run df.to_csv in a separate thread so it doesn't block the event loop:

async def save_to_csv(job_id, query, location, results):
    print(f"Saving data for {job_id}")
    data = []
    for job in results:
        data.append({
            "Job title": job["job_title"],
            "Company name": job["company_name"],
            "Location": job["location"],
            "Date": job["date"],
            "Salary": job["salary"],
            "Posted via": job["posted_via"],
            "URL": job["URL"],
        })
    df = pd.DataFrame(data)
    filename = f"{query}_jobs_{location.replace(',', '_').replace(' ', '_')}.csv"
    await asyncio.to_thread(df.to_csv, filename, index=False)
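save_to_csv raises KeyError if a listing lacks any field, which is common for salary. The sketch below (hypothetical helper names) fills missing fields with empty strings, builds a filesystem-safe filename, and swaps pandas for the standard csv module:

```python
import csv
import re

# CSV column header -> key in each parsed job (same mapping as save_to_csv)
CSV_COLUMNS = {
    "Job title": "job_title",
    "Company name": "company_name",
    "Location": "location",
    "Date": "date",
    "Salary": "salary",
    "Posted via": "posted_via",
    "URL": "URL",
}


def to_rows(results: list) -> list:
    """Map parsed jobs to CSV rows, using "" for fields the parser did not return."""
    return [{col: job.get(key, "") for col, key in CSV_COLUMNS.items()} for job in results]


def csv_filename(query: str, location: str) -> str:
    """Replace any run of characters other than letters, digits, '_' and '-' with '_'."""
    return re.sub(r"[^\w-]+", "_", f"{query}_jobs_{location}") + ".csv"


def write_csv(path: str, rows: list) -> None:
    """Write rows with a header line; newline="" is what the csv module expects."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.DictWriter(f, fieldnames=list(CSV_COLUMNS))
        writer.writeheader()
        writer.writerows(rows)
```

From async code, you could call it as await asyncio.to_thread(write_csv, csv_filename(query, location), to_rows(results)).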

Scrape Google Jobs

Now create another async function that builds the Google Jobs URL and payload dynamically from its parameters. It calls submit_job to send the request to the API and stores the returned job_id, then runs a while True loop that calls check_job_status until the API has finished scraping. Finally, it calls get_job_results and save_to_csv:

async def scrape_jobs(session: ClientSession, query, country_code, location):
    URL = f"https://www.google.com/search?q={query}&ibp=htl;jobs&hl=en&gl={country_code}"

    # Copy the shared payload so concurrent tasks don't overwrite each other's url and geo_location
    job_payload = {**payload, "url": URL, "geo_location": location}

    job_id = await submit_job(session, job_payload)

    await asyncio.sleep(15)
    print(f"Checking status for {job_id}")

    while True:
        status = await check_job_status(session, job_id)
        if status == "done":
            print(f"Job {job_id} done. Retrieving {query} jobs in {location}.")
            break
        elif status == "failed":
            print(f"Job {job_id} encountered an issue. Status: {status}")
            return
        await asyncio.sleep(5)

    results = await get_job_results(session, job_id)
    await save_to_csv(job_id, query, location, results)
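The while True loop above keeps polling forever if a job never leaves the pending state. A bounded alternative, sketched below with a hypothetical wait_for_job helper, gives up after a fixed number of attempts; the "done" and "failed" status strings are taken from the code above, and the interval and attempt count are arbitrary defaults:

```python
import asyncio
from typing import Awaitable, Callable


async def wait_for_job(
    get_status: Callable[[], Awaitable[str]],
    interval: float = 5.0,
    max_attempts: int = 60,
) -> str:
    """Poll `get_status` until it returns "done" or "failed", or give up with "timeout"."""
    for _ in range(max_attempts):
        status = await get_status()
        if status in ("done", "failed"):
            return status
        await asyncio.sleep(interval)  # wait before asking again
    return "timeout"
```

Inside scrape_jobs you could write status = await wait_for_job(lambda: check_job_status(session, job_id)) and then branch on the returned value.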

Step 7: Create the main() function

At this stage, you have written 90% of the code. All that's left is to pull everything together in an async function called main() that creates an aiohttp session, builds a list of tasks to scrape jobs for each combination of location and query, and runs them concurrently with asyncio.gather():

async def main():
    async with aiohttp.ClientSession() as session:
        tasks = []
        for country_code, location_list in locations.items():
            for location in location_list:
                for query in URL_parameters:
                    task = asyncio.ensure_future(scrape_jobs(session, query, country_code, location))
                    tasks.append(task)
        await asyncio.gather(*tasks)
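asyncio.gather() starts every task at once, which can mean many simultaneous API jobs as you add queries and locations. One way to cap concurrency is an asyncio.Semaphore; gather_limited is a hypothetical helper and the default limit of 5 is an arbitrary example:

```python
import asyncio


async def gather_limited(coros, limit: int = 5):
    """Run coroutines concurrently, but at most `limit` at a time; results keep input order."""
    semaphore = asyncio.Semaphore(limit)

    async def run(coro):
        async with semaphore:  # waits here while `limit` coroutines are already running
            return await coro

    return await asyncio.gather(*(run(c) for c in coros))
```

In main(), you could pass it the scrape_jobs(...) coroutines directly instead of wrapping each in ensure_future and calling asyncio.gather(*tasks).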

Finally, run main(). asyncio.run() creates a new event loop, runs main() until it completes, and then closes the loop:

if __name__ == "__main__":
    asyncio.run(main())
    print("Completed!")

Combine these snippets into a single Python file, and your scraper will be ready to extract data from Google Jobs listings.

Challenges Associated with Scraping Google Jobs Listings with Python

Here are some challenges you may encounter when scraping Google Jobs listings with Python:

Anti-scraping measures

Google uses anti-scraping measures to prevent bots from accessing and scraping its search results, and since Google Jobs is a feature of Google Search, the same measures apply to it. These include IP blocks, especially when an unusual amount of traffic comes from your IP address. Unusual traffic can also trigger a CAPTCHA test, which is designed to tell humans apart from bots. A scraper usually cannot solve the CAPTCHA, which can then lead to an IP block.

Rate limiting

Rate limiting is another significant challenge when scraping Google Jobs listings with Python. Google limits the number of requests you can send within a specific time frame, which makes it difficult to scrape a large number of listings quickly from the same IP address.
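On the client side, a common way to stay within rate limits is to retry a rejected request (for example, one answered with HTTP 429 Too Many Requests) after exponentially growing waits. This sketch only computes the waits; the base, cap, and "full jitter" randomization are illustrative choices, and backoff_delays is a hypothetical name:

```python
import random


def backoff_delays(retries: int, base: float = 1.0, cap: float = 60.0, jitter: bool = True):
    """Yield wait times in seconds for successive retries: base * 2**attempt, capped."""
    for attempt in range(retries):
        delay = min(cap, base * 2 ** attempt)
        # Full jitter: pick a random wait up to the exponential delay so clients don't retry in sync
        yield random.uniform(0, delay) if jitter else delay


print(list(backoff_delays(5, jitter=False)))  # [1.0, 2.0, 4.0, 8.0, 16.0]
```

You would sleep for each yielded delay before the next retry and stop once a request succeeds. Backoff slows a single client down; it does not raise the limit itself.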

Dynamic content

Dynamic content is another challenge. Google Jobs listings are usually generated dynamically with JavaScript, so a basic scraper that only downloads the raw HTML may fail to retrieve all the data, leaving you with incomplete results. Google also changes its page structure from time to time, so you need to stay familiar with the site and update your scraping code as necessary.

Legal and ethical concerns

The legal status of web scraping remains a grey area. If your scraping activities violate Google's terms of service, you may face legal consequences.

Another aspect is the ethical retrieval and use of data. Although some data is publicly available, using it without proper credit to the source is unethical and may lead to legal issues. Therefore, before you start scraping Google Jobs listings with Python, familiarize yourself with Google's Terms of Service and its robots.txt file.
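Python's standard library can evaluate robots.txt rules for you. The rules below are hypothetical, not Google's actual file; to check a real site, you would call set_url() with its robots.txt URL and then read() instead of parse():

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration only.
SAMPLE_ROBOTS = """
User-agent: *
Disallow: /search
Allow: /about
""".splitlines()

parser = RobotFileParser()
parser.parse(SAMPLE_ROBOTS)

print(parser.can_fetch("*", "https://www.example.com/search?q=jobs"))  # False
print(parser.can_fetch("*", "https://www.example.com/about"))  # True
```

Checking can_fetch() before each request is a simple way to keep a scraper within the rules a site publishes.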

Choosing the Best Proxy Server: NetNut

The most common challenge when scraping Google Jobs listings with Python is IP blocks. Therefore, you need premium proxies to avoid this problem and ensure uninterrupted data retrieval.

NetNut is a global proxy provider with an extensive network of over 85 million rotating residential proxies in 200 countries and over 250,000 mobile IPs in over 100 countries. Here are some of the reasons why proxies are crucial to the success of your Google Jobs scraping activities:

Anonymity

Privacy and security have become a source of worry for many internet users. Therefore, one of the best practices for scraping a website is to use a proxy to hide your actual IP address to avoid IP blocks. The implication of an IP block is that you cannot access the particular website until a later time. Since proxies route your network traffic through a different server, the target website cannot capture your real IP address. As a result, if your proxy IP gets banned, you can use another one to ensure uninterrupted access to data and maintain anonymity.

IP rotation

Rotating residential proxies are among the best options for IP rotation. When choosing a proxy provider, select one with a large IP pool so you can rotate IP addresses regularly and avoid IP bans without compromising the efficiency of your scraping or the privacy of your online identity. IP rotation is one of the best practices for scraping large volumes of data from any website, as it helps shield you from anti-scraping measures that may hinder your activities.
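If you manage your own list of proxy endpoints rather than relying on a provider's automatic rotation, a simple round-robin rotation can be sketched with itertools.cycle. The proxy URLs are hypothetical placeholders, next_proxy_config is a hypothetical helper, and the returned dictionary follows the proxies mapping format the requests library accepts:

```python
from itertools import cycle

# Hypothetical endpoints; substitute the host, port, and credentials from your provider.
PROXIES = [
    "http://user:pass@proxy1.example.com:8080",
    "http://user:pass@proxy2.example.com:8080",
    "http://user:pass@proxy3.example.com:8080",
]

proxy_pool = cycle(PROXIES)  # endless round-robin iterator over the list


def next_proxy_config() -> dict:
    """Return a requests-style proxies mapping that uses the next proxy in the pool."""
    proxy = next(proxy_pool)
    return {"http": proxy, "https": proxy}


# Usage with requests (not executed here):
# requests.get(url, proxies=next_proxy_config(), timeout=30)
```

Round-robin spreads requests evenly across the pool; a managed rotating proxy does the same on the provider's side without any client code.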

Bypass rate limits

Another reason you need proxies for scraping Google Jobs listings is to bypass rate limits. Rate limits are restrictions a website imposes so you can't send more than a set number of requests within a time frame. Some organizations need real-time data, and rate limits can get in their way. Since proxies act as intermediaries between your device and the target website, you can regularly change your IP so that you appear as a new user. NetNut offers automatic IP rotation to ensure you don't meet roadblocks in your scraping activities.

Bypass geographical restrictions

NetNut offers proxies in many locations, so you can easily bypass geographical restrictions to extract the data you need. When you send a request to a website, it sees your IP address and can determine your location. Some websites block traffic from certain locations for various reasons. Proxies provide geographical flexibility because your real IP address is masked, so the website cannot determine your actual location. Instead, it perceives the request as coming from the location of the proxy IP.

Scalability

Scalability is a crucial aspect of web scraping, and it is one of the main reasons to use a premium proxy like NetNut. NetNut proxies are highly scalable and fast, so your data retrieval can be completed in a few minutes, and we guarantee 99.9% uptime. Distributing your scraping requests across multiple IP addresses also lets you increase the number of simultaneous connections without overloading a single IP address.

Conclusion

This guide has examined how to scrape Google Jobs listings with Python. With the NetNut Scraper API, you are guaranteed fast and effective data retrieval. Data from Google Jobs can be used to analyze trends, gain a competitive advantage, automate job hunting, optimize job listings, and more.

Rate limiting and various anti-scraping measures are some of the challenges associated with scraping Google Jobs listings data. However, you can overcome these limitations with premium proxy servers, which route your network traffic to help prevent IP blocks and ensure uninterrupted data retrieval.

Contact us today to get started!

Frequently Asked Questions

What are the components of Google Jobs listings?

- Job title: This indicates the role associated with the job listing.

- Job summary: This component gives an overview of what to expect from the job and what the organization expects from you.

- Location: This may indicate the physical address of the company or the location requirement (remote, hybrid, or on-site).

- Qualifications: It includes the education, skills, and experience required for the job position.

- Benefits: This component of Google Jobs listings indicates the salary and other benefits that come with a specific job.

- Responsibilities: This outlines the specific tasks you are expected to perform if you get the job.

What are the legal and ethical considerations regarding scraping Google Jobs listings data?

It is crucial to consider the legal and ethical implications of your scraping activities. Respect the website's robots.txt file and adhere to Google's terms of service to ensure your activities are ethical.

Bear in mind that ethical scraping goes beyond compliance with the terms and conditions. It involves ensuring that your scraping activities are not a source of problems for the website. Web data extraction can become a problem when the requests are too frequent, which can affect the server’s performance. In addition, it is crucial to handle data respectfully and prioritize transparency to ensure balanced ethical standards.

Why use Python to scrape Google Jobs listings?

Python is one of the most popular programming languages for scraping websites, for several reasons:

- It has a clear and simple syntax, which makes it easy to use

- Python has powerful web scraping libraries that are open-source, and this ensures free access by all

- It can handle various data formats like JSON, XML, and HTML.

- Seamless integration with data analysis and visualization tools for efficient data management.

- Python has libraries that support parallel processing, which makes web scraping faster

- A large and active community that offers support as necessary