Introduction

ZoomInfo, based in Vancouver, Washington, is a company that offers a subscription-based service providing access to company profiles and business contacts. The platform was founded in 2007 by Kirk Brown and Henry Schuck as DiscoverOrg and was later renamed ZoomInfo. The company's original mission was to provide contact data for businesses, but it has since expanded to offer business intelligence data to a wide range of organizations.

ZoomInfo collects data, including contact information, from thousands of subscribers. You can therefore use a ZoomInfo scraper to collect data for generating leads and finding potential customers. In addition, the platform offers tools and services that businesses can use to support customer relationship management (CRM) and lead generation.

This guide will dive into how a ZoomInfo scraper works, its significance, best practices for using it, common challenges, and why you should use NetNut proxies.

About ZoomInfo

ZoomInfo is a B2B intelligence platform where businesses can find and interact with buyers. Recruitment, sales, account management, enterprise, and marketing teams can all use it. ZoomInfo is an open directory of information on over 50 million business professionals and over 5 million businesses.

Businesses can use it to find prospects, message them, and search for company leads. As a result, ZoomInfo has become a source of real-time data that can significantly influence critical business decisions. You can also use it to track your target audience and capture more leads through web form optimization.

ZoomInfo is also an excellent tool for data management. It helps keep your data compliant with GDPR and CCPA, and you can synchronize your communications with your CRM and add new and existing leads.

ZoomInfo is not a free platform, so you have to pay for one of its three tiers. However, the entry-level Professional plan offers a free trial that lets you use the platform before making any payment. The Advanced and Elite plans do not include a free trial.

ZoomInfo is also a data hub: it compiles data from sources such as annual reports, public records, business websites, and others, and makes it available to subscribers.

How to Scrape ZoomInfo with Python

In this section, we shall examine how to build a ZoomInfo scraper with Python. Let us dive in.

Install Python and supporting software

The first step is to download and install the latest version of Python on your device. You also need a code editor to create, modify, and save program files. Most code editors also highlight syntax errors as you type, giving you a development environment that improves your productivity and makes building a ZoomInfo scraper more efficient.

Be sure to read the official documentation on how to install Python and any code editor you choose. They come with explanations that may be useful in helping you solve some challenges associated with building a ZoomInfo scraper.

Install Python web scraping libraries

Python web scraping libraries are central to building a ZoomInfo scraper. Since there are several Python web scraping libraries, you need to study them extensively to understand the best option for you.

The Requests library (installed with pip install requests) makes the HTTP connection to the ZoomInfo page and downloads its raw HTML. Once you send a GET request, the page body is available through the content (raw bytes) or text (decoded string) attribute of the response. Requests supports all the common HTTP methods, including GET, POST, PUT, and DELETE, and it is simple and easy to understand, especially for beginners in Python web scraping and working with APIs. It also builds query strings for you from a params dictionary, so you rarely need to add them to the URL by hand. In addition, it supports authentication and handles cookies efficiently.

Another library we can use to build the ZoomInfo scraper is BeautifulSoup (installed with pip install beautifulsoup4), a powerful tool for parsing HTML and XML documents. It extracts the data from the raw HTML obtained with Requests. It provides the tools you need to navigate, search, and modify the parse tree, so you can traverse the DOM and retrieve data from it. One valuable feature of BeautifulSoup is its encoding detection, which helps it produce usable results even on pages that do not declare their character encoding, and it copes well with poorly formatted HTML.

Define target page

The next step in building a ZoomInfo scraper is to define your target ZoomInfo page, which means identifying the kind of information you want to collect. In the script, a target_url variable holds the URL of the web page to be scraped. Bear in mind that the scraper is a bot, so it can be detected and blocked by anti-scraping measures such as CAPTCHAs and browser fingerprinting. Later in this guide, we examine best practices to help you avoid common anti-scraping techniques.

Inspect ZoomInfo

While you may be tempted to go straight to writing code, inspecting the website first is crucial. After selecting the target page, interact with it like a regular user to get familiar with the interface, and read the terms and conditions. You also need to inspect the page's HTML structure, because it determines where each piece of data sits and therefore how your scraper locates it.

Browsers usually include tools for inspecting a webpage. In Chrome, for example, click the three dots in the top-right corner, select ‘More Tools’, and then click ‘Developer Tools’. macOS users can also open it from the menu bar via ‘View’ > ‘Developer’ > ‘Developer Tools’.

In the Developer Tools panel, open the “Elements” tab to explore the content of the website. It shows the page's DOM (Document Object Model), including elements that have a class or ID name. Take note of these class and ID names, because your ZoomInfo scraper uses them to locate the data. The HTML gives an overview of how the developer structured the website's content.
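To see how the class and ID names you note in the Elements tab translate into code, here is a minimal sketch using BeautifulSoup's CSS selector support. The HTML snippet, the search-results ID, and the company_links() helper are hypothetical; the tableRow_companyName_nameAndLink class name is borrowed from the directory scraper in this guide, and ZoomInfo's real markup may differ.

```python
from bs4 import BeautifulSoup

# Hypothetical markup for illustration; ZoomInfo's real HTML may differ.
SAMPLE_HTML = """
<div id="search-results">
  <div class="tableRow_companyName_nameAndLink">
    <a href="/c/acme-realty/123">Acme Realty</a>
  </div>
  <div class="tableRow_companyName_nameAndLink">
    <a href="/c/bayside-homes/456">Bayside Homes</a>
  </div>
</div>
"""


def company_links(html: str) -> list[tuple[str, str]]:
    """Return (name, href) pairs located via the ID and class names from DevTools."""
    soup = BeautifulSoup(html, "html.parser")
    # "#search-results" matches the element whose id attribute is "search-results".
    container = soup.select_one("#search-results")
    if container is None:
        return []
    # ".tableRow_companyName_nameAndLink > a" matches <a> tags that are direct
    # children of elements carrying that class.
    return [
        (a.get_text(strip=True), a["href"])
        for a in container.select(".tableRow_companyName_nameAndLink > a")
    ]


print(company_links(SAMPLE_HTML))
```

In CSS selector syntax, # targets an ID and . targets a class, so the names you find in Developer Tools can be passed straight to select() or select_one().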

Create a Folder

After installing the Python web scraping packages and other software, create a new folder on your computer to hold all the project files. Saving your code there makes it easy to find and modify later, and keeping everything you need for the ZoomInfo scraper in one place keeps your work organized.

Write the script to build the ZoomInfo scraper

Writing the script is often described as the heart of Python web scraping. It involves giving your code a set of instructions on how to retrieve data from HTML elements on the web page. Start by importing the Python web scraping packages you installed earlier. Next, create a headers dictionary and set the “User-Agent” header to mimic a common web browser, which can help bypass certain restrictions or anti-bot measures on websites.

Do not forget to specify the target URL that the Requests library will use to send an HTTP GET request, and pass the headers parameter so that the User-Agent header is included in the request. You can also pass verify=False to disable SSL certificate verification, which may be necessary when a server uses an invalid or self-signed certificate. However, we recommend keeping certificate verification enabled for better security.

The resp.content attribute holds the raw HTML content of the response, which you can print to the console with the print() function. To confirm that the request succeeded, print resp.status_code: if your code is free from errors and the site did not block you, it should be 200.
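The steps described above can be sketched as follows with Requests, where HEADERS is the headers dictionary and TARGET_URL plays the role of target_url. The User-Agent value and the fetch() helper are illustrative assumptions, not a guaranteed way past ZoomInfo's defenses. verify stays True by default; passing verify=False makes Requests skip certificate checks and emit an InsecureRequestWarning.

```python
import requests

# Hypothetical target; replace with the ZoomInfo page you chose earlier.
TARGET_URL = "https://www.zoominfo.com/"

# Example User-Agent string of a desktop Chrome browser (illustrative value).
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    )
}


def fetch(url: str, verify: bool = True, timeout: float = 15.0) -> requests.Response:
    """Send a GET request with browser-like headers.

    verify=True (the default) checks the server's TLS certificate; only pass
    verify=False for a host with an invalid or self-signed certificate.
    """
    return requests.get(url, headers=HEADERS, verify=verify, timeout=timeout)
```

For example, resp = fetch(TARGET_URL) followed by print(resp.status_code) should print 200 if the request succeeds. Printing resp.content shows the raw HTML, which is the line to comment out once you have confirmed the script works.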

To test the script, open your terminal and run it with Python (for example, python scraper.py, assuming you saved the file as scraper.py). Once you are sure the code works, add a # before the print() line to comment it out. This stops the script from dumping the full HTML to the console every time it runs.

Extract data from ZoomInfo

This is the stage where the ZoomInfo scraper retrieves the data you actually want. To do so, parse the HTML content with a parsing library such as BeautifulSoup. Parsing involves analyzing the HTML structure of a page to understand its elements, and BeautifulSoup provides tools for building and navigating the resulting parse tree.

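The following code uses Requests and BeautifulSoup to scrape a ZoomInfo company directory page and follow its pagination links. The CSS selectors assume ZoomInfo's directory markup uses these class names, so confirm them in Developer Tools first, as they may change:

```python
import json
from typing import List
from urllib.parse import urljoin

import requests
from bs4 import BeautifulSoup

# Browser-like headers; a bare script User-Agent is more likely to be blocked.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
}
BASE_URL = "https://www.zoominfo.com/"


def scrape_directory(url: str, scrape_pagination: bool = True) -> List[str]:
    """Scrape a ZoomInfo directory page and return the company profile links."""
    response = requests.get(url, headers=HEADERS, timeout=15)
    response.raise_for_status()  # raises on 403/429 etc., e.g. when we're blocked

    # Parse the first page of results
    soup = BeautifulSoup(response.text, "html.parser")
    companies = [
        a["href"]
        for a in soup.select("div.tableRow_companyName_nameAndLink > a[href]")
    ]

    # Parse the other pages linked from the pagination bar
    if scrape_pagination:
        for link in soup.select("div.pagination > a[href]"):
            page_url = urljoin(BASE_URL, link["href"])
            companies.extend(scrape_directory(page_url, scrape_pagination=False))

    return companies


data = scrape_directory(
    url="https://www.zoominfo.com/companies-search/location-usa--Miami--los-industry-real-estate"
)
print(json.dumps(data, indent=2, ensure_ascii=False))
```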

Save the scraped data

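Once you have extracted the data, you can store it in your preferred format. The most common formats for data collected with a ZoomInfo scraper are CSV, JSON, or a database. The following code uses pandas (pip install pandas) to write the list of company links to a CSV file:

```python
import pandas as pd

# Write data to CSV; `data` is the list of company links from scrape_directory()
df = pd.DataFrame({"company_url": data})
df.to_csv("listings.csv", index=False)
print("Data written to CSV file")
```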

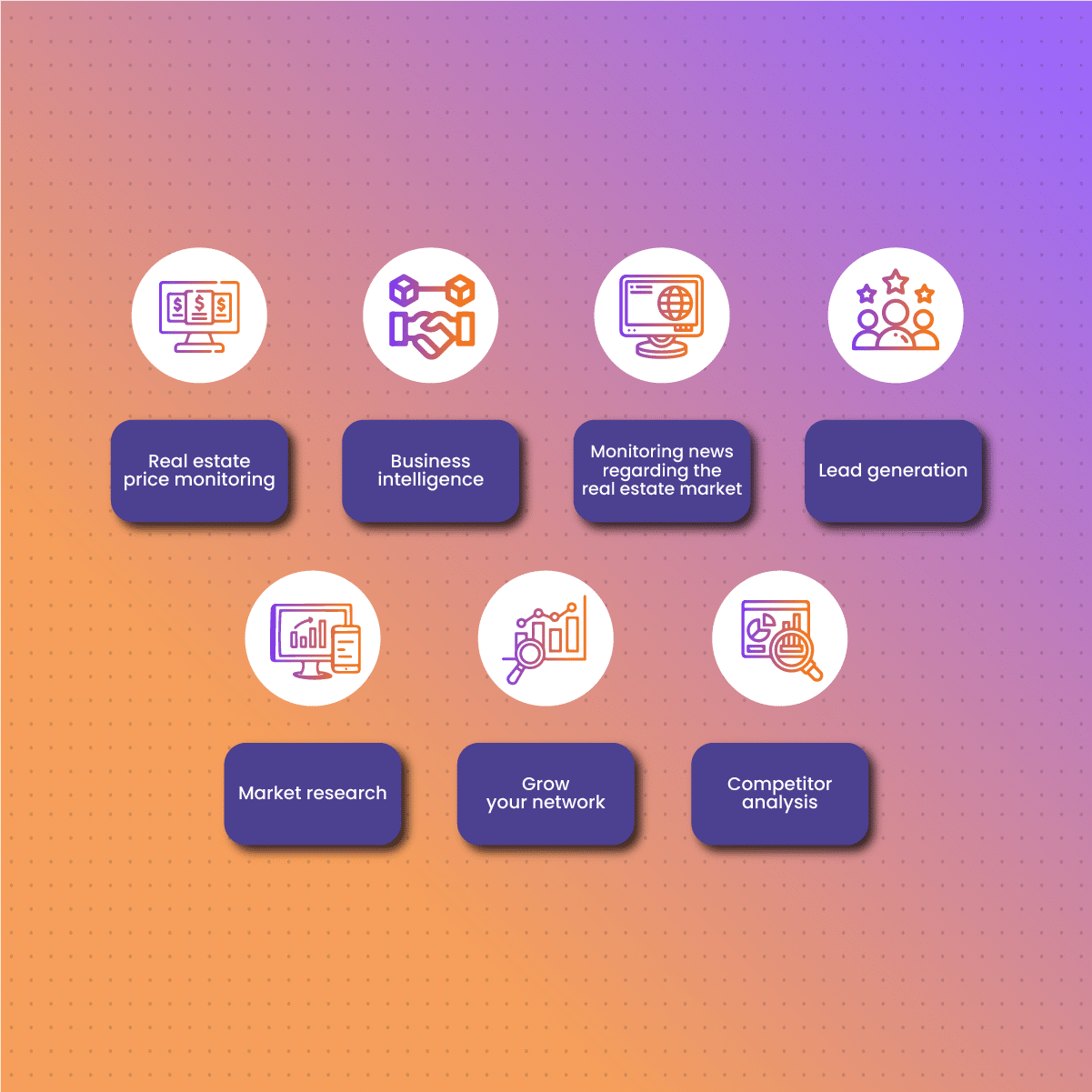
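The pandas example covers CSV. For the other two common formats, here is a minimal standard-library sketch that writes the same list of company links to JSON and to a SQLite database. The file names, the companies table, and both helper functions are hypothetical choices; the url column is a primary key so that re-running the scraper does not insert duplicates.

```python
import json
import sqlite3
from typing import List


def save_json(company_urls: List[str], path: str = "listings.json") -> None:
    """Write the scraped company links to a JSON file."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(company_urls, fh, indent=2, ensure_ascii=False)


def save_sqlite(company_urls: List[str], db_path: str = "listings.db") -> int:
    """Store links in a SQLite table, ignoring duplicates; return the total row count."""
    conn = sqlite3.connect(db_path)
    try:
        with conn:  # commits the transaction on success, rolls back on error
            conn.execute("CREATE TABLE IF NOT EXISTS companies (url TEXT PRIMARY KEY)")
            conn.executemany(
                "INSERT OR IGNORE INTO companies (url) VALUES (?)",
                [(url,) for url in company_urls],
            )
        (count,) = conn.execute("SELECT COUNT(*) FROM companies").fetchone()
        return count
    finally:
        conn.close()
```

Calling save_json(data) and save_sqlite(data), where data is the list returned by scrape_directory(), produces listings.json and listings.db next to your script.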

Application of ZoomInfo Scraper

The data obtained with a ZoomInfo scraper can be applied across various industries for different purposes. Here are some applications of ZoomInfo data:

Real estate price monitoring

Price monitoring is a significant application of data from ZoomInfo. The digital marketplace is constantly evolving, and prices fluctuate for several reasons. As a business owner, you must stay informed about these price changes, and this data helps you continuously adjust your prices to keep a competitive edge.

Scraping therefore becomes an invaluable tool that delivers real-time market data, empowering business owners to make informed pricing decisions. As a result, businesses can strike a balance between profit and competitiveness.

Business intelligence

Another application of a ZoomInfo scraper is business intelligence. You can use it to extract data that informs your business operations and helps you identify potential threats and areas of growth. Scraping ZoomInfo also provides information about key decision-makers within organizations, so marketers can contact the right people to pitch their proposals. It can also help you find business partners who share your vision and collaborate with them for profit.

In addition, scraping ZoomInfo allows you to monitor the performance of sales initiatives. This provides valuable insights into how the public is responding to marketing and sales campaigns, and organizations can use it to refine their sales strategy for better results.

Monitoring news regarding the real estate market

News monitoring is critical for investment decision-making, online public sentiment analysis, and staying abreast of political trends. A single news headline can affect your brand reputation as well as the value of your company's shares.

Data from ZoomInfo provides insights into current reports on your company. This is especially important for companies that are frequently in the news or depend on timely news analysis, which makes it a useful way to monitor, gather, and analyze news from your industry.

Lead generation

Every real estate business needs leads to thrive, but where can they get reliable ones? ZoomInfo is a reliable source for the names, phone numbers, and emails of prospective leads. List building is an integral part of digital marketing: a business cannot thrive without customers, and in this highly competitive market, you cannot always afford to wait for customers to find you.

Instead, you can use ZoomInfo to get the emails of your target audience. Email marketing plays a significant role in raising awareness of a brand's products, services, and discounts. When more people know what your brand offers, sales and total revenue are more likely to increase.

Market research

Data is the cornerstone of every thriving business, so you need data on other companies in your industry to stay ahead of trends. Market research also plays a significant role in business intelligence efforts.

As a result, businesses can gain insights to make necessary adjustments to their operation strategies. This allows them to stay updated, ahead of the competition, and quickly adapt to new trends within the marketplace.

Grow your network

A ZoomInfo scraper allows you to build a solid database for reaching out to professionals in your field. You can customize your search by job title, location, or company size, and extract contact information such as email addresses so you can connect with them.

Connecting with other professionals in your industry keeps you at the forefront of emerging opportunities and best practices you might never discover on your own. It also creates opportunities for mentorship, where sharing your knowledge helps you build a positive reputation.

In addition, growing your network via the use of a ZoomInfo scraper helps to create a community of professionals who think alike, support each other, and collaborate for new and interesting ventures.

Competitor analysis

Extracting data from ZoomInfo provides useful insights into competitor strategies. You can identify companies that are within your industry and analyze data regarding company description, product description, customer reviews, target population, and prices of products and services.

This data can help you deduce the strategies competitors use and how those strategies affect their sales. You can also compare your business against key competitors by studying metrics such as company size, revenue, sales, and market share.

Challenges Associated with Using a ZoomInfo Scraper

The use of a ZoomInfo scraper comes with several challenges, especially when you frequently need large volumes of data. Therefore, it is important to understand these challenges and how you can handle them. Some of these challenges include:

IP ban/block

IP blocks are one of the most common challenges when scraping data from ZoomInfo. Every request you send exposes your IP address, which reveals your approximate location, and the website can combine it with other identifying details such as your browser version, fonts, and screen resolution.

When you send too many requests to a website within a short period, your IP address can be blocked. Your ZoomInfo scraper then stops working, which can put you in a difficult situation if you need timely access to data.

Your IP address can also be blocked because of geographic restrictions on the website, and using an unreliable proxy IP can likewise trigger a ban. However, you can solve the IP block challenge by using a reputable proxy provider like NetNut. It also helps to follow the website's terms of service and add delays between requests to avoid flooding the site.

CAPTCHA challenge

The Completely Automated Public Turing test to tell Computers and Humans Apart, or CAPTCHA, is a common security measure websites use to restrict scraping. A CAPTCHA requires the visitor to solve a puzzle before accessing specific content. It could take the form of a text puzzle or an image recognition task, or it may analyze user behavior in the background.

One solution is to integrate a CAPTCHA solver into your ZoomInfo scraper, although this may slow down web data extraction. Using NetNut proxies is a secure and reliable way to bypass CAPTCHAs.

Rate limiting

Another challenge in using a ZoomInfo scraper is rate limiting: restricting the number of requests a client can make within a given period. Many websites use this technique to protect their servers from large volumes of requests that could slow them down or, in the worst case, crash them.

Therefore, rate limiting slows down the process of extracting data from ZoomInfo. As a result, the efficiency of your scraping operations will be reduced – which can be frustrating when you need a large amount of data in a short period.
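When a site enforces rate limits, it typically answers with HTTP status 429 (Too Many Requests) and may include a Retry-After header giving either a number of seconds or an HTTP date. Whether ZoomInfo sends this header is an assumption. The hypothetical retry_after_seconds() helper below is a minimal sketch that converts either form into a wait time and returns None when the header is absent or unreadable, so your scraper can fall back to its own delay.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_after_seconds(value: Optional[str], now: Optional[datetime] = None) -> Optional[float]:
    """Turn a Retry-After header value into seconds to wait (None if unusable)."""
    if not value:
        return None
    value = value.strip()
    if value.isdigit():  # delay-seconds form, e.g. "120"
        return float(value)
    try:  # HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # older Pythons raise TypeError on bad input
        return None
    if when.tzinfo is None:  # treat a date without a zone as UTC
        when = when.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (when - now).total_seconds())
```

With Requests, you would call it as retry_after_seconds(resp.headers.get("Retry-After")) when resp.status_code == 429.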

Scalability issues

Scalability is another challenge regarding ZoomInfo data extraction. Businesses require a huge amount of data to make informed decisions that help them stay competitive in the industry. Therefore, quickly gathering lots of data from various sources becomes paramount.

However, web pages may fail to respond when they receive too many requests. A human user may not notice, because they can simply refresh the page. A scraper, on the other hand, is often significantly affected by a slow or unresponsive website because it is not programmed to handle such situations, and you may have to restart it manually.
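Instead of restarting the scraper by hand, you can have it retry a failed request automatically, waiting longer after each failure (exponential backoff). The with_retries() helper below is a hypothetical standard-library sketch; which exceptions count as retryable is an assumption you pass in, for example requests.RequestException when the action is a Requests call.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def with_retries(
    action: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 1.0,
    jitter: float = 0.0,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call action(); after each failure wait base_delay * 2**attempt seconds
    (plus up to `jitter` random seconds) and try again.

    The last exception is re-raised once max_attempts calls have failed.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return action()
        except retry_on:
            if attempt == max_attempts - 1:
                raise
            sleep(base_delay * 2 ** attempt + random.uniform(0, jitter))
    raise AssertionError("unreachable")  # the loop always returns or raises
```

For instance, with_retries(lambda: fetch_page(url), retry_on=(requests.RequestException,), jitter=0.5), where fetch_page is a hypothetical function that sends the request and calls response.raise_for_status(). The sleep parameter exists only so the waits can be tested without actually pausing.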

Browser fingerprinting measures

Browser fingerprinting is a technique that collects and analyzes details of your web browser to produce a unique identifier for tracking users. These details may include fonts, screen resolution, keyboard layout, the User-Agent string, cookie settings, browser extensions, and more. By combining many small data points, the website generates a unique digital fingerprint.

Bear in mind that clearing your browser history and cookies does not change this fingerprint, so the website can still recognize a specific user on each visit. Websites use this technique to improve security and personalize the user experience, but it can also identify ZoomInfo scrapers by their fingerprint. To keep browser fingerprinting from interfering with your web data extraction, you can use headless browsers with stealth plugins.

Dynamic webpage content

ZoomInfo scraping primarily involves analyzing the HTML source code. However, modern websites are often dynamic, which poses a challenge to scraping. For example, some websites use client-side rendering technologies such as JavaScript to create dynamic content.

Many modern websites use JavaScript and AJAX to load content after the initial HTML arrives, so that content is missing from the raw HTML that Requests downloads. In that case, you need a headless browser to render the page before you extract and parse the required data. Browser automation tools such as Selenium, Puppeteer, and Playwright can drive a headless browser for this purpose.
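Before reaching for a headless browser, it helps to confirm that the data really is rendered client-side. A minimal standard-library sketch, assuming you already downloaded the raw HTML with Requests: if a class name you saw in Developer Tools never appears in that raw HTML, the content is probably injected later by JavaScript. The ClassFinder and looks_client_rendered() names are hypothetical.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class ClassFinder(HTMLParser):
    """Counts elements whose class attribute includes a given class name."""

    def __init__(self, class_name: str) -> None:
        super().__init__()
        self.class_name = class_name
        self.count = 0

    def handle_starttag(self, tag: str, attrs: List[Tuple[str, Optional[str]]]) -> None:
        for name, value in attrs:
            if name == "class" and value and self.class_name in value.split():
                self.count += 1


def looks_client_rendered(raw_html: str, class_name: str) -> bool:
    """True if the class seen in DevTools is absent from the raw HTML."""
    finder = ClassFinder(class_name)
    finder.feed(raw_html)
    finder.close()
    return finder.count == 0
```

If looks_client_rendered(resp.text, "tableRow_companyName_nameAndLink") returns True for a page where Developer Tools clearly shows that class, switching to a browser automation tool is likely worth the extra setup.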

Practical Tips for Using A ZoomInfo Scraper

We have discussed some of the core challenges of scraping ZoomInfo. Here are some practical tips to optimize the process of extracting data from ZoomInfo:

Use reliable proxy servers

Using reliable proxy servers is one of the most practical tips for effective data extraction from ZoomInfo. As mentioned earlier, IP blocks are a primary challenge in data scraping, and you can avoid them by using proxies to hide your actual IP address. Rotating IPs also keeps the website from noticing that all your scraping requests come from a single location. More generally, proxies help your scraping bot evade anti-scraping measures.
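Requests accepts a proxies dictionary that maps each URL scheme to a proxy URL. A minimal sketch of rotating through a pool of proxies might look like this; the proxy hosts, ports, and credentials are placeholders, not real NetNut endpoints. Some providers instead expose a single gateway address that rotates IPs on their side, in which case a one-entry pool is enough.

```python
from itertools import cycle
from typing import Dict, Iterator, List

# Placeholder endpoints; substitute the host, port and credentials
# issued by your proxy provider.
PROXY_URLS: List[str] = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]


def proxy_rotation(proxy_urls: List[str]) -> Iterator[Dict[str, str]]:
    """Yield proxies dicts for requests, cycling through the pool in order."""
    for url in cycle(proxy_urls):
        # Route both plain HTTP and HTTPS traffic through the same proxy.
        yield {"http": url, "https": url}
```

Create rotation = proxy_rotation(PROXY_URLS) once, then pass proxies=next(rotation) to each requests.get() call along with your headers and timeout.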

Read the robots.txt file

Before you dive into scraping, read the site's robots.txt file. It tells you which pages crawlers may access and which they should avoid, and this information guides how you write the code that automates ZoomInfo data extraction. For example, robots.txt may indicate that scraping a certain page is not allowed. Ignoring these instructions is unethical and may expose you to legal risk.
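Python's standard library ships urllib.robotparser for this check. In the sketch below, the rules in SAMPLE_ROBOTS are illustrative only and are not ZoomInfo's actual robots.txt; in practice you would download the file from the site's /robots.txt path (or call RobotFileParser.read() after set_url()) and feed in its contents. The robots_rules() helper and the my-scraper agent name are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def robots_rules(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text you have already downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    parser.modified()  # record a fetch time; rules treated as unread block every URL
    return parser


# Illustrative rules only, NOT ZoomInfo's real robots.txt.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10
"""

rules = robots_rules(SAMPLE_ROBOTS)
print(rules.can_fetch("my-scraper", "https://www.example.com/private/page"))  # False
print(rules.can_fetch("my-scraper", "https://www.example.com/companies"))     # True
print(rules.crawl_delay("my-scraper"))                                         # 10
```

If a site publishes a Crawl-delay, it is a sensible lower bound for the gap between your requests.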

Review the terms and conditions/web page policies

Apart from reading the robots.txt file, we recommend paying attention to the website policy or terms and conditions. Many people overlook the policy pages because they often align with the robots.txt file. However, they may contain additional information relevant to extracting data from ZoomInfo.

Sometimes, the terms and conditions may have a section that clearly outlines what data you can collect from their web page. Therefore, there may be legal consequences if you do not follow these instructions in your web data extraction.

Limit the number of requests

Having real-time data at your fingertips can be so exciting that you want to gather more and more, so you end up sending too many requests within a short period. This has two major consequences. First, the site may slow down, malfunction, or even crash. Second, the website's anti-scraping measures are triggered, and your IP address is blocked.

Therefore, one practical tip for effective data collection from ZoomInfo is to avoid aggressive scraping, which often results in a lose-lose situation. Instead, build rate limits into your ZoomInfo scraper so that it automatically waits between requests.
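One way to build such a rate limit is a small throttle that enforces a minimum gap, plus optional random jitter, between consecutive requests. This is a hypothetical standard-library sketch; the clock and sleep parameters exist only so the timing can be tested, and suitable interval values for ZoomInfo are an assumption you will need to tune.

```python
import random
import time
from typing import Callable, Optional


class Throttle:
    """Enforce a minimum gap, plus optional random jitter, between requests."""

    def __init__(
        self,
        min_interval: float,
        jitter: float = 0.0,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self.jitter = jitter
        self.clock = clock
        self.sleep = sleep
        self._last: Optional[float] = None  # when the previous request was released

    def wait(self) -> float:
        """Block until the next request may go out; return the seconds slept."""
        now = self.clock()
        delay = 0.0
        if self._last is not None:
            gap = self.min_interval + random.uniform(0, self.jitter)
            delay = max(0.0, self._last + gap - now)
            if delay > 0:
                self.sleep(delay)
        self._last = now + delay
        return delay
```

Create throttle = Throttle(min_interval=3.0, jitter=2.0) once and call throttle.wait() immediately before every request; the random jitter keeps your requests from arriving at a perfectly regular, bot-like rhythm.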

Refine your target data

Scraping data from ZoomInfo usually involves coding, so it is crucial to be specific about the type of data you want to collect. If your instructions are too vague, the scraper may return far more data than you need. Decide in advance which data you need so you are not left with a ton of irrelevant data.

It is therefore worth investing some time in a clear and concise plan for your web data extraction. Refining your target data means you spend less time and fewer resources cleaning the data you collect.
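Writing your plan down as an explicit list of fields makes it enforceable in code. In this minimal sketch, the field names in TARGET_FIELDS and the keep_target_fields() helper are hypothetical examples: every scraped record is reduced to those fields, and any field the page did not provide comes back as None so gaps are easy to spot.

```python
from typing import Any, Dict, Iterable, List, Sequence

# Hypothetical fields for a lead-generation project; choose yours up front.
TARGET_FIELDS = ("company_name", "industry", "city", "employee_count")


def keep_target_fields(
    records: Iterable[Dict[str, Any]], fields: Sequence[str] = TARGET_FIELDS
) -> List[Dict[str, Any]]:
    """Reduce each scraped record to the planned fields, dropping the rest."""
    return [{field: record.get(field) for field in fields} for record in records]
```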

Compliance with data protection protocols

Although some data is publicly available, collecting and using it may still have legal consequences. Therefore, before you begin collecting ZoomInfo data, pay attention to data protection laws.

These laws vary according to your location and the type of data you want to collect. For example, in the European Union, the General Data Protection Regulation (GDPR) requires a lawful basis, such as consent, for collecting and processing personal information. As a result, using web scrapers to gather people's identifying data without such a basis may be unlawful.

Optimizing ZoomInfo Scraper With NetNut Proxy Solution

As mentioned earlier, one of the best tips for effective data scraping from ZoomInfo is to use reliable proxies. A proxy serves as an intermediary between your device and the website you are visiting. Scraping a platform like ZoomInfo without a proxy exposes your real IP address, so your activities can be tracked and tied to a digital fingerprint.

Therefore, it is crucial to choose an industry-leading proxy provider like NetNut. With an extensive network of over 85 million rotating residential proxies in 195 countries and over 250,000 mobile IPs in over 100 countries, NetNut is committed to providing exceptional web data collection services.

NetNut proxies can help you avoid IP bans and continue accessing the data you need. These proxies come with an AI-powered CAPTCHA-solving mechanism to ensure you can easily bypass anti-scraping techniques.

In addition, NetNut proxies allow you to scrape websites from all over the globe. Some websites restrict access by location, which is a challenge for tasks like geo-targeted scraping. With rotating proxies in different countries, you can bypass these geographic restrictions and extract the data you need.

On the other hand, if you don’t know how to code or have no interest in coding, you can use NetNut Scraper API. This method helps you extract data from various websites without writing code or managing libraries.

Furthermore, if you want to scrape data using your mobile device, NetNut also has a customized solution for you. NetNut’s Mobile Proxy uses real phone IPs for efficient web scraping and auto-rotates IPs for continuous data collection.

Conclusion

This guide has provided insight into how a ZoomInfo scraper works. ZoomInfo is a powerful choice for businesses that are intentional about lead generation. We focused on how to create a program using Python to scrape the platform.

The data obtained from ZoomInfo can be leveraged for business intelligence, talent acquisition, price monitoring, competitor analysis, and more. Some of the challenges associated with using this tool include IP blocks, CAPTCHA, fingerprinting, rate-limiting, and more.

These challenges can be handled when you use a reliable proxy server. Be sure to check out a recent guide on how to choose the best proxy solution. Feel free to contact us if you need to speak to an expert regarding your web data extraction needs.

Frequently Asked Questions

What are the advantages and limitations of ZoomInfo?

Here are some advantages of ZoomInfo:

- An excellent source of customer data

- Access to accurate and reliable contact information, such as emails and phone numbers, which is critical for list building

- Advanced data search that provides a granular view

- Numerous workflow automation features

- Data clean-up assistance to aid data management

The limitations of ZoomInfo include:

- The free plan is limited to only ten contacts

- ZoomInfo plans are quite expensive

- It has a steep learning curve

- The platform has a data destruction clause

- The pricing could do with more transparency

How can I maintain the quality of data scraped from ZoomInfo?

Maintaining the quality of data scraped from ZoomInfo is critical to ensure its reliability when making critical decisions. Here are some strategies to maintain data quality from ZoomInfo:

- Frequently update your data because businesses update their information. You can schedule a time to scrape new information and update your database.

- Validate the data you extracted from ZoomInfo by checking for missing data, inconsistencies, and data that does not conform to standard formats.

- Add error handling to your ZoomInfo scraper code so it can recover from errors, such as timeouts or changed page elements, and still fetch the required data.

- Leverage tools like a checksum or visual regression testing to check for changes in the structure of the website and modify your code as required.

- Incorporate quality check tests in your code, which could involve statistical analysis and cross-referencing with other data sources to confirm the quality and accuracy of the data.

- Keep a record of your scraping activities on ZoomInfo, including the time, type of data, and any challenges you face.
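Several of these checks can be automated. The sketch below covers the validation point: it flags missing required fields, malformed emails, and duplicates. The REQUIRED fields, the loose email pattern, and the validate_records() helper are hypothetical choices; the regular expression rejects obvious junk but does not prove an address is deliverable.

```python
import re
from typing import Dict, List, Tuple

# A deliberately loose pattern: something@something.something, no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
REQUIRED = ("company_name", "email")


def validate_records(records: List[Dict[str, str]]) -> Tuple[List[Dict[str, str]], List[str]]:
    """Split records into clean rows and human-readable problem reports."""
    clean: List[Dict[str, str]] = []
    problems: List[str] = []
    seen = set()
    for i, rec in enumerate(records):
        missing = [f for f in REQUIRED if not (rec.get(f) or "").strip()]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
            continue
        email = rec["email"].strip().lower()  # normalize before comparing
        if not EMAIL_RE.match(email):
            problems.append(f"row {i}: malformed email {rec['email']!r}")
            continue
        if email in seen:
            problems.append(f"row {i}: duplicate email {email}")
            continue
        seen.add(email)
        clean.append({**rec, "email": email})
    return clean, problems
```

Logging the returned problems list alongside the scrape time also covers the last tip of keeping a record of your scraping activities.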

What are the alternatives to scraping ZoomInfo?

- The first alternative is the ZoomInfo API, which allows you to collect data using a method that complies with the platform’s terms of service.

- Search engine APIs offer access to company and professional data that can be used for business intelligence.

- You can purchase data from third-party providers that maintain databases similar to ZoomInfo's. However, choose a reputable provider that obtains its data through legal and ethical means.

- LinkedIn API allows you to access data on this professional networking platform.

- Lead generation services can help you connect with potential customers instead of scraping ZoomInfo.