Introduction

Businesses, both big and small, need data to make informed decisions. Automated web scraping has therefore become a common way to collect data from the many sources available on the internet. Manually copying data from a website and pasting it into your desired format consumes time and resources. In addition, manual copying is prone to errors that could significantly affect decision-making.

One of the ways to scrape data is to use a programming language to build a scraper. One of the most popular languages for building a web scraper is Python because it has powerful scraping libraries. In this guide, however, we shall focus on web scraping with the Rust programming language.

Web scraping with Rust is worth a shot because it also offers powerful data extraction and parsing libraries. This guide will examine web scraping with Rust, how it works, challenges, best practices, and how to optimize it with NetNut.

What is Rust?

Rust is a programming language that was developed by Graydon Hoare in 2006 when he was still working at Mozilla. This language was built with a focus on parallelism, speed, and memory safety. Since its inception, Rust has been widely adopted by big companies like Microsoft, Amazon, Meta, and Discord. The language can be used for various applications, including video games, simulation engines for virtual reality, operating systems, and more.

Rust stands out among its competition because it offers improved memory safety and a minimal runtime while delivering high performance. The language was influenced by older languages like Erlang and C++. However, it provides better memory safety than C++ and rules out whole classes of memory bugs at compile time, bugs that could otherwise crash or delay a web scraper. In addition, Rust supports generic programming and metaprogramming in both static and dynamic styles.

These features make web scraping with Rust an excellent option. Here are some other notable features of Rust:

- It is fast

- The performance is memory-efficient

- Highly reliable and practical

- Comprehensive documentation

- It offers auto-formatting features.

- Rust has an integrated package manager called Cargo; it downloads your package’s dependencies, compiles the packages, makes distributable packages, and uploads them to crates.io.

Dependencies are the libraries that your project uses when working with Rust. You don’t have to handle them manually, as Cargo automatically manages them for you.

Advantages of web scraping with Rust

Here are some advantages of web scraping with Rust:

Memory safety

The primary advantage of web scraping with Rust is memory safety, which means a program is protected from memory-related bugs and the security vulnerabilities they cause, such as buffer overflows and dangling pointers. This is an important feature in web scraping because a scraper constantly processes untrusted content from the internet and often runs unattended for long periods. Anonymity is also crucial to the efficiency of a web scraper, but it comes from tools such as proxies rather than from the language itself. Although Rust is similar to C++ in performance, the latter is often associated with memory-safety problems. Rust, on the other hand, manages the allocation and release of memory through its ownership system, and its rules are checked at compile time.

It supports concurrent programming

Another advantage of Rust is that it supports concurrent programming, so you can run multiple tasks at the same time instead of sequentially. Python also offers concurrency tools, but its global interpreter lock has traditionally prevented threads from running Python code in parallel. Rust threads run in parallel, and the compiler rejects code that contains data races. As a result, Rust scales well and handles large amounts of data without compromising on performance, and this makes it an excellent choice for web scraping.
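To make the idea of concurrent processing concrete, here is a minimal sketch that uses only the standard library. It assumes the pages have already been downloaded; the function name count_words_concurrently and the word-count task are hypothetical stand-ins for whatever work a real scraper would do on each page.

```rust
use std::thread;

/// Counts the words on each page concurrently, one thread per page.
/// `pages` stands in for HTML bodies that a real scraper would download.
fn count_words_concurrently(pages: Vec<String>) -> Vec<usize> {
    // Spawn one worker thread per page; each thread takes ownership of its page.
    let handles: Vec<thread::JoinHandle<usize>> = pages
        .into_iter()
        .map(|page| thread::spawn(move || page.split_whitespace().count()))
        .collect();

    // Join in spawn order so the results line up with the input order.
    handles
        .into_iter()
        .map(|handle| handle.join().expect("worker thread panicked"))
        .collect()
}
```

One thread per page is fine for a handful of pages. For thousands of pages, a thread pool or an async runtime such as Tokio would likely be a better fit.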

Great community support

One of the factors to look out for when choosing a programming language for building a web scraper is community. Rust has an active community where “Rustaceans” exchange information. Although you may need to understand some terms, the community members are often excited to teach the newcomers.

In addition, the Rust community’s crate registry, crates.io, allows users to upload their crates as well as use existing libraries uploaded by others. Crates are similar to packages in other languages. They are defined as units of compilation and linkage.

Furthermore, the community is close-knit, and this led to the Rust Foundation, an independent non-profit organization that supports, maintains, and governs the ecosystem. The Rust community is therefore devoted to keeping the language and its ecosystem sustainable.

Integration with other Rust libraries

Another benefit of using Rust is that the libraries integrate seamlessly with each other. These libraries are known as crates and have various functionalities. For example, in this guide, we will use Reqwest and Scraper to build a web scraper. Other libraries include Tokio for asynchronous tasks, Regex for regular expressions, and Serde for JSON serialization/deserialization. In addition, the Rust package manager, Cargo, makes it easy to manage these libraries and build efficient web scraping programs.

How to Scrape a Website with Rust

This section will examine a step-by-step guide on how to scrape a web page with Rust, as well as the necessary tools you need.

Step 1: Install Rust

The first step is to install Rust on your device by going to the download page. Once you visit the page, choose your operating system and click on download. Follow the instructions to set up the toolchain manager, rustup. This will also install Cargo, the Rust package manager.

Step 2: Install IDE

The IDE (Integrated Development Environment) is another important piece of software you need. It helps you write, manage, and run the code. Visual Studio Code is a popular editor that works well with Rust.

To get started with Rust, initialize a new project with the cargo command:

cargo new rust_web_scraper

The rust_web_scraper directory will contain:

Cargo.toml: a manifest that describes the project and lists its dependencies.

src/: the folder where you place your Rust files.

In addition, you can find the main.rs file inside src:

    fn main() {
        println!("Hello, world!");
    }

Step 3: Download Rust libraries

To do web scraping with Rust, you need to download some libraries, such as Reqwest and scraper.

The Reqwest library was designed to fetch data via the HTTP protocol. Its simplified API can be used to make POST and GET requests to the target website. It is accompanied by a complete client module that allows you to add redirect policies, cookies, headers, and more.

In addition, Reqwest follows Rust’s async model, which is built on futures. Two pieces make this model work. First, keywords mark the asynchronous code: async marks functions and blocks that return a future, and await marks the points where the program waits for a result, much like the await keyword in JavaScript. Second, a runtime recognizes these markers and drives the asynchronous program to completion. Reqwest’s asynchronous client depends on the Tokio runtime to schedule the async tasks efficiently.

Another Rust library that we need is Scraper, a parser that provides an API for manipulating HTML documents. Scraper is a flexible HTML parsing library that facilitates efficient data retrieval from HTML documents. Since the Scraper library cannot send requests, it is often used with the Reqwest library.

Scraper works by parsing the HTML document into a tree-like structure. It supports CSS selectors, which makes it easy to find and retrieve specific elements on a page. However, Scraper has limited functionality compared to BeautifulSoup, a parsing library in Python.

Add the libraries to your project via the Cargo command as shown below:

cargo add scraper reqwest --features "reqwest/blocking"

The libraries will then be added to Cargo.toml:

    [dependencies]
    reqwest = { version = "0.11.18", features = ["blocking"] }
    scraper = "0.16"

Step 4: Identify the target website page

You can use Reqwest’s get() function to get the HTML from a URL. For example:

    let response = reqwest::blocking::get("https://www.example.com/");
    let html_content = response.unwrap().text().unwrap();

The get() function sends an HTTP GET request to the provided URL. The blocking module makes sure that get() blocks the execution until you get a response from the server, so the request is synchronous rather than asynchronous.

Moving on, you can use the unwrap() function twice to extract the HTML from the response: once on the result of the request and once on the result of reading the body as text. If the operation is successful, unwrap() returns the requested value. However, if it is unsuccessful, unwrap() makes the program panic and stop with an error message.
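Because unwrap() stops the program on failure, a long-running scraper usually benefits from handling the error instead. The sketch below shows that pattern with the standard library only. The fetch_html function and the FetchError type are hypothetical stand-ins, not part of Reqwest, used so the example runs without a network connection.

```rust
use std::fmt;

/// Hypothetical error type for this sketch; it is not part of Reqwest.
#[derive(Debug, PartialEq)]
enum FetchError {
    EmptyUrl,
    UnsupportedScheme(String),
}

impl fmt::Display for FetchError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        match self {
            FetchError::EmptyUrl => write!(f, "empty URL"),
            FetchError::UnsupportedScheme(url) => write!(f, "unsupported scheme in {url}"),
        }
    }
}

/// Stand-in for a download function. A real scraper would call Reqwest here.
fn fetch_html(url: &str) -> Result<String, FetchError> {
    if url.is_empty() {
        return Err(FetchError::EmptyUrl);
    }
    if !url.starts_with("http://") && !url.starts_with("https://") {
        return Err(FetchError::UnsupportedScheme(url.to_string()));
    }
    Ok(format!("<html><body>content of {url}</body></html>"))
}

/// Handles both outcomes with `match`, so a failure yields a message, not a panic.
fn describe_fetch(url: &str) -> String {
    match fetch_html(url) {
        Ok(html) => format!("downloaded {} bytes", html.len()),
        Err(err) => format!("request failed: {err}"),
    }
}
```

The same pattern applies to Reqwest, since both reqwest::blocking::get() and text() return a Result that can be matched on or propagated with the ? operator.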

Use the code below in the main() function of the scraper to obtain the target HTML document:

    fn main() {
        // download the target HTML document
        let response = reqwest::blocking::get("https://www.example.com/");

        // get the HTML content from the response
        // and print it
        let html_content = response.unwrap().text().unwrap();
        println!("{html_content}");
    }

Step 5: Parse the HTML data

Here, you need the parse_document() function to feed the retrieved HTML string to Scraper. It returns an HTML tree object like this:

    let document = scraper::Html::parse_document(&html_content);

In addition, you need to choose an effective selector to find the HTML elements. First, inspect the website using the browser’s developer tools to see how the elements you want are marked up. Then express what you found as a CSS selector like:

    let html_product_selector = scraper::Selector::parse("li.product").unwrap();
    let html_products = document.select(&html_product_selector);

The parse() function from Selector builds a selector object, and select() returns the elements that match it. For example, to scrape the URL, image, name, and price of each product on an e-commerce website, iterate over the selected elements:

    // record type for one scraped product; any field may be missing
    struct Product {
        url: Option<String>,
        image: Option<String>,
        name: Option<String>,
        price: Option<String>,
    }

    // list that collects the scraped products
    let mut products: Vec<Product> = Vec::new();

    for html_product in html_products {
        // scraping logic to retrieve the info
        // of interest
        let url = html_product
            .select(&scraper::Selector::parse("a").unwrap())
            .next()
            .and_then(|a| a.value().attr("href"))
            .map(str::to_owned);
        let image = html_product
            .select(&scraper::Selector::parse("img").unwrap())
            .next()
            .and_then(|img| img.value().attr("src"))
            .map(str::to_owned);
        let name = html_product
            .select(&scraper::Selector::parse("h2").unwrap())
            .next()
            .map(|h2| h2.text().collect::<String>());
        let price = html_product
            .select(&scraper::Selector::parse(".price").unwrap())
            .next()
            .map(|price| price.text().collect::<String>());

        // instantiate a new product
        // with the scraped data and add it to the list
        let product = Product {
            url,
            image,
            name,
            price,
        };
        products.push(product);
    }
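The price is scraped as text, so it often needs cleaning before you can compare or sum values. The helper below is a minimal sketch of that step. The name parse_price is hypothetical, and it assumes prices use a dot as the decimal separator, which will not hold for every website.

```rust
/// Converts scraped price text such as "$1,299.00" into a number.
/// Returns None when the text contains no valid number.
/// Assumption: the dot is the decimal separator and commas separate thousands.
fn parse_price(text: &str) -> Option<f64> {
    // Keep only digits and the decimal point; this drops currency symbols,
    // thousands separators, and surrounding whitespace.
    let cleaned: String = text
        .chars()
        .filter(|c| c.is_ascii_digit() || *c == '.')
        .collect();

    // An empty or malformed string (for example "1.2.3") fails to parse.
    cleaned.parse::<f64>().ok()
}
```

Calling parse_price on the text of a price element yields Some(number) for well-formed prices and None for placeholders such as "N/A", which keeps bad rows easy to filter out.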

Step 6: Export to CSV

Once you have successfully retrieved the data you want, you need to collect it in a structured and useful format. To do that, install the csv library with this command:

cargo add csv

Now you can use the following lines of code to convert the data retrieved above to CSV:

    // create the CSV output file
    let path = std::path::Path::new("products.csv");
    let mut writer = csv::Writer::from_path(path).unwrap();

    // append the header to the CSV
    writer
        .write_record(&["URL", "image", "name", "price"])
        .unwrap();

    // populate the output file
    for product in products {
        let url = product.url.unwrap();
        let image = product.image.unwrap();
        let name = product.name.unwrap();
        let price = product.price.unwrap();
        writer.write_record(&[url, image, name, price]).unwrap();
    }

    // flush buffered rows to disk
    writer.flush().unwrap();
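Note that calling unwrap() on each field stops the program as soon as one product is missing a URL, image, name, or price. A minimal sketch of a more forgiving approach is shown below. The to_row helper is hypothetical, and it assumes an empty cell is an acceptable placeholder for missing data.

```rust
/// Same shape as the product record built in the scraping loop.
struct Product {
    url: Option<String>,
    image: Option<String>,
    name: Option<String>,
    price: Option<String>,
}

/// Builds one CSV row, substituting an empty string for any missing field.
fn to_row(product: Product) -> [String; 4] {
    [
        product.url.unwrap_or_default(),
        product.image.unwrap_or_default(),
        product.name.unwrap_or_default(),
        product.price.unwrap_or_default(),
    ]
}
```

Inside the export loop, the row could then be written with writer.write_record(&to_row(product)), so incomplete products end up as rows with blank cells instead of aborting the export.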

Challenges associated with Rust web scraping

Web scraping, regardless of the language used, involves some challenges. They include:

CAPTCHA

CAPTCHA is a test designed to tell humans apart from computers. Many modern websites implement CAPTCHA as an anti-bot strategy. Humans can easily solve these challenges and access the desired content. However, bots or automated programs like the scraper built with Rust cannot interact with and solve this test. The inability to solve a CAPTCHA means that the scraper cannot get past the challenge page to the content it was sent to retrieve.

You can leverage NetNut proxy solutions that come with built-in CAPTCHA-solving software.

IP block

A universal challenge in scraping data from the internet is the IP block. A number of factors could trigger one. When you visit a website, either for regular browsing or to collect data, the website sees your IP address. It can also build a browser fingerprint that identifies your activities. As a result, the website can block your IP if it generates too many requests within a short period.

Limited ecosystem

Although Rust has powerful web scraping libraries, there are relatively few of them. Rust’s ecosystem is less extensive than that of languages like Python, so developers may spend a lot of time building their own web scraping utilities.

Dynamic content

An HTTP client such as Reqwest does not execute JavaScript, so a basic Rust scraper cannot render dynamic content. Many modern websites generate content dynamically through JavaScript. As a result, a regular web scraper only sees the initial HTML and cannot extract all the desired information correctly. The scraper will therefore return an error or retrieve incomplete data.

One way to overcome this limitation is to drive a headless browser from Rust, for example with a crate like headless_chrome. This tool allows for scripted interaction with websites, so you can retrieve data from dynamic websites effectively.

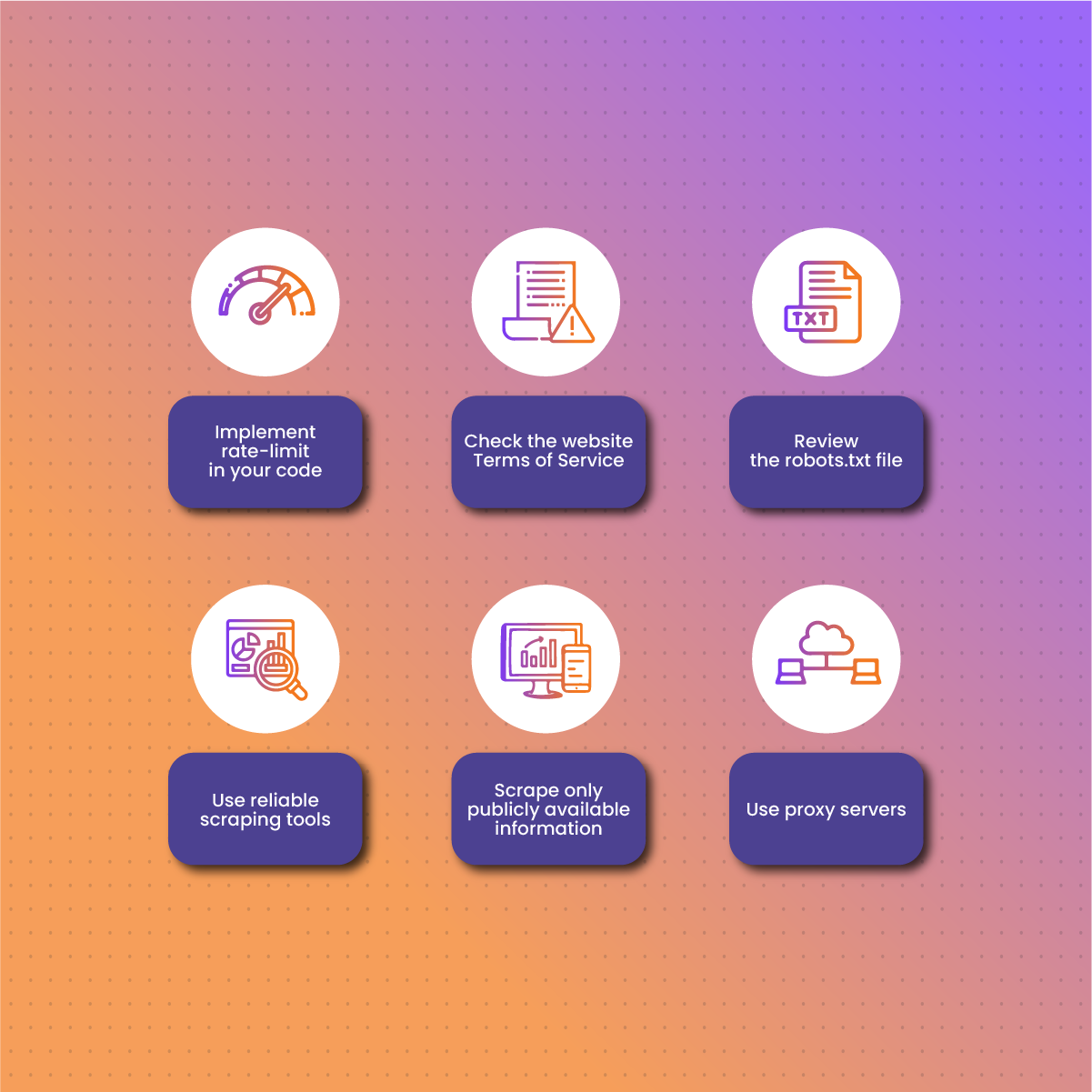

Ethical Practices for Scraping Data with Rust

Earlier, we examined some of the challenges associated with web scraping with Rust. Regardless, you can avoid some of them by practicing ethical web data scraping. When you scrape a website aggressively, it may cause it to lag and become slow, and this negatively affects the actual customer’s experience. To ensure you are not causing harm to the target website during scraping, be sure to follow these practices below:

Implement rate-limit in your code

Too many requests within a short period, also known as aggressive scraping, can get your IP address blocked. In addition, it can overload the website server, which affects performance for all users. Therefore, you need to add delays to your Rust web scraping script so that your requests are not too frequent and the website server is not overloaded. Rate limiting also reduces the chances of your scraper triggering the website’s anti-scraping measures.
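As a rough illustration of adding delays, the sketch below enforces a minimum interval between requests using only the standard library. The RateLimiter type is hypothetical, and the right interval depends on the target website, so treat any value you pick as an assumption to tune.

```rust
use std::thread;
use std::time::{Duration, Instant};

/// Enforces a minimum interval between consecutive requests.
struct RateLimiter {
    min_interval: Duration,
    last_request: Option<Instant>,
}

impl RateLimiter {
    fn new(min_interval: Duration) -> Self {
        RateLimiter {
            min_interval,
            last_request: None,
        }
    }

    /// Blocks until at least `min_interval` has passed since the previous call.
    /// The first call returns immediately.
    fn wait(&mut self) {
        if let Some(last) = self.last_request {
            let elapsed = last.elapsed();
            if elapsed < self.min_interval {
                thread::sleep(self.min_interval - elapsed);
            }
        }
        self.last_request = Some(Instant::now());
    }
}
```

A scraper would create one limiter, for example RateLimiter::new(Duration::from_secs(2)), and call wait() right before each request in its download loop.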

Check the website Terms of Service

One of the steps involved in web scraping is inspecting the web page. Before you start the web scraper, you should also check the website’s Terms of Service page. It often contains information on intellectual property rights, copyright, how data is collected, how the data may be used, and more. It is imperative that you understand the boundaries the website sets on how its data can be retrieved and used. Failure to comply with these terms may land you in serious legal trouble.

Review the robots.txt file

Websites usually have a robots.txt file that indicates how bots can access their pages. The information in this file is quite different from the Terms of Service page, so don’t skip one for the other. Some websites prohibit scrapers and direct you to an API instead, which puts less load on the website. Therefore, you need to comply with the robots.txt file to maintain ethical scraping and avoid legal issues.
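To show what complying with robots.txt can look like in code, here is a deliberately simplified sketch. The is_path_allowed function is hypothetical. It only reads Disallow rules that apply to all bots (User-agent: *) and ignores Allow rules, wildcards, and bot-specific groups, so a production scraper would need a fuller parser.

```rust
/// Returns true when `path` is not covered by a `Disallow` rule that applies
/// to all bots (`User-agent: *`) in the given robots.txt text.
/// Simplified: ignores `Allow`, wildcard patterns, and bot-specific groups.
fn is_path_allowed(robots_txt: &str, path: &str) -> bool {
    let mut group_applies = false; // are we inside a group that covers "*"?
    let mut prev_was_agent = false; // consecutive User-agent lines share a group

    for line in robots_txt.lines() {
        // Strip comments and surrounding whitespace.
        let line = line.split('#').next().unwrap_or("").trim();
        let (key, value) = match line.split_once(':') {
            Some(pair) => pair,
            None => continue, // blank or malformed line
        };
        let key = key.trim().to_ascii_lowercase();
        let value = value.trim();

        match key.as_str() {
            "user-agent" => {
                group_applies = (prev_was_agent && group_applies) || value == "*";
                prev_was_agent = true;
            }
            "disallow" => {
                prev_was_agent = false;
                // An empty Disallow value means nothing is disallowed.
                if group_applies && !value.is_empty() && path.starts_with(value) {
                    return false;
                }
            }
            _ => prev_was_agent = false,
        }
    }
    true
}
```

A scraper would download the site's robots.txt once, then call is_path_allowed with each URL path before requesting it and skip any path for which it returns false.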

Use reliable scraping tools

As mentioned above, some websites have APIs that allow you to access and retrieve publicly available data. When you use a scraping tool instead, be sure to choose a reliable one that is well-maintained, regularly updated, and follows ethical practices. Check out our in-house solution, NetNut Scraper API, which is an effective means of collecting data from websites while maintaining the best practices and latest ethical principles.

Scrape only publicly available information

One critical ethical practice is to scrape only publicly available data. Do not attempt to obtain data that is protected by any form of authorization. In addition, scraping private or sensitive data without permission is unethical and could lead to legal issues. Therefore, it is necessary to understand the laws on data extraction in your country, state, or county. This includes data protection laws like the GDPR (General Data Protection Regulation).

Use proxy servers

Proxies serve as intermediaries between your device and the internet. In other words, they work by masking your actual IP address. In addition, they allow you to bypass geographic restrictions so you can gather unbiased data for machine learning training. Using a reputable proxy is necessary to avoid issues of IP blocks and bans.

Optimizing Rust Web Scraping With NetNut Proxies

Your IP can easily be banned or blocked during web scraping due to several reasons. However, the best practice to prevent it is to use a reliable proxy server. Reputable proxies provide anonymity, efficiency, and security that optimize web scraping.

NetNut is an industry-leading proxy server provider with an extensive network of over 85 million rotating residential proxies in 195 countries and over 250,000 mobile IPs in over 100 countries. NetNut is committed to providing exceptional web data collection services.

NetNut also offers various proxy solutions to help you overcome the difficulties associated with web scraping. Because your IP address is exposed when you send a request, the website can decide to block it, whereas a proxy sends the request from a different IP address. Moreover, our proxies come with a smart CAPTCHA solver, so your scraper is not interrupted by the test.

In addition, NetNut proxies allow you to scrape websites from all over the globe. Some websites have location bans, which becomes a challenge for tasks like geo-targeted scraping. However, with rotating proxies, you can bypass these geographical restrictions and extract data from websites.
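Rotation can also be pictured on the client side. The sketch below cycles through a list of proxy addresses in round-robin order using only the standard library. The ProxyPool type and the addresses in the usage note are hypothetical, and a proxy provider may well handle rotation on its own side, in which case none of this is needed.

```rust
/// Cycles through a fixed list of proxy addresses in round-robin order.
struct ProxyPool {
    proxies: Vec<String>,
    next_index: usize,
}

impl ProxyPool {
    /// Returns None when the list is empty, since there is nothing to rotate.
    fn new(proxies: Vec<String>) -> Option<Self> {
        if proxies.is_empty() {
            None
        } else {
            Some(ProxyPool {
                proxies,
                next_index: 0,
            })
        }
    }

    /// Returns the next proxy address and advances the cursor,
    /// wrapping back to the first address after the last one.
    fn next_proxy(&mut self) -> &str {
        let proxy = &self.proxies[self.next_index];
        self.next_index = (self.next_index + 1) % self.proxies.len();
        proxy
    }
}
```

A scraper could build the pool from placeholder addresses such as http://proxy-a.example:8080 and call next_proxy() before each request. With Reqwest, the returned address could then be passed to reqwest::Proxy::all when building the client for that request.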

Furthermore, if you want to scrape data using your mobile device, NetNut also has a customized solution for you. NetNut’s Mobile Proxy uses real mobile phone IPs for efficient web scraping and auto-rotates IPs for continuous data collection.

Conclusion

This guide has examined web scraping with Rust, a programming language that boasts memory safety and high performance. We have also reviewed the step-by-step process of scraping a website with Rust, the possible challenges, and the best practices to overcome them.

Some of the limitations of using Rust are that it has a steep learning curve, fewer web scraping libraries, and a smaller community than Python. Even so, data retrieved using Rust can be applied in various industries.

Using proxies can optimize web scraping with Rust. Therefore, feel free to contact us today. Our customer care is always available to help you choose the best scraper for you.

Frequently Asked Questions

Is Rust a good language for web scraping?

Yes, Rust is a good language for web scraping. Since it compiles to native code, it is an excellent choice for handling large-scale activities like web scraping. In addition, it has some libraries that facilitate the extraction of data from web pages.

Bear in mind that it is not the easiest language to use. Beginners may find it easier to work with languages like Python that have a simpler syntax. However, if your aim is optimal performance, Rust stands out because of its support for concurrency, which helps the web scraper run at high speed.

What are the disadvantages of using Rust for web scraping?

One of the disadvantages of using Rust for web scraping is that it is a relatively new language, so its community is smaller than those of older languages like Python. Therefore, beginners may find fewer tutorials and answers when they encounter a challenge while building or running the web scraper.

Another significant challenge of using Rust is that the syntax is not easy to understand. Therefore, reading and understanding the language can quickly become very frustrating.

What languages are the competitors to Rust?

Here are some of the most popular choices for building a web scraper:

- Python stands at the top of the list because of its popularity, ease of use, and gentle learning curve. In addition, Python offers extensive documentation, access to numerous web scraping frameworks, and an active online community. However, Python does not scale as well as Rust for CPU-heavy work because its global interpreter lock has traditionally limited how much threads can run in parallel.

- C++ is another versatile and powerful language. Since it is statically typed and compiled, it is considerably faster than Python. However, the language has a steep learning curve and is resource-intensive.

- Another language that can be used to build a web scraper is PowerShell. Developed by Microsoft, this language works on any website but is especially fast at retrieving data from infrastructure built with Microsoft’s .NET platform. One of the challenges is that it is not easy to use because it is object-based instead of text-based.