Introduction

In this digital age, data is one of the most valuable assets for individuals, businesses, and researchers, so accessing up-to-date data in a timely manner is crucial. Many organizations have adopted digital strategies that let them interact with customers without meeting in person. As a result, the web has become a treasure trove of information: millions of websites can be accessed from any part of the world.

Consequently, web scraping, the process of collecting data from websites, is gaining popularity. Automated scraping quickly overtook manual scraping because it saves time and resources that can be channeled elsewhere. It is therefore worth examining web scraping frameworks, their features, advantages, and limitations.

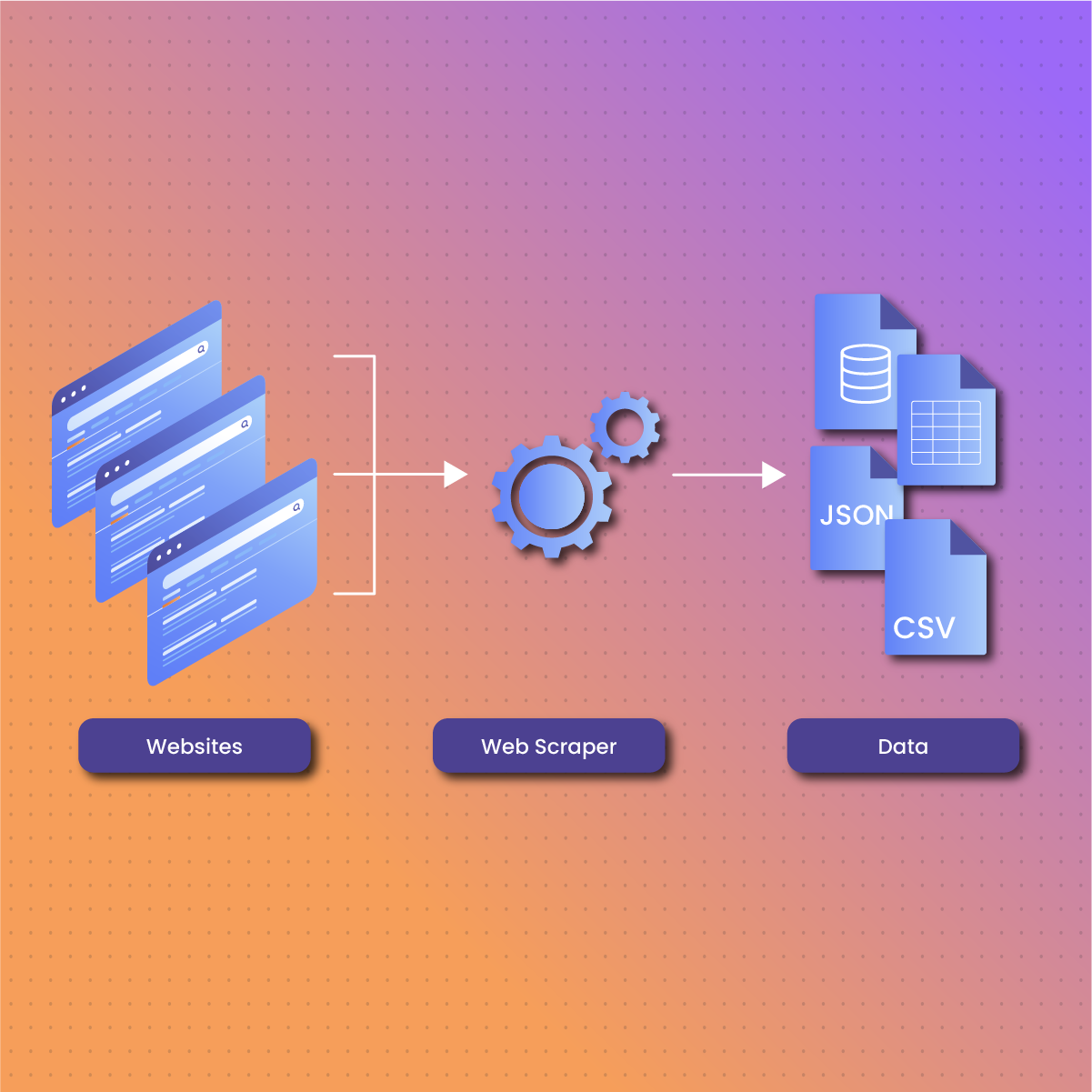

What is Web Scraping?

Web scraping is the process of collecting data from the web. The data is often stored in a local file where it can be accessed for analysis and interpretation. A simple example of web scraping is copying content from the web and pasting it into Excel.

At scale, this involves using a web scraping framework to visit a web page, retrieve data, and store it in a local file. Automating the process, instead of copying and pasting, becomes critical when you need to collect a large volume of data within a short time.
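
As a rough sketch of the last step (storing retrieved data in a local file), the snippet below writes a few records to CSV using only Python's standard library. The function names, field names, and sample values are illustrative assumptions and do not come from any particular framework.

```python
import csv
import io


def rows_to_csv(rows, fieldnames):
    """Serialize a list of dicts to CSV text, starting with a header row."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def save_csv(rows, fieldnames, path):
    """Write the CSV text to a local file for later analysis."""
    # newline="" keeps the line endings produced by the csv module unchanged.
    with open(path, "w", newline="", encoding="utf-8") as handle:
        handle.write(rows_to_csv(rows, fieldnames))
```

Calling save_csv([{"name": "Keyboard", "price": "30"}], ["name", "price"], "products.csv") would create a file that opens directly in Excel or any other spreadsheet tool.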

It is important to note that web scraping may not always be simple. This is because websites come in different structures and designs, which could affect the efficiency of web scrapers.

What is a Web Scraping Framework?

A web scraping framework is a collection of tools and libraries that simplifies the process of retrieving data from websites. These frameworks play significant roles in sending HTTP requests, parsing HTML documents, handling cookies, and storing the retrieved data. Some web scraping frameworks also include features that allow them to handle JavaScript rendering and anti-bot measures.

While various web scraping frameworks exist, their basic functionality is quite similar. Here is a breakdown of how a web scraping framework works.

HTTP request

The web scraping framework works by sending HTTP requests to web pages to collect data. This involves specifying the URL of the page you want to retrieve. When the website accepts your request, it returns the content of the page.

On the other hand, you may receive an error response if the website does not grant you access for various reasons. Therefore, the first step in web scraping is making an HTTP request to the website you want to access. The web scraping framework provides features that allow you to customize requests, set headers, and handle redirects.
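
The request step can be sketched with Python's standard library alone. This is a minimal illustration, not how any specific framework implements it; the function names and the example-scraper/0.1 user agent are assumptions made for the example.

```python
import urllib.error
import urllib.request


def build_request(url, user_agent="example-scraper/0.1"):
    """Create a GET request with custom headers (the header values are placeholders)."""
    headers = {"User-Agent": user_agent, "Accept": "text/html"}
    return urllib.request.Request(url, headers=headers)


def fetch(request, timeout=10.0):
    """Send the request and return (status_code, body_bytes).

    urlopen follows redirects by default. An error response such as
    403 or 404 raises HTTPError, which still carries a status code and a body.
    """
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.read()
    except urllib.error.HTTPError as error:
        return error.code, error.read()
```

A call such as fetch(build_request("https://example.com/")) returns the status code together with the raw page content, so the caller can decide whether to parse the page or handle the error.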

HTML parsing

One of the core features of a web scraping framework is HTML parsing. Once the website returns its content, the next step is parsing the HTML code of the page. Parsing the HTML elements allows you to extract relevant data. The web scraping framework navigates the HTML structure, selects specific elements (via XPath expressions or CSS selectors), and extracts the requested data.
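
To make the parsing step concrete, here is a small sketch that pulls every link out of an HTML string. It uses Python's built-in event-based HTMLParser rather than the XPath or CSS selectors that full frameworks offer, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect the href attribute of every <a> tag that has one."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def extract_links(html):
    """Parse an HTML string and return the links in document order."""
    parser = LinkExtractor()
    parser.feed(html)
    parser.close()
    return parser.links
```

Selector-based libraries express the same idea more compactly, but the underlying work is the same: walk the document structure and keep only the elements you asked for.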

Handling cookies and sessions

Another critical feature of a web scraping framework is handling cookies and sessions. This becomes necessary because many websites require authentication to access the content of a web page. Web scraping frameworks allow you to interact with these websites: they can store cookies, send them along with subsequent requests, and maintain sessions.
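
The following sketch shows the idea behind cookie handling with the standard library only. The helper names and cookie values are assumptions for illustration: the first function mimics what a framework does when it turns received Set-Cookie headers into the Cookie header of the next request, and the second builds an opener that does this automatically.

```python
import http.cookiejar
import urllib.request
from http.cookies import SimpleCookie


def cookie_header(set_cookie_values):
    """Turn Set-Cookie header values into a Cookie header for the next request."""
    cookies = SimpleCookie()
    for value in set_cookie_values:
        cookies.load(value)  # attributes such as Path or HttpOnly are parsed but not sent back
    return "; ".join(f"{name}={morsel.value}" for name, morsel in cookies.items())


def make_session_opener():
    """Return an opener that stores cookies in a jar and resends them automatically."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar
```

Every page opened through the same opener shares the jar, which is how a login performed on one request can carry over to the requests that follow it.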

JavaScript rendering

Another function of the web scraping framework is JavaScript rendering. This is necessary because some websites use JavaScript to dynamically load content. Web scraping frameworks that render JavaScript allow you to collect data from websites that rely on client-side rendering.

Handling concurrency

Concurrency is another function of a web scraping framework. Multiple HTTP requests can be made at the same time, which significantly speeds up the process of collecting data. Concurrency is especially important when you are trying to extract data from a website that has multiple pages. However, make sure you implement request throttling to avoid aggressive scraping.
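
One possible way to combine concurrency with throttling is sketched below using a thread pool from the standard library. The Throttle class, the crawl function, and the default values are assumptions for the example; the fetch argument stands for whatever function downloads a single page.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


class Throttle:
    """Space out request starts so they are at least min_interval seconds apart."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._lock = threading.Lock()
        self._next_allowed = 0.0

    def wait(self):
        # Holding the lock while sleeping makes the worker threads take turns.
        with self._lock:
            delay = self._next_allowed - time.monotonic()
            if delay > 0:
                time.sleep(delay)
            self._next_allowed = time.monotonic() + self.min_interval


def crawl(urls, fetch, max_workers=4, min_interval=0.5):
    """Fetch several URLs concurrently and return a dict of url -> result."""
    urls = list(urls)
    throttle = Throttle(min_interval)

    def task(url):
        throttle.wait()
        return fetch(url)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return dict(zip(urls, pool.map(task, urls)))
```

The downloads overlap in time, but new requests start no faster than the chosen interval, which keeps the load on the target website predictable.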

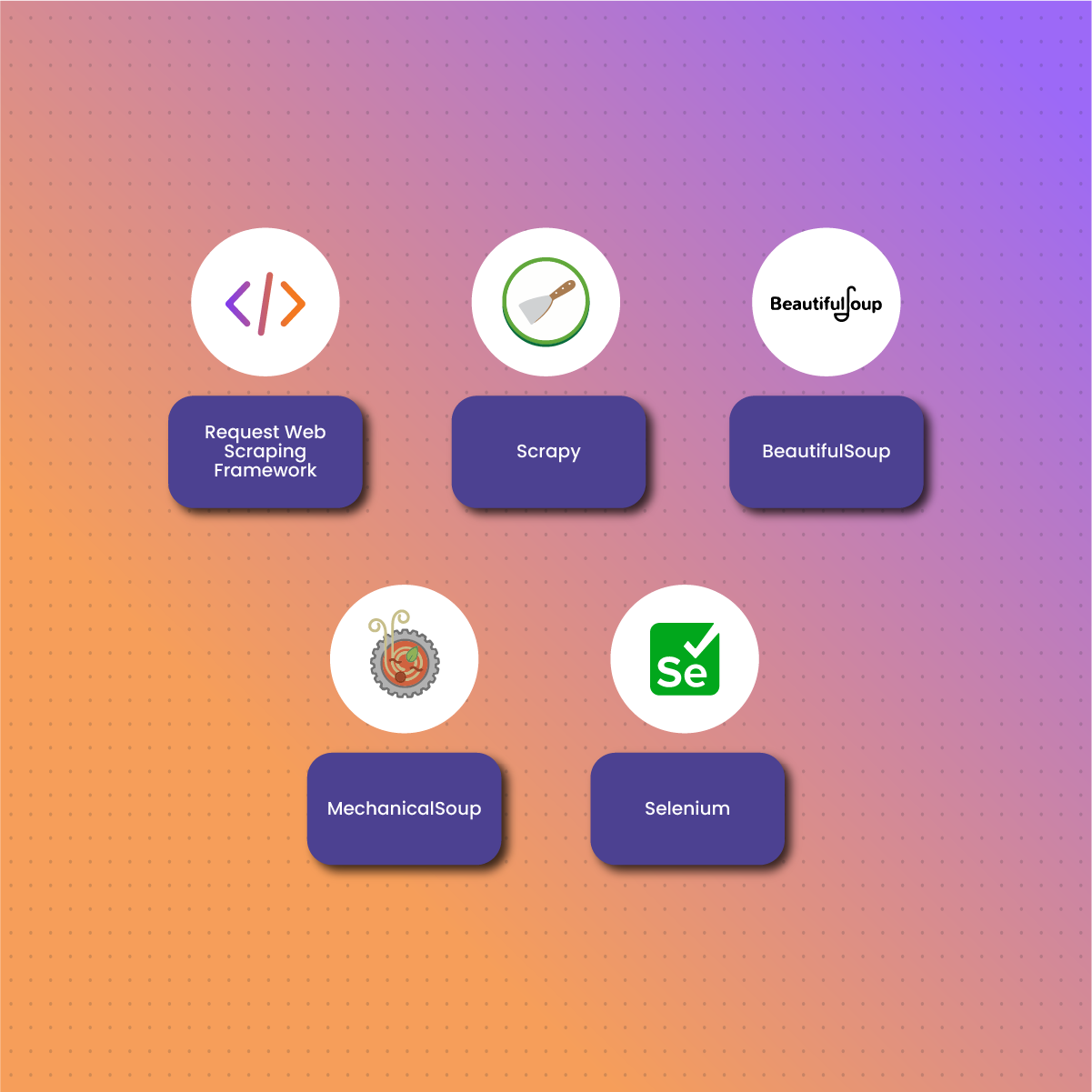

Top 5 Web Scraping Frameworks

Here are some of the most popular web scraping frameworks:

Requests web scraping framework

Requests is a popular Python library that allows you to perform HTTP requests efficiently. This is a critical feature because submitting HTTP requests is necessary for web data retrieval. In addition, you can use Requests to send specific commands, such as GET or POST, to collect data from a website.

One exciting feature of Requests is that once you send a GET request, you can read the data returned by the website from the content property of the response. In addition, this package supports the HTTP methods commonly used by APIs, including GET, DELETE, POST, and PUT. As a result, developers can easily interact with APIs and web services.

Another feature of Requests is that it lets you handle errors such as timeouts and connection errors through dedicated exceptions. Furthermore, many websites use SSL/TLS certificates to secure their connections. Requests verifies these certificates by default, which helps ensure that you are communicating with the intended website over an encrypted connection.
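
A short sketch of these features follows, assuming the Requests package is installed. The function names and the example.com URL are placeholders; requests.get, raise_for_status, the content property, and the exception classes are part of the library.

```python
import requests


def build_url(base_url, params):
    """Return the URL Requests would call, with params encoded into the query string."""
    return requests.Request("GET", base_url, params=params).prepare().url


def fetch_content(url, params=None, timeout=10):
    """Send a GET request and return the raw body, or None if the request fails."""
    try:
        response = requests.get(url, params=params, timeout=timeout)
        response.raise_for_status()  # turn 4xx and 5xx responses into HTTPError
    except (requests.exceptions.Timeout, requests.exceptions.ConnectionError) as error:
        print(f"Network problem: {error}")
        return None
    except requests.exceptions.HTTPError as error:
        print(f"Error response: {error}")
        return None
    return response.content  # bytes of the page
```

Passing params as a dictionary means the query string never has to be assembled by hand, and the explicit timeout keeps a stalled server from blocking the scraper indefinitely.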

Pros of Requests

Simplicity: Requests is easy to use, especially for beginners who are scraping web pages or calling APIs. In addition, it does not require lower-level steps such as creating a PoolManager, as urllib3 does. Therefore, you can collect data from a web page with little practice.

Speed: Requests only downloads the raw response and does not load a browser or render the page, so it is fast. Therefore, it is an efficient option for collecting data from static websites.

Ease of use: Apart from being simple, Requests is easy to understand. For example, you do not need to add query strings to your URLs manually, because it builds them from the parameters you pass. In addition, this package supports authentication modules and handles cookies efficiently.

Cons of Requests

Limited efficiency for dynamic websites: One of the primary limitations of this web scraping framework is that it cannot execute JavaScript, so it cannot retrieve content that dynamic websites load on the client side.

Not suitable for sensitive data in GET requests: Another drawback is that parameters sent with a GET request are visible in the URL query string, so they can be retained in places such as browser history and server logs. Therefore, sensitive data should not be sent this way.

Scrapy

Scrapy is a popular open-source web scraping framework. It is designed to extract data from highly complex websites. Scrapy is more than just a library: you can also use it for data mining, monitoring, and automated testing.

With the Scrapy web scraping framework, you can write a crawler (called a spider) that efficiently retrieves data from web pages. In addition, Scrapy executes requests asynchronously and comes with built-in selectors for data extraction.

Furthermore, Scrapy includes an AutoThrottle extension that adjusts the crawling speed automatically. You can also integrate it with Splash, a lightweight web browser, to render JavaScript-based pages.

Several organizations utilize Scrapy to extract data from various web pages. For example, Lambert Labs uses this web scraping framework to collect data, including videos, text, and images from the internet. Another organization, Alistek, uses this package to retrieve data from various online and offline data sources.

One exciting feature of the Scrapy web scraping framework is that it has built-in support for identifying and extracting data from HTML/XML files via XPath expressions and CSS selectors. It also has built-in support for creating feed exports in various file types, including XML, CSV, and JSON, and storing them in S3, FTP, or local file systems.
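
As an illustration, automatic throttling and feed exports are typically switched on in a project's settings.py file. The fragment below is a sketch: the setting names are Scrapy settings, while the delay values and output paths are assumptions chosen for the example.

```python
# settings.py (fragment); the values below are illustrative, not recommendations

# Let the AutoThrottle extension adapt the crawl speed to server response times.
AUTOTHROTTLE_ENABLED = True
AUTOTHROTTLE_START_DELAY = 5.0          # initial download delay in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0           # upper bound when the server is slow
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0   # average parallel requests per remote site

# Export scraped items to local files in two formats (hypothetical paths).
FEEDS = {
    "output/items.json": {"format": "json", "encoding": "utf8"},
    "output/items.csv": {"format": "csv"},
}
```

With settings like these, every item a spider yields is written to both files, and the crawl slows down on its own when the target website starts responding more slowly.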

Pros of Scrapy

Robust support: Scrapy provides excellent support for encoding, which makes it easier to handle various web scraping activities. In addition, its extensibility support allows you to add your own features through components such as middlewares, extensions, and pipelines.

Framework for scraping purposes: Scrapy is a comprehensive web scraping framework, and it is highly efficient for crawling activities.

No reliance on BeautifulSoup: Scrapy does not require external libraries like BeautifulSoup for parsing, which makes its process more straightforward than other web scraping packages.

Cons of Scrapy

Steep learning curve: Beginners may find it challenging to understand and use this web scraping framework.

Limitation with JavaScript: Scrapy does not render JavaScript on its own, so it is not efficient for scraping JavaScript-based web pages.

Various installation steps: The installation process for Scrapy differs across operating systems. Therefore, setting up this web scraping framework is more complex than setting up other web scraping libraries.

BeautifulSoup

BeautifulSoup is one of the most popular web scraping packages. It is most commonly used for parsing XML and HTML documents. In addition, this web scraping framework provides all the tools you need to structure and modify the parse tree to extract data from websites. Moreover, BeautifulSoup allows you to traverse the DOM and retrieve data from it.

BeautifulSoup 4.11.1 comes with various options for navigating, searching, and modifying a parse tree. Incoming documents are automatically converted to Unicode, while outgoing documents are converted to UTF-8.

This web scraping framework allows you to scan an entire parsed document, find all the data you need, and automatically detect the encoding of the document. BeautifulSoup is extremely useful and widely adopted for critical web data retrieval activities.

For example, the NOAA’s Forecast Applications Branch uses this web scraping framework in the TopoGrabber script to obtain quality USGS data. Another example is Jiabao Lin’s DXY-COVID-19-Crawler, which uses BeautifulSoup to retrieve data on COVID-19 from Chinese medical websites.

One valuable feature of the BeautifulSoup web scraping framework is its excellent encoding detection capabilities. Therefore, it can produce better results for web scraping on HTML pages that do not declare their encoding.
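
Here is a minimal sketch of parsing with BeautifulSoup, assuming the beautifulsoup4 package is installed. The sample HTML and the parse_products function are made up for the example, and Python's built-in html.parser is used so that no extra parser has to be installed.

```python
from bs4 import BeautifulSoup

# A small, hypothetical page used only for this example.
SAMPLE_HTML = """
<html><head><title>Sample catalog</title></head>
<body>
  <ul id="products">
    <li class="product"><a href="/p/1">Keyboard</a> <span class="price">$30</span></li>
    <li class="product"><a href="/p/2">Mouse</a> <span class="price">$15</span></li>
  </ul>
</body></html>
"""


def parse_products(html):
    """Return one dict per product listed in the page."""
    soup = BeautifulSoup(html, "html.parser")
    products = []
    for item in soup.find_all("li", class_="product"):
        link = item.find("a")                    # first link inside the list item
        price = item.select_one("span.price")    # same idea, written as a CSS selector
        products.append({
            "name": link.get_text(strip=True),
            "url": link["href"],
            "price": price.get_text(strip=True),
        })
    return products
```

The example mixes find_all(), find(), and a CSS selector on purpose, to show that the search styles can be combined on the same parse tree.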

Pros of BeautifulSoup

Ease of use: BeautifulSoup has a simple and intuitive API, which makes it an ideal choice for web scraping, even for beginners.

Excellent community support: This web scraping framework has an active community of users. Therefore, beginners with challenges can reach out to experts and receive assistance.

Comprehensive documentation: Another significant feature of this web scraping framework is that it offers thorough documentation. Therefore, developers can consult the documentation when they need to adjust their scraping code.

Versatility: The BeautifulSoup web scraping framework offers versatile features that allow developers to customize the codes to optimize web data collection.

Cons of BeautifulSoup

Highly dependent: BeautifulSoup is not a parser itself. It works on top of a parser such as Python's built-in html.parser, lxml, or html5lib, and it cannot download web pages on its own. Therefore, you may need to install additional dependencies, which may add complexity to the process of web scraping.

Limited scope: The primary focus of this web scraping framework is parsing XML and HTML. As a result, its capabilities for more complex scraping tasks may be limited. In addition, BeautifulSoup offers no protection against anti-scraping techniques, so retrieving large volumes of data with it may still cause your IP address to be blocked.

MechanicalSoup

MechanicalSoup is a web scraping framework built on two powerful libraries: BeautifulSoup and Requests. Therefore, MechanicalSoup offers similar functionality to its two parent packages. This web scraping framework can automate website interaction, including submitting forms, following redirects, following links, and automatically sending cookies.

Since MechanicalSoup is built on BeautifulSoup, it allows you to navigate the tags of a web page. Additionally, it leverages BeautifulSoup's methods, find_all() and find(), to retrieve data from an HTML document.

This web scraping framework provides a class called StatefulBrowser, which extends the basic Browser class and provides convenient options for interacting with HTML data as well as storing the state of the browser.

Pros of MechanicalSoup

Supports CSS selectors: Through BeautifulSoup, this web scraping framework supports CSS selectors. Therefore, it is well suited to locating and interacting with elements on a website. Note that BeautifulSoup does not support XPath expressions natively.

An excellent option for simple web crawling: MechanicalSoup is an ideal choice when you need a simple web crawling script that does not require JavaScript, for tasks such as logging into a website and checking boxes.

Speed: This web scraping framework offers excellent speed. It is also efficient in parsing simple web pages.

Cons of MechanicalSoup

Limited to static HTML: MechanicalSoup does not support JavaScript. Therefore, you cannot use this web scraping framework to access and extract data from JavaScript-based websites. If the content you need is not present in the HTML that the server returns, MechanicalSoup may not be the best option.

Incompatibility with JavaScript elements: Since the MechanicalSoup web scraping framework is limited to static HTML, it cannot interact with JavaScript-driven elements, such as buttons, menus, or slideshows on the page.

Selenium

Selenium is a free and open-source browser automation framework that is widely used for web scraping. In simpler terms, it enables you to instruct a browser to perform specific tasks like form submission, alert handling, automatic login, and social media data scraping. Selenium is an excellent tool for rendering JavaScript web pages, which differentiates it from other web scraping packages.

The Selenium web scraping framework is compatible with various browsers like Chrome, Firefox, and more. It provides bindings for Python, which you can use to create test cases. Moreover, you can use Selenium for web data retrieval due to its headless browser abilities. A headless browser is a web browser that functions without a graphical user interface.

One of the primary features of Selenium is that it drives a real browser, which gives you access to the browser's JavaScript engine. JavaScript execution is a critical aspect of web data retrieval from dynamic pages, and this web scraping framework supports JavaScript rendering for that purpose. As a result, Selenium gives you total control over the page and the browser.

Furthermore, loading images is a time-consuming part of rendering a page and is often unnecessary for web scraping. The Selenium web scraping framework allows you to configure the browser to skip image loading, which speeds up data retrieval.

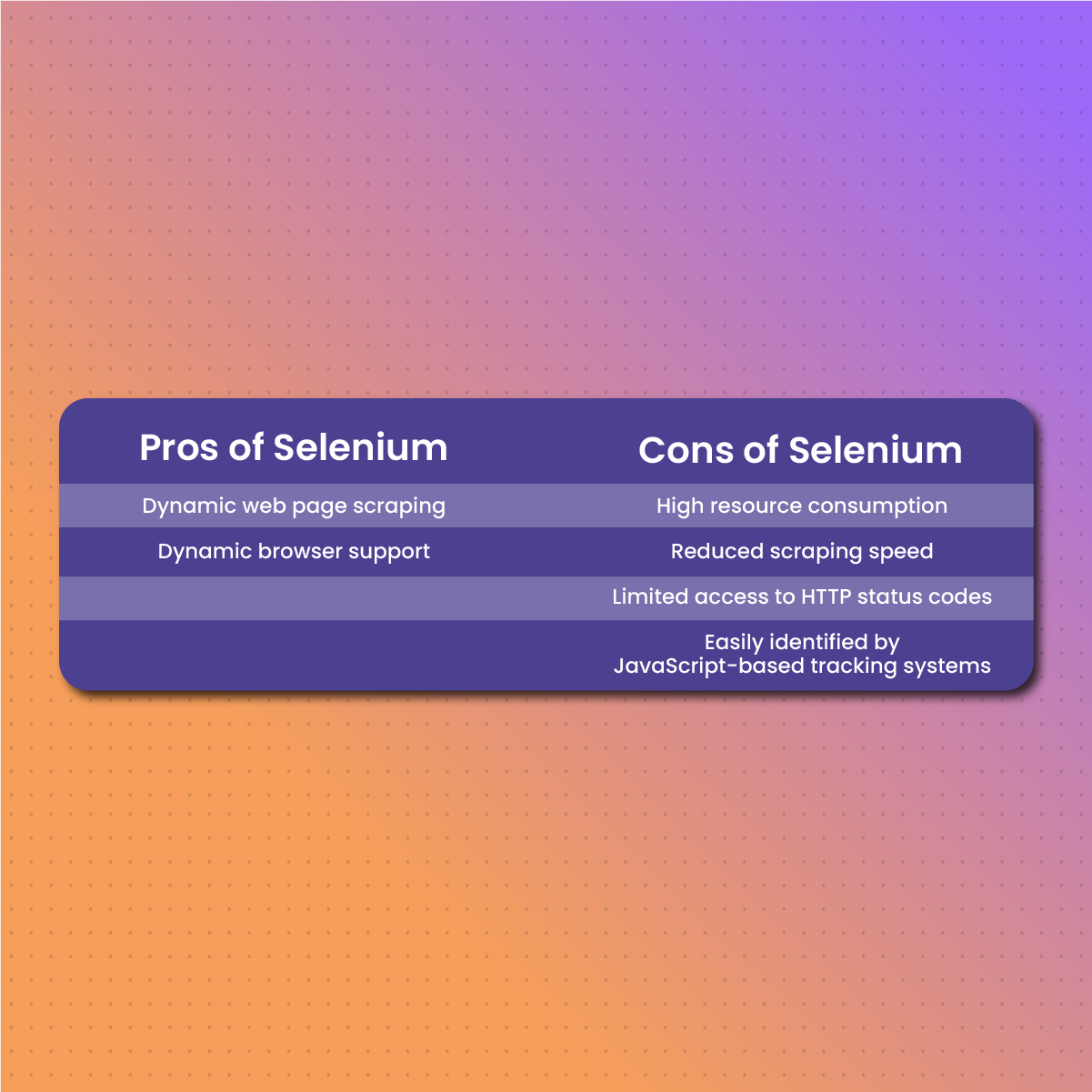

Pros of Selenium

Dynamic web page scraping: One of the pros of using this web scraping framework is that it is an excellent option for retrieving data from dynamic web content. The package allows you to interact with the page in a manner that imitates a human user. Therefore, with Selenium WebDriver, you can retrieve data from interactive web pages.

Broad browser support: Selenium WebDriver supports various browsers, such as Google Chrome, Firefox, Opera, and Internet Explorer, as well as browsers on Android and iOS. This flexibility allows you to select the best browser for your activities.

Cons of Selenium

Requires lots of resources: When using Selenium, the entire web browser is loaded into the system memory. Since the Selenium web scraping framework has a human-like interactive approach, it often consumes time and system resources.

Reduced speed: Although Selenium’s ability to imitate human-user interactions is valuable, it often leads to reduced scraping speeds. Therefore, using this web scraping framework may cause a significant reduction in efficiency in data retrieval, especially for large datasets.

Limited access to status codes: Since this web scraping framework focuses on automating interactions with dynamic websites, it may not provide complete access to HTTP status codes. As a result, it may not be an efficient option to handle errors and quality control during web data retrieval.

Easily identified by JavaScript-based tracking systems: JavaScript-based tracking systems like Google Analytics can easily identify that Selenium WebDriver is being used to collect data. Therefore, your IP address may be flagged or banned from accessing that website.

Optimizing the Use of Web Scraping Frameworks with NetNut Proxies

One tip for optimizing web scraping is using proxies. While there are several free proxies available, you don't want to sacrifice functionality for cost. Therefore, it becomes critical to choose an industry-leading proxy server provider like NetNut.

NetNut has an extensive network of over 52 million rotating residential proxies in 195 countries and over 250,000 mobile IPs in over 100 countries, which helps it provide exceptional data collection services.

NetNut offers various proxy solutions designed to overcome the challenges of web scraping. In addition, the proxies promote privacy and security while extracting data from the web.

NetNut rotating residential proxies are an automated proxy solution that ensures you can access websites despite geographic restrictions. Therefore, you get access to real-time data from all over the world to support decision-making.

Alternatively, you can use our in-house solution, NetNut Scraper API, to access websites and collect data. Moreover, if you need customized web scraping solutions, you can use NetNut's Mobile Proxy.
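
For readers who have not configured a proxy before, the sketch below shows the general pattern with Python's standard library. The host proxy.example.com, the port, and the credentials are placeholders, not real NetNut endpoints; the actual values come from your proxy provider.

```python
import urllib.request
from urllib.parse import quote


def proxy_url(host, port, username=None, password=None):
    """Build a proxy URL, percent-encoding the credentials if they are given."""
    credentials = ""
    if username is not None and password is not None:
        credentials = f"{quote(username, safe='')}:{quote(password, safe='')}@"
    return f"http://{credentials}{host}:{port}"


def build_proxy_opener(url):
    """Return an opener that routes HTTP and HTTPS requests through the proxy."""
    handler = urllib.request.ProxyHandler({"http": url, "https": url})
    return urllib.request.build_opener(handler)
```

Requests sent through the opener returned by build_proxy_opener reach the target website from the proxy's IP address instead of your own.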

Conclusion

This guide has examined the concept of a web scraping framework and how it works. Web scraping is the process of collecting data from websites, and web scraping frameworks are collections of tools and features that optimize this process.

We also examined the Requests, BeautifulSoup, MechanicalSoup, Scrapy, and Selenium packages. Before you dive into using any of these libraries for web scraping, ensure you understand their features, advantages, and limitations.

There are several challenges associated with web scraping, and proxies can help you overcome many of them. NetNut proxies offer a unique, customizable, and flexible solution to these challenges. In addition, we provide transparent and competitive pricing for all our services.

Feel free to contact us if you have any further questions!

Frequently Asked Questions

What are some factors to consider when choosing a web scraping framework?

- Choose a framework that has a strong community for support

- Select a package that can parse DOM (Document Object Model) effectively

- The web scraping framework should support headless browsing

- Consider the speed of execution

- Select a package that makes data handling and storage convenient

What are the challenges associated with web scraping?

Although web scraping frameworks are designed to optimize web data retrieval, you may encounter some challenges. These include:

- IP blocks: These can happen when you send too many requests to a website within a short period.

- CAPTCHA challenge: This is an anti-scraping measure that attempts to differentiate bots from human users.

- Browser fingerprinting: This technique involves collecting and analyzing your web browser details to produce a unique identifier. Although fingerprinting is often used to optimize website security and provide a personalized experience, it can also identify web scrapers.

- Dynamic content: You may encounter some challenges if your web scraping framework is not built to handle dynamic websites.

- Rate limiting: This happens when a website limits the number of requests you can send within a period. Therefore, it slows down the process of extracting data, which can be quite frustrating.
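
One common way to cope with rate limiting is to retry with increasing pauses. The sketch below assumes a fetch function that raises a hypothetical RateLimited exception when the website answers with HTTP 429 (Too Many Requests); both names are made up for the example.

```python
import time


class RateLimited(Exception):
    """Raised by a fetch function when the website answers with HTTP 429."""


def fetch_with_backoff(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), doubling the pause after every rate-limited attempt."""
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # give up after the final attempt
            sleep(base_delay * 2 ** attempt)  # pauses of 1s, 2s, 4s, ...
```

The sleep parameter is injectable so the function can be tried out without real waiting; in normal use the default time.sleep applies.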

What are some practices that can optimize web scraping?

Web scraping frameworks are designed to streamline the process of data retrieval. However, here are some practices that can significantly reduce the chance of experiencing challenges:

- Read the website's robots.txt file

- Read the website terms and conditions

- Understand the data protection laws in your jurisdiction

- Avoid aggressive web scraping

- Always use a reliable proxy server
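
The first practice can be automated. As a minimal sketch, Python's standard library includes a robots.txt parser; the sample rules and the example-scraper user agent below are invented for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def load_rules(robots_txt):
    """Parse robots.txt text and return a parser that can answer access questions."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def is_allowed(rules, url, user_agent="example-scraper"):
    """Return True if the rules permit this user agent to fetch the URL."""
    return rules.can_fetch(user_agent, url)
```

To check a live website instead of a string, call set_url() with the address of its robots.txt file followed by read() on a RobotFileParser, and respect the value returned by crawl_delay() between requests.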